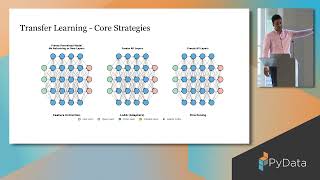

Transfer learning has revolutionised machine learning by enabling models trained on large datasets to generalise effectively to tasks with limited data. This talk explores strategies for adapting pretrained models to new domains, focusing on audio processing as a case study. Using YAMNet, Whisper, and wav2vec2 for laughter detection, we demonstrate how to extract meaningful representations, fine-tune models efficiently, and handle severe class imbalances. The session covers feature extraction, model fusion techniques, and best practices for optimising performance in data-scarce environments. Attendees will gain practical insights into applying transfer learning across various modalities beyond audio, maximising model effectiveness when labelled data is scarce.