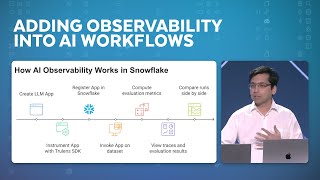

To build trust in generative AI applications, teams need a robust observability framework. In this session, we will demonstrate how to instrument an AI application with TruLens, an open-source observability framework, to trace each component of the application and to use LLM-as-a-Judge evaluations to identify and fix points of failure. Attendees will learn best practices for enabling observability in their AI workflows, along with the feedback functions most commonly used for evaluation. We will also explore how to use the observability framework to compare application versions and determine which one is best suited for production.