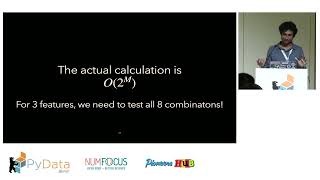

As machine learning models become more accurate and more complex, explainability remains essential. It supports trust and transparency, and it also helps generate actionable insights and guide decision-making. One way to interpret model outputs is SHapley Additive exPlanations (SHAP). In this talk, I will introduce Shapley values and the mathematical intuition behind them, then walk through a few real-world examples for different ML models. Attendees will gain a practical understanding of SHAP's strengths and limitations and of how to use it effectively to explain model predictions in their own projects.