How to Keep Your LLM Chatbots Real: A Metrics Survival Guide

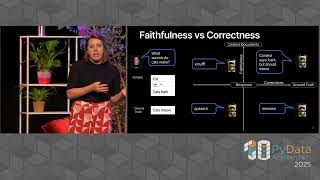

In this brave new world of vibe coding and YOLO-to-prod mentality, let’s take a step back and keep things grounded (pun intended). None of us would ever deploy a classical ML model to production without clearly defined metrics and proper evaluation, so let’s talk about methodologies for measuring the performance of LLM-powered chatbots: think retriever recall, answer relevancy, correctness, faithfulness and hallucination rates. With the wild west of metric standards still in full swing, I’ll guide you through the challenges of curating a synthetic test set, selecting suitable metrics, and choosing open-source packages that help evaluate your use case. The options range from simple LLM-as-a-judge approaches, like those built into packages such as MLflow, to complex multi-step quantification approaches with Ragas. If you work in the GenAI space or with LLM-powered chatbots, this session is for you! Prior or background knowledge is an advantage, but not required.
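To make two of these metrics a little more concrete, here is a minimal, framework-free sketch of retriever recall@k and a hallucination rate over a tiny synthetic test set. It is not how Ragas or MLflow compute their metrics. The `TestCase` structure, the chunk IDs, and the assumption that per-answer faithfulness verdicts come from a separate LLM-as-a-judge step are all hypothetical and only serve as an illustration.

```python
from dataclasses import dataclass


@dataclass
class TestCase:
    """One hypothetical entry of a synthetic test set."""
    question: str
    relevant_ids: set[str]    # IDs of chunks that contain the answer (ground truth)
    retrieved_ids: list[str]  # IDs returned by the retriever, best first


def recall_at_k(case: TestCase, k: int) -> float:
    """Fraction of ground-truth chunks found among the top-k retrieved chunks."""
    if not case.relevant_ids:
        return 0.0
    top_k = set(case.retrieved_ids[:k])
    return len(case.relevant_ids & top_k) / len(case.relevant_ids)


def mean_recall_at_k(cases: list[TestCase], k: int) -> float:
    """Average recall@k over the whole test set."""
    return sum(recall_at_k(c, k) for c in cases) / len(cases)


def hallucination_rate(judge_verdicts: list[bool]) -> float:
    """Share of answers flagged as NOT supported by the retrieved context.

    judge_verdicts[i] is True if answer i was judged faithful. These verdicts
    are assumed to come from an LLM-as-a-judge step that is not shown here.
    """
    return sum(not v for v in judge_verdicts) / len(judge_verdicts)


# Hypothetical synthetic test set with made-up chunk IDs.
cases = [
    TestCase("What is the refund window?", {"doc1#3"}, ["doc1#3", "doc2#1", "doc4#0"]),
    TestCase("Who approves expenses?", {"doc5#2", "doc5#3"}, ["doc7#1", "doc5#3", "doc5#9"]),
]
print(mean_recall_at_k(cases, k=3))                  # → 0.75
print(hallucination_rate([True, False, True, True]))  # → 0.25
```

The retrieval metric needs only labeled chunk IDs, while the hallucination rate depends entirely on how good the judge is; that asymmetry is one reason why careful test-set curation matters as much as the choice of metric.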