In the era of AI agents, data products, and exploding complexity, enterprises face an unprecedented challenge: how to give machines and people the context needed to make decisions that are accurate, explainable, and trustworthy.

What if data teams could solve this?

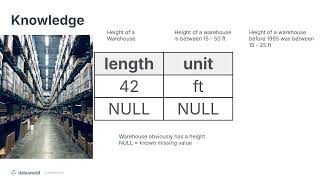

We'll explore a new opportunity: data teams as the architects and stewards of the “Enterprise Brain”, a unified knowledge layer that connects business concepts, data, and processes across the organization. By treating context as a first-class citizen, organizations can bridge structured and unstructured worlds, govern data and AI agents effectively, and unlock faster, more confident decision-making.

We'll unpack:

• Why context and knowledge are becoming the missing pieces of AI readiness.

• How structured and unstructured data are converging, and why this demands a new governance model.

• Why ontologies, knowledge graphs, and active governance are critical to managing the coming explosion of AI agents.

• The unique chance data teams have right now to lead, moving from building pipelines to building the Enterprise Brain.

We won’t talk tools. We’ll talk possibility. By the end, you’ll see why the most successful organizations of the next decade will be the ones that make their data teams the creators and owners of knowledge itself.