Keynote: Zaheera Valani - Driving Data Democratization with the Databricks Data Intelligence Platform

Join us for our Keynote with Zaheera Valani

talk-data.com

The proliferation of AI/ML workloads across commercial enterprises necessitates robust mechanisms to track, inspect, and analyze their use of on-prem and cloud infrastructure. Effective insights are crucial for optimizing cloud resource allocation as workload demand grows, while containing infrastructure costs and promoting operational stability.

This talk will outline an approach to systematically monitor, inspect, and analyze properties of AI/ML workloads such as runtime, resource demand and utilization, and cost attribution tags. By implementing granular inspection across multi-player teams and projects, organizations can gain actionable insights into resource bottlenecks, identify opportunities for cost savings, and enable AI/ML platform engineers to directly attribute infrastructure costs to specific workloads.

Cost attribution of infrastructure usage by AI/ML workloads focuses on key metrics such as compute node group information, CPU usage seconds, data transfer, GPU allocation, and memory and ephemeral storage utilization. It enables platform administrators to identify competing workloads that lead to diminishing ROI. Answering questions from data scientists like "Why did my workload run for 6 hours today, when it took only 2 hours yesterday?" or "Why did my workload start 3 hours behind schedule?" also becomes easier.
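As a rough illustration of tag-based cost attribution, the sketch below sums a per-run cost from a few of the metrics named above and groups it by a team tag. The record fields, the unit prices, and the sample workloads are all hypothetical and chosen only for this example; it is not the framework described in the talk.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical unit prices, for illustration only; real rates depend on the provider.
CPU_SECOND_RATE = 0.00001   # cost per CPU-second
GPU_HOUR_RATE = 2.50        # cost per allocated GPU-hour
GB_TRANSFER_RATE = 0.09     # cost per GB transferred


@dataclass
class UsageRecord:
    """One workload run with a few of the metrics used for attribution."""
    workload: str
    team: str            # cost attribution tag (assumed to be a team name)
    cpu_seconds: float
    gpu_hours: float
    transfer_gb: float


def run_cost(record: UsageRecord) -> float:
    """Cost of a single run under the hypothetical rates above."""
    return (record.cpu_seconds * CPU_SECOND_RATE
            + record.gpu_hours * GPU_HOUR_RATE
            + record.transfer_gb * GB_TRANSFER_RATE)


def cost_by_tag(records):
    """Sum run costs per attribution tag."""
    totals = defaultdict(float)
    for record in records:
        totals[record.team] += run_cost(record)
    return dict(totals)


# Made-up sample data.
records = [
    UsageRecord("train-ranker", "search", cpu_seconds=100_000, gpu_hours=4, transfer_gb=10),
    UsageRecord("embed-docs", "search", cpu_seconds=50_000, gpu_hours=0, transfer_gb=100),
    UsageRecord("forecast", "finance", cpu_seconds=200_000, gpu_hours=0, transfer_gb=0),
]
totals = cost_by_tag(records)
for team, cost in sorted(totals.items()):
    print(f"{team}: {cost:.2f}")
```

Grouping by a different tag (project, node group) would only change the key used in `cost_by_tag`.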

Through our work on Metaflow, we will showcase how we built a comprehensive framework for transparent usage reporting, cost attribution, performance optimization, and strategic planning for future AI/ML initiatives. Metaflow is a human-centric Python library that enables seamless scaling and management of AI/ML projects.

Ultimately, a well-defined usage tracking system empowers organizations to maximize the return on investment from their AI/ML endeavors while maintaining budgetary control and operational efficiency. Platform engineers and administrators will gain insights into the following operational aspects of supporting a battle-hardened ML platform:

1. Optimize resource allocation: Understand consumption patterns to right-size clusters and allocate resources more efficiently, reducing idle time and preventing bottlenecks.

2. Proactively manage capacity: Forecast future resource needs based on historical usage trends, ensuring the infrastructure can scale effectively with increasing workload demand.

3. Facilitate strategic planning: Make informed decisions regarding future infrastructure investments and scaling strategies.

4. Diagnose workload execution delays: Identify resource contention, queuing issues, or insufficient capacity leading to delayed workload starts.
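Diagnosing delayed starts can begin with something as simple as comparing scheduled and actual start times. The following is a minimal sketch under assumed inputs: the `Run` record, the 30-minute threshold, and the sample timestamps are hypothetical and not taken from the talk.

```python
from datetime import datetime, timedelta
from typing import NamedTuple


class Run(NamedTuple):
    """Hypothetical record of one workload execution."""
    workload: str
    scheduled: datetime
    started: datetime
    finished: datetime


def queue_delay(run: Run) -> timedelta:
    """Time the run spent waiting between its schedule and its actual start."""
    return run.started - run.scheduled


def runtime(run: Run) -> timedelta:
    """Wall-clock duration of the run once it started."""
    return run.finished - run.started


def late_starts(runs, threshold=timedelta(minutes=30)):
    """Names of workloads whose queue delay exceeds the threshold."""
    return [r.workload for r in runs if queue_delay(r) > threshold]


# Made-up sample data on an arbitrary date.
day = datetime(2025, 1, 1)
runs = [
    Run("nightly-train", day.replace(hour=1), day.replace(hour=4), day.replace(hour=10)),
    Run("feature-build", day.replace(hour=2), day.replace(hour=2, minute=5), day.replace(hour=3)),
]
late = late_starts(runs)
print(late)
```

Flagged runs are only a starting point; explaining the delay would still require correlating them with cluster capacity and contention data.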

Data scientists, on the other hand, will gain clarity on the factors that influence workload performance. Tuning these factors can improve runtime and the associated cost profile.

As generative AI systems become more powerful and widely deployed, ensuring safety and security is critical. This talk introduces AI red teaming—systematically probing AI systems to uncover potential risks—and demonstrates how to get started using PyRIT (Python Risk Identification Toolkit), an open-source framework for automated and semi-automated red teaming of generative AI systems. Attendees will leave with a practical understanding of how to identify and mitigate risks in AI applications, and how PyRIT can help along the way.

For the first time in computing history, application design is following a probabilistic rather than a deterministic paradigm. Large language models have generated huge excitement among investors, management, and the engineers using them in product development. However, according to both peer-reviewed studies and anecdotal observations, it has proven difficult to translate this optimism into business value.

Why can we solve some equations with neat formulas, while others stubbornly resist every trick we know? Equations with squares bow to the quadratic formula. Those with cubes and fourth powers also have solutions. But then the magic stops. And when we, as data scientists, add exponentials, logarithms, or trigonometric terms into models, the resulting equations often cross into territory where no closed-form solutions exist.

This talk is both fun and useful. With Python and SymPy, we’ll “cheat” our way through centuries of mathematics, testing families of equations to see when closed forms appear and when numerical methods are our only option. Attendees will enjoy surprising examples, a bit of mathematical history, and practical insight into when exact solutions exist — and when to stop searching and switch to numerical methods.
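A small SymPy sketch of that contrast, assuming two example equations picked for illustration (they are not taken from the talk): a quadratic that `solve` handles exactly, and `x = cos(x)`, for which the code falls back to the numerical solver `nsolve` if no closed form is returned.

```python
import sympy as sp

x = sp.symbols("x")

# Degree 2: a closed form exists, and solve() returns exact roots.
quadratic_roots = sp.solve(x**2 - 5*x + 6, x)

# Mixing a polynomial term with a trigonometric one: solve() may raise
# NotImplementedError because it has no algorithm for this equation.
equation = x - sp.cos(x)
try:
    closed_form = sp.solve(equation, x)
except NotImplementedError:
    closed_form = None

# Numerical fallback; 1.0 is an initial guess chosen for this example.
numeric_root = sp.nsolve(equation, x, 1.0)

print("quadratic roots:", quadratic_roots)
print("closed form for x = cos(x):", closed_form)
print("numerical root:", numeric_root)
```

The `try`/`except` keeps the script running whether or not a given SymPy version finds a closed form, which is the practical pattern for testing many equation families in a loop.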

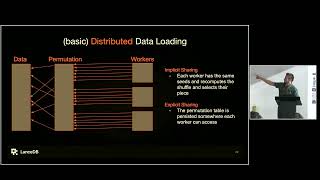

Data scientists need data to train their models. The process of feeding the training algorithm with data is loosely described as "data loading." This talk looks at the data loading process from a data engineer's perspective. We will describe common techniques such as splits, shuffling, clumping, epochs, and distribution. We will show how the way data is loaded can have impacts on training speed and model quality. Finally, we examine what constraints these workloads put on data systems and discuss best practices for preparing a database to serve as a source for data loading.
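To make a few of these terms concrete, here is a minimal pure-Python sketch of a train/validation split, per-epoch shuffling, and batching. The function names, seed handling, and toy data are assumptions for illustration; clumping and distribution across workers are not covered.

```python
import random


def train_val_split(rows, val_fraction=0.2, seed=0):
    """Shuffle once with a fixed seed, then cut into train and validation sets."""
    rng = random.Random(seed)
    shuffled = rows[:]
    rng.shuffle(shuffled)
    n_val = int(len(shuffled) * val_fraction)
    return shuffled[n_val:], shuffled[:n_val]


def epoch_batches(train_rows, batch_size, epoch, seed=0):
    """Yield batches in a different but reproducible order for each epoch."""
    rng = random.Random(seed + epoch)
    order = train_rows[:]
    rng.shuffle(order)
    for i in range(0, len(order), batch_size):
        yield order[i:i + batch_size]


rows = list(range(10))  # stand-in for row identifiers in a table
train, val = train_val_split(rows)
epochs = [list(epoch_batches(train, batch_size=3, epoch=e)) for e in range(2)]
for e, batches in enumerate(epochs):
    print(f"epoch {e}: {batches}")
```

From the data system's point of view, each epoch is a full pass over the training rows in a new random order, which is a very different access pattern from a sequential scan.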

Agents need timely and relevant context data to work effectively in an interactive environment. If an agent takes more than a few seconds to react to an action in a client application, users will not perceive it as intelligent, just laggy.

Real-time context engineering involves building real-time data pipelines to pre-process application data and serve relevant and timely context to agents. This talk will focus on how you can leverage application identifiers (user ID, session ID, article ID, order ID, etc.) to identify which real-time context data to provide to agents. We will contrast this approach with the more traditional RAG approach of using vector indexes to retrieve chunks of relevant text based on the user query. Our approach will necessitate the introduction of the Agent-to-Agent protocol, an emerging standard for defining APIs for agents.
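The identifier-based lookup can be sketched with a plain in-memory store keyed by application identifiers. This is a hypothetical stand-in, not the Hopsworks API or the Agent-to-Agent protocol; the class, the freshness window, and the sample IDs are assumptions made for the example.

```python
import time


class ContextStore:
    """In-memory stand-in for a low-latency, key-addressed context store."""

    def __init__(self):
        self._rows = {}

    def write(self, entity, key, features):
        """Called by the (assumed) real-time pipeline when new data arrives."""
        self._rows[(entity, key)] = {**features, "updated_at": time.time()}

    def read(self, entity, key, max_age_s=60.0):
        """Return the row for this identifier, or None if missing or stale."""
        row = self._rows.get((entity, key))
        if row is None or time.time() - row["updated_at"] > max_age_s:
            return None
        return row


def build_context(store, ids):
    """Collect fresh context for every identifier the application passes along."""
    context = {}
    for entity, key in ids.items():
        row = store.read(entity, key)
        if row is not None:
            context[entity] = {k: v for k, v in row.items() if k != "updated_at"}
    return context


store = ContextStore()
store.write("user_id", "u42", {"recent_clicks": 7})
store.write("session_id", "s1", {"last_video": "v9"})

# IDs come from the application request, not from the text of the user query.
context = build_context(store, {"user_id": "u42", "session_id": "s1", "order_id": "o0"})
print(context)
```

Unlike vector retrieval, the lookup is an exact key read, so its latency does not depend on the wording of the user query.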

We will also demonstrate how we provide real-time context data from applications inside Python agents using the Hopsworks feature store. We will walk through an example of an interactive application (TikTok clone).

In this talk I'll discuss 3 goals I have when I try to communicate complicated topics. I'll then illustrate how I used these goals to guide the development of my most popular video, PCA Step-by-Step, which has over 3.4 million views.