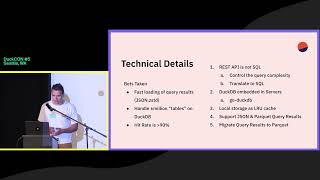

Speaker: Miguel Filipe (Dune Analytics) Slides: https://blobs.duckdb.org/events/duckcon5/miguel-filipe-delighting-users-with-restful-apis-and-duckdb.pdf

Topic: Application Programming Interface (API), 856 tagged

Discover how Apigee and Advanced API Security can help you stay protected from OWASP Top 10 API Security risks, and how to adopt a layered API security approach using Apigee and Cloud Armor together. You’ll also see how Apigee can send API security incident information to Google SecOps for centralized triage and correlation, and serve as an enforcement point for Model Armor to sanitize prompts and responses for LLMs fronted by APIs.

DevEx 2.0 means giving developers guardrails that accelerate delivery instead of slowing it. This session shows how continuous security integrates with Azure and GitHub pipelines. You’ll see IDE coaching and SAST at commit. With Microsoft Copilot and Azure OpenAI using GPT-5, developers can cut through false positives and receive actionable fixes directly in the code. Builds include SBOM generation, container scans, and dependency checks. Staging environments add DAST and API testing.

There are, by now, several well-established C++ JSON libraries. C++ developers can choose between DOM parsers, SAX parsers, and pull parsers. DOM parsers are by design slow and use a lot of memory, and SAX parsers are clumsy to use, so pull parsers are the way to go. Our open-source JSON parser fills this gap between the existing parser libraries. It is a fully validating, fast pull parser with O(1) memory usage. The key innovation lies in our API design. Unlike other parsers that solely validate JSON, ours enforces semantic constraints, requiring developers to define specific structures. This results in automatic error handling and simpler code. You can also parse directly into your own data structures without any extra copies. This talk showcases our JSON parser API in action, comparing it with established counterparts. Additionally, we demonstrate elegant generic-programming techniques in C++, making the talk accessible to both beginners and intermediate developers.
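The pull-parsing idea in this abstract can be illustrated with a minimal Python sketch. It is not the talk's C++ library or its API: `pull_tokens`, `expect`, and `parse_point` are hypothetical names, the tokenizer handles only punctuation, unescaped strings, and plain numbers, and the input string itself is held in memory (only the token stream is lazy). The consumer pulls one token at a time and rejects any document that does not have exactly the shape `{"x": <number>, "y": <number>}`, which is one way to read "enforcing structure while parsing".

```python
import re
from typing import Dict, Iterator, Optional, Tuple

# Simplified token grammar (assumption): no string escapes, no exponents,
# no true/false/null. Enough to show the pull style, not full JSON.
_TOKEN = re.compile(
    r'\s*(?:(?P<punct>[{}\[\]:,])'
    r'|"(?P<string>[^"\\]*)"'
    r'|(?P<number>-?\d+(?:\.\d+)?))'
)


def pull_tokens(text: str) -> Iterator[Tuple[str, str]]:
    """Lazily yield (kind, value) tokens; nothing is built up front."""
    pos = 0
    while pos < len(text):
        match = _TOKEN.match(text, pos)
        if match is None:
            if text[pos:].strip() == "":
                return  # only trailing whitespace is left
            raise ValueError(f"unexpected input at offset {pos}")
        pos = match.end()
        kind = match.lastgroup
        yield kind, match.group(kind)


def expect(stream: Iterator[Tuple[str, str]], kind: str,
           value: Optional[str] = None) -> str:
    """Pull the next token and fail if it is not the one the caller requires."""
    try:
        got_kind, got_value = next(stream)
    except StopIteration:
        raise ValueError(f"expected {kind}, got end of input") from None
    if got_kind != kind or (value is not None and got_value != value):
        raise ValueError(f"expected {kind} {value!r}, got {got_kind} {got_value!r}")
    return got_value


def parse_point(text: str) -> Dict[str, float]:
    """Parse exactly {"x": <number>, "y": <number>} into a dict of floats."""
    stream = pull_tokens(text)
    expect(stream, "punct", "{")
    expect(stream, "string", "x")
    expect(stream, "punct", ":")
    x = float(expect(stream, "number"))
    expect(stream, "punct", ",")
    expect(stream, "string", "y")
    expect(stream, "punct", ":")
    y = float(expect(stream, "number"))
    expect(stream, "punct", "}")
    if next(stream, None) is not None:
        raise ValueError("unexpected trailing tokens")
    return {"x": x, "y": y}


point = parse_point('{"x": 1.5, "y": -2}')
```

Because the expected structure is stated by the consumer, a malformed or differently shaped document fails at the first unexpected token, with no separate validation pass over a DOM.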

AI is reshaping NetOps from scripted automation to intelligent, data-driven workflows. We will show use cases (incident triage, knowledge retrieval, traffic analysis, and prediction) and contrast legacy monitoring with ML, NLP, and LLMs. See how RAG, text-to-SQL, and agent workflows enable real-time insights across hybrid data. We will outline data pipelines and MLOps, address accuracy, reliability, cost, and compliance, and weigh build vs. buy. We will also cover API integration and human-in-the-loop guardrails.

Access data insights through natural conversation. Build custom AI analytics apps with APIs.

This hands-on lab introduces Gemini 2.0 Flash, the powerful new multimodal AI model from Google DeepMind, available through the Gemini API in Vertex AI. You'll explore its significantly improved speed, performance, and quality while learning to leverage its capabilities for tasks like text and code generation, multimodal data processing, and function calling. The lab also covers advanced features such as asynchronous methods, system instructions, controlled generation, safety settings, grounding with Google Search, and token counting.

If you register for a Learning Center lab, please ensure that you sign up for a Google Cloud Skills Boost account for both your work domain and personal email address. You will need to authenticate your account as well (be sure to check your spam folder!). This will ensure you can arrive and access your labs quickly onsite. You can follow this link to sign up!

Unlock the power of code execution with Gemini 2.0 Flash! This hands-on lab demonstrates how to generate and run Python code directly within the Gemini API. Learn to use this capability for tasks like solving equations, processing text, and building code-driven applications.

Kayak through Seattle’s lakes with AI! Discover how Gemini can take you where you want to go, through rapid prototyping with its function calling, streaming, and multimodal APIs. Learn how you can build your own immersive AI experiences faster than ever.

Build a multimodal search engine with Gemini and Vertex AI. This hands-on lab demonstrates Retrieval Augmented Generation (RAG) to query documents containing text and images. Learn to extract metadata, generate embeddings, and search using text or image queries.

In this hands-on lab, discover how to govern AI Apps & Agents using AI Gateway in Azure API Management. Learn to apply governance best practices by onboarding AI models, monitoring and controlling token usage, enforcing safety and compliance, and boosting performance with semantic caching. You’ll also govern MCP-based agent architectures by creating secure, efficient servers from APIs or connecting to backend MCP servers, equipping you to deliver responsible, resilient AI solutions at scale.

Build a smarter image classification app with Windows ML, now generally available. This hands-on lab walks through dynamically downloading execution providers (EPs) for NPUs, compiling models for hardware-specific EPs, and running inference locally. Learn to deploy, debug, and optimize your app using WinML APIs. Ideal for developers exploring AI acceleration on Windows devices; no pre-distributed EPs required.

Looking to add on-device AI to your apps? Not sure how to get started? Join our lab to learn how to integrate local AI capabilities into your Windows apps using Windows AI APIs. Discover how to implement Semantic Search and Retrieval-Augmented Generation (RAG) to power intelligent information retrieval, and use Phi Silica for on-device text processing. This lab will walk you through key APIs and best practices for building on-device AI solutions for Copilot+ PCs. Developers of all levels welcome.

Experience Paddle Bounce, a classic game brought to life with the power of Gemini 2.0’s Multimodal Live API in action! Discover the potential of AI for understanding and responding in real time.

Create a story with Pixel Narrator! Provide an image and a text prompt, then watch the Multimodal Live API translate visual details into a compelling text narrative in real time.

In this mini course you will learn about different prompt design and engineering techniques commonly used in LLM-powered applications such as few-shot prompting and chain of thought reasoning. You will then apply these practices in a hands-on lab environment using the PaLM and Gemini Pro APIs.
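Few-shot prompting, as mentioned above, amounts to placing a handful of worked input/output pairs ahead of the real query. A minimal sketch in Python follows; `build_few_shot_prompt`, the `Input:`/`Output:` labels, and the sample reviews are all assumptions for illustration, and the sketch only assembles the prompt text without calling any model API.

```python
from typing import List, Tuple


def build_few_shot_prompt(instruction: str,
                          examples: List[Tuple[str, str]],
                          query: str) -> str:
    """Assemble an instruction, worked examples, and the final query into one prompt."""
    parts = [instruction.strip(), ""]
    for text, label in examples:
        parts.append(f"Input: {text}")
        parts.append(f"Output: {label}")
        parts.append("")  # blank line between examples
    # The final item is left open so the model completes the output.
    parts.append(f"Input: {query}")
    parts.append("Output:")
    return "\n".join(parts)


# Hypothetical sentiment-classification examples.
prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [("The battery lasts all day.", "positive"),
     ("The screen cracked within a week.", "negative")],
    "Setup took five minutes and everything worked.",
)
print(prompt)
```

The resulting string would be sent as the text prompt to whichever model API is in use; a chain-of-thought variant would additionally include the reasoning steps in each example's output.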
