It's live: Data Hackers News!! The hottest topics of the week, with the top news in Data, AI, and Technology, which you can also find in our weekly Newsletter, now on the Data Hackers Podcast!! Hit play and listen now to this week's Data Hackers News! To keep up with everything happening in the data field, subscribe to the weekly Newsletter: https://www.datahackers.news/ Meet our Data Hackers News commentators: Monique Femme. Fill out the State of Data Brazil survey: https://www.stateofdata.com.br/ Other Data Hackers channels: Site, LinkedIn, Instagram, TikTok, YouTube.

talk-data.com

Topic: AI/ML (Artificial Intelligence/Machine Learning), 9014 tagged

In this episode of Data Topics, Ben speaks with Kim Smets, VP Data & AI at Telenet, about his journey from early machine learning work to leading enterprise-wide AI transformation at Telenet. Kim shares how he built a central data & AI team, shifted from fragmented reporting to product thinking, and embedded governance that actually works. They discuss the importance of simplicity, storytelling, and sustainable practices in making AI easy, relevant, and famous across the business. From GenAI exploration to real-world deployment, this episode is packed with practical insights on scaling AI with purpose.

In this episode of Hub & Spoken, Jason Foster, CEO and Founder of Cynozure, speaks with Shachar Meir, a data advisor who has worked with organisations from startups to the likes of Meta and PayPal. Together, they explore why so many companies, even those with skilled data teams, solid platforms and plenty of data, still struggle to deliver real business value. Shachar's take is clear: the problem isn't technology; it's people, process, and culture. Too often, data teams focus on building sophisticated platforms instead of understanding the business problems they're meant to solve. His summary: why guess when you can know? This episode is a practical conversation for anyone looking to move their organisation from data chaos to data clarity. 🎧 Listen now to discover how clarity beats complexity in data strategy. Cynozure is a leading data, analytics and AI company that helps organisations to reach their data potential. It works with clients on data and AI strategy, data management, data architecture and engineering, analytics and AI, data culture and literacy, and data leadership. The company was named one of The Sunday Times' fastest-growing private companies in both 2022 and 2023 and recognised as The Best Place to Work in Data by DataIQ in 2023 and 2024. Cynozure is a certified B Corporation.

The recent progress of AI poses several challenges for compliance and security. We will discuss ideas for ensuring that AI practices and programs are carried out securely and in a compliant manner. It is important to prevent not only data leakage but also IP leakage. We will explore what this means in the new world of AI and techniques for managing it.
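One common mitigation for leakage when prompts leave the organization is to redact obviously sensitive tokens before text is sent to an external model. The sketch below is illustrative only and rests on assumptions: the patterns (email addresses, strings shaped like API keys, and a hypothetical internal project-code format `PRJ-1234`) are placeholders, and regex redaction by itself is not a complete data-loss-prevention control.

```python
import re

# Hypothetical patterns; a real deployment would tune these to its own data.
REDACTION_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # Assumed shape of a secret key: "sk-" followed by 16+ alphanumerics.
    "API_KEY": re.compile(r"\bsk-[A-Za-z0-9]{16,}\b"),
    # Hypothetical internal project identifier, standing in for IP.
    "PROJECT_CODE": re.compile(r"\bPRJ-\d{4}\b"),
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace each match with a [LABEL] placeholder and count the replacements."""
    counts: dict[str, int] = {}
    for label, pattern in REDACTION_PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts
```

For example, `redact("Summarize PRJ-0042 notes from ana@example.com")` returns the text `"Summarize [PROJECT_CODE] notes from [EMAIL]"` together with per-label counts, which can be logged for auditing.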

Discussion on cross-sector collaboration accelerating responsible AI innovation.

Discussion on applying Responsible AI principles in real-world organizational practices.

Conversation about building trust, transparency, and impact in AI systems.

At Target, creating relevant guest experiences at scale takes more than great creative — it takes great data. In this session, we’ll explore how Target’s Data Science team is using first-party data, machine learning, and GenAI to personalize marketing across every touchpoint.

You’ll hear how we’re building intelligence into the content supply chain, turning unified customer signals into actionable insights, and using AI to optimize creative, timing, and messaging — all while navigating a privacy-first landscape. Whether it’s smarter segmentation or real-time decisioning, we’re designing for both scale and speed.

As the Chief Analytics Officer for New York City, I witnessed firsthand how data science and AI can transform public service delivery while navigating the unique challenges of government implementation. This talk will share real-world examples of successful data science initiatives in the government context, from predictive analytics for fire department risk modeling to machine learning models that improve social service targeting.

However, government data science isn't just about technical skill—it's about accountability, equity, and transparency. I'll discuss critical pitfalls including algorithmic bias, privacy concerns, and the importance of explainable AI in public decision-making.

We'll explore how traditional data science skills must be adapted for the public sector context, where stakeholders include not just internal teams but taxpayers, elected officials, and community advocates.

Whether you're a data scientist considering public service or a government professional seeking to leverage analytics, this session will provide practical insights into building data capacity that serves the public interest while maintaining democratic values and citizen trust.

As organizations and individual workers increasingly adopt generative AI (GenAI) to improve productivity, there is limited understanding of how different modes of human-AI interactions affect worker experience.

In this study, we examine the ordering effect of human-AI collaboration on worker experience through a series of pre-registered laboratory and online experiments involving common professional writing tasks. We study three collaboration orders: AI-first, in which humans prompt AI to draft the work and then improve it; human-first, in which humans draft the work and ask AI to improve it; and no-AI. Our results reveal an important trade-off between worker productivity and worker experience: while workers completed the writing draft more quickly in the AI-first condition than in the human-first condition, they reported significantly lower autonomy and efficacy. This negative ordering effect primarily affected female workers, not male workers.

Furthermore, being randomly assigned to a collaboration mode increased workers’ likelihood of choosing the same mode for similar tasks in the future, especially for the human-first collaboration mode. In addition, writing products generated with the use of GenAI were longer, more complex, and required higher grade levels to comprehend. Together, our findings highlight the potential hidden risks of integrating GenAI into workflow and the imperative of designing human-AI collaborations to balance work productivity with human experiences.
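The abstract does not name the readability measure behind "higher grade levels to comprehend." For orientation only, one widely used grade-level formula is the Flesch-Kincaid Grade Level, which rises with longer sentences and with more syllables per word:

```latex
\mathrm{FKGL} = 0.39\,\frac{\text{total words}}{\text{total sentences}}
              + 11.8\,\frac{\text{total syllables}}{\text{total words}}
              - 15.59
```

Under a measure of this kind, denser sentences and more polysyllabic vocabulary translate directly into a higher required grade level.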

As AI continues to shape human-computer interaction, there’s a growing opportunity and responsibility to ensure these technologies serve everyone, including people with communication disabilities. In this talk, I will present my ongoing work in developing a real-time American Sign Language (ASL) recognition system, and explore how integrating accessible design principles into AI research can expand both usability and impact.

The core of the talk will cover the Sign Language Recogniser project (available on GitHub), in which I used MediaPipe Studio together with TensorFlow, Keras, and OpenCV to train a model that classifies ASL letters from hand-tracking features.

I’ll share the methodology: data collection, feature extraction via MediaPipe, model training, and demo/testing results. I’ll also discuss challenges encountered, such as dealing with gesture variability, lighting and camera differences, latency constraints, and model generalization.
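The abstract does not spell out the feature pipeline. Here is a minimal sketch, assuming MediaPipe's 21 hand landmarks (index 0 is the wrist) have already been extracted as `(x, y, z)` tuples. It translates the points so the wrist is the origin, scales by the farthest landmark to reduce sensitivity to camera distance, flattens the result, and classifies with a nearest-centroid rule. The nearest-centroid classifier is a swapped-in stand-in for the trained Keras model, and the function names are hypothetical.

```python
import math
from typing import Sequence

Landmark = tuple[float, float, float]
NUM_LANDMARKS = 21  # MediaPipe Hands reports 21 landmarks; index 0 is the wrist


def normalize_landmarks(landmarks: Sequence[Landmark]) -> list[float]:
    """Return a wrist-relative, scale-normalized, flattened feature vector."""
    if len(landmarks) != NUM_LANDMARKS:
        raise ValueError(f"expected {NUM_LANDMARKS} landmarks, got {len(landmarks)}")
    wx, wy, wz = landmarks[0]
    shifted = [(x - wx, y - wy, z - wz) for x, y, z in landmarks]
    # Divide by the farthest landmark from the wrist so hand size and
    # distance from the camera matter less; guard against an all-zero hand.
    scale = max(math.sqrt(x * x + y * y + z * z) for x, y, z in shifted) or 1.0
    return [c / scale for point in shifted for c in point]


def centroid(vectors: list[list[float]]) -> list[float]:
    """Component-wise mean of equally sized vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def fit_centroids(samples: dict[str, list[list[float]]]) -> dict[str, list[float]]:
    """samples maps a letter label to a list of feature vectors for that letter."""
    return {label: centroid(vecs) for label, vecs in samples.items()}


def predict(centroids: dict[str, list[float]], features: list[float]) -> str:
    """Pick the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))
```

Because the features are wrist-relative and scale-normalized, the same hand shape shifted in the frame or seen closer to the camera maps to nearly the same vector. Lighting and occlusion problems, by contrast, have to be handled upstream in landmark detection.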

Beyond the technical implementation, I’ll reflect on the broader implications: how accessibility-focused AI projects can promote inclusion, how design decisions affect trust and usability, and how women in AI & data science can lead innovation that is both rigorous and socially meaningful. Attendees will leave with actionable insights for building inclusive AI systems, especially in domains involving rich human modalities such as gesture or sign.

Trust is the currency of successful AI adoption; without it, even the most accurate models risk rejection. This talk will focus on how to operationalize responsible AI deployment practices that embed trust, transparency, and accountability from day one. Using a case study in healthcare AI evaluation, we will walk through practical techniques: secure key management, explainable AI outputs, multi-metric evaluation frameworks, and mechanisms for stakeholder feedback integration. Beyond technical implementation, we will examine how ethical guardrails and clear governance structures transform AI from experimental models into systems people can rely on.
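The abstract names secure key management and multi-metric evaluation without detailing them. The following is a minimal sketch of both, under two assumptions. First, the API key is read from an environment variable (the name `EVAL_API_KEY` is hypothetical) rather than hard-coded. Second, a binary classifier is scored on several metrics at once rather than on accuracy alone.

```python
import os


def load_api_key(var_name: str = "EVAL_API_KEY") -> str:
    """Read a secret from the environment; fail loudly instead of falling back to a hard-coded value."""
    key = os.environ.get(var_name)
    if not key:
        raise RuntimeError(f"{var_name} is not set")
    return key


def evaluate(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(y_true) if y_true else 0.0,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }
```

With `y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]` and `y_pred = [1, 1, 0, 0, 0, 0, 0, 0, 0, 1]`, accuracy is 0.7 but recall is only 0.5. A single headline metric hides exactly this kind of gap.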

Artificial Intelligence (AI) and Generative AI (GenAI) are marketed as upgrades to data-driven decision making in education, promising faster predictions, personalization, and adaptive interventions. Yet rather than addressing the fundamental problems embedded in educational data practices, such as over-reliance on quantifiable metrics, bias, inequity, and lack of transparency, these systems amplify them.

Across platforms such as Learning Management Systems (LMS), institutional dashboards, and predictive models, what is counted as “data” remains narrow: logins, clicks, scores, demographics, and test results. Excluded are lived experiences, complex identities, and structural inequities. These omissions are not accidental; they are design choices shaped by institutional priorities and power.

Drawing on O’Neil and Broussard, this session highlights how data-driven systems risk misinterpretation, reductionism, and exclusion. Participants will engage with scenarios that demonstrate both the promises and pitfalls of triangulating educational data. Together, we will discuss how such data might be misinterpreted, reduced, or stripped of context when filtered through AI systems.

As a starting point to navigate these problems, Critical Data Literacy is introduced as a framework for reimagining data practices through comprehension, critique, and participation. It equips participants engaging with data-driven systems in education and beyond to interrogate how data is produced, whose knowledge counts, and what is excluded or marginalized.

Participants will leave with reflective questions to guide their own practice: Better for whom? What is not on the screen? Whose goals are being personalized? Without this lens, AI risks accelerating inequities under the guise of objectivity.

Whether you call it wrangling, cleaning, or preprocessing, data prep is often the most expensive and time-consuming part of the analytical pipeline. It may involve converting data into machine-readable formats, integrating many datasets, or detecting outliers, and it can be a large source of error if done manually. A lack of machine-readable or integrated data limits connectivity across fields as well as data accessibility, sharing, and reuse, making it a significant contributor to research waste.

For students, it is perhaps the greatest barrier to adopting quantitative tools and advancing their coding and analytical skills. AI tools are available for automating the cleanup and integration, but due to the one-of-a-kind nature of these problems, these approaches still require extensive human collaboration and testing. I review some of the common challenges in data cleanup and integration, approaches for understanding dataset structures, and strategies for developing and testing workflows.
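The following is a small, testable cleanup workflow, offered as an illustration and not as the speaker's method. It standardizes column names, coerces numeric strings such as `"1,000"` or `"n/a"` into floats or `None`, and flags outliers with the interquartile-range rule (values more than 1.5 × IQR beyond the quartiles). The missing-value tokens and function names are assumptions. Keeping each step a pure function makes it straightforward to write the kind of tests a human reviewer would check.

```python
import re
import statistics
from typing import Optional

# Assumed tokens that mean "missing" in the raw files.
MISSING_TOKENS = {"", "na", "n/a", "null", "none", "-"}


def normalize_column(name: str) -> str:
    """'  Total Sales ($) ' -> 'total_sales'"""
    name = re.sub(r"[^0-9a-zA-Z]+", "_", name.strip().lower())
    return name.strip("_")


def to_number(raw: str) -> Optional[float]:
    """Parse '1,234.5' -> 1234.5; missing tokens -> None; anything else raises ValueError."""
    text = raw.strip()
    if text.lower() in MISSING_TOKENS:
        return None
    return float(text.replace(",", ""))


def iqr_outliers(values: list[float], k: float = 1.5) -> list[bool]:
    """Flag values outside [Q1 - k*IQR, Q3 + k*IQR]; needs at least two values."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    spread = q3 - q1
    low, high = q1 - k * spread, q3 + k * spread
    return [v < low or v > high for v in values]


def clean_rows(rows: list[dict[str, str]], numeric_column: str) -> list[dict[str, object]]:
    """Normalize headers, parse one numeric column, and add an outlier flag column."""
    renamed = [{normalize_column(k): v for k, v in row.items()} for row in rows]
    key = normalize_column(numeric_column)
    for row in renamed:
        row[key] = to_number(row[key])
    present = [row[key] for row in renamed if row[key] is not None]
    # quantiles() needs at least two points; with fewer, flag nothing.
    flags = iter(iqr_outliers(present) if len(present) >= 2 else [False] * len(present))
    for row in renamed:
        # Missing values get None instead of a flag.
        row[f"{key}_outlier"] = next(flags) if row[key] is not None else None
    return renamed
```

Raising on unparseable values, instead of silently dropping them, keeps the "large source of error" visible. An AI-suggested cleanup can be checked against the same tests.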

AI has the potential to transform learning, work, and daily life for millions of people, but only if we design with accessibility at the core. Too often, disabled people are underrepresented in datasets, creating systemic barriers that ripple through models and applications. This talk explores how data scientists and technologists can mitigate bias, from building synthetic datasets to fine-tuning LLMs on accessibility-focused corpora. We’ll look at opportunities in multimodal AI, including voice, gesture, AR/VR, and even brain-computer interfaces, that open new pathways for inclusion. Beyond accuracy, we’ll discuss evaluation metrics that measure usability, comprehension, and inclusion, and why testing with humans is essential to closing the gap between model performance and lived experience. Attendees will leave with three tangible ways to integrate accessibility into their own work through datasets, open-source tools, and collaborations. Accessibility is not just an ethical mandate; it’s a driver of innovation, and it begins with thoughtful, human-centered data science.

This presentation will provide an overview of the California Transparency in Frontier Artificial Intelligence Act, the Colorado Artificial Intelligence Act, and the Texas Responsible Artificial Intelligence Governance Act, which are scheduled to go into effect in 2026.

In a rapidly evolving advertising landscape where data, technology, and methodology converge, the pursuit of rigorous yet actionable marketing measurement is more critical, and more complex, than ever. This talk will showcase how modern marketers and applied data scientists employ advanced measurement approaches, such as Marketing Mix Modeling (MMM, both frequentist and Bayesian) and robust experimental designs, including randomized controlled trials and synthetic control-based counterfactuals, to drive causal inference in advertising effectiveness for meaningful business impact.
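Two of the approaches named above can be illustrated compactly. This sketch uses made-up numbers and is not Ovative's method. `geometric_adstock` implements a carryover transform often used as a building block inside marketing mix models: a_t = x_t + decay * a_(t-1), so spend in one week keeps contributing in later weeks. The decay value is an assumption that a real MMM would estimate. `rct_lift` is the simplest readout of a randomized experiment: the difference in conversion rates between treatment and control, with a normal-approximation 95% interval (z = 1.96).

```python
import math


def geometric_adstock(spend: list[float], decay: float) -> list[float]:
    """Carryover effect: a_t = x_t + decay * a_(t-1), with 0 <= decay < 1."""
    if not 0.0 <= decay < 1.0:
        raise ValueError("decay must be in [0, 1)")
    adstocked, carry = [], 0.0
    for x in spend:
        carry = x + decay * carry  # this period's spend plus decayed past effect
        adstocked.append(carry)
    return adstocked


def rct_lift(conv_t: int, n_t: int, conv_c: int, n_c: int,
             z: float = 1.96) -> tuple[float, float, float]:
    """Absolute lift in conversion rate (treatment - control) and a normal-approximation CI."""
    p_t, p_c = conv_t / n_t, conv_c / n_c
    lift = p_t - p_c
    # Standard error of a difference between two independent proportions.
    se = math.sqrt(p_t * (1 - p_t) / n_t + p_c * (1 - p_c) / n_c)
    return lift, lift - z * se, lift + z * se
```

For example, `geometric_adstock([100, 0, 0], 0.5)` returns `[100.0, 50.0, 25.0]`: a single burst of spend keeps contributing, at half strength, in each following period.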

The talk will also address emerging aspects of applied marketing science: open-source methodologies, digital commerce platforms, and the use of artificial intelligence. Innovations from industry giants like Google and Meta, as well as open-source communities exemplified by PyMC-Marketing, have democratized access to methodological advances. The emergence of digital commerce platforms such as Amazon and Walmart, and the rich data they bring, is transforming how customer journeys and campaign effectiveness are measured across channels. Artificial Intelligence is accelerating every facet of the data science workflow, from streamlining coding, modeling, and rapid prototyping (“vibe coding”) to enabling the integration of neural networks and deep learning techniques into traditional MMM toolkits. Collectively, these developments offer new and easy ways to experiment quickly and to learn complex nonlinear dynamics and hidden patterns in marketing data.

Bringing these threads together, the talk will show how Ovative Group—a media and marketing technology firm—integrates domain expertise, open-source solutions, strategic partnerships, and AI automation into comprehensive measurement solutions. Attendees will gain practical insights on bridging academic rigor with business relevance, empowering careers in applied data science, and helping organizations turn marketing analytics into clear, actionable strategies.

As healthcare organizations accelerate their adoption of AI and data-driven systems, the challenge lies not only in innovation but in responsibly scaling these technologies within clinical and operational workflows. This session examines the technical and governance frameworks required to translate AI research into reliable and compliant real-world applications. We will explore best practices in model lifecycle management, data quality assurance, bias detection, regulatory alignment, and human-in-the-loop validation, grounded in lessons from implementing AI solutions across complex healthcare environments. Emphasizing cross-functional collaboration among clinicians, data scientists, and business leaders, the session highlights how to balance technical rigor with clinical relevance and ethical accountability. Attendees will gain actionable insights into building trustworthy AI pipelines, integrating MLOps principles in regulated settings, and delivering measurable improvements in patient care, efficiency, and organizational learning.
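"Bias detection" covers many techniques. One simple, commonly reported check compares a model's positive-prediction rate across patient groups: it reports the gap between the highest and lowest rates (demographic parity difference) and the ratio of the lowest rate to the highest. The sketch below assumes binary predictions and a single group attribute. The 0.8 review threshold is borrowed from the "four-fifths" rule of thumb, and both it and the function names are illustrative assumptions, not clinical guidance.

```python
from collections import defaultdict


def selection_rates(groups: list[str], predictions: list[int]) -> dict[str, float]:
    """Share of positive predictions (1) within each group."""
    if len(groups) != len(predictions):
        raise ValueError("groups and predictions must have the same length")
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for g, p in zip(groups, predictions):
        totals[g] += 1
        positives[g] += p
    return {g: positives[g] / totals[g] for g in totals}


def parity_report(groups: list[str], predictions: list[int],
                  min_ratio: float = 0.8) -> dict[str, object]:
    """Summarize rate gaps and flag the model for human review when the ratio is too low."""
    rates = selection_rates(groups, predictions)
    low, high = min(rates.values()), max(rates.values())
    ratio = low / high if high else 1.0  # no positives anywhere: treat as parity
    return {
        "rates": rates,
        "parity_difference": high - low,
        "disparate_impact_ratio": ratio,
        "needs_review": ratio < min_ratio,
    }
```

For instance, positive-prediction rates of 0.5 and 0.3 give a ratio of 0.6 and set `needs_review`. A human-in-the-loop validation step would take over from there, rather than letting the threshold alone decide.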

Large language models are powerful, but their true potential emerges when they evolve into AI agents: systems that can reason, plan, and take action autonomously. My talk will explore the shift from using models as passive tools to designing agents that actively interact with data, systems, and people.

I will cover:
- GenAI and Agentic AI: How They Differ
- Single-Agent (Monolithic) and Multi-Agent (Modular/Distributed) Architectures
- Open-Source and Closed-Source AI Systems
- Challenges of Integrating Agents with Existing Systems

I will break down the technical building blocks of AI agents, including memory, planning loops, tool integration, and feedback mechanisms. Examples will be used to highlight how agents are being used in workflow automation, knowledge management, and decision support.
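To make these building blocks concrete, here is a deliberately tiny agent loop in plain Python. It is a sketch under heavy assumptions. The `plan` method is a hypothetical rule-based stand-in for an LLM call, the two tools are toys, and memory is just a list of past steps. What it shows is the shape of the loop: plan, call a tool, record the observation in memory, feed that back into the next planning step, and stop on a final answer or when a step budget runs out.

```python
from dataclasses import dataclass, field
from typing import Callable

# Toy tools; a real agent would wrap APIs, databases, or search here.
TOOLS: dict[str, Callable[[str], str]] = {
    "add": lambda arg: str(sum(float(x) for x in arg.split(","))),
    "upper": lambda arg: arg.upper(),
}


@dataclass
class Step:
    action: str
    argument: str
    observation: str


@dataclass
class Agent:
    max_steps: int = 5
    memory: list[Step] = field(default_factory=list)

    def plan(self, task: str) -> tuple[str, str]:
        """Hypothetical stand-in for an LLM: choose the next (action, argument).

        Returns ("final", answer) once it decides the task is done.
        """
        if self.memory:  # feedback: the last observation drives the decision
            return "final", self.memory[-1].observation
        if task.startswith("sum "):
            return "add", task[len("sum "):]
        return "upper", task

    def run(self, task: str) -> str:
        for _ in range(self.max_steps):
            action, argument = self.plan(task)
            if action == "final":
                return argument
            tool = TOOLS.get(action)
            observation = tool(argument) if tool else f"unknown tool: {action}"
            self.memory.append(Step(action, argument, observation))
        return "stopped: step budget exhausted"
```

In a real system, `plan` would send the task and `memory` to a model and parse a structured tool call. The step budget is one simple guardrail against runaway loops, which ties into the maturity and security risks covered in the wrap-up.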

I will wrap up with the areas where the limitations of AI agents still pose risks:
- Assessing the Maturity Cycle of Agents
- Cybersecurity Risks of Agents

By the end, attendees will understand:
- What makes AI agents different from LLMs
- The technical considerations required to build AI agents responsibly
- How to apply this knowledge to begin experimenting with agents

Is artificial intelligence going to take over the world? Have big tech scientists created an artificial lifeform that can think on its own? Is it going to put authors, artists, and others out of business? Are we about to enter an age where computers are better than humans at everything? Our answers to these questions are “no,” “they wish,” “LOL,” and “definitely not.” This kind of thinking is a symptom of a phenomenon known as “AI hype.” Hype looks and smells fishy: it twists words and helps the rich get richer by justifying data theft, motivating surveillance capitalism, and devaluing human creativity in order to replace meaningful work with jobs that treat people like machines.

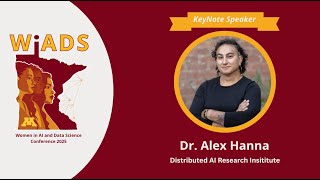

In this talk, I discuss our book The AI Con (coauthored with Dr. Emily M. Bender), which offers a sharp, witty, and wide-ranging take-down of AI hype across its many forms. We show you how to spot AI hype, how to deconstruct it, and how to expose the power grabs it aims to hide. Armed with these tools, you will be prepared to push back against AI hype at work, as a consumer in the marketplace, as a skeptical newsreader, and as a citizen holding policymakers to account. Together, we expose AI hype for what it is: a mask for Big Tech’s drive for profit, with little concern for who it affects.