Register here to save your spot! Join Tristan from dbt and Barry from Hex for a fireside chat and Q&A, discussing everything dbt launched at Coalesce, the future of the data stack, and why they’re bullish on data teams. Plus, network with your peers and fellow conference-goers over happy hour drinks, snacks and data-filled discussions.

talk-data.com

Topic: dbt (data build tool), 87 tagged

Take a break from the hustle and bustle to come hang out with the LGBTQIA+ data nerds. dbt Labs’ Queeries ERG is hosting a casual, alcohol-free hangout with snacks and good vibes in the cozy dbt full refresh Room. At seven, we'll head to the Coalesce Party together with all our new friends. All are welcome.

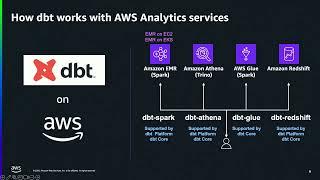

As organizations increasingly adopt modern data stacks, the combination of dbt and AWS Analytics services has emerged as a powerful pairing for analytics engineering at scale. This session will explore proven strategies and hard-learned lessons for optimizing this stack with dbt-athena, dbt-redshift, and dbt-glue to deliver reliable, performant data transformations. We will also cover case studies, best practices, and modern lakehouse scenarios with Apache Iceberg and Amazon S3 Tables.

Delivering trusted data at scale doesn’t have to mean ballooning costs or endless rework. In this session, we’ll explore how state-aware orchestration, powered by Fusion, drives leaner, smarter pipelines in the dbt platform. We’ll cover advanced configurations for even greater efficiency, practitioner tips that save resources, and testing patterns that cut redundancy. The result: faster runs, lower spend, and more time for impactful work.

Hear how EF (Education First) modernized its data stack, moving from a legacy SQL Server-based environment to a modern stack built on Snowflake and dbt. Find out which challenges arose and which key decisions were made during the migration, and learn how to apply some of their key takeaways to your own work.

Fifth Third Bank transformed its MLOps using a feature store built with dbt Cloud. The result? Improved model governance, reduced risk and faster product innovation. In this session, learn how they defined features, automated pipelines and delivered real-time and historical feature views.

AI and dbt unlock the potential for any data analyst to work like a full-stack dbt developer. But without the right guardrails, that freedom can quickly turn into chaos and technical debt. At WHOOP, we embraced analyst autonomy and scaled it responsibly. In this session, you’ll learn how we empowered analysts to build in dbt while protecting data quality, staying aligned with the broader team, and avoiding technical debt. If you’re looking to give analysts more ownership without giving up control, this session will show you how to get there.

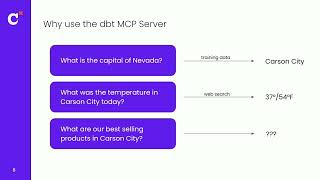

Continue the conversation from the “Building High-Quality AI Workflows with the dbt MCP Server” breakout in this interactive roundtable. We’ll explore how dbt’s MCP Server connects governed data to AI systems, dive deeper into practical use cases, and talk through how organizations can adopt AI safely and effectively. This is your chance to ask detailed technical questions and get answers from product experts, share your team’s challenges and learn from peers who are experimenting with MCP, and explore what’s possible today and influence where we go next. Attendance at the breakout session is encouraged but not required. Come ready to join the discussion and leave with new ideas!

Join us for a roundtable discussion where we’ll go deeper into the ideas shared in the “dbt development in the age of AI: Improving the developer experience in the dbt platform” breakout. Bring your questions, share your take, and connect with peers and presenters. Note: attendance at the breakout session is not required.

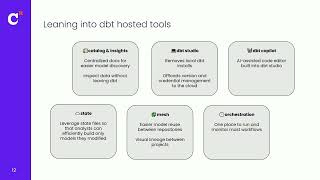

This workshop will cover new analyst-focused user interfaces in dbt. Learn how to develop in dbt Canvas, how to explore within the Insights query page, and how to navigate your data control plane in dbt Catalog. What to bring: You will need to bring your own laptop to complete the hands-on exercises. We will provide sandbox environments for dbt and a data platform.

Eliminate 80% of the manual effort spent writing dbt tests, chasing noisy alerts, and fixing data issues. In this session, you'll see how data teams are using an AI Data SRE that detects, triages, and resolves issues across the entire data stack. We’ll cover everything from AI architecture to optimized incident management, and we'll even show an agent writing production-ready PRs!

In this session, we will cover the tools Workday deliberately bet on to create a truly AI-focused roadmap for data and analytics. You’ll learn about the strategy, alignment, tooling, and architecture put in place, covering both the journey and the technology.

Platform engineers from a global pharmaceutical company invite you to explore our journey in creating a cloud-native, federated data platform using dbt Cloud, Snowflake, and Data Mesh. Discover how we established foundational tools and standards, and developed automation and self-service capabilities.

Manual modeling is out. Multi-agent systems are in. In this session, Dylan shows how his team at Mammoth Growth paired dbt with autonomous agents to handle complex modeling tasks. The result was days of work completed in minutes. You'll see how the system works, what it solved, and how it's reshaped their internal development workflows.

Apache Iceberg is an open table format for large analytical datasets that is now interoperable with most modern data platforms. But the setup is complicated, and caveats abound. Jeremy Cohen will tour the archipelago of Iceberg integrations (across data warehouses, catalogs, and dbt) and demonstrate the promise of cross-platform dbt Mesh to provide flexibility and collaboration for data teams. The more the merrier.

Join us for a roundtable discussion where we’ll go deeper into the ideas shared in the “Turn metadata into meaning: Build context, share insights, and make better decisions with dbt Catalog” breakout. Bring your questions, share your take, and connect with peers and presenters. Note: attendance at the breakout session is not required.

Get certified at Coalesce! Choose from two certification exams.

The dbt Analytics Engineering Certification Exam is designed to evaluate your ability to:
- Build, test, and maintain models to make data accessible to others
- Use dbt to apply engineering principles to analytics infrastructure

We recommend that you have at least SQL proficiency and 6+ months of experience working in dbt (self-hosted dbt or the dbt platform) before attempting the exam.

The dbt Architect Certification Exam assesses your ability to design secure, scalable dbt implementations, with a focus on:
- Environment orchestration
- Role-based access control
- Integrations with other tools
- Collaborative development workflows aligned with best practices

What to expect:
- Your purchase includes one attempt at one of the two in-person exams at Coalesce.
- You will let the proctor know which certification you are sitting for.
- Please arrive on time: this is a closed-door certification, and attendees will not be let in after the doors are closed.

What to bring: You will need to bring your own laptop to take the exam.

Duration: 2 hours
Fee: $100

Trainings and certifications are not offered separately and must be purchased with a Coalesce pass. Trainings and certifications are not available for Coalesce Online passes. If you no-show for your certification, you will not be refunded.

Come see how dbt empowers teams to harness Apache Iceberg and Snowflake Open Catalog for reliable, scalable, and agile analytics pipelines.

With 700+ monthly BI users, Cribl scales self-service through governance, SDLC workflows, and smart AI practices. Join Priya Gupta and Chris Merrick to see how Git, Omni’s dbt integration, and a $20 auto-doc hack to enrich 100+ models help deliver fast, trusted AI-powered insights.

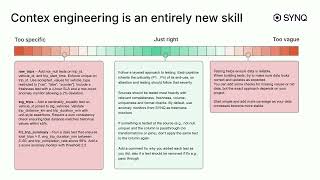

Modern data teams are tasked with bringing AI into the analytics stack in a way that is trustworthy, scalable, and deeply integrated. In this session, we’ll show how the dbt MCP server connects LLMs and agents to structured and governed data to power use cases for development, discovery, and querying.