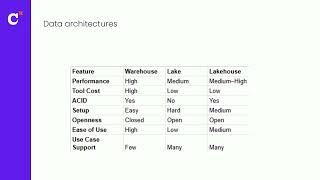

Snowflake is reshaping data management by integrating AI, analytics, and enterprise workloads into a single cloud platform. Snowflake: The Definitive Guide is a comprehensive resource for data architects, engineers, and business professionals looking to harness Snowflake's evolving capabilities, including Cortex AI, Snowpark, and Polaris Catalog for Apache Iceberg. This updated edition provides real-world strategies and hands-on activities for optimizing performance, securing data, and building AI-driven applications.

With hands-on SQL examples and best practices, this book helps readers process structured and unstructured data, implement scalable architectures, and integrate Snowflake's AI tools seamlessly. Whether you're setting up accounts, managing access controls, or leveraging generative AI, this guide equips you with the expertise to maximize Snowflake's potential.

- Implement AI-powered workloads with Snowflake Cortex
- Explore Snowsight and Streamlit for no-code development
- Ensure security with access control and data governance
- Optimize storage, queries, and computing costs
- Design scalable data architectures for analytics and machine learning