PySpark’s Arrow-based Python UDFs open the door to dramatically faster data processing by avoiding expensive serialization overhead. At the same time, Polars, a high-performance DataFrame library built on Rust, offers zero-copy interoperability with Apache Arrow. This talk shows how combining these two technologies unlocks new performance gains: writing Arrow UDFs with Polars in PySpark can deliver speedups over regular Python UDFs. Attendees will learn how Arrow UDFs work in PySpark, how they can be used with other data processing libraries, and how to apply this approach to real-world Spark pipelines for faster, more efficient workloads.

talk-data.com

Topic

Arrow

Apache Arrow

We were told to scale compute. But what if the real problem was never about big data, but about bad data access? In this talk, we’ll unpack two powerful, often misunderstood techniques (projection pushdown and predicate pushdown) and why they matter more than ever in a world where we want lightweight, fast queries over large datasets. These optimizations aren’t just academic: they’re the difference between querying a terabyte in seconds vs. minutes. We’ll show how systems like Flink and DuckDB leverage these techniques, what limits them (hello, Protobuf), and how smart schema and storage design, especially in formats like Iceberg and Arrow, can unlock dramatic speed gains. Along the way, we’ll highlight the importance of landing data in queryable formats, and why indexing and query engines matter just as much as compute. This talk is for anyone who wants to stop fully scanning their data lakes just to read one field.
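To make the two techniques concrete, here is a minimal sketch in plain Python. It is not taken from the talk: `RowGroup`, `scan`, and the sample data are hypothetical, and the per-group min/max statistics are computed on the fly here, whereas real columnar formats keep them in file metadata. The scan skips whole row groups whose statistics rule out the predicate (predicate pushdown) and reads only the columns the query needs (projection pushdown).

```python
from dataclasses import dataclass


@dataclass
class RowGroup:
    """Hypothetical row group: each column is stored as its own list."""
    columns: dict  # column name -> list of values

    def max_of(self, name):
        # Stand-in for a max statistic that a real format stores in metadata.
        return max(self.columns[name])


def scan(row_groups, select, column, lower_bound):
    """Return rows where `column >= lower_bound`, plus the number of cells read."""
    rows, cells_read = [], 0
    for group in row_groups:
        # Predicate pushdown: skip the group if no row in it can match.
        if group.max_of(column) < lower_bound:
            continue
        # Projection pushdown: touch only the selected and filtered columns.
        needed = set(select) | {column}
        n = len(group.columns[column])
        cells_read += n * len(needed)
        for i in range(n):
            if group.columns[column][i] >= lower_bound:
                rows.append({name: group.columns[name][i] for name in select})
    return rows, cells_read


GROUPS = [
    RowGroup({
        "user_id": [1, 2, 3],
        "country": ["DE", "FR", "DE"],
        "amount": [10.0, 25.5, 7.25],
        "payload": ["blob-1", "blob-2", "blob-3"],
    }),
    RowGroup({
        "user_id": [4, 5, 6],
        "country": ["US", "FR", "DE"],
        "amount": [99.0, 3.0, 42.0],
        "payload": ["blob-4", "blob-5", "blob-6"],
    }),
]

rows, cells_read = scan(GROUPS, select=["user_id"], column="amount", lower_bound=40.0)
full_scan_cells = sum(len(g.columns) * len(g.columns["amount"]) for g in GROUPS)
print(rows, cells_read, full_scan_cells)
```

In this toy data the first row group is skipped entirely and only two of the four columns are read from the second, so the scan touches 6 cells instead of the 24 a full scan would read.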

Sparrow is a lightweight C++20 idiomatic implementation of the Apache Arrow memory specification. Designed for compatibility with the Arrow C data interface, Sparrow enables seamless data exchange with other libraries supporting the Arrow format. It also offers high-level APIs, ensuring interoperability with standard modern C++ algorithms.

Challenges in economics and governance models for open-source scientific projects

In this presentation, the CEOs of two companies at the forefront of open-source scientific software development - Sylvain Corlay of QuantStack and Yann Lechelle of Probabl - examine the intricate challenges of open-source funding and governance and reflect on how these two aspects interconnect.

We start by reflecting on the origins of the open-source movement within the scientific community, and delve into the contemporary challenges of operating businesses and identifying sustainable economic models that both leverage and contribute to open-source software.

In particular, we highlight the unique approaches and experiences of QuantStack and Probabl, which primarily contribute to multi-stakeholder scientific projects such as scikit-learn, Jupyter, Apache Arrow, or conda-forge.

Four years ago, I had no idea what PyArrow was—or how open source development worked. But through mentorship, collaboration, and learning in public, I found not just a place in the community, but a sense of how open source evolves and connects.

In this keynote, I’ll share my experience on how complex projects like Apache Arrow evolve through shared protocols, cross-project conversations, and the people behind them. Along the way, we’ll look at the human side of technical work, the quiet strength of standards, and how imposter syndrome, while uncomfortable, has sharpened my curiosity and helped me find my own way of contributing.

Keynote talk by Alenka Frim, Apache Arrow maintainer, data engineer at United.Cloud.

The practice of data science in genomics and computational biology is fraught with friction. This is largely due to a tight coupling of bioinformatic tools to file input/output. While omic data is specialized and the storage formats for high-throughput sequencing and related data are often standardized, the adoption of emerging open standards not tied to bioinformatics can help better integrate bioinformatic workflows into the wider data science, visualization, and AI/ML ecosystems. Here, we present two bridge libraries as short vignettes for composable bioinformatics. First, we present Anywidget, an architecture and toolkit based on modern web standards for sharing interactive widgets across all Jupyter-compatible runtimes, including JupyterLab, Google Colab, VSCode, and more. Second, we present Oxbow, a Rust and Python-based adapter library that unifies access to common genomic data formats by efficiently transforming queries into Apache Arrow, a standard in-memory columnar representation for tabular data analytics. Together, we demonstrate the composition of these libraries to build custom, connected genomic analysis and visualization environments. We propose that components such as these, which leverage scientific domain-agnostic standards to unbundle specialized file manipulation, analytics, and web interactivity, can serve as reusable building blocks for composing flexible genomic data analysis and machine learning workflows as well as systems for exploratory data analysis and visualization.

We’ll explore how CipherOwl Inc. constructed a near real-time, multi-chain data lakehouse to power anti-money laundering (AML) monitoring at petabyte scale. We will walk through the end-to-end architecture, which integrates cutting-edge open-source technologies and AI-driven analytics to handle massive on-chain data volumes seamlessly. Off-chain intelligence complements this to meet rigorous AML requirements. At the core of our solution is ChainStorage, an open-source project started by Coinbase that provides robust blockchain data ingestion and block-level serving. We enhanced it with Apache Spark™ and Arrow™, coupled for high-throughput processing and efficient data serialization, backed by Delta Lake and Kafka. For the serving layer, we employ StarRocks to deliver lightning-fast SQL analytics over vast datasets. Finally, our system incorporates machine learning and AI agents for continuous data curation and near real-time insights, which are crucial for tackling on-chain AML challenges.

Apache Spark™ has introduced Arrow-optimized APIs such as Pandas UDFs and the Pandas Functions API, providing high performance for Python workloads. Yet, many users continue to rely on regular Python UDFs due to their simple interface, especially when advanced Python expertise is not readily available. This talk introduces a powerful new feature in Apache Spark that brings Arrow optimization to regular Python UDFs. With this enhancement, users can leverage performance gains without modifying their existing UDFs — simply by enabling a configuration setting or toggling a UDF-level parameter. Additionally, we will dive into practical tips and features for using Arrow-optimized Python UDFs effectively, exploring their strengths and limitations. Whether you’re a Spark beginner or an experienced user, this session will allow you to achieve the best of both simplicity and performance in your workflows with regular Python UDFs.
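As a rough sketch of the two switches the abstract mentions, assuming Spark 3.5 or later: the `useArrow` argument of `pyspark.sql.functions.udf` and the `spark.sql.execution.pythonUDF.arrow.enabled` setting are the documented names in that release, while `normalize_name`, `make_arrow_udf`, and `enable_arrow_udfs` are hypothetical helpers invented here. PySpark is imported lazily so the plain function can be exercised without a cluster.

```python
# Session-level switch for Arrow-optimized Python UDFs (assumes Spark 3.5+).
ARROW_UDF_CONF = "spark.sql.execution.pythonUDF.arrow.enabled"


def normalize_name(name):
    """Ordinary row-at-a-time Python logic; nothing here is Arrow-specific."""
    return None if name is None else name.strip().title()


def make_arrow_udf():
    """Wrap the unchanged function as an Arrow-optimized UDF (per-UDF switch)."""
    from pyspark.sql.functions import udf  # lazy import: needs PySpark installed
    return udf(normalize_name, returnType="string", useArrow=True)


def enable_arrow_udfs(spark):
    """Turn the optimization on for every regular Python UDF in this session."""
    spark.conf.set(ARROW_UDF_CONF, "true")


cleaned = normalize_name("  ada lovelace ")
print(cleaned)
```

With a running session, usage would look like `df.select(make_arrow_udf()("name"))`; the body of `normalize_name` is the same either way, which is the point the abstract makes about keeping existing UDFs unmodified.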

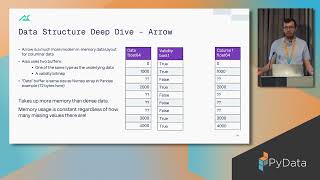

Lots of data in the real world has missing values, but historically prevalent data science tools have had limited support for such data. This talk will compare traditional numerical approaches, the more modern alternative Arrow, as well as ArcticDB, the client-side Dataframe database developed at Man Group.
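The contrast between a sentinel value and Arrow's approach can be sketched in plain Python. This is an illustration rather than material from the talk: `pack_validity`, `is_valid`, and the sample readings are hypothetical, and the bitmap follows the Arrow columnar layout, where bit i of the validity buffer (least significant bit first) is 1 when slot i holds a value.

```python
import math


def pack_validity(values):
    """Build an Arrow-style validity bitmap: bit i is 1 if slot i is non-null."""
    bitmap = bytearray((len(values) + 7) // 8)
    for i, value in enumerate(values):
        if value is not None:
            bitmap[i // 8] |= 1 << (i % 8)
    return bytes(bitmap)


def is_valid(bitmap, i):
    """Check whether slot i is marked as non-null."""
    return bool((bitmap[i // 8] >> (i % 8)) & 1)


readings = [3, None, 8, None, 11]

# Sentinel approach: integers are widened to float so NaN can mark the gaps.
as_float = [float("nan") if v is None else float(v) for v in readings]

# Bitmap approach: the data keeps its integer type; nulls live in the bitmap.
# The placeholder 0 in null slots is arbitrary and is never read.
data = [0 if v is None else v for v in readings]
validity = pack_validity(readings)

total = sum(v for i, v in enumerate(data) if is_valid(validity, i))
print(as_float, validity, total)
```

The sentinel version changes the column's type and works only where a spare value like NaN exists; the bitmap version costs one extra bit per slot but applies uniformly to integers, strings, and nested types.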

Wes McKinney and I chat about Positron, Arrow, how he created Pandas and Arrow, and what makes him tick.

Summary

The rapid growth of generative AI applications has prompted a surge of investment in vector databases. While there are numerous engines available now, Lance is designed to integrate with data lake and lakehouse architectures. In this episode Weston Pace explains the inner workings of the Lance format for table definitions and file storage, and the optimizations that they have made to allow for fast random access and efficient schema evolution. In addition to integrating well with data lakes, Lance is also a first-class participant in the Arrow ecosystem, making it easy to use with your existing ML and AI toolchains. This is a fascinating conversation about a technology that is focused on expanding the range of options for working with vector data.

Announcements

- Hello and welcome to the Data Engineering Podcast, the show about modern data management.
- Imagine catching data issues before they snowball into bigger problems. That’s what Datafold’s new Monitors do. With automatic monitoring for cross-database data diffs, schema changes, key metrics, and custom data tests, you can catch discrepancies and anomalies in real time, right at the source. Whether it’s maintaining data integrity or preventing costly mistakes, Datafold Monitors give you the visibility and control you need to keep your entire data stack running smoothly. Want to stop issues before they hit production? Learn more at dataengineeringpodcast.com/datafold today!
- Your host is Tobias Macey and today I'm interviewing Weston Pace about the Lance file and table format for column-oriented vector storage.

Interview

- Introduction
- How did you get involved in the area of data management?
- Can you describe what Lance is and the story behind it?
- What are the core problems that Lance is designed to solve?
- What is explicitly out of scope?
- The README mentions that it is straightforward to convert to Lance from Parquet. What is the motivation for this compatibility/conversion support?
- What formats does Lance replace or obviate?
- In terms of data modeling Lance obviously adds a vector type, what are the features and constraints that engineers should be aware of when modeling their embeddings or arbitrary vectors?
- Are there any practical or hard limitations on vector dimensionality?
- When generating Lance files/datasets, what are some considerations to be aware of for balancing file/chunk sizes for I/O efficiency and random access in cloud storage?
- I noticed that the file specification has space for feature flags. How has that aided in enabling experimentation in new capabilities and optimizations?
- What are some of the engineering and design decisions that were most challenging and/or had the biggest impact on the performance and utility of Lance?
- The most obvious interface for reading and writing Lance files is through LanceDB. Can you describe the use cases that it focuses on and its notable features?
- What are the other main integrations for Lance?
- What are the opportunities or roadblocks in adding support for Lance and vector storage/indexes in e.g. Iceberg or Delta to enable its use in data lake environments?
- What are the most interesting, innovative, or unexpected ways that you have seen Lance used?
- What are the most interesting, unexpected, or challenging lessons that you have learned while working on the Lance format?
- When is Lance the wrong choice?
- What do you have planned for the future of Lance?

Contact Info

- LinkedIn
- GitHub

Parting Question

- From your perspective, what is the biggest gap in the tooling or technology for data management today?

Links

Lance Format, LanceDB, Substrait, PyArrow, FAISS, Pinecone (Podcast Episode), Parquet, Iceberg (Podcast Episode), Delta Lake (Podcast Episode), PyLance, Hilbert Curves, SIFT Vectors, S3 Express, Weka, DataFusion, Ray Data, Torch Data Loader, HNSW == Hierarchical Navigable Small Worlds vector index, IVFPQ vector index, GeoJSON, Polars

The intro and outro music is from The Hug by The Freak Fandango Orchestra / CC BY-SA

Summary

In this episode of the Data Engineering Podcast, Adrian Brudaru and Marcin Rudolf, co-founders of dltHub, delve into the principles guiding dlt's development, emphasizing its role as a library rather than a platform, and its integration with lakehouse architectures and AI application frameworks. The episode explores the impact of the Python ecosystem's growth on dlt, highlighting integrations with high-performance libraries and the benefits of Arrow and DuckDB. The episode concludes with a discussion on the future of dlt, including plans for a portable data lake and the importance of interoperability in data management tools.

Announcements

- Hello and welcome to the Data Engineering Podcast, the show about modern data management.
- Imagine catching data issues before they snowball into bigger problems. That’s what Datafold’s new Monitors do. With automatic monitoring for cross-database data diffs, schema changes, key metrics, and custom data tests, you can catch discrepancies and anomalies in real time, right at the source. Whether it’s maintaining data integrity or preventing costly mistakes, Datafold Monitors give you the visibility and control you need to keep your entire data stack running smoothly. Want to stop issues before they hit production? Learn more at dataengineeringpodcast.com/datafold today!
- Your host is Tobias Macey and today I'm interviewing Adrian Brudaru and Marcin Rudolf, cofounders at dltHub, about the growth of dlt and the numerous ways that you can use it to address the complexities of data integration.

Interview

- Introduction
- How did you get involved in the area of data management?
- Can you describe what dlt is and how it has evolved since we last spoke (September 2023)?
- What are the core principles that guide your work on dlt and dlthub?
- You have taken a very opinionated stance against managed extract/load services. What are the shortcomings of those platforms, and when would you argue in their favor?
- The landscape of data movement has undergone some interesting changes over the past year. Most notably, the growth of PyAirbyte and the rapid shifts around the needs of generative AI stacks (vector stores, unstructured data processing, etc.). How has that informed your product development and positioning?
- The Python ecosystem, and in particular data-oriented Python, has also undergone substantial evolution. What are the developments in the libraries and frameworks that you have been able to benefit from?
- What are some of the notable investments that you have made in the developer experience for building dlt pipelines?
- How have the interfaces for source/destination development improved?
- You recently published a post about the idea of a portable data lake. What are the missing pieces that would make that possible, and what are the developments/technologies that put that idea within reach?
- What is your strategy for building a sustainable product on top of dlt?
- How does that strategy help to form a "virtuous cycle" of improving the open source foundation?
- What are the most interesting, innovative, or unexpected ways that you have seen dlt used?
- What are the most interesting, unexpected, or challenging lessons that you have learned while working on dlt?
- When is dlt the wrong choice?
- What do you have planned for the future of dlt/dlthub?

Contact Info

- Adrian: LinkedIn
- Marcin: LinkedIn

Parting Question

- From your perspective, what is the biggest gap in the tooling or technology for data management today?

Closing Announcements

- Thank you for listening! Don't forget to check out our other shows. Podcast.init covers the Python language, its community, and the innovative ways it is being used. The AI Engineering Podcast is your guide to the fast-moving world of building AI systems.
- Visit the site to subscribe to the show, sign up for the mailing list, and read the show notes.
- If you've learned something or tried out a project from the show then tell us about it! Email [email protected] with your story.

Links

dlt (Podcast Episode), PyArrow, Polars, Ibis, DuckDB (Podcast Episode), dlt Data Contracts, RAG == Retrieval Augmented Generation (AI Engineering Podcast Episode), PyAirbyte, OpenAI o1 Model, LanceDB, QDrant Embedded, Airflow, GitHub Actions, Arrow DataFusion, Apache Arrow, PyIceberg, Delta-RS, SCD2 == Slowly Changing Dimensions, SQLAlchemy, SQLGlot, FSSpec, Pydantic, Spacy, Entity Recognition, Parquet File Format, Python Decorator, REST API Toolkit, OpenAPI Connector Generator, ConnectorX, Python no-GIL, Delta Lake (Podcast Episode), SQLMesh (Podcast Episode), Hamilton, Tabular, PostHog (Podcast.init Episode), AsyncIO, Cursor.AI, Data Mesh (Podcast Episode), FastAPI, LangChain, GraphRAG (AI Engineering Podcast Episode), Property Graph, Python uv

The intro and outro music is from The Hug by The Freak Fandango Orchestra / CC BY-SA

Dive into efficient data handling with 'In-Memory Analytics with Apache Arrow.' This book explores Apache Arrow, a powerful open-source project that revolutionizes how tabular and hierarchical data are processed. You'll learn to streamline data pipelines, accelerate analysis, and utilize high-performance tools for data exchange.

What this Book will help me do

- Understand and utilize the Apache Arrow in-memory data format for your data analysis needs.
- Implement efficient and high-speed data pipelines using Arrow subprojects like Flight SQL and Acero.
- Enhance integration and performance in analysis workflows by using tools like Parquet and Snowflake with Arrow.
- Master chaining and reusing computations across languages and environments with Arrow's cross-language support.
- Apply in real-world scenarios by integrating Apache Arrow with analytics systems like Dremio and DuckDB.

Author(s)

Matthew Topol, the author of this book, brings 15 years of technical expertise in the realm of data processing and analysis. Having worked across various environments and languages, Matthew offers insights into optimizing workflows using Apache Arrow. His approachable writing style ensures that complex topics are comprehensible.

Who is it for?

This book is tailored for developers, data engineers, and data scientists eager to enhance their analytic toolset. Whether you're a beginner or have experience in data analysis, you'll find the concepts actionable and transformative. If you are curious about improving the performance and capabilities of your analytic pipelines or tools, this book is for you.

Almost all modern CPUs have a vector processing unit, making it possible to write faster code for a large category of problems, at the cost of portability: there are many different instruction sets in the wild! The xsimd library makes it possible to write portable C++ code that targets different architectures and sub-architectures. The specialization choice can be made at compile-time or at runtime, using a provided dispatching mechanism. Intel, ARM, RISC-V and WebAssembly are supported, and the library has already been adopted by Xtensor, Pythran, Apache Arrow and Firefox.

Apache Arrow has become a de-facto standard for efficient in-memory columnar data representation. Beyond the standardized and language-independent columnar memory format for tabular data, the Apache Arrow project also has a growing set of supplementary specifications and language implementations. This talk will give an overview of the recent developments in the Apache Arrow ecosystem, including ADBC, nanoarrow, new data types, and the Arrow PyCapsule protocol.

Over the last decade, QuestDB has been at the forefront of handling time series data with a focus on speed and efficiency. In this talk, I’ll share practical insights from our experience serving thousands of users, highlighting what we’ve learned about building and maintaining a fast database that can ingest millions of events per second.

QuestDB, an open-source time series database, has traditionally relied on a custom-built, non-standard data storage format designed for performance. As we move forward, we’re actively developing its architecture to support open formats like Apache Parquet and Arrow, reflecting a broader industry shift. I’ll discuss the engineering challenges we’ve faced during this transition, the new possibilities it creates, and why these changes are crucial for the evolving database landscape.

Through live demos, I’ll showcase QuestDB’s performance in real-time data ingestion and queries, and demonstrate some of the features enabled by these new formats.

Jami McGraw, Director of Technology at Arrow Electronics, joins us today on the podcast to talk about where we are in the evolution of data hardware. We also discuss how AI is driving leaps in technology, how to decide on the right compute for your workflow, and tools you can use to feel more confident about your technology decisions.

@ArrowFiveYearsOut #data #ai #artificialintelligence #datastorage #edgetocloud #datascience #technology

Cyberpunk by jiglr | https://soundcloud.com/jiglrmusic
Music promoted by https://www.free-stock-music.com
Creative Commons Attribution 3.0 Unported License https://creativecommons.org/licenses/by/3.0/deed.en_US

Hosted on Acast. See acast.com/privacy for more information.