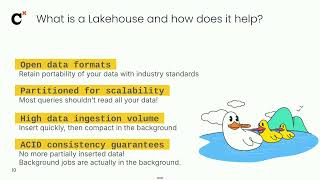

Engineering Lakehouses with Open Table Formats introduces the architecture and capabilities of open table formats like Apache Iceberg, Apache Hudi, and Delta Lake. The book guides you through the design, implementation, and optimization of lakehouses that can handle modern data processing requirements effectively with real-world practical insights.

What this Book will help me do

- Understand the fundamentals of open table formats and their benefits in lakehouse architecture.
- Learn how to implement performant data processing using tools like Apache Spark and Flink.
- Master advanced topics like indexing, partitioning, and interoperability between data formats.
- Explore data lifecycle management and integration with frameworks like Apache Airflow and dbt.
- Build secure lakehouses with regulatory compliance using best practices detailed in the book.

Author(s)

Dipankar Mazumdar and Vinoth Govindarajan are seasoned professionals with extensive experience in big data processing and software architecture. They bring their expertise from working with data lakehouses and are known for their ability to explain complex technical concepts clearly. Their collaborative approach brings valuable insights into the latest trends in data management.

Who is it for?

This book is ideal for data engineers, architects, and software professionals aiming to master modern lakehouse architectures. If you are familiar with data lakes or warehouses and wish to transition to an open data architectural design, this book is suited for you. Readers should have basic knowledge of databases, Python, and Apache Spark for the best experience.