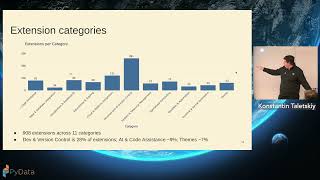

What does the JupyterLab extension ecosystem actually look like in 2025? While extensions drive much of JupyterLab's practical value, their overall landscape remains largely unexplored. This talk analyzes public PyPI (via BigQuery) and GitHub data to quantify growth, momentum, and health: monthly downloads by category, release recency, star-download relationships, and the rise of AI-focused extensions. I will present my approach for building this analysis pipeline and offer lessons learned. Finally, I will demonstrate of an open, read-only web catalog built on this data set.