Date: 2024-10-22; Time: 10:00-12:00 EST. What You Will Learn: Introduction to AWS cloud security; Key AWS security services and best practices; Strategies for improving your AWS security posture; Overview of AWS certification paths and preparation tips.

talk-data.com

talk-data.com

Topic

AWS

Amazon Web Services (AWS)

837

tagged

Activity Trend

Top Events

The "LLM Engineer's Handbook" is your comprehensive guide to mastering Large Language Models from concept to deployment. Written by leading experts, it combines theoretical foundations with practical examples to help you build, refine, and deploy LLM-powered solutions that solve real-world problems effectively and efficiently. What this Book will help me do Understand the principles and approaches for training and fine-tuning Large Language Models (LLMs). Apply MLOps practices to design, deploy, and monitor your LLM applications effectively. Implement advanced techniques such as retrieval-augmented generation (RAG) and preference alignment. Optimize inference for high performance, addressing low-latency and high availability for production systems. Develop robust data pipelines and scalable architectures for building modular LLM systems. Author(s) Paul Iusztin and Maxime Labonne are experienced AI professionals specializing in natural language processing and machine learning. With years of industry and academic experience, they are dedicated to making complex AI concepts accessible and actionable. Their collaborative authorship ensures a blend of theoretical rigor and practical insights tailored for modern AI practitioners. Who is it for? This book is tailored for AI engineers, NLP professionals, and LLM practitioners who wish to deepen their understanding of Large Language Models. Ideal readers possess some familiarity with Python, AWS, and general AI concepts. If you aim to apply LLMs to real-world scenarios or enhance your expertise in AI-driven systems, this handbook is designed for you.

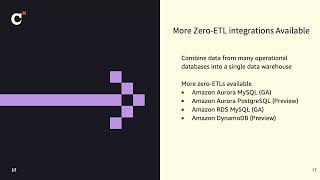

AWS offers the most scalable, highest performing data services to keep up with the growing volume and velocity of data to help organizations to be data-driven in real-time. AWS helps customers unify diverse data sources by investing in a zero ETL future and enable end-to-end data governance so your teams are free to move faster with data. Data teams running dbt Cloud are able to deploy analytics code, following software engineering best practices such as modularity, continuous integration and continuous deployment (CI/CD), and embedded documentation. In this session, we will dive deeper into how to get near real-time insight on petabytes of transaction data using Amazon Aurora zero-ETL integration with Amazon Redshift and dbt Cloud for your Generative AI workloads.

Speakers: Neela Kulkarni Solutions Architect AWS

Neeraja Rentachintala Director, Product Management Amazon

Read the blog to learn about the latest dbt Cloud features announced at Coalesce, designed to help organizations embrace analytics best practices at scale https://www.getdbt.com/blog/coalesce-2024-product-announcements

Unlock the potential of serverless data transformations by integrating Amazon Athena with dbt (Data Build Tool). In this presentation, we'll explore how combining Athena's scalable, serverless query service with dbt's powerful SQL-based transformation capabilities simplifies data workflows and eliminates the need for infrastructure management. Discover how this integration addresses common challenges like managing large-scale data transformations and needing agile analytics, enabling your organization to accelerate insights, reduce costs, and enhance decision-making.

Speakers: BP Yau Partner Solutions Architect AWS

Darshit Thakkar Technical Product Manager AWS

Read the blog to learn about the latest dbt Cloud features announced at Coalesce, designed to help organizations embrace analytics best practices at scale https://www.getdbt.com/blog/coalesce-2024-product-announcements

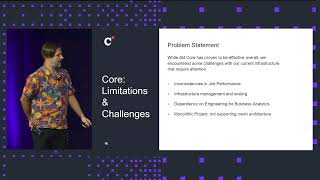

Join us as we share our journey of migrating from dbt Core to dbt Cloud. We'll discuss why we made this shift – focusing on security, ownership, and standardization. Starting with separate team-based projects on dbt Core, we moved towards a unified structure, and eventually embraced dbt Cloud. Now, all teams follow a common structure and standardized requirements, ensuring better security and collaboration.

In our session, we'll explore how we improved our data analytics processes by migrating from dbt Core to dbt Cloud. Initially, each team had its way of working on dbt Core, leading to security risks and inconsistent practices. To address this, we transitioned to a more unified approach on dbt Core. This year we migrated dbt Cloud, which allowed us to centralize our data analytics workflows, enhancing security and promoting collaboration.

For scheduling we manage our own Airflow instance using AWS EKS. We use Datahub as data catalog.

Key points: Enhanced Security: dbt Cloud provided robust security features, helping us safeguard our data pipelines. Ownership and Collaboration: With dbt Cloud, teams took ownership of their projects while collaborating more effectively. Standardization: We enforced standardized requirements across all projects, ensuring consistency and efficiency, using dbt-project-evaluator.

Speakers: Alejandro Ivanez Platform Engineer DPG Media

Mathias Lavaert Principal Platform Engineer DPG Media

Read the blog to learn about the latest dbt Cloud features announced at Coalesce, designed to help organizations embrace analytics best practices at scale https://www.getdbt.com/blog/coalesce-2024-product-announcements

Since the beginning of 2024, the Warner Brothers Discovery team supporting the CNN data platform has been undergoing an extensive migration project from dbt Core to dbt Cloud. Concurrently, the team is also segmenting their project into multi-project frameworks utilizing dbt Mesh. In this talk, Zachary will review how this transition has simplified data pipelines, improved pipeline performance and data quality, and made data collaboration at scale more seamless.

He'll discuss how dbt Cloud features like the Cloud IDE, automated testing, documentation, and code deployment have enabled the team to standardize on a single developer platform while also managing dependencies effectively. He'll share details on how the automation framework they built using Terraform streamlines dbt project deployments with dbt Cloud to a ""push-button"" process. By leveraging an infrastructure as code experience, they can orchestrate the creation of environment variables, dbt Cloud jobs, Airflow connections, and AWS secrets with a unified approach that ensures consistency and reliability across projects.

Speakers: Mamta Gupta Staff Analytics Engineer Warner Brothers Discovery

Zachary Lancaster Manager, Data Engineering Warner Brothers Discovery

Read the blog to learn about the latest dbt Cloud features announced at Coalesce, designed to help organizations embrace analytics best practices at scale https://www.getdbt.com/blog/coalesce-2024-product-announcements

Navnit Shukla is a solutions architect with AWS. He joins me to chat about data wrangling and architecting solutions on AWS, writing books, and much more.

Navnit is also in the Coursera Data Engineering Specialization, dropping knowledge on data engineering on AWS. Check it out!

Data Wrangling on AWS: https://www.amazon.com/Data-Wrangling-AWS-organize-analysis/dp/1801810907

LinkedIn: https://www.linkedin.com/in/navnitshukla/

This book covers modern data engineering functions and important Python libraries, to help you develop state-of-the-art ML pipelines and integration code. The book begins by explaining data analytics and transformation, delving into the Pandas library, its capabilities, and nuances. It then explores emerging libraries such as Polars and CuDF, providing insights into GPU-based computing and cutting-edge data manipulation techniques. The text discusses the importance of data validation in engineering processes, introducing tools such as Great Expectations and Pandera to ensure data quality and reliability. The book delves into API design and development, with a specific focus on leveraging the power of FastAPI. It covers authentication, authorization, and real-world applications, enabling you to construct efficient and secure APIs using FastAPI. Also explored is concurrency in data engineering, examining Dask's capabilities from basic setup to crafting advanced machine learning pipelines. The book includes development and delivery of data engineering pipelines using leading cloud platforms such as AWS, Google Cloud, and Microsoft Azure. The concluding chapters concentrate on real-time and streaming data engineering pipelines, emphasizing Apache Kafka and workflow orchestration in data engineering. Workflow tools such as Airflow and Prefect are introduced to seamlessly manage and automate complex data workflows. What sets this book apart is its blend of theoretical knowledge and practical application, a structured path from basic to advanced concepts, and insights into using state-of-the-art tools. With this book, you gain access to cutting-edge techniques and insights that are reshaping the industry. This book is not just an educational tool. It is a career catalyst, and an investment in your future as a data engineering expert, poised to meet the challenges of today's data-driven world. What You Will Learn Elevate your data wrangling jobs by utilizing the power of both CPU and GPU computing, and learn to process data using Pandas 2.0, Polars, and CuDF at unprecedented speeds Design data validation pipelines, construct efficient data service APIs, develop real-time streaming pipelines and master the art of workflow orchestration to streamline your engineering projects Leverage concurrent programming to develop machine learning pipelines and get hands-on experience in development and deployment of machine learning pipelines across AWS, GCP, and Azure Who This Book Is For Data analysts, data engineers, data scientists, machine learning engineers, and MLOps specialists

At TIER Mobility, we successfully reduced our cloud expenses by over 60% in less than two years. While this was a significant achievement, the journey wasn’t without its challenges. In this presentation, I’ll share insights into the potential pitfalls of cost reduction strategies that might end up being more expensive in the long run.

Presenter: Sana Shah. Part of the AWS Women’s User Group Berlin event.

Fundamentals of Generative AI and LLMs; best practices for building AI solutions on AWS; overview of AWS services like SageMaker; real-world applications and case studies.

Telenet, an affiliate of Liberty Global, is a market leading telecom known for its continuous customer-centric innovation using AI and data analytics. As an early adopter of Snowflake, they use data to drive cutting edge innovation such as hyper personalized customer services and privacy compliant data sharing with networking and broadcast partners. To further spur innovation, Telenet wants to make it easier for analysts and AI engineers to find and access data. In this session you will learn how Telenet is using Snowflake, AWS and Raito to give data analysts and AI engineers access to data in a fast and secure way.

How about a workplace where generative AI accelerates every data management task, transforming routine into innovative experiences? A vision which can be in production for the AWS customers in just 60 days through a combination of Amazon Bedrock, which enables rapid development and deployment of AI applications, and Stratio Generative AI Data Fabric, which provides accurate output based on quality data with business meaning. Join us to learn how a combination of these products is empowering data managers and chief data officers to drive innovation and efficiency across their organizations.

Está no ar, o Data Hackers News !! Os assuntos mais quentes da semana, com as principais notícias da área de Dados, IA e Tecnologia, que você também encontra na nossa Newsletter semanal, agora no Podcast do Data Hackers !!

Aperte o play e ouça agora, o Data Hackers News dessa semana !

Para saber tudo sobre o que está acontecendo na área de dados, se inscreva na Newsletter semanal:

https://www.datahackers.news/

Conheça nossos comentaristas do Data Hackers News:

Monique Femme

Matérias/assuntos comentados:

CEO da AWS diz que desenvolvedores não codificarão mais em 2 anos;

Apple lança iPhone 16 com AI Generativa;

Canva diz que seus recursos de IA valem o aumento de preço de 300 por cento.

Baixe o relatório completo do State of Data Brazil e os highlights da pesquisa :

Demais canais do Data Hackers:

Site

Linkedin

Instagram

Tik Tok

You Tube

Master Amazon DynamoDB, the serverless NoSQL database designed for lightning-fast performance and scalability, with this definitive guide. You'll delve into its features, learn advanced concepts, and acquire practical skills to harness DynamoDB for modern application development. What this Book will help me do Understand AWS DynamoDB fundamentals for real-world applications. Model and optimize NoSQL databases with advanced techniques. Integrate DynamoDB into scalable, high-performance architectures. Utilize DynamoDB indexing, caching, and analytical features effectively. Plan and execute RDBMS to NoSQL data migrations successfully. Author(s) None Dhingra, an AWS DynamoDB solutions expert, and None Mackay, a seasoned NoSQL architect, bring their combined expertise straight from Amazon Web Services to guide you step-by-step in mastering DynamoDB. Combining comprehensive technical knowledge with approachable explanations, they empower readers to implement practical and efficient data strategies. Who is it for? This book is ideal for software developers and architects seeking to deepen their knowledge about AWS solutions like DynamoDB, engineering managers aiming to incorporate scalable NoSQL solutions into their projects, and data professionals transitioning from RDBMS towards a serverless data approach. Individuals with basic knowledge in cloud computing or database systems and those ready to advance in DynamoDB will find this book particularly beneficial.

When writing a fullstack application a common problem facing developers today is keeping data consistency between the frontend and backend. What the backend expects and what the frontend sends is not always the same. In this talk we’ll look at this problem and different approaches to solve it. We’ll take a look at how AWS Amplify approaches this problem and show a demo. We’ll also compare and contrast other TypeScript approaches with React and Next.

Date: 2024-08-22; Time: 10:00-12:00 EST. AWS Discovery Day - Introduction to Prompt Engineering.

Date: 2024-08-22; Time: 10:00-12:00 EST. AWS Discovery Day - Introduction to Prompt Engineering.

Date: 2024-08-22; Time: 10:00-12:00 EST. AWS Discovery Day - Introduction to Prompt Engineering.