Active metadata is not a type of metadata, it’s a way of using metadata to power systems. Active metadata is a critical feature of modern data architectures such as data fabric and data mesh. It makes things work such as data access management, data classification, and data quality management. Published at: https://www.eckerson.com/articles/active-metadata-the-critical-factor-for-mastering-modern-data-management

talk-data.com

talk-data.com

Topic

Fabric

Microsoft Fabric

323

tagged

Activity Trend

Top Events

The need for adaptable data management architecture has never been more pressing. Yet getting there seems to be more confusing than ever. The field is rampant with buzzwords: data lake, data lakehouse, data fabric, data mesh, data hub, data as a network. Making sense of the confusion begins with sorting out the buzzwords. Published at: https://www.eckerson.com/articles/data-architecture-complex-vs-complicated

- There are unique challenges associated with working with big data for finance (volume of data, disparate storage, variable sharing protocols etc...)

- Leveraging open source technologies, like Databricks' Delta Sharing, in combination with a flexible data management stack, can allow organizations to be more nimble in testing and deploying more strategies

- Live demonstration of Delta Sharing in combination with Nasdaq Data Fabric

Connect with us: Website: https://databricks.com Facebook: https://www.facebook.com/databricksinc Twitter: https://twitter.com/databricks LinkedIn: https://www.linkedin.com/company/data... Instagram: https://www.instagram.com/databricksinc/

Amgen has developed a suite of enterprise data & analytics platforms powered by modern, cloud native and open source technologies, that have played a vital role in building game changing analytics capabilities within the organization. Our platforms include a mature Data Lake with extensive self service capabilities, a Data Fabric with semantically connected data, a Data Marketplace for advanced cataloging, an intelligent Enterprise search among others to solve for a range of high value business problems. In this talk, we - Amgen and our partner ZS Associates - will share learning from our journey so far, best practices for building enterprise scale data & analytics platforms, and describe several business use cases and how we leverage modern technologies such as Databricks to enable our business teams. We will cover use cases related to Delta Lake, microservices, platform monitoring, fine grained security, and more.

Connect with us: Website: https://databricks.com Facebook: https://www.facebook.com/databricksinc Twitter: https://twitter.com/databricks LinkedIn: https://www.linkedin.com/company/data... Instagram: https://www.instagram.com/databricksinc/

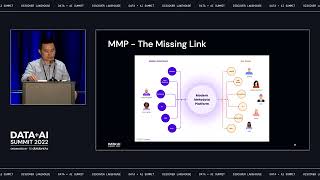

Recently there has been a lot of buzz in the data community on the topic of metadata management. It’s often discussed in the context of data discovery, data provenance, data governance, and data privacy. Even Gartner and Forrester have created the new Active Metadata Management and Enterprise Data Fabric categories to highlight the development in this area.

However, metadata management isn’t actually a new problem. It has just taken on a whole new dimension with the widespread adoption of the Modern Data Stack. What used to be a small, esoteric issue that only concerned the core data team has exploded into complex, organizational challenges that plagued companies large and small.

In this talk, we’ll explain how a Modern Metadata Platform (MMP) can help solve these new challenges and the key ingredients to building a scalable and extensible MMP.

Connect with us: Website: https://databricks.com Facebook: https://www.facebook.com/databricksinc Twitter: https://twitter.com/databricks LinkedIn: https://www.linkedin.com/company/data... Instagram: https://www.instagram.com/databricksinc/

Companies are investing in new solutions—such as data fabric, data access governance, and data observability—to keep pace with expanding business appetite for data. Pervasive use of metadata to solve data management problems means that metadata is itself a valuable data asset that we must proactively manage. Published at: https://www.eckerson.com/articles/metadata-is-data-so-manage-it-like-data

Nothing has galvanized the data community more in recent months than two new architectural paradigms for managing enterprise data. On one side there is the data fabric: a centralized architecture that runs a variety of analytic services and applications on top of a layer of universal connectivity. On the other side, is a data mesh: a decentralized architecture that empowers domain owners to manage their own data according to enterprise standards and make it available to peers as they desire.

Most data leaders are still trying to ferret out the implications of both approaches for their own data environments. One of those is Srinivasan Sankar, the enterprise data & analytics leader at Hanover Insurance Group. In this wide-ranging, back-and-forth discussion, Sankar and Eckerson explore the suitability of the data mesh for Hanover, how the Data Fabric might support a Data Mesh, whether a Data Mesh obviates the need for a data warehouse, and practical steps Hanover might to take implement a Data Mesh built on top of a Data Fabric.

Key Takeaways:

- What is the essence of a data mesh?

- How does it relate to the data fabric?

- Does the data mesh require a cultural transformation?

- Does the data mesh obviate the need for a data warehouse?

- How does data architecture as a service fit with the data mesh?

- What is the best way to roll out a data mesh?

- What's the role of a data catalog?

- What is a suitable roadmap for full implementation?

Nothing has galvanized the data community more in recent months than two new architectural paradigms for managing enterprise data. On one side there is the data fabric: a centralized architecture that runs a variety of analytic services and applications on top of a layer of universal connectivity. On the other side, is a data mesh: a decentralized architecture that empowers domain owners to manage their own data according to enterprise standards and make it available to peers as they desire.

Most data leaders are still trying to ferret out the implications of both approaches for their own data environments. One of those is Srinivasan Sankar, the enterprise data & analytics leader at Hanover Insurance Group. In this wide-ranging, back-and-forth discussion, Sankar and Eckerson explore the suitability of the data mesh for Hanover, how the Data Fabric might support a Data Mesh, whether a Data Mesh obviates the need for a data warehouse, and practical steps Hanover might to take implement a Data Mesh built on top of a Data Fabric.

Enterprise networks are large and rely on numerous connected endpoints to ensure smooth operational efficiency. However, they also present a challenge from a security perspective. The focus of this Blueprint is to demonstrate an early threat detection against the network fabric that is powered by Brocade that uses IBM® QRadar®. It also protects the same if a cyberattack or an internal threat by rouge user within the organization occurs. The publication also describes how to configure the syslog that is forwarding on Brocade SAN FOS. Finally, it explains how the forwarded audit events are used for detecting the threat and runs the custom action to mitigate the threat. The focus of this publication is to proactively start a cyber resilience workflow from IBM QRadar to block an IP address when multiple failed logins on Brocade switch are detected. As part of early threat detection, a sample rule that us used by IBM QRadar is shown. A Python script that also is used as a response to block the user's IP address in the switch is provided. Customers are encouraged to create control path or data path use cases, customized IBM QRadar rules, and custom response scripts that are best-suited to their environment. The use cases, QRadar rules, and Python script that are presented here are templates only and cannot be used as-is in an environment.

Engineer privacy into your systems with these hands-on techniques for data governance, legal compliance, and surviving security audits. In Data Privacy you will learn how to: Classify data based on privacy risk Build technical tools to catalog and discover data in your systems Share data with technical privacy controls to measure reidentification risk Implement technical privacy architectures to delete data Set up technical capabilities for data export to meet legal requirements like Data Subject Asset Requests (DSAR) Establish a technical privacy review process to help accelerate the legal Privacy Impact Assessment (PIA) Design a Consent Management Platform (CMP) to capture user consent Implement security tooling to help optimize privacy Build a holistic program that will get support and funding from the C-Level and board Data Privacy teaches you to design, develop, and measure the effectiveness of privacy programs. You’ll learn from author Nishant Bhajaria, an industry-renowned expert who has overseen privacy at Google, Netflix, and Uber. The terminology and legal requirements of privacy are all explained in clear, jargon-free language. The book’s constant awareness of business requirements will help you balance trade-offs, and ensure your user’s privacy can be improved without spiraling time and resource costs. About the Technology Data privacy is essential for any business. Data breaches, vague policies, and poor communication all erode a user’s trust in your applications. You may also face substantial legal consequences for failing to protect user data. Fortunately, there are clear practices and guidelines to keep your data secure and your users happy. About the Book Data Privacy: A runbook for engineers teaches you how to navigate the trade-offs between strict data security and real world business needs. In this practical book, you’ll learn how to design and implement privacy programs that are easy to scale and automate. There’s no bureaucratic process—just workable solutions and smart repurposing of existing security tools to help set and achieve your privacy goals. What's Inside Classify data based on privacy risk Set up capabilities for data export that meet legal requirements Establish a review process to accelerate privacy impact assessment Design a consent management platform to capture user consent About the Reader For engineers and business leaders looking to deliver better privacy. About the Author Nishant Bhajaria leads the Technical Privacy and Strategy teams for Uber. His previous roles include head of privacy engineering at Netflix, and data security and privacy at Google. Quotes I wish I had had this text in 2015 or 2016 at Netflix, and it would have been very helpful in 2008–2012 in a time of significant architectural evolution of our technology. - From the Foreword by Neil Hunt, Former CPO, Netflix Your guide to building privacy into the fabric of your organization. - John Tyler, JPMorgan Chase The most comprehensive resource you can find about privacy. - Diego Casella, InvestSuite Offers some valuable insights and direction for enterprises looking to improve the privacy of their data. - Peter White, Charles Sturt University

This IBM® Redpaper® publication describes best practices for deploying and using advanced Broadcom Fabric Operating System (FOS) features to identify, monitor, and protect Fibre Channel (FC) SANs from problematic devices and media behavior. Note that this paper primarily focuses on the FOS command options and features that are available since version 8.2 with some coverage of new features that were introduced in 9.0. This paper covers the following recent changes: SANnav Fabric Performance Impact Notification

Send us a text Want to be featured as a guest on Making Data Simple? Reach out to us at [[email protected]] and tell us why you should be next.

Abstract Hosted by Al Martin, VP, IBM Expert Services Delivery, Making Data Simple provides the latest thinking on big data, A.I., and the implications for the enterprise from a range of experts.

This week on Making Data Simple, we have Paul Zikopoulos. Paul is the VP of IBM Technology Sales – Skills Vitality & Enablement Global Markets. Paul is an award winning speaker and author and has been at IBM for 28 years.

Show Notes 3:40 – Is Skills Vitality not the prefect job? 5:08 – What’s been your journey at IBM? 8:29 – Hybrid Cloud Operation and Artificial Intelligence is this the right strategy? 21:13 – What is the new maturity curve? 23:53 – Define Data Fabric 26:53 – Why is the Challenger Seller book so important? 29:14 – What makes a great leader? Books Energy Bus Grit Challenger Sale Challenger Customer Effortless Experience Connect with the Team Producer Kate Brown - LinkedIn. Producer Steve Templeton - LinkedIn. Host Al Martin - LinkedIn and Twitter. Want to be featured as a guest on Making Data Simple? Reach out to us at [email protected] and tell us why you should be next. The Making Data Simple Podcast is hosted by Al Martin, WW VP Technical Sales, IBM, where we explore trending technologies, business innovation, and leadership ... while keeping it simple & fun.

This IBM Redpaper publication describes best practices for deploying and using advanced Cisco NX-OS features to identify, monitor, and protect Fibre Channel (FC) Storage Area Networks (SANs) from problematic devices and media behavior. The paper focuses on the IBM c-type SAN switches with firmware Cisco MDS NX-OS Release 8.4(2a).

Summary Gartner analysts are tasked with identifying promising companies each year that are making an impact in their respective categories. For businesses that are working in the data management and analytics space they recognized the efforts of Timbr.ai, Soda Data, Nexla, and Tada. In this episode the founders and leaders of each of these organizations share their perspective on the current state of the market, and the challenges facing businesses and data professionals today.

Announcements

Hello and welcome to the Data Engineering Podcast, the show about modern data management When you’re ready to build your next pipeline, or want to test out the projects you hear about on the show, you’ll need somewhere to deploy it, so check out our friends at Linode. With their managed Kubernetes platform it’s now even easier to deploy and scale your workflows, or try out the latest Helm charts from tools like Pulsar and Pachyderm. With simple pricing, fast networking, object storage, and worldwide data centers, you’ve got everything you need to run a bulletproof data platform. Go to dataengineeringpodcast.com/linode today and get a $100 credit to try out a Kubernetes cluster of your own. And don’t forget to thank them for their continued support of this show! Atlan is a collaborative workspace for data-driven teams, like Github for engineering or Figma for design teams. By acting as a virtual hub for data assets ranging from tables and dashboards to SQL snippets & code, Atlan enables teams to create a single source of truth for all their data assets, and collaborate across the modern data stack through deep integrations with tools like Snowflake, Slack, Looker and more. Go to dataengineeringpodcast.com/atlan today and sign up for a free trial. If you’re a data engineering podcast listener, you get credits worth $3000 on an annual subscription Have you ever had to develop ad-hoc solutions for security, privacy, and compliance requirements? Are you spending too much of your engineering resources on creating database views, configuring database permissions, and manually granting and revoking access to sensitive data? Satori has built the first DataSecOps Platform that streamlines data access and security. Satori’s DataSecOps automates data access controls, permissions, and masking for all major data platforms such as Snowflake, Redshift and SQL Server and even delegates data access management to business users, helping you move your organization from default data access to need-to-know access. Go to dataengineeringpodcast.com/satori today and get a $5K credit for your next Satori subscription. Your host is Tobias Macey and today I’m interviewing Saket Saurabh, Maarten Masschelein, Akshay Deshpande, and Dan Weitzner about the challenges facing data practitioners today and the solutions that are being brought to market for addressing them, as well as the work they are doing that got them recognized as "cool vendors" by Gartner.

Interview

Introduction How did you get involved in the area of data management? Can you each describe what you view as the biggest challenge facing data professionals? Who are you building your solutions for and what are the most common data management problems are you all solving? What are different components of Data Management and why is it so complex? What will simplify this process, if any? The report covers a lot of new data management terminology – data governance, data observability, data fabric, data mesh, DataOps, MLOps, AIOps – what does this all mean and why is it important for data engineers? How has the data management space changed in recent times? Describe the current data management landscape and any key developments. From your perspective, what are the biggest challenges in the data management space today? What modern data management features are lacking in existing databases? Gartner imagines a future where data and analytics leaders need to be prepared to rely on data manage

Send us a text Want to be featured as a guest on Making Data Simple? Reach out to us at [[email protected]] and tell us why you should be next.

Abstract Hosted by Al Martin, VP, IBM Expert Services Delivery, Making Data Simple provides the latest thinking on big data, A.I., and the implications for the enterprise from a range of experts.

This week on Making Data Simple, we have Trent Gray-Donald Distinguished Engineer, IBM Data and AI, Dakshi Agrawal IBM Fellow and CTO, IBM AI. Trent Gray-Donald spend his first 16 years on manage language runtime, then moved over to Data and AI, and then Cloud Pak for Data. Dakshi Agrawal joined IBM right after his Phd in IBM Research, then Dakshi moved into software development, and in the 6 years in AI. Show Notes .15 - 5:23 - Repeat of introductions from Part 1 5:50 – What is AI Anywhere? 9:09 – Does it make our development more difficult? 11:22 – Does data virtualization work? 15:31 - How do we get started with AI? 17:41 – Customer success stories Connect with the Team Producer Kate Brown - LinkedIn. Producer Steve Templeton - LinkedIn. Host Al Martin - LinkedIn and Twitter. Want to be featured as a guest on Making Data Simple? Reach out to us at [email protected] and tell us why you should be next. The Making Data Simple Podcast is hosted by Al Martin, WW VP Technical Sales, IBM, where we explore trending technologies, business innovation, and leadership ... while keeping it simple & fun.

Send us a text Want to be featured as a guest on Making Data Simple? Reach out to us at [[email protected]] and tell us why you should be next.

Abstract Hosted by Al Martin, VP, IBM Expert Services Delivery, Making Data Simple provides the latest thinking on big data, A.I., and the implications for the enterprise from a range of experts.

This week on Making Data Simple, we have Trent Gray-Donald Distinguished Engineer, IBM Data and AI, Dakshi Agrawal IBM Fellow and CTO, IBM AI. Trent Gray-Donald spend his first 16 years on manage language runtime, then moved over to Data and AI, and then Cloud Pak for Data. Dakshi Agrawal joined IBM right after his Phd in IBM Research, then Dakshi moved into software development, and in the 6 years in AI. Show Notes 5:24 – Why is IBM Watson important? 10:28 – How does data fabric fit in? 15:25 – How would you describe the customer journey around data fabric? 17:10 – Is the ultimate destination AI? Connect with the Team Producer Kate Brown - LinkedIn. Producer Steve Templeton - LinkedIn. Host Al Martin - LinkedIn and Twitter. Want to be featured as a guest on Making Data Simple? Reach out to us at [email protected] and tell us why you should be next. The Making Data Simple Podcast is hosted by Al Martin, WW VP Technical Sales, IBM, where we explore trending technologies, business innovation, and leadership ... while keeping it simple & fun.

Data fabric is a hot concept in data management today. By encompassing the data ecosystem your company already has in place, this architectural design pattern provides your staff with one reliable place to go for data. In this report, author Alice LaPlante shows CIOs, CDOs, and CAOs how data fabric enables their users to spend more time analyzing than wrangling data. The best way to thrive during this intense period of digital transformation is through data. But after roaring through 2019, progress on getting the most out of data investments has lost steam. Only 38% of companies now say they've created a data-driven organization. This report describes how a data fabric can help you reach the all-important goal of data democratization. Learn how data fabric handles data prep, data delivery, and serves as a data catalog Use data fabric to handle data variety, a top challenge for many organizations Learn how data fabric spans any environment to support data for users and use cases from any source Examine data fabric's capabilities including data and metadata management, data quality, integration, analytics, visualization, and governance Get five pieces of advice for getting started with data fabric

Businesses manage data to understand the connections between their customers, products or services, features, markets, and anything else that affects the business. With a knowledge graph, you can represent these connections directly to analyze and understand the compound relationships that drive business innovation. This report introduces knowledge graphs and examines their ability to weave business data and business knowledge into an architecture known as a data fabric . Authors Sean Martin, Ben Szekely, and Dean Allemang explain graph data and knowledge representation and demonstrate the value of combining these two things in a knowledge graph. You'll learn how knowledge graphs enable an enterprise-scale data fabric and discover what to expect in the near future as this technology evolves. This report also examines the evolution of databases, data integration, and data analysis to help you understand how the industry reached this point. Learn how graph technology enables you to represent knowledge and link it to data Understand how graph technology emphasizes the connected nature of data Use a data fabric to support other data-intensive tasks, including machine learning and data analysis Examine how a data fabric supports intense data-driven business initiatives more robustly than a simple database or data architecture

In this paper, we outline some IBM® Spectrum Virtualize HyperSwap® SAN implementation and design best practices for optimum resiliency of the SAN Volume Controller cluster. It provides IBM Spectrum® Virtualize HyperSwap and Stretched Cluster configuration details. Note: In this book, for brevity, we use HyperSwap to refer to both HyperSwap and Stretched Cluster. The documentation there details the minimum requirements. However, it does not describe the design of the storage area network (SAN) in detail, nor does it describe the recommended way to implement those requirements on a SAN. In this IBM Redpaper publication, we outline some of the best practices for SAN design and implementation that leads to optimum resiliency of the SAN Volume Controller (SVC) cluster, and we explain why each recommendation is made. This paper is SAN vendor-neutral wherever possible. Any mention of a specific SAN switch vendor, or terms used by a specific switch vendor, is made only where relevant to a specific context, and does not imply an endorsement of a specific switch vendor. Note: Some of the figures in this document might not depict redundant fabrics or storage configurations. This was done for simplicity, and it should be assumed that any recommendations made for fabric design assume that there are two redundant fabrics.

The IBM HyperSwap® high availability (HA) function allows business continuity in a hardware failure, power failure, connectivity failure, or disasters, such as fire or flooding. It is available on the IBM SAN Volume Controller and IBM FlashSystem products. This IBM Redbooks publication covers the preferred practices for implementing Cisco VersaStack with IBM HyperSwap. The following are some of the topics covered in this book: Cisco Application Centric Infrastructure to showcase Cisco's ACI with Nexus 9Ks Cisco Fabric Interconnects and Unified Computing System (UCS) management capabilities Cisco Multilayer Director Switch (MDS) to showcase fabric channel connectivity Overall IBM HyperSwap solution architecture Differences between HyperSwap and Metro Mirroring, Volume Mirroring, and Stretch Cluster Multisite IBM SAN Volume Controller (SVC) deployment to showcase HyperSwap configuration and capabilities This book is intended for pre-sales and post-sales technical support professionals and storage administrators who are tasked with deploying a VersaStack solution with IBM HyperSwap.