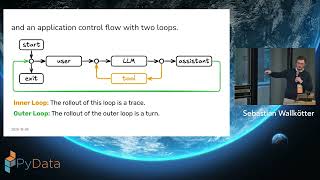

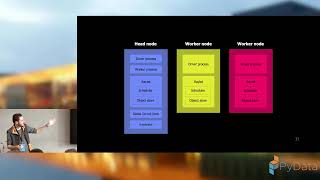

AI agents need the right context at the right time to do a good job. Too much input increases cost and harms accuracy, while too little causes instability and hallucinations. Context Engineering with DSPy introduces a practical, evaluation-driven way to design AI systems that remain reliable, predictable, and easy to maintain as they grow.

AI engineer and educator Mike Taylor explains DSPy in a clear, approachable style, showing how its modular structure, portable programs, and built-in optimizers help teams move beyond guesswork. Through real examples and step-by-step guidance, you'll learn how DSPy's signatures, modules, datasets, and metrics work together to solve context engineering problems that evolve as models change and workloads scale.

This book supports AI engineers, data scientists, machine learning practitioners, and software developers building AI agents, retrieval-augmented generation (RAG) systems, and multistep reasoning workflows that hold up in production.

- Understand the core ideas behind context engineering and why they matter
- Structure LLM pipelines with DSPy's maintainable, reusable components
- Apply evaluation-driven optimizers like GEPA and MIPROv2 for measurable improvements
- Create reproducible RAG and agentic workflows with clear metrics
- Develop AI systems that stay robust across providers, model updates, and real-world constraints