As we venture into the future of data engineering, streaming and serverless technologies take center stage. In this fun, hands-on, in-depth, and interactive session, you can learn the essence of future data engineering today.

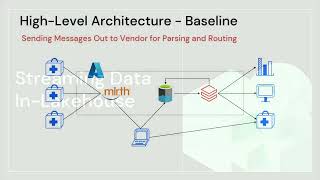

We will tackle the challenge of processing streaming events continuously generated by hundreds of sensors in the conference room and sent from a serverless web app (bring your phone and be part of the demo). The focus is on the system architecture, the products involved, and the solution they provide. Which Databricks products, capabilities, and settings will be most useful for our scenario? What does streaming really mean, and why does it make our lives easier? What are the exact benefits of serverless, and how "serverless" is a particular solution?

Leveraging the power of the Databricks Lakehouse Platform, I will demonstrate how to create a streaming data pipeline with Delta Live Tables ingesting data from AWS Kinesis. Further, I’ll use advanced Databricks Workflows triggers for efficient orchestration and real-time alerts feeding into a real-time dashboard. And since I don’t want you to leave empty-handed, I will use Delta Sharing to share the results of the demo we build with every participant in the room. Join me in this hands-on exploration of cutting-edge data engineering techniques and witness the future in action.

Talk by: Frank Munz
