How the Texas Rangers Revolutionized Baseball Analytics with a Modern Data Lakehouse

Don't miss this session, where we demonstrate how we at the Texas Rangers baseball team organized our predictive models using MLflow and the MLflow Model Registry inside Databricks. We started using Databricks as a simple way to centralize our development in the cloud. This reduced siloed development on our team and allowed us to leverage the benefits of distributed cloud computing.

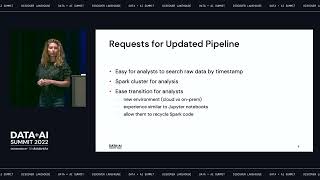

But we quickly found that Databricks was also a perfect solution to another problem in our data engineering stack. Specifically, cost, complexity, and scalability issues had hampered our data architecture development for years, and we decided to modernize our stack by migrating to a lakehouse. With ad hoc analytics, ETL operations, and MLOps all living within the Databricks Lakehouse, development at scale has never been easier for our team.

Going forward, we hope to fully eliminate development silos and remove the disconnect between our analytics and data engineering teams. From computer vision, pose analytics, and player tracking to pitch design, base-stealing likelihood, and more, come see how the Texas Rangers are using innovative cloud technologies to create action-driven reports from the current sea of big data.

Talk by: Alexander Booth and Oliver Dykstra
