Optimizing Incremental Ingestion in the Context of a Lakehouse

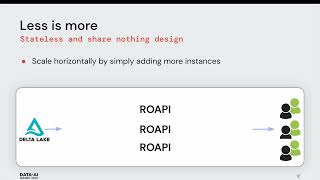

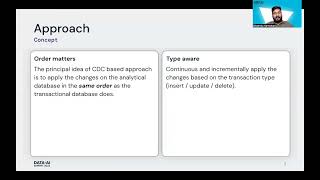

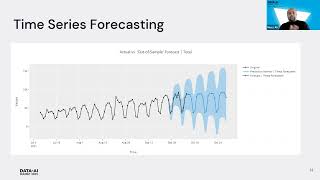

Incremental ingestion of data is often trickier than one would assume, particularly when it comes to maintaining data consistency: for example, specific challenges arise depending on whether the data is ingested in a streaming or a batched fashion. In this session we want to share the real-life challenges encountered when setting up incremental ingestion pipeline in the context of a Lakehouse architecture.

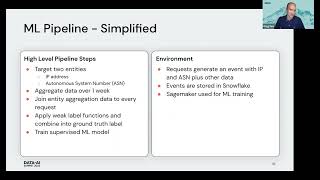

In this session we outline how we used the recently introduced Databricks features, such as Autoloader and Change Data Feed, in addition to some more mature features, such as Spark Structured Streaming and Trigger Once functionality. These functionalities allowed us to transform batch processes into a “streaming” setup without having the need for the cluster to always run. This setup – which we are keen to share to the community - does not require reloading large amounts of data, and therefore represents a computationally, and consequently economically, cheaper solution.

In our presentation we dive deeper into each of the different aspects of the setup, with some extra focus on some essential Autoloader functionalities, such as schema inference, recovery mechanisms and file discovery modes.

Connect with us: Website: https://databricks.com Facebook: https://www.facebook.com/databricksinc Twitter: https://twitter.com/databricks LinkedIn: https://www.linkedin.com/company/data... Instagram: https://www.instagram.com/databricksinc/