Summary

All of the advancements in our technology are based on the principle of abstraction. Abstractions are valuable until they break down, which is inevitable. In this episode the host Tobias Macey shares his reflections on recent experiences where the abstractions leaked, along with some observations on how to deal with that situation in a data platform architecture.

Announcements

Hello and welcome to the Data Engineering Podcast, the show about modern data management.

RudderStack helps you build a customer data platform on your warehouse or data lake. Instead of trapping data in a black box, they enable you to easily collect customer data from the entire stack and build an identity graph on your warehouse, giving you full visibility and control. Their SDKs make event streaming from any app or website easy, and their extensive library of integrations enables you to automatically send data to hundreds of downstream tools. Sign up free at dataengineeringpodcast.com/rudderstack

Your host is Tobias Macey, and today I'm sharing some thoughts and observations about abstractions and impedance mismatches from my experience building a data lakehouse with an ELT workflow.

Interview

Introduction

impact of community tech debt

hive metastore

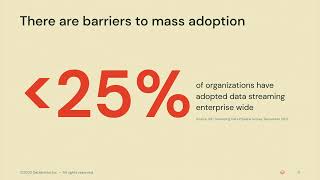

new work being done but not widely adopted

tensions between automation and correctness

data type mapping

integer types

complex types

naming things (keys/column names from APIs to databases)

disaggregated databases - pros and cons

flexibility and cost control

not as much tooling invested vs. Snowflake/BigQuery/Redshift

data modeling

dimensional modeling vs. answering today's questions

What are the most interesting, unexpected, or challenging lessons that you have learned while working on your data platform?

When is ELT the wrong choice?

What do you have planned for the future of your data platform?

Contact Info

LinkedIn

Parting Question

From your perspective, what is the biggest gap in the tooling or technology for data management today?

Closing Announcements

Thank you for listening! Don't forget to check out our other shows. Podcast.init covers the Python language, its community, and the innovative ways it is being used. The Machine Learning Podcast helps you go from idea to production with machine learning.

Visit the site to subscribe to the show, sign up for the mailing list, and read the show notes.

If you've learned something or tried out a project from the show then tell us about it! Email [email protected] with your story.

To help other people find the show, please leave a review on Apple Podcasts and tell your friends and co-workers.

Links

dbt

Airbyte

Podcast Episode

Dagster

Podcast Episode

Trino

Podcast Episode

ELT

Data Lakehouse

Snowflake

BigQuery

Redshift

Technical Debt

Hive Metastore

AWS Glue

The intro and outro music is from The Hug by The Freak Fandango Orchestra / CC BY-SA

Sponsored By:

RudderStack:

RudderStack provides all your customer data pipelines in one platform. You can collect, transform, and route data across your entire stack with its event streaming, ETL, and reverse ETL pipelines.

RudderStack’s warehouse-first approach means it does not store sensitive information, and it allows you to leverage your existing data warehouse/data lake infrastructure to build a single source of truth for every team.

RudderStack also supports real-time use cases. You can implement RudderStack SDKs once, then automatically send events to your warehouse and 150+ business tools, and you’ll never have to worry about API changes again.

Visit dataengineeringpodcast.com/rudderstack to sign up for free today, and snag a free T-Shirt just for being a Data Engineering Podcast listener.

Support Data Engineering Podcast