LLMs seem like a hot solution now, until you try deploying one. In this episode, Andriy Burkov, machine learning expert and author of The Hundred-Page Machine Learning Book, joins us for a grounded, sometimes blunt conversation about why many LLM applications fail. We talk about sentiment analysis, difficulty with taxonomy, agents getting tripped up on formatting, and why MCP might not solve your problems. If you're tired of the hype and want to understand the real state of applied LLMs, this episode delivers.

What You'll Learn:
• What is often misunderstood about LLMs
• The reliability of sentiment analysis
• How can we make agents more resilient?

📚 Check out Andriy's books on machine learning and LLMs:
• The Hundred-Page Machine Learning Book
• The Hundred-Page Language Models Book: hands-on with PyTorch

🤝 Follow Andriy on LinkedIn!

Register for free to be part of the next live session: https://bit.ly/3XB3A8b

Follow us on Socials: LinkedIn, YouTube, Instagram (Mavens of Data), Instagram (Maven Analytics), TikTok, Facebook, Medium, X/Twitter

talk-data.com

Topic: Analytics (4552 tagged)

How will the combination of data, AI and insights/analytics shape the future of finance? What does this mean for how organisations interact, given regulations like PSD2, and what opportunities does this yield for the custodians of data?

In this episode of Hub & Spoken, host Jason Foster, CEO & Founder of Cynozure, is joined by James Lupton, Chief Technology Officer at Cynozure, to explore the findings from What Matters Most for Insurers Now, a new report shaped by insights from 35 senior leaders across the insurance industry. While the report focuses on insurers, its lessons resonate far more widely.

Jason and James discuss how organisations across sectors are wrestling with the same issues: outdated and overly customised legacy systems that hold back innovation, a persistent gap between the ambition to build a data-driven culture and the actions taken to achieve it, and the importance of leadership support that goes beyond lip service to meaningful investment and behaviour change. They also consider the next frontier: AI agents. With many firms experimenting but few ready to deploy, Jason and James unpack what true readiness looks like and why success requires more than just technology. This episode offers practical reflections for leaders in complex, regulated industries who are striving to "fix forward" and unlock the real value of data and AI.

Download What Matters Most for Insurers Now here

Cynozure is a leading data, analytics and AI company that helps organisations to reach their data potential. It works with clients on data and AI strategy, data management, data architecture and engineering, analytics and AI, data culture and literacy, and data leadership. The company was named one of The Sunday Times' fastest-growing private companies in both 2022 and 2023 and recognised as The Best Place to Work in Data by DataIQ in 2023 and 2024. Cynozure is a certified B Corporation.

In this episode, I talk with Ilya Preston, co-founder and CEO of PAXAFE, a logistics orchestration and decision intelligence platform for temperature-controlled supply chains (aka “cold chain”). Ilya explains how PAXAFE helps companies shipping sensitive products, like pharmaceuticals, vaccines, food, and produce, by delivering end-to-end visibility and actionable insights powered by analytics and AI that reduce product loss, improve efficiency, and support smarter real-time decisions.

Ilya shares the challenges of building a configurable system that works for transportation, planning, and quality teams across industries. We also discuss their product development philosophy, team structure, and use of AI for document processing, diagnostics, and workflow automation.

Highlights/ Skip to:

• Intro to PAXAFE (2:13)
• How PAXAFE brings tons of cold chain data together in one user experience (2:33)
• Innovation in cold chain analytics is up, but so is cold chain product loss (4:42)
• The product challenge of getting sufficient telemetry data at the right level of specificity to derive useful analytical insights (7:14)
• Why and how PAXAFE pivoted away from providing IoT hardware to collect telemetry (10:23)
• How PAXAFE supports complex customer workflows, cold chain logistics, and complex supply chains (13:57)
• Who the end users of PAXAFE are, and how the product team designs for these users (20:00)
• Pharma loses around $40 billion a year relying on ‘Bob’s intuition’ in the warehouse. How PAXAFE balances institutional user knowledge with the cold hard facts of analytics (42:43)
• Lessons learned when Ilya’s team fell in love with its own product and didn’t listen to the market (23:57)

Quotes from Today’s Episode

"Our initial vision for what PAXAFE would become was 99.9% spot on. The only thing we misjudged was market readiness: we built a product that was a few years ahead of its time." -Ilya

"As an industry, pharma is losing $40 billion worth of product every year because decisions are still based on warehouse intuition about what works and what doesn’t. In production, the problem is even more extreme, with roughly $800 billion lost annually due to temperature issues and excursions." -Ilya

"With our own design, our initial hypothesis and vision for what PAXAFE could be really shaped where we are today. Early on, we had a strong perspective on what our customers needed, and along the way, we fell in love with our own product and design." -Ilya

"We spent months perfecting risk scores… only to hear from customers, ‘I don’t care about a 71 versus a 62, just tell me what to do.’ That single insight changed everything." -Ilya

"If you’re not talking to customers or building a product that supports those conversations, you’re literally wasting time. In the zero-to-product-market-fit phase, nothing else matters; you need to focus entirely on understanding your customers and iterating your product around their needs." -Ilya

"Don’t build anything on day one, probably not on day two, three, or four either. Go out and talk to customers. Focus not on what they think they need, but on their real pain points. Understand their existing workflows and the constraints they face while trying to solve those problems." -Ilya

Links

PAXAFE: https://www.paxafe.com/
LinkedIn for Ilya Preston: https://www.linkedin.com/in/ilyapreston/
LinkedIn for company: https://www.linkedin.com/company/paxafe/

AI, data, numbers, without uploads. Hash, mask, and redact PII, then run data analytics locally for time-saving and privacy. In this episode, we build a No-Upload AI Analyst that keeps your PII safe: HMAC SHA-256 hashing, masking, and redaction using policy presets and client-side transforms.

We’ll:
• Reframe the problem (insights > risk)
• Set four hard constraints (no uploads, local preferred, policy presets, human-readable audit)
• Use rules-first privacy + schema semantics
• Walk the 5-step workflow (paste headers → pick preset → set secret → transform → analyze)
• Show real-world cases (HIPAA/HITECH-aware analytics, FERPA contexts, product analytics)
• Share a checklist + quiz + local Streamlit approach

Perfect for data teams in healthcare, finance, education, and privacy-sensitive orgs.

Key takeaways
• Stop uploading customer data. Transform it client-side first.
• Use HMAC hashing to keep joins without exposing raw emails/IDs.
• Mask for human-readable UI; redact when you don’t need the field.
• Ship a data-handling report with every analysis.
• Run the app locally for maximum privacy.

Affiliate note: I record with Riverside (affiliate) and host on RSS.com (affiliate). Links in show notes.

Links
• Blog version (free): https://mukundansankar.substack.com/p/the-no-upload-ai-analyst-v4-secure
• Join the Discussion (comments hub): https://mukundansankar.substack.com/notes

Tools I use for my Podcast and Affiliate Partners
• Recording Partner: Riverside → Sign up here (affiliate)
• Host Your Podcast: RSS.com (affiliate)
• Research Tools: Sider.ai (affiliate)
• Sourcetable AI: Join Here (affiliate)

🔗 Connect with Me:
• Free Email Newsletter
• Website: Data & AI with Mukundan
• GitHub: https://github.com/mukund14
• Twitter/X: @sankarmukund475
• LinkedIn: Mukundan Sankar
• YouTube: Subscribe
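The hash, mask, and redact steps named in this episode can be illustrated with a minimal sketch. It assumes a per-column policy dictionary and a secret that stays on the local machine. The function names (`pseudonymize`, `mask_email`, `transform`) and the policy format are hypothetical and chosen only for illustration; they are not taken from the episode's app. Only Python's standard `hmac` and `hashlib` modules are used.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret: bytes) -> str:
    """Return a keyed HMAC-SHA-256 hex digest of a normalized identifier."""
    normalized = value.strip().lower()  # so "Ada@Example.com " and "ada@example.com" match
    return hmac.new(secret, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_email(email: str) -> str:
    """Keep the first character and the domain; hide the rest of the local part."""
    local, sep, domain = email.partition("@")
    if not sep or not local:
        return "***"  # not a recognizable email, so hide it entirely
    return f"{local[0]}***@{domain}"


def transform(rows, secret, policy):
    """Apply a per-column action: 'hash', 'mask', or 'keep'. Anything else is redacted."""
    cleaned = []
    for row in rows:
        out = {}
        for column, value in row.items():
            action = policy.get(column, "redact")  # columns without a policy are dropped
            if action == "hash":
                out[column] = pseudonymize(str(value), secret)
            elif action == "mask":
                out[column] = mask_email(str(value))
            elif action == "keep":
                out[column] = value
        cleaned.append(out)
    return cleaned


# Hypothetical example data; the secret is a placeholder and would be set locally.
secret = b"replace-with-a-locally-stored-secret"
policy = {"email": "hash", "plan": "keep"}  # 'name' has no entry, so it is redacted
users = [{"email": "Ada@Example.com", "name": "Ada", "plan": "pro"}]
safe_users = transform(users, secret, policy)
```

Because the same secret and normalization are applied to every table, the hashed `email` values still line up across datasets, which is what keeps joins possible without exposing the raw address. Changing the secret changes every token, so in this sketch the secret has to be kept stable for as long as the joins need to work.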

Here are 5 exciting and unique data analyst projects that will build your skills and impress hiring managers! These range from beginner to advanced and are designed to enhance your data storytelling abilities.

✨ Try Julius today at https://landadatajob.com/Julius-YT
Where I Go To Find Datasets (as a data analyst) 👉 https://youtu.be/DHfuvMyBofE?si=ABsdUfzgG7Nsbl89
💌 Join 10k+ aspiring data analysts & get my tips in your inbox weekly 👉 https://www.datacareerjumpstart.com/newsletter
🆘 Feeling stuck in your data journey? Come to my next free "How to Land Your First Data Job" training 👉 https://www.datacareerjumpstart.com/training
👩‍💻 Want to land a data job in less than 90 days? 👉 https://www.datacareerjumpstart.com/daa
👔 Ace The Interview with Confidence 👉 https://www.datacareerjumpstart.com/interviewsimulator

⌚ TIMESTAMPS
00:00 - Introduction
00:24 - Project 1: Stock Price Analysis
03:46 - Project 2: Real Estate Data Analysis (SQL)
07:52 - Project 3: Personal Finance Dashboard (Tableau or Power BI)
11:20 - Project 4: Pokemon Analysis (Python)
14:16 - Project 5: Football Data Analysis (any tool)

🔗 CONNECT WITH AVERY
🎥 YouTube Channel: https://www.youtube.com/@averysmith
🤝 LinkedIn: https://www.linkedin.com/in/averyjsmith/
📸 Instagram: https://instagram.com/datacareerjumpstart
🎵 TikTok: https://www.tiktok.com/@verydata
💻 Website: https://www.datacareerjumpstart.com/

Mentioned in this episode:

Join the last cohort of 2025! The LAST cohort of The Data Analytics Accelerator for 2025 kicks off on Monday, December 8th and enrollment is officially open!

To celebrate the end of the year, we’re running a special End-of-Year Sale, where you’ll get: ✅ A discount on your enrollment 🎁 6 bonus gifts, including job listings, interview prep, AI tools + more

If your goal is to land a data job in 2026, this is your chance to get ahead of the competition and start strong.

👉 Join the December Cohort & Claim Your Bonuses: https://www.datacareerjumpstart.com/daa

What is "process" in analytics? On the one hand, it can be seen as a detailed sequence of minutiae by which anything that needs to be repeated in the world of analytics gets carried out in a structured and consistent manner. On the other hand, that's the sort of definition that strikes terror and rage in the hearts of many souls. Some of those souls are co-hosts of this podcast. Even the more process-oriented co-hosts bristle at such a definition (but for different reasons). So, what ARE some of the core processes in analytics? And, what is the appropriate balance between establishing a prescriptive structure and leaving sufficient flexibility to allow human judgment to adapt a process to fit specific situations? Those are the sorts of questions tackled on this episode, which was released on time with all of its underlying component parts thanks to a reasonably robust…process. For complete show notes, including links to items mentioned in this episode and a transcript of the show, visit the show page.

Learn how to harness the power of OpenSearch effectively with 'The Definitive Guide to OpenSearch'. This book explores installation, configuration, query building, and visualization, guiding readers through practical use cases and real-world implementations. Whether you're building search experiences or analyzing data patterns, this guide equips you thoroughly.

What this Book will help me do
• Understand core OpenSearch principles, architecture, and the mechanics of its search and analytics capabilities.
• Learn how to perform data ingestion, execute advanced queries, and produce insightful visualizations on OpenSearch Dashboards.
• Implement scaling strategies and optimum configurations for high-performance OpenSearch clusters.
• Explore real-world case studies that demonstrate OpenSearch applications in diverse industries.
• Gain hands-on experience through practical exercises and tutorials for mastering OpenSearch functionality.

Author(s)
Jon Handler, Soujanya Konka, and Prashant Agrawal, celebrated experts in search technologies and big data analysis, bring their years of experience at AWS and other domains to this book. Their collective expertise ensures that readers receive both core theoretical knowledge and practical applications to implement directly.

Who is it for?
This book is aimed at developers, data professionals, engineers, and systems operators who work with search systems or analytics platforms. It is especially suitable for individuals in roles handling large-scale data, who want to improve their skills or deploy OpenSearch in production environments. Early learners and seasoned experts alike will find valuable insights.

Summary

In this episode of the Data Engineering Podcast, Serge Gershkovich, head of product at SqlDBM, talks about the socio-technical aspects of data modeling. Serge shares his background in data modeling and highlights its importance as a collaborative process between business stakeholders and data teams. He debunks common misconceptions that data modeling is optional or secondary, emphasizing its crucial role in ensuring alignment between business requirements and data structures. The conversation covers challenges in complex environments, the impact of technical decisions on data strategy, and the evolving role of AI in data management. Serge stresses the need for business stakeholders' involvement in data initiatives and a systematic approach to data modeling, warning against relying solely on technical expertise without considering business alignment.

Announcements
• Hello and welcome to the Data Engineering Podcast, the show about modern data management
• Data migrations are brutal. They drag on for months, sometimes years, burning through resources and crushing team morale. Datafold's AI-powered Migration Agent changes all that. Their unique combination of AI code translation and automated data validation has helped companies complete migrations up to 10 times faster than manual approaches. And they're so confident in their solution, they'll actually guarantee your timeline in writing. Ready to turn your year-long migration into weeks? Visit dataengineeringpodcast.com/datafold today for the details.
• Enterprises today face an enormous challenge: they’re investing billions into Snowflake and Databricks, but without strong foundations, those investments risk becoming fragmented, expensive, and hard to govern. And that’s especially evident in large, complex enterprise data environments. That’s why companies like DirecTV and Pfizer rely on SqlDBM. Data modeling may be one of the most traditional practices in IT, but it remains the backbone of enterprise data strategy. In today’s cloud era, that backbone needs a modern approach built natively for the cloud, with direct connections to the very platforms driving your business forward. Without strong modeling, data management becomes chaotic, analytics lose trust, and AI initiatives fail to scale. SqlDBM ensures enterprises don’t just move to the cloud; they maximize their ROI by creating governed, scalable, and business-aligned data environments. If global enterprises are using SqlDBM to tackle the biggest challenges in data management, analytics, and AI, isn’t it worth exploring what it can do for yours? Visit dataengineeringpodcast.com/sqldbm to learn more.
• Your host is Tobias Macey and today I'm interviewing Serge Gershkovich about how and why data modeling is a sociotechnical endeavor

Interview
• Introduction
• How did you get involved in the area of data management?
• Can you start by describing the activities that you think of when someone says the term "data modeling"?
• What are the main groupings of incomplete or inaccurate definitions that you typically encounter in conversation on the topic?
• How do those conceptions of the problem lead to challenges and bottlenecks in execution?
• Data modeling is often associated with data warehouse design, but it also extends to source systems and unstructured/semi-structured assets. How does the inclusion of other data localities help in the overall success of a data/domain modeling effort?
• Another aspect of data modeling that often consumes a substantial amount of debate is which pattern to adhere to (star/snowflake, data vault, one big table, anchor modeling, etc.). What are some of the ways that you have found effective to remove that as a stumbling block when first developing an organizational domain representation?
• While the overall purpose of data modeling is to provide a digital representation of the business processes, there are inevitable technical decisions to be made. What are the most significant ways that the underlying technical systems can help or hinder the goals of building a digital twin of the business?
• What impact (positive and negative) are you seeing from the introduction of LLMs into the workflow of data modeling?
• How does tool use (e.g. MCP connection to warehouse/lakehouse) help when developing the transformation logic for achieving a given domain representation?
• What are the most interesting, innovative, or unexpected ways that you have seen organizations address the data modeling lifecycle?
• What are the most interesting, unexpected, or challenging lessons that you have learned while working with organizations implementing a data modeling effort?
• What are the overall trends in the ecosystem that you are monitoring related to data modeling practices?

Contact Info
• LinkedIn

Parting Question
• From your perspective, what is the biggest gap in the tooling or technology for data management today?

Links
• SqlDBM
• SAP
• Joe Reis
• ERD == Entity Relation Diagram
• Master Data Management
• dbt
• Data Contracts
• Data Modeling With Snowflake book by Serge (affiliate link)
• Type 2 Dimension
• Data Vault
• Star Schema
• Anchor Modeling
• Ralph Kimball
• Bill Inmon
• Sixth Normal Form
• MCP == Model Context Protocol

The intro and outro music is from The Hug by The Freak Fandango Orchestra / CC BY-SA

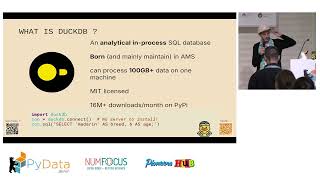

Most Python developers reach for Pandas or Polars when working with tabular data—but DuckDB offers a powerful alternative that’s more than just another DataFrame library. In this tutorial, you’ll learn how to use DuckDB as an in-process analytical database: building data pipelines, caching datasets, and running complex queries with SQL—all without leaving Python. We’ll cover common use cases like ETL, lightweight data orchestration, and interactive analytics workflows. You’ll leave with a solid mental model for using DuckDB effectively as the “SQLite for analytics.”

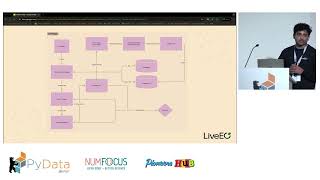

I will share how our team built an end-to-end system to transform raw satellite imagery into analysis-ready datasets for use cases like vegetation monitoring, deforestation detection, and identifying third-party activity. We streamlined the entire pipeline from automated acquisition and cloud storage to preprocessing that ensures spatial, spectral, and temporal consistency. By leveraging Prefect for orchestration, Anyscale Ray for scalable processing, and the open source STAC standard for metadata indexing, we reduced processing times from days to near real-time. We addressed challenges like inconsistent metadata and diverse sensor types, building a flexible system capable of supporting large-scale geospatial analytics and AI workloads.

Mark, Cris, and Marisa talk about the increasingly shaky state of the economy after reviewing the week’s data. They preview next week’s jobs report and the likelihood of further downward revisions and negative payroll numbers. The trio then ponders some dark scenarios regarding Fed independence or lack thereof, and what that could mean for the growth and inflation outlooks. Hosts: Mark Zandi – Chief Economist, Moody’s Analytics, Cris deRitis – Deputy Chief Economist, Moody’s Analytics, and Marisa DiNatale – Senior Director - Head of Global Forecasting, Moody’s Analytics Follow Mark Zandi on 'X' and BlueSky @MarkZandi, Cris deRitis on LinkedIn, and Marisa DiNatale on LinkedIn

Questions or Comments, please email us at [email protected]. We would love to hear from you. To stay informed and follow the insights of Moody's Analytics economists, visit Economic View.

Hosted by Simplecast, an AdsWizz company. See pcm.adswizz.com for information about our collection and use of personal data for advertising.

In this episode, we sit down with Joe Dery, Vice President of Customer Success at Aera Technology, to explore a concept that's shifting how organizations think about analytics: Decision Intelligence. We unpack what decision intelligence really means (hint: it's not just another buzzword), why some data scientists still resist it, and how this discipline helps surface causal insights, not just correlations. Joe brings firsthand use cases from the field, showing how DI is helping organizations not only analyze what's happening but also decide what to do about it. Whether you're a data scientist, product manager, or exec who wants to move from dashboards to decisions, this one's for you.

What You'll Learn:
• What decision intelligence is, and what it isn't
• Why some in the data space push back against it
• How DI gets you closer to causality (not just correlation)
• Real-world use cases where DI created business impact
• Why decision metadata is the secret sauce for long-term success

Follow Joe on LinkedIn!

Register for free to be part of the next live session: https://bit.ly/3XB3A8b

Follow us on Socials: LinkedIn, YouTube, Instagram (Mavens of Data), Instagram (Maven Analytics), TikTok, Facebook, Medium, X/Twitter

AI, data, and analytics pick three cookable dinners from the ingredients and appliances you already have, with no grocery run. We use AI, data, and a rules-first analytics score to rank real meals you can make tonight with what’s in your pantry. A lightweight rules engine avoids AI hallucinations; Chef-AI adds safe swaps and one-line directions. You’ll learn a copy-paste AI prompt, how to reduce waste, and how analytics rank time, fit, and vibe.

3 bullets (skimmable):
• Rules > raw AI for reliable, cookable results
• Analytics score to rank fastest/best-fit meals
• Copy-paste prompt for 3 ideas in under a minute

You’ll learn
• Why a rules engine beats raw AI for reliable, cookable recipes
• How an analytics score prioritizes the best matches fast
• A copy-paste AI prompt that returns 3 make-tonight ideas in under a minute
• How to reduce waste and keep weeknight meals simple & tasty

Try this prompt:
I have [3–5 ingredients] and these appliances: [list]. Suggest 3 meals I can make in under 30 minutes. If something’s missing, suggest simple pantry substitutions. Keep it realistic and give one-line directions for each.

Quick quiz
True or False: If you only rely on AI, it may assume tools you don’t have and suggest impossible recipes.
Answer: True. Start with rules; use AI for riffs and swaps.

Discussion question
When you’re deciding on dinner, do you want structure (reliable classics) or creativity (something new)? Reply on Substack or X. I'll share the poll next week.

Resources & links
• Blog Link: https://mukundansankar.substack.com/p/pantry-plate-the-aifirst-way-to-decide

Key takeaways
• Put rules before AI for cookable results.
• One clear AI prompt can end dinner indecision in minutes.
• AI is a partner, not the chef.

Affiliate partners (links below):
• RSS.com: Host your podcast, get free transcripts, and earn ad revenue with as few as 10 monthly downloads. Sign up here.
• Sider AI: AI-powered research and productivity assistant for breaking down job descriptions into keywords. Try Sider here.
• Riverside FM: Record your podcast in studio-quality audio and 4K video from anywhere. Get started with Riverside here.

Affiliate disclosure: Some links may be affiliates. If you use them, I may earn a commission at no extra cost to you.

Keywords: ai, ai meal planner, data, data analytics, analytics, time-saving tools, pantry, dinner ideas, recipe generator, meal planning
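The rules-first ranking described in this episode could look something like the sketch below. It is an illustration under stated assumptions, not the episode's actual engine: the `Recipe` structure, the hard rules in `cookable`, and the 0.7/0.3 weights in `score` are all hypothetical, and the "vibe" factor is left out because the description does not say how it is measured.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Recipe:
    name: str
    ingredients: frozenset
    appliances: frozenset
    minutes: int


def cookable(recipe, pantry, appliances, max_minutes=30, max_missing=1):
    """Hard rules: all appliances on hand, within the time limit, few missing ingredients."""
    if not recipe.appliances <= appliances:  # needs a tool the cook does not have
        return False
    if recipe.minutes > max_minutes:
        return False
    return len(recipe.ingredients - pantry) <= max_missing


def score(recipe, pantry, max_minutes=30):
    """Blend ingredient fit and speed into one number (weights are illustrative)."""
    if recipe.ingredients:
        fit = len(recipe.ingredients & pantry) / len(recipe.ingredients)
    else:
        fit = 1.0
    speed = 1 - recipe.minutes / max_minutes
    return 0.7 * fit + 0.3 * speed


def top_meals(recipes, pantry, appliances, k=3):
    """Filter with the rules first, then rank the survivors by score."""
    candidates = [r for r in recipes if cookable(r, pantry, appliances)]
    return sorted(candidates, key=lambda r: score(r, pantry), reverse=True)[:k]


# Hypothetical pantry and recipe data for illustration only.
pantry = frozenset({"eggs", "rice", "spinach", "soy sauce"})
appliances = frozenset({"stovetop"})
recipes = [
    Recipe("fried rice", frozenset({"eggs", "rice", "soy sauce"}), frozenset({"stovetop"}), 15),
    Recipe("omelette", frozenset({"eggs", "spinach", "cheese"}), frozenset({"stovetop"}), 10),
    Recipe("roast chicken", frozenset({"chicken", "potatoes"}), frozenset({"oven"}), 60),
    Recipe("slow stew", frozenset({"rice", "spinach"}), frozenset({"stovetop"}), 45),
]
best = top_meals(recipes, pantry, appliances)
```

Filtering before scoring is the point of the design: a recipe that needs an oven never reaches the ranking step, so no downstream suggestion can assume a tool that is not in the kitchen. An AI model would then only be asked for swaps and directions on meals that already passed the rules.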

Josh Gledhill was a music-industry professional who, after 1,026 days of unemployment, landed not one but two data job offers. In this episode, he shares how he overcame dyslexia and how he used Threads, a 40-page PRINTED Portfolio, and the SPN Method to become a data analyst at Staffordshire County Council.

✨ Try Julius today at https://landadatajob.com/Julius-YT
💌 Join 10k+ aspiring data analysts & get my tips in your inbox weekly 👉 https://www.datacareerjumpstart.com/newsletter
🆘 Feeling stuck in your data journey? Come to my next free "How to Land Your First Data Job" training 👉 https://www.datacareerjumpstart.com/training
👩‍💻 Want to land a data job in less than 90 days? 👉 https://www.datacareerjumpstart.com/daa
👔 Ace The Interview with Confidence 👉 https://www.datacareerjumpstart.com/interviewsimulator

⌚ TIMESTAMPS
00:00 - Introduction
01:36 - Josh's Background in Music and Transition to Data Analytics
07:13 - Overcoming Dyslexia and Study Tips
10:44 - Building a Personal Brand on Threads
16:19 - The SPN Method
22:06 - Navigating the Interview Process (and flopping the technical interview)
33:04 - Differences in Data Jobs: UK vs. US
41:29 - Ethics and AI in the UK

🔗 CONNECT WITH JOSH
🧵 Threads: https://www.threads.com/@databyjosh
🤝 LinkedIn: https://www.linkedin.com/in/josh-gledhill/
🎥 YouTube Channel: https://www.youtube.com/channel/UCSzkvTFrQdKAdESHepjSP3Q
🤝 X: https://x.com/macinjosh

Tristan digs deep into the world of Apache Iceberg. There's a lot happening beneath the surface: multiple catalog interfaces, evolving REST specs, and competing implementations across open source, proprietary, and academic contexts. Christian Thiel, co-founder of Lakekeeper, one of the most widely used Iceberg catalogs, joins to walk through the state of the Iceberg ecosystem. For full show notes and to read 6+ years of back issues of the podcast's companion newsletter, head to https://roundup.getdbt.com. The Analytics Engineering Podcast is sponsored by dbt Labs.

From Fed Chair Powell’s confirmation of coming interest rate cuts to digital wallets, this episode dives deep into the evolving world of digital currencies with guest Ananya Kumar from the Atlantic Council. Whether you're managing your portfolio like co-host Crypto Cris or just trying to keep up with changing technologies, we’ve got you covered. Guest: Ananya Kumar, Deputy Director of Future of Money, Atlantic Council For Ananya's article on Chinese Stablecoin click here: https://www.atlanticcouncil.org/blogs/econographics/everybody-wants-a-stablecoin-even-china/ Hosts: Mark Zandi – Chief Economist, Moody’s Analytics, Cris deRitis – Deputy Chief Economist, Moody’s Analytics, and Marisa DiNatale – Senior Director - Head of Global Forecasting, Moody’s Analytics Follow Mark Zandi on 'X' and BlueSky @MarkZandi, Cris deRitis on LinkedIn, and Marisa DiNatale on LinkedIn

AI is moving fast, but are organizations prepared to keep up? In this episode, data professional Laura Madsen joins us to unpack why most companies are lagging behind, how tech debt is holding businesses back, and why knowledge graphs are the way forward. Join us for a bold conversation on why the AI revolution needs better data governance, not just bigger models.

What You'll Learn:
• Who's thriving in disruption, which industries embrace AI, and why others are stuck
• The hidden cost of tech debt and why most organizations avoid real transformation
• The power of knowledge graphs, and why they're the key to making AI work at scale
• What AI still can't do for us, and the gaps we need to fill with human expertise

Follow Laura on LinkedIn!

Register for free to be part of the next live session: https://bit.ly/3XB3A8b

Follow us on Socials: LinkedIn, YouTube, Instagram (Mavens of Data), Instagram (Maven Analytics), TikTok, Facebook, Medium, X/Twitter