In this session I will demo how you can migrate vm's from VMWare or Hyper-V to Azure Local using Azure Migrate. I will demo how you configure the Azure Migrate Project, how you deploy the Azure Migrate Appliance, how you enable synchronization and migrate and finalize the cutover. During this theater session you should have a good understanding on how you can migrate your vm's to Azure Local with a short down time for migration and for free without buying expensive third-party tools.

talk-data.com

talk-data.com

Topic

Virtual Machine

65

tagged

Activity Trend

Top Events

AI for enterprises, particularly in the era of GenAI, requires rapid experimentation and the ability to productionize models and agents quickly and at scale. Compliance, resilience and commercial flexibility drive the need to serve models across regions. As cloud providers struggle with rising demand for GPUs in environments, VM shortages have become commonplace, and add to the pressure of general cloud outages. Enterprises that can quickly leverage GPU capacity in other cloud regions will be better equipped to capitalize on the promise of AI, while staying flexible to serve distinct user bases and complying with regulations. In this presentation we will show and discuss how to implement AI deployments across cloud regions, deploying a model across regions and using a load balancer to determine where to best route a user request.

The first Google Axion virtual machine (VM), C4A, provides the best price performance for general purpose workloads in the industry, but don’t take it from us. We’ll share how Axion benchmarks for key workloads. Then, we’ll take a deeper look at leading customers using Google Axion VMs. They’ll discuss their use cases and how they architected their stack to run on Arm VMs, providing valuable tips for other customers considering a similar transition.

Maximize performance with innovations in Compute Engine. Come learn about the latest innovations and portfolio additions from Compute Engine. Learn about new virtual machines (VMs) that are purpose-built to deliver leadership performance for all your workloads, including AI and machine learning (ML), databases, enterprise applications, and network and security compliances. Understand how to pick the right VM. We’ll cover product capabilities, best practices, and announce exciting new products targeted at making it easy for you to operate your Compute Engine environment.

In this episode, we explore how microgravity affects muscle structure and function, using Caenorhabditis elegans as a model organism. Spaceflight-induced muscle atrophy is a major challenge for astronauts, and understanding the molecular and genetic mechanisms behind these changes is key to developing countermeasures.

This discussion is based on the review article: “Advancing Insights into Microgravity-Induced Muscle Changes Using Caenorhabditis elegans as a Model Organism” Beckett LJ, Williams PM, Toh LS, Hessel V, Gerstweiler L, Fisk I, Toronjo-Urquiza L, Chauhan VM. Published in npj Microgravity (2024). 📖 Read the full paper: https://doi.org/10.1038/s41526-024-00418-z

🔬 Learn how C. elegans provides unique insights into metabolic changes, gene expression, and protein regulation during spaceflight, offering potential strategies to counteract muscle degradation.

🌍 Follow for more research-based discussions on nematodes, space biology, and biomedical science.

This podcast is generated with artificial intelligence and curated by Veeren. If you’d like your publication featured on the show, please get in touch.

📩 More info: 🔗 www.veerenchauhan.com 📧 [email protected]

Embark on a journey through the diverse landscape of website deployment options available on Google Cloud. In this session, we’ll guide you through various ways to deploy a website, offering insights into the array of tools and services Google Cloud provides for hosting, scaling, and optimizing web applications. From traditional virtual machine instances to containerized solutions with Kubernetes, and serverless deployment with Cloud Functions and Cloud Run, this session will cover the entire spectrum of deployment strategies.

Click the blue “Learn more” button above to tap into special offers designed to help you implement what you are learning at Google Cloud Next 25.

In this game, you will monitor Google Compute Engine virtual machine (VM) instance with Cloud Monitoring, create and deploy a Cloud Function using the Cloud Platform Command Line, schedule a Cloud Function to identify, clean up unused IP addresses, and to identify and clean up unused and orphaned persistent disks.

Click the blue “Learn more” button above to tap into special offers designed to help you implement what you are learning at Google Cloud Next 25.

In this game you will create and manage permissions for Google Cloud resources, run structured queries on BigQuery and Cloud SQL, create several VPC networks and VM instances and test connectivity across networks, and monitor a Google Compute Engine VM instance with Cloud Monitoring.

Click the blue “Learn more” button above to tap into special offers designed to help you implement what you are learning at Google Cloud Next 25.

Congratulations! You have successfully deployed your VM-based application on Compute Engine. Now you must understand how you can operate and optimize your applications for reliability, performance, and security. In this session, we will talk about how you can optimize day 2 management activities for your workloads and how you can use different products to address this challenge. Learn best practices and new features from Google Cloud on automating your infrastructure management, from application deployment to monitoring and troubleshooting.

Click the blue “Learn more” button above to tap into special offers designed to help you implement what you are learning at Google Cloud Next 25.

Come learn about the latest innovations and portfolio additions from Google Compute Engine. Hear about new VMs that are purpose-built to deliver leadership performance for all your workloads, including AI/ML, databases such as SAP, enterprise applications, and network/security appliances. Understand how to pick the right VM. We’ll cover product capabilities, best practices, and exciting new product announcements targeted at making it easy for you to operate your Compute Engine environment.

Click the blue “Learn more” button above to tap into special offers designed to help you implement what you are learning at Google Cloud Next 25.

Your choice of cloud infrastructure – virtual machines upon which your critical applications run, can make a huge impact on your IT Spend. In this Cloud Talk, we’ll quickly review the benefits of AMD EPYC™ powered Google Cloud instances and walk you through selecting the ideal VM for workloads such as AI inferencing to optimize your cloud investment and performance. By attending this session, your contact information may be shared with the sponsor for relevant follow up for this event only.

Click the blue “Learn more” button above to tap into special offers designed to help you implement what you are learning at Google Cloud Next 25.

In this game you will create and manage permissions for Google Cloud resources, run structured queries on BigQuery and Cloud SQL, create several VPC networks and VM instances and test connectivity across networks, and monitor a Google Compute Engine VM instance with Cloud Monitoring.

Click the blue “Learn more” button above to tap into special offers designed to help you implement what you are learning at Google Cloud Next 25.

REX Migrations vers le Cloud Public - Application Liquidités (Accélération des rapports de liquidité réglementaires de Société Générale).

Did you finish the Photon whitepaper and think, wait, what? I know I did; it’s my job to understand it, explain it, and then use it. If your role involves using Apache Spark™ on Databricks, then you need to know about Photon and where to use it. Join me, chief dummy, nay "supreme" dummy, as I break down this whitepaper into easy to understand explanations that don’t require a computer science degree. Together we will unravel mysteries such as:

- Why is a Java Virtual Machine the current bottleneck for Spark enhancements?

- What does vectorized even mean? And how was it done before?

- Why is the relationship status between Spark and Photon "complicated?"

In this session, we’ll start with the basics of Apache Spark, the details we pretend to know, and where those performance cracks are starting to show through. Only then will we start to look at Photon, how it’s different, where the clever design choices are and how you can make the most of this in your own workloads. I’ve spent over 50 hours going over the paper in excruciating detail; every reference, and in some instances, the references of the references so that you don’t have to.

Talk by: Holly Smith

Connect with us: Website: https://databricks.com Twitter: https://twitter.com/databricks LinkedIn: https://www.linkedin.com/company/databricks Instagram: https://www.instagram.com/databricksinc Facebook: https://www.facebook.com/databricksinc

Elixir is an Erlang-VM bytecode-compatible programming language that is growing in popularity.

In this session I will show how you can apply Elixir towards solving data engineering problems in novel ways.

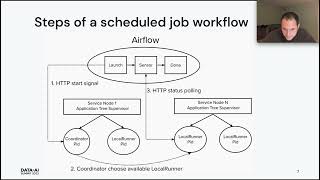

Examples include: • How to leverage Erlang's lightweight distributed process coordination to run clusters of workers across docker containers and perform data ingestion. • A framework that hooks Elixir functions as steps into Airflow graphs. • How to consume and process Kafka events directly within Elixir microservices.

For each of the above I'll show real system examples and walk through the key elements step by step. No prior familiarity with Erlang or Elixir will be required.

Connect with us: Website: https://databricks.com Facebook: https://www.facebook.com/databricksinc Twitter: https://twitter.com/databricks LinkedIn: https://www.linkedin.com/company/data... Instagram: https://www.instagram.com/databricksinc/

This IBM® Redpaper publication delivers an updated guide for high availability and disaster recovery (HADR) planning in a multicloud environment for IBM Power. This publication describes the ideas from studies that were performed in a virtual collaborative team of IBM Business Partners, technical focal points, and product managers who used hands-on experience to implement case studies to show HADR management aspects to develop this technical update guide for a hybrid multicloud environment. The goal of this book is to deliver a HADR guide for backup and data management on-premises and in a multicloud environment. This document updates HADR on-premises and in the cloud with IBM PowerHA® SystemMirror®, IBM VM Recovery Manager (VMRM), and other solutions that are available on IBM Power for IBM AIX®, IBM i, and Linux. This publication highlights the available offerings at the time of writing for each operating system (OS) that is supported in IBM Power, including best practices. This book addresses topics for IT architects, IT specialists, sellers, and anyone looking to implement and manage HADR on-premises and in the cloud. Moreover, this publication provides documentation to transfer how-to skills to the technical teams and solution guidance to the sales team. This book complements the documentation that is available at IBM Documentation and aligns with the educational materials that are provided by IBM Systems Technical Training.

IBM® Power Virtualization Center (IBM® PowerVC™) is an advanced enterprise virtualization management offering for IBM Power Systems. This IBM Redbooks® publication introduces IBM PowerVC and helps you understand its functions, planning, installation, and setup. It also shows how IBM PowerVC can integrate with systems management tools such as Ansible or Terraform and that it also integrates well into a OpenShift container environment. IBM PowerVC Version 2.0.0 supports both large and small deployments, either by managing IBM PowerVM® that is controlled by the Hardware Management Console (HMC), or by IBM PowerVM NovaLink. With this capability, IBM PowerVC can manage IBM AIX®, IBM i, and Linux workloads that run on IBM POWER® hardware. IBM PowerVC is available as a Standard Edition, or as a Private Cloud Edition. IBM PowerVC includes the following features and benefits: Virtual image capture, import, export, deployment, and management Policy-based virtual machine (VM) placement to improve server usage Snapshots and cloning of VMs or volumes for backup or testing purposes Support of advanced storage capabilities such as IBM SVC vdisk mirroring of IBM Global Mirror Management of real-time optimization and VM resilience to increase productivity VM Mobility with placement policies to reduce the burden on IT staff in a simple-to-install and easy-to-use graphical user interface (GUI) Automated Simplified Remote Restart for improved availability of VMs ifor when a host is down Role-based security policies to ensure a secure environment for common tasks The ability to enable an administrator to enable Dynamic Resource Optimization on a schedule IBM PowerVC Private Cloud Edition includes all of the IBM PowerVC Standard Edition features and enhancements: A self-service portal that allows the provisioning of new VMs without direct system administrator intervention. There is an option for policy approvals for the requests that are received from the self-service portal. Pre-built deploy templates that are set up by the cloud administrator that simplify the deployment of VMs by the cloud user. Cloud management policies that simplify management of cloud deployments. Metering data that can be used for chargeback. This publication is for experienced users of IBM PowerVM and other virtualization solutions who want to understand and implement the next generation of enterprise virtualization management for Power Systems. Unless stated otherwise, the content of this publication refers to IBM PowerVC Version 2.0.0.

This IBM® Redbooks® publication describes the IBM VM Recovery Manager for Power Systems, and addresses topics to help answer customers' complex high availability (HA) and disaster recovery (DR) requirements for IBM AIX® and Linux on IBM Power Systems servers to help maximize systems' availability and resources, and provide technical documentation to transfer the how-to skills to users and support teams. The IBM VM Recovery Manager for Power Systems product is an easy to use and economical HA and DR solution. Automation software, installation services, and remote-based software support help you streamline the process of recovery, which raises availability and recovery testing, and maintains a state-of-the-art HA and DR solution. Built-in functions and IBM Support can decrease the need for expert-level skills and shorten your recovery time objective (RTO), improve your recovery point objective (RPO), optimize backups, and better manage growing data volumes. This book examines the IBM VM Recovery Manager solution, tools, documentation, and other resources that are available to help technical teams develop, implement, and support business resilience solutions in IBM VM Recovery Manager for IBM Power Systems environments. This publication targets technical professionals (consultants, technical support staff, IT Architects, and IT Specialists) who are responsible for providing HA and DR solutions and support for IBM Power Systems.

IBM® Spectrum Archive Enterprise Edition for the IBM TS4500, IBM TS3500, IBM TS4300, and IBM TS3310 tape libraries provides seamless integration of IBM Linear Tape File System (LTFS) with IBM Spectrum® Scale by creating an LTFS tape tier. You can run any application that is designed for disk files on tape by using IBM Spectrum Archive. IBM Spectrum Archive can play an important role in reducing the cost of storage for data that does not need the access performance of primary disk. The IBM Spectrum Archive Virtual Appliance can be deployed in minutes and key features can be tried along with this user guide. The virtual machine (VM) has a pre-configured IBM Spectrum Scale and a virtual tape library that allows to quickly test the IBM Spectrum Archive features without connecting to a physical tape library. The virtual appliance is provided as a VirtualBox .ova file.

Summary DataDog is one of the most successful companies in the space of metrics and monitoring for servers and cloud infrastructure. In order to support their customers, they need to capture, process, and analyze massive amounts of timeseries data with a high degree of uptime and reliability. Vadim Semenov works on their data engineering team and joins the podcast in this episode to discuss the challenges that he works through, the systems that DataDog has built to power their business, and how their teams are organized to allow for rapid growth and massive scale. Getting an inside look at the companies behind the services we use is always useful, and this conversation was no exception.

Announcements

Hello and welcome to the Data Engineering Podcast, the show about modern data management When you’re ready to build your next pipeline, or want to test out the projects you hear about on the show, you’ll need somewhere to deploy it, so check out our friends at Linode. With 200Gbit private networking, scalable shared block storage, and a 40Gbit public network, you’ve got everything you need to run a fast, reliable, and bullet-proof data platform. If you need global distribution, they’ve got that covered too with world-wide datacenters including new ones in Toronto and Mumbai. And for your machine learning workloads, they just announced dedicated CPU instances. Go to dataengineeringpodcast.com/linode today to get a $20 credit and launch a new server in under a minute. And don’t forget to thank them for their continued support of this show! You listen to this show to learn and stay up to date with what’s happening in databases, streaming platforms, big data, and everything else you need to know about modern data management. For even more opportunities to meet, listen, and learn from your peers you don’t want to miss out on this year’s conference season. We have partnered with organizations such as O’Reilly Media, Corinium Global Intelligence, ODSC, and Data Council. Upcoming events include the Software Architecture Conference in NYC, Strata Data in San Jose, and PyCon US in Pittsburgh. Go to dataengineeringpodcast.com/conferences to learn more about these and other events, and take advantage of our partner discounts to save money when you register today. Your host is Tobias Macey and today I’m interviewing Vadim Semenov about how data engineers work at DataDog

Interview

Introduction How did you get involved in the area of data management? For anyone who isn’t familiar with DataDog, can you start by describing the types and volumes of data that you’re dealing with? What are the main components of your platform for managing that information? How are the data teams at DataDog organized and what are your primary responsibilities in the organization? What are some of the complexities and challenges that you face in your work as a result of the volume of data that you are processing?

What are some of the strategies which have proven to be most useful in overcoming those challenges?

Who are the main consumers of your work and how do you build in feedback cycles to ensure that their needs are being met? Given that the majority of the data being ingested by DataDog is timeseries, what are your lifecycle and retention policies for that information? Most of the data that you are working with is customer generated from your deployed agents and API integrations. How do you manage cleanliness and schema enforcement for the events as they are being delivered? What are some of the upcoming projects that you have planned for the upcoming months and years? What are some of the technologies, patterns, or practices that you are hoping to adopt?

Contact Info

LinkedIn @databuryat on Twitter

Parting Question

From your perspective, what is the biggest gap in the tooling or technology for data management today?

Closing Announcements

Thank you for listening! Don’t forget to check out our other show, Podcast.init to learn about the Python language, its community, and the innovative ways it is being used. Visit the site to subscribe to the show, sign up for the mailing list, and read the show notes. If you’ve learned something or tried out a project from the show then tell us about it! Email [email protected]) with your story. To help other people find the show please leave a review on iTunes and tell your friends and co-workers Join the community in the new Zulip chat workspace at dataengineeringpodcast.com/chat

Links

DataDog Hadoop Hive Yarn Chef SRE == Site Reliability Engineer Application Performance Management (APM) Apache Kafka RocksDB Cassandra Apache Parquet data serialization format SLA == Service Level Agreement WatchDog Apache Spark

Podcast Episode

Apache Pig Databricks JVM == Java Virtual Machine Kubernetes SSIS (SQL Server Integration Services) Pentaho JasperSoft Apache Airflow

Podcast.init Episode

Apache NiFi

Podcast Episode

Luigi Dagster

Podcast Episode

Prefect

The intro and outro music is from The Hug by The Freak Fandango Orchestra / CC BY-SA

Support Data Engineering Podcast