Easy, fast, and scalable: pick 3. MotherDuck’s managed DuckLake data lakehouse blends the cost efficiency, scale, and openness of a lakehouse with the speed of a warehouse for truly joyful dbt pipelines. We will show you how!

The explosion of IoT data in industrial environments calls for robust, scalable data architectures. This presentation explores how data lakes, and more specifically the Lakehouse architecture, address the challenges of storing and processing massive volumes of heterogeneous IoT data.

Using the concrete example of operational monitoring for an offshore wind farm, we will demonstrate how a Lakehouse solution efficiently handles high-frequency data streams from industrial sensors. We will walk through the full process: from real-time ingestion of telemetry data, through training anomaly detection and forecasting algorithms, to deploying predictive maintenance models.

This case study will illustrate the key advantages of the data lake for industrial IoT: flexible multi-format storage, real-time and batch processing, native integration of machine learning tools, and optimized operating costs. The goal is to share practical lessons learned from implementing this architecture in an Asset Integrity Management context, applicable to many industrial sectors.

With Starburst, discover how to transform your data architectures and fully exploit the potential of your data through data products and AI agents.

In this session, you will discover how to:

* Share and query your data across on-premises, hybrid, or multi-cloud environments, in natural language via AI agents and with your own LLMs.

* Turn raw, inaccessible data into usable, governed, ready-to-use data, enriched and made accessible through data products and AI agents.

* Accelerate innovation and decision-making, and establish a culture of data democratization in which everyone can extract more value from data.

The result: greater agility and an organization where data becomes a true lever for innovation.

Starburst is a data platform that lets you build a modern data lakehouse (in the Iceberg format, for example), govern your data and your LLMs, and access or federate all data sources, wherever they reside.

Learn how Trade Republic builds its analytical data stack as a modern, real-time Lakehouse with ACID guarantees. Using Debezium for change data capture, we stream database changes and events into our data lake. We leverage Apache Iceberg to ensure interoperability across our analytics platform, powering operational reporting, data science, and executive dashboards.
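
As a rough illustration of the change data capture idea in this abstract (not Trade Republic's actual pipeline), the sketch below replays Debezium-style change events against an in-memory keyed table. The `op`, `before`, and `after` fields follow the standard Debezium event envelope; the function name `apply_change_events`, the `id` key, and the sample rows are hypothetical. In a real lakehouse the same logic would typically be expressed as a merge into an Iceberg table rather than a Python dictionary.

```python
from typing import Any

Row = dict[str, Any]


def apply_change_events(
    table: dict[Any, Row], events: list[dict[str, Any]], key: str = "id"
) -> dict[Any, Row]:
    """Apply Debezium-style change events, in order, to a keyed table snapshot."""
    for event in events:
        op = event["op"]
        if op in ("c", "r", "u"):
            # create, snapshot read, or update: "after" holds the new row image
            row = event["after"]
            table[row[key]] = row
        elif op == "d":
            # delete: only "before" is populated
            table.pop(event["before"][key], None)
        else:
            raise ValueError(f"unsupported op: {op!r}")
    return table


# Hypothetical sample events for an "accounts" table
events = [
    {"op": "c", "before": None, "after": {"id": 1, "balance": 100}},
    {"op": "c", "before": None, "after": {"id": 2, "balance": 50}},
    {"op": "u", "before": {"id": 1, "balance": 100}, "after": {"id": 1, "balance": 80}},
    {"op": "d", "before": {"id": 2, "balance": 50}, "after": None},
]

state = apply_change_events({}, events)
print(state)  # {1: {'id': 1, 'balance': 80}}
```

Replaying events strictly in order is what keeps the analytical copy consistent with the source database; an ACID table format lets each batch of such changes be committed atomically.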

The data lakehouse architecture has become the reference for moving from "classic" BI centered on analytical databases to a unified, open, and scalable data platform capable of supporting both modern analytics and AI/ML. But implementing it remains demanding (ingestion, optimization, governance).

This session shows how Qlik Open Lakehouse integrates Apache Iceberg into your infrastructure to build a governed, operational lakehouse without the complexity.

The payoff: accelerated time-to-value, reduced TCO, and open interoperability without vendor lock-in.

A reliable foundation that aligns IT and the business and drives analytics and AI at scale.

Want to learn more? The whole Qlik team looks forward to seeing you at booth D38 for live demos, use cases, and expert advice.

Rethink how you build open, connected, and governed data lakehouses: integrate any Iceberg REST compatible catalog to Snowflake to securely read from and write to any Iceberg table with Catalog Linked Databases. Unlock insights and AI from semi-structured data with support for VARIANT data types. And enjoy enterprise-grade security with Snowflake's managed service for Apache Polaris™, Snowflake Open Catalog.

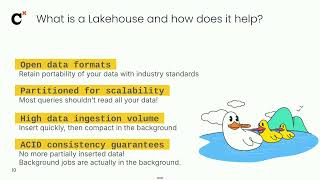

Have you ever spun up a Spark cluster just to update three rows in a Delta table? In this talk, we’ll explore how modern Python libraries can power lightweight, production-grade Data Lakehouse workflows—helping you avoid over-engineering your data stack.

Every data architecture diagram out there makes it abundantly clear who's in charge: At the bottom sits the analyst, above that is an API server, and on the very top sits the mighty data warehouse. This pattern is so ingrained we never ever question its necessity, despite its various issues like slow data response time, multi-level scaling issues, and massive cost.

But there is another way: decoupling storage from compute lets query processing move closer to the people asking the questions, leading to much snappier responses, natural scaling through client-side query processing, and much lower cost.

This talk discusses how modern data engineering paradigms such as disaggregated storage, single-node query processing, and lakehouse formats enable a radical departure from the tired three-tier architecture. By inverting the architecture, we can put users' needs first and rely on commoditised components like object storage to enable fast, scalable, and cost-effective solutions.

In this session, we’ll share our transformation journey from a traditional, centralised data warehouse to a modern data lakehouse architecture, powered by data mesh principles. We’ll explore the challenges we faced with legacy systems, the strategic decisions that led us to adopt a lakehouse model, and how data mesh enabled us to decentralise ownership, improve scalability, and enhance data governance.

Large Language Models (LLMs) are transformative, but static knowledge and hallucinations limit their direct enterprise use. Retrieval-Augmented Generation (RAG) is the standard solution, yet moving from prototype to production is fraught with challenges in data quality, scalability, and evaluation.

This talk argues the future of intelligent retrieval lies not in better models, but in a unified, data-first platform. We'll demonstrate how the Databricks Data Intelligence Platform, built on a Lakehouse architecture with integrated tools like Mosaic AI Vector Search, provides the foundation for production-grade RAG.

Looking ahead, we'll explore the evolution beyond standard RAG to advanced architectures like GraphRAG, which enable deeper reasoning within Compound AI Systems. Finally, we'll show how the end-to-end Mosaic AI Agent Framework provides the tools to build, govern, and evaluate the intelligent agents of the future, capable of reasoning across the entire enterprise.

https://www.bigdataldn.com/en-gb/conference/session-details.4500.251876.building-an-ai_ready-open-lakehouse-on-google-cloud.html

Discover how to build a powerful AI Lakehouse and unified data fabric natively on Google Cloud. Leverage BigQuery's serverless scale and robust analytics capabilities as the core, seamlessly integrating open data formats with Apache Iceberg and efficient processing using managed Spark environments like Dataproc. Explore the essential components of this modern data environment, including data architecture best practices, robust integration strategies, high data quality assurance, and efficient metadata management with Google Cloud Data Catalog. Learn how Google Cloud's comprehensive ecosystem accelerates advanced analytics, preparing your data for sophisticated machine learning initiatives and enabling direct connection to services like Vertex AI.

In this episode, we're joined by Sam Debruyn and Dorian Van den Heede, who reflect on their talks at SQL Bits 2025 and dive into the technical content they presented. Sam walks through how dbt integrates with Microsoft Fabric, explaining how it improves lakehouse and warehouse workflows by adding modularity, testing, and documentation to SQL development. He also touches on Fusion’s SQL optimization features and how it compares to tools like SQLMesh. Dorian shares his MLOps demo, which simulates beating football bookmakers using historical data, showing how to build a full pipeline with Azure ML, from feature engineering to model deployment. They discuss the role of Python modeling in dbt, orchestration with Azure ML, and the practical challenges of implementing MLOps in real-world scenarios. Toward the end, they explore how AI tools like Copilot are changing the way engineers learn and debug code, raising questions about explainability, skill development, and the future of junior roles in tech. It’s a rich conversation covering dbt, MLOps, Python, Azure ML, and the evolving role of AI in engineering.

"Extract, transform, load" (ETL) is at the center of every application of data, from business intelligence to AI. Constant shifts in the data landscape—including the implementations of lakehouse architectures and the importance of high-scale real-time data—mean that today's data practitioners must approach ETL a bit differently. This updated technical guide offers data engineers, engineering managers, and architects an overview of the modern ETL process, along with the challenges you're likely to face and the strategic patterns that will help you overcome them. You'll come away equipped to make informed decisions when implementing ETL and confident about choosing the technology stack that will help you succeed. Discover what ETL looks like in the new world of data lakehouses Learn how to deal with real-time data Explore low-code ETL tools Understand how to best achieve scale, performance, and observability

Join Amperity’s Principal Product Architect to learn more about innovations in the Lakehouse space and how companies are building efficient and durable architectures. This session will include a deep dive into building a composable data ecosystem centered around a lakehouse, followed by a review of real-world applications of these concepts through Amperity Bridge, an exciting new technology for plugging software solutions into a lakehouse.

The modern enterprise is increasingly defined by the need for open, governed, and intelligent data access. This session explores how Apache Iceberg, Dremio, and the Model Context Protocol (MCP) come together to enable the Agentic Lakehouse: a data platform that is interoperable, high-performing, and AI-ready.

We’ll begin with Apache Iceberg, which provides the foundation for data interoperability across teams and organisations, ensuring shared datasets can be reliably accessed and evolved. From there, we’ll highlight how Dremio extends Iceberg with turnkey governance, management, and performance acceleration, unifying your lakehouse with databases and warehouses under one platform. Finally, we’ll introduce MCP and showcase how innovations like the Dremio MCP server enable natural-language analytics on your data.

With the power of Dremio’s built-in semantic layer, AI agents and humans alike can ask complex business questions in plain language and receive accurate, governed answers.

Join us to learn how to unlock the next generation of data interaction with the Agentic Lakehouse.

Unlock the true potential of your data with the Qlik Open Lakehouse, a revolutionary approach to Iceberg integration designed for the enterprise. Many organizations face the pain points of managing multiple, costly data platforms and struggling with low-latency ingestion. While Apache Iceberg offers robust features like ACID transactions and schema evolution, achieving optimal performance isn't automatic; it requires sophisticated maintenance. Introducing the Qlik Open Lakehouse, a fully managed and optimized solution built on Apache Iceberg, powered by Qlik's Adaptive Iceberg Optimizer. Discover how you can do data differently and achieve 10x faster queries, a 33-42% reduction in file API overhead, and ultimately, a 50% reduction in costs through streamlined operations and compute savings.

Do data differently with Qlik Open Lakehouse: optimized Iceberg performance, faster queries, and reduced cost at scale.

In this session, Paul Wilkinson, Principal Solutions Architect at Redpanda, will demonstrate Redpanda's native Iceberg capability: a game-changing addition that bridges the gap between real-time streaming and analytical workloads, eliminating the complexity of traditional data lake architectures while maintaining the performance and simplicity that Redpanda is known for.

In a follow-along demo, Paul will explore how this new capability lets organizations seamlessly move streaming data into analytical formats without complex ETL pipelines or additional infrastructure overhead, so you can build your own streaming lakehouse and show it to your team!