We’re improving DataFramed, and we need your help! We want to hear what you have to say about the show, and how we can make it more enjoyable for you—find out more here. We’re often caught chasing the dream of “self-serve” data—a place where data empowers stakeholders to answer their questions without a data expert at every turn. But what does it take to reach that point? How do you shape tools that empower teams to explore and act on data without the usual bottlenecks? And with the growing presence of natural language tools and AI, is true self-service within reach, or is there still more to the journey? Sameer Al-Sakran is the CEO at Metabase, a low-code self-service analytics company. Sameer has a background in both data science and data engineering so he's got a practitioner's perspective as well as executive insight. Previously, he was CTO at Expa and Blackjet, and the founder of SimpleHadoop and Adopilot. In the episode, Richie and Sameer explore self-serve analytics, the evolution of data tools, GenAI vs AI agents, semantic layers, the challenges of implementing self-serve analytics, the problem with data-driven culture, encouraging efficiency in data teams, the parallels between UX and data projects, exciting trends in analytics, and much more. Links Mentioned in the Show: MetabaseConnect with SameerArticles from Metabase on jargon, information budgets, analytics mistakes, and data model mistakesCourse: Introduction to Data CultureRelated Episode: Towards Self-Service Data Engineering with Taylor Brown, Co-Founder and COO at FivetranRewatch Sessions from RADAR: Forward Edition New to DataCamp? Learn on the go using the DataCamp mobile appEmpower your business with world-class data and AI skills with DataCamp for business

talk-data.com

talk-data.com

Topic

GenAI

Generative AI

1517

tagged

Activity Trend

Top Events

Understand the full potential of Microsoft Power Platform with this comprehensive guide, designed to provide you with the knowledge and tools needed to create intelligent business applications, automate workflows, and drive data-driven insights for business growth. Whether you're a novice or an experienced professional, this book offers a step-by-step approach to mastering the Power Platform. This book comes with an extensive array of essential concepts, architectural patterns and techniques. It will also guide you with practical insights to navigate the Power Platform effortlessly while integrating on Azure. Starting with exploring Power Apps for building enterprise applications, the book delves into Dataverse, Copilot Studio, AI Builder, managing platforms and Application life cycle management. You will then demonstrate testing strategy followed by a detailed examination of Dataverse and intelligent AI-powered Applications. Additionally, you will cover Power pages for external websites and AI-infused solutions. Each section is meticulously structured, offering step-by-step guidance, hands-on exercises, and real-world scenarios to reinforce learning. After reading the book, you will be able to optimize your utilization of the Power Platform for creating effective business solutions. What You Will Learn: Understand the core components and capabilities of Power Platform Explore how Power Platform integrates with Azure services Understand the key features and benefits of using Power Platform for business applications Discover best practices for governance to ensure compliance and efficient management Explore techniques for optimizing the performance of data integration and export processes on Azure Who This Book Is For: Application developers, Enterprise Architects and business decision-makers.

Jeremy Forman joins us to open up about the hurdles– and successes that come with building data products for pharmaceutical companies. Although he’s new to Pfizer, Jeremy has years of experience leading data teams at organizations like Seagen and the Bill and Melinda Gates Foundation. He currently serves in a more specialized role in Pfizer’s R&D department, building AI and analytical data products for scientists and researchers. .

Jeremy gave us a good luck at his team makeup, and in particular, how his data product analysts and UX designers work with pharmaceutical scientists and domain experts to build data-driven solutions.. We talked a good deal about how and when UX design plays a role in Pfizer’s data products, including a GenAI-based application they recently launched internally.

Highlights/ Skip to:

(1:26) Jeremy's background in analytics and transition into working for Pfizer (2:42) Building an effective AI analytics and data team for pharma R&D (5:20) How Pfizer finds data products managers (8:03) Jeremy's philosophy behind building data products and how he adapts it to Pfizer (12:32) The moment Jeremy heard a Pfizer end-user use product management research language and why it mattered (13:55) How Jeremy's technical team members work with UX designers (18:00) The challenges that come with producing data products in the medical field (23:02) How to justify spending the budget on UX design for data products (24:59) The results we've seen having UX design work on AI / GenAI products (25:53) What Jeremy learned at the Bill & Melinda Gates Foundation with regards to UX and its impact on him now (28:22) Managing the "rough dance" between data science and UX (33:22) Breaking down Jeremy's GenAI application demo from CDIOQ (36:02) What would Jeremy prioritize right now if his team got additional funding (38:48) Advice Jeremy would have given himself 10 years ago (40:46) Where you can find more from Jeremy

Quotes from Today’s Episode

“We have stream-aligned squads focused on specific areas such as regulatory, safety and quality, or oncology research. That’s so we can create functional career pathing and limit context switching and fragmentation. They can become experts in their particular area and build a culture within that small team. It’s difficult to build good [pharma] data products. You need to understand the domain you’re supporting. You can’t take somebody with a financial background and put them in an Omics situation. It just doesn’t work. And we have a lot of the scars, and the failures to prove that.” - Jeremy Forman (4:12) “You have to have the product mindset to deliver the value and the promise of AI data analytics. I think small, independent, autonomous, empowered squads with a product leader is the only way that you can iterate fast enough with [pharma data products].” - Jeremy Forman (8:46) “The biggest challenge is when we say data products. It means a lot of different things to a lot of different people, and it’s difficult to articulate what a data product is. Is it a view in a database? Is it a table? Is it a query? We’re all talking about it in different terms, and nobody’s actually delivering data products.” - Jeremy Forman (10:53) “I think when we’re talking about [data products] there’s some type of data asset that has value to an end-user, versus a report or an algorithm. I think it’s even hard for UX people to really understand how to think about an actual data product. I think it’s hard for people to conceptualize, how do we do design around that? It’s one of the areas I think I’ve seen the biggest challenges, and I think some of the areas we’ve learned the most. If you build a data product, it’s not accurate, and people are getting results that are incomplete… people will abandon it quickly.” - Jeremy Forman (15:56) “ I think that UX design and AI development or data science work is a magical partnership, but they often don’t know how to work with each other. That’s been a challenge, but I think investing in that has been critical to us. Even though we’ve had struggles… I think we’ve also done a good job of understanding the [user] experience and impact that we want to have. The prototype we shared [at CDIOQ] is driven by user experience and trying to get information in the hands of the research organization to understand some portfolio types of decisions that have been made in the past. And it’s been really successful.” - Jeremy Forman (24:59) “If you’re having technology conversations with your business users, and you’re focused only the technology output, you’re just building reports. [After adopting If we’re having technology conversations with our business users and only focused on the technology output, we’re just building reports. [After we adopted a human-centered design approach], it was talking [with end-users] about outcomes, value, and adoption. Having that resource transformed the conversation, and I felt like our quality went up. I felt like our output went down, but our impact went up. [End-users] loved the tools, and that wasn’t what was happening before… I credit a lot of that to the human-centered design team.” - Jeremy Forman (26:39) “When you’re thinking about automation through machine learning or building algorithms for [clinical trial analysis], it becomes a harder dance between data scientists and human-centered design. I think there’s a lack of appreciation and understanding of what UX can do. Human-centered design is an empathy-driven understanding of users’ experience, their work, their workflow, and the challenges they have. I don’t think there’s an appreciation of that skill set.” - Jeremy Forman (29:20) “Are people excited about it? Is there value? Are we hearing positive things? Do they want us to continue? That’s really how I’ve been judging success. Is it saving people time, and do they want to continue to use it? They want to continue to invest in it. They want to take their time as end-users, to help with testing, helping to refine it. Those are the indicators. We’re not generating revenue, so what does the adoption look like? Are people excited about it? Are they telling friends? Do they want more? When I hear that the ten people [who were initial users] are happy and that they think it should be rolled out to the whole broader audience, I think that’s a good sign.” - Jeremy Forman (35:19)

Links Referenced LinkedIn: https://www.linkedin.com/in/jeremy-forman-6b982710/

We’re improving DataFramed, and we need your help! We want to hear what you have to say about the show, and how we can make it more enjoyable for you—find out more here. Data is no longer just for coders. With the rise of low-code tools, more people across organizations can access data insights without needing programming skills. But how can companies leverage these tools effectively? And what steps should they take to integrate them into existing workflows while upskilling their teams? Michael Berthold is CEO and co-founder at KNIME, an open source data analytics company. He has more than 25 years of experience in data science, working in academia, most recently as a full professor at Konstanz University (Germany) and previously at University of California (Berkeley) and Carnegie Mellon, and in industry at Intel’s Neural Network Group, Utopy, and Tripos. Michael has published extensively on data analytics, machine learning, and artificial intelligence. In the episode, Adel and Michael explore low-code data science, the adoption of low-code data tools, the evolution of data science workflows, upskilling, low-code and code collaboration, data literacy, integration with AI and GenAI tools, the future of low-code data tools and much more. Links Mentioned in the Show: KNIMEConnect with MichaelCode Along: Low-Code Data Science and Analytics with KNIMECourse: Introduction to KNIMERelated Episode: No-Code LLMs In Practice with Birago Jones & Karthik Dinakar, CEO & CTO at Pienso New to DataCamp? Learn on the go using the DataCamp mobile appEmpower your business with world-class data and AI skills with DataCamp for business

Send us a text

Welcome to the cozy corner of the tech world where ones and zeros mingle with casual chit-chat. Datatopics Unplugged is your go-to spot for relaxed discussions around tech, news, data, and society. Dive into conversations that should flow as smoothly as your morning coffee (but don’t), where industry insights meet laid-back banter. Whether you’re a data aficionado or just someone curious about the digital age, pull up a chair, relax, and let’s get into the heart of data, unplugged style! In this episode, we are joined by special guest Nico for a lively and wide-ranging tech chat. Grab your headphones and prepare for: Strava’s ‘Athlete Intelligence’ feature: A humorous dive into how workout apps are getting smarter—and a little sassier.Frontend frameworks: HTMX is a tough choice: A candid discussion on using React versus emerging alternatives like HTMX and when to keep things lightweight.Octoverse 2024 trends and language wars: Python takes the lead over JavaScript as the top GitHub language, and we dissect why Go, TypeScript, and Rust are getting love too.GenAI meets Minecraft: Imagine procedurally generated worlds and dreamlike coherence breaks—Minecraft-style. How GenAI could redefine gameplay narratives and NPC behavior.OpenAI’s O1 model leak: Insights on the recent leak, what’s new, and its implications for the future of AI.Tiger Beetle’s transactional databases and testing tales: Nico walks us through Tiger Style, deterministic simulation testing, and why it’s a game changer for distributed databases.Automated testing for LLMOps: A quick overview of automated testing for large language models and its role in modern AI workflows.DeepLearning.ai’s short courses: Quick, impactful learning to level up your AI skills.

Send us a text Welcome to the cozy corner of the tech world where ones and zeros mingle with casual chit-chat. Datatopics Unplugged is your go-to spot for relaxed discussions around tech, news, data, and society. Dive into conversations that should flow as smoothly as your morning coffee (but don't), where industry insights meet laid-back banter. Whether you're a data aficionado or just someone curious about the digital age, pull up a chair, relax, and let's get into the heart of data, unplugged style! In this episode, we cover: ChatGPT Search: Exploring OpenAI's new web-browsing capability, and how it transforms everything from everyday searches to complex problem-solving.ChatGPT is a Good Rubber Duck: Discover how ChatGPT makes for an excellent companion for debugging and brainstorming, offering more than a few laughs along the way.What’s New in Python 3.13: From the new free-threaded mode to the just-in-time (JIT) compiler, we break down the major (and some lesser-known) changes, with additional context from this breakdown and Reddit insights.UV is Fast on its Feet: How the development of new tools impacts the Python packaging ecosystem, with a side discussion on Poetry and the complexities of Python lockfiles.Meta’s Llama Training Takes Center Stage: Meta ramps up its AI game, pouring vast resources into training the Llama model. We ponder the long-term impact and their ambitions in the AI space.OpenAI’s Swarm: A new experimental framework for multi-agent orchestration, enabling AI agents to collaborate and complete tasks—what it means for the future of AI interactions.PGrag for Retrieval-Augmented Generation (RAG): We explore Neon's integration for building end-to-end RAG pipelines directly in Postgres, bridging vector databases, text embedding, and more.OSI’s Open Source AI License: The Open Source Initiative releases an AI-specific license to bring much-needed clarity and standards to open-source models.We also venture into generative AI, the future of AR (including Apple Vision and potential contact lenses), and a brief look at V0 by Vercel, a tool that auto-generates web components with AI prompts.

Panel discussion on GenAI trends with perspectives on investment, growth, and practice.

Machine learning and AI have become essential tools for delivering real-time solutions across industries. However, as these technologies scale, they bring their own set of challenges—complexity, data drift, latency, and the constant fight between innovation and reliability. How can we deploy models that not only enhance user experiences but also keep up with changing demands? And what does it take to ensure that these solutions are built to adapt, perform, and deliver value at scale? Rachita Naik is a Machine Learning (ML) Engineer at Lyft, Inc., and a recent graduate of Columbia University in New York. With two years of professional experience, Rachita is dedicated to creating impactful software solutions that leverage the power of Artificial Intelligence (AI) to solve real-world problems. At Lyft, Rachita focuses on developing and deploying robust ML models to enhance the ride-hailing industry’s pickup time reliability. She thrives on the challenge of addressing ML use cases at scale in dynamic environments, which has provided her with a deep understanding of practical challenges and the expertise to overcome them. Throughout her academic and professional journey, Rachita has honed a diverse skill set in AI and software engineering and remains eager to learn about new technologies and techniques to improve the quality and effectiveness of her work. In the episode, Adel and Rachita explore how machine learning is leveraged at Lyft, the primary use-cases of ML in ride-sharing, what goes into an ETA prediction pipeline, the challenges of building large scale ML systems, reinforcement learning for dynamic pricing, key skills for machine learning engineers, future trends across machine learning and generative AI and much more. Links Mentioned in the Show: Engineering at Lyft on MediumConnect with RachitaResearch Paper—A Better Match for Drivers and Riders: Reinforcement Learning at LyftCareer Track: Machine Learning EngineerRelated Episode: Why ML Projects Fail, and How to Ensure Success with Eric Siegel, Founder of Machine Learning Week, Former Columbia Professor, and Bestselling AuthorSign up to RADAR: Forward Edition New to DataCamp? Learn on the go using the DataCamp mobile appEmpower your business with world-class data and AI skills with DataCamp for business

Erik Bernhardsson, the CEO and co-founder of Modal Labs, joins Tristan to talk about Gen AI, the lack of GPUs, the future of cloud computing, and egress fees. They also discuss whether the job title of data engineer is something we should want more or less of in the future. Erik's not afraid of a spicy take, so this is a fun one. For full show notes and to read 6+ years of back issues of the podcast's companion newsletter, head to https://roundup.getdbt.com. The Analytics Engineering Podcast is sponsored by dbt Labs.

We talked about:

00:00 DataTalks.Club intro 01:56 Using data to create livable cities 02:52 Rachel's career journey: from geography to urban data science 04:20 What does a transport scientist do? 05:34 Short-term and long-term transportation planning 06:14 Data sources for transportation planning in Singapore 08:38 Rachel's motivation for combining geography and data science 10:19 Urban design and its connection to geography 13:12 Defining a livable city 15:30 Livability of Singapore and urban planning 18:24 Role of data science in urban and transportation planning 20:31 Predicting travel patterns for future transportation needs 22:02 Data collection and processing in transportation systems 24:02 Use of real-time data for traffic management 27:06 Incorporating generative AI into data engineering 30:09 Data analysis for transportation policies 33:19 Technologies used in text-to-SQL projects 36:12 Handling large datasets and transportation data in Singapore 42:17 Generative AI applications beyond text-to-SQL 45:26 Publishing public data and maintaining privacy 45:52 Recommended datasets and projects for data engineering beginners 49:16 Recommended resources for learning urban data science

About the speaker:

Rachel is an urban data scientist dedicated to creating liveable cities through the innovative use of data. With a background in geography, and a masters in urban data science, she blends qualitative and quantitative analysis to tackle urban challenges. Her aim is to integrate data driven techniques with urban design to foster sustainable and equitable urban environments.

Links: - https://datamall.lta.gov.sg/content/datamall/en/dynamic-data.html

00:00 DataTalks.Club intro 01:56 Using data to create livable cities 02:52 Rachel's career journey: from geography to urban data science 04:20 What does a transport scientist do? 05:34 Short-term and long-term transportation planning 06:14 Data sources for transportation planning in Singapore 08:38 Rachel's motivation for combining geography and data science 10:19 Urban design and its connection to geography 13:12 Defining a livable city 15:30 Livability of Singapore and urban planning 18:24 Role of data science in urban and transportation planning 20:31 Predicting travel patterns for future transportation needs 22:02 Data collection and processing in transportation systems 24:02 Use of real-time data for traffic management 27:06 Incorporating generative AI into data engineering 30:09 Data analysis for transportation policies 33:19 Technologies used in text-to-SQL projects 36:12 Handling large datasets and transportation data in Singapore 42:17 Generative AI applications beyond text-to-SQL 45:26 Publishing public data and maintaining privacy 45:52 Recommended datasets and projects for data engineering beginners 49:16 Recommended resources for learning urban data science

Join our slack: https: //datatalks.club/slack.html

The relationship between AI and ethics is both developing and delicate. On one hand, the GenAI advancements to date are impressive. On the other, extreme care needs to be taken as this tech continues to quickly become more commonplace in our lives. In today’s episode, Ovetta Sampson and I examine the crossroads ahead for designing AI and GenAI user experiences.

While professionals and the general public are eager to embrace new products, recent breakthroughs, etc.; we still need to have some guard rails in place. If we don’t, data can easily get mishandled, and people could get hurt. Ovetta possesses firsthand experience working on these issues as they sprout up. We look at who should be on a team designing an AI UX, exploring the risks associated with GenAI, ethics, and need to be thinking about going forward.

Highlights/ Skip to: (1:48) Ovetta's background and what she brings to Google’s Core ML group (6:03) How Ovetta and her team work with data scientists and engineers deep in the stack (9:09) How AI is changing the front-end of applications (12:46) The type of people you should seek out to design your AI and LLM UXs (16:15) Explaining why we’re only at the very start of major GenAI breakthroughs (22:34) How GenAI tools will alter the roles and responsibilities of designers, developers, and product teams (31:11) The potential harms of carelessly deploying GenAI technology (42:09) Defining acceptable levels of risk when using GenAI in real-world applications (53:16) Closing thoughts from Ovetta and where you can find her

Quotes from Today’s Episode “If artificial intelligence is just another technology, why would we build entire policies and frameworks around it? The reason why we do that is because we realize there are some real thorny ethical issues [surrounding AI]. Who owns that data? Where does it come from? Data is created by people, and all people create data. That’s why companies have strong legal, compliance, and regulatory policies around [AI], how it’s built, and how it engages with people. Think about having a toddler and then training the toddler on everything in the Library of Congress and on the internet. Do you release that toddler into the world without guardrails? Probably not.” - Ovetta Sampson (10:03) “[When building a team] you should look for a diverse thinker who focuses on the limitations of this technology- not its capability. You need someone who understands that the end destination of that technology is an engagement with a human being. You need somebody who understands how they engage with machines and digital products. You need that person to be passionate about testing various ways that relationships can evolve. When we go from execution on code to machine learning, we make a shift from [human] agency to a shared-agency relationship. The user and machine both have decision-making power. That’s the paradigm shift that [designers] need to understand. You want somebody who can keep that duality in their head as they’re testing product design.” - Ovetta Sampson (13:45) “We’re in for a huge taxonomy change. There are words that mean very specific definitions today. Software engineer. Designer. Technically skilled. Digital. Art. Craft. AI is changing all that. It’s changing what it means to be a software engineer. Machine learning used to be the purview of data scientists only, but with GenAI, all of that is baked in to Gemini. So, now you start at a checkpoint, and you’re like, all right, let’s go make an API, right? So, the skills, the understanding, the knowledge, the taxonomy even, how we talk about these things, how do we talk about the machine who speaks to us talks to us, who could create a podcast out of just voice memos?” - Ovetta Sampson (24:16) “We have to be very intentional [when building AI tools], and that’s the kind of folks you want on teams. [Designers] have to go and play scary scenarios. We have to do that. No designer wants to be “Negative Nancy,” but this technology has huge potential to harm. It has harmed. If we don’t have the skill sets to recognize, document, and minimize harm, that needs to be part of our skill set. If we’re not looking out for the humans, then who actually is?” - Ovetta Sampson (32:10) “[Research shows] things happen to our brain when we’re exposed to artificial intelligence… there are real human engagement risks that are an opportunity for design. When you’re designing a self-driving car, you can’t just let the person go to sleep unless the car is fully [automated] and every other car on the road is self-driving. If there are humans behind the wheel, you need to have a feedback loop system—something that’s going to happen [in case] the algorithm is wrong. If you don’t have that designed, there’s going to be a large human engagement risk that a car is going to run over somebody who’s [for example] pushing a bike up a hill[...] Why? The car could not calculate the right speed and pace of a person pushing their bike. It had the speed and pace of a person walking, the speed and pace of a person on a bike, but not the two together. Algorithms will be wrong, right?” - Ovetta Sampson (39:42) “Model goodness used to be the purview of companies and the data scientists. Think about the first search engines. Their model goodness was [about] 77%. That’s good, right? And then people started seeing photos of apes when [they] typed in ‘black people.’ Companies have to get used to going to their customers in a wide spectrum and asking them when they’re [models or apps are] right and wrong. They can’t take on that burden themselves anymore. Having ethically sourced data input and variables is hard work. If you’re going to use this technology, you need to put into place the governance that needs to be there.” - Ovetta Sampson (44:08)

udging by the number of inbound pitches we get from PR firms, AI is absolutely going to replace most of the work of the analyst some time in the next few weeks. It's just a matter of time until some startup gets enough market traction to make that happen (business tip: niche podcasts are likely not a productive path to market dominance, no matter what Claude from Marketing says). We're skeptical. But that doesn't mean we don't think there are a lot of useful applications of generative AI for the analyst. We do! As Moe posited in this episode, one useful analogy is that thinking of using generative AI effectively is like getting a marketer effectively using MMM when they've been living in an MTA world (it's more nuanced and complicated). Our guest (NOT from a PR firm solicitation!), Martin Broadhurst, agreed: it's dicey to fully embrace generative AI without some understanding of what it's actually doing. Things got a little spicy, but no humans or AI were harmed in the making of the episode. For complete show notes, including links to items mentioned in this episode and a transcript of the show, visit the show page.

Send us a text Welcome to the cozy corner of the tech world where ones and zeros mingle with casual chit-chat. Datatopics Unplugged is your go-to spot for relaxed discussions around tech, news, data, and society.

In this episode, we dive into the world of data storytelling with special guest Angelica Lo Duca, a professor, researcher, and author. Pull up a chair as we explore her journey from programming to teaching, and dive into the principles of turning raw data into compelling stories.

Key topics include:

Angelica’s background: From researcher to professor and published author

Why write a book?: The motivation, process, and why she chooses books over blogs

About the book: Data Storytelling with Altair and Generative AI

Overview of the book: Who it’s for and the key insights it offers

What is data storytelling and how it differs from traditional dashboards and reports

Why Altair? Exploring Altair and Vega-Lite for effective visualizations

Generative AI’s role: How tools like ChatGPT and DALL-E fit into the data storytelling process, and potential risks like bias in AI-generated images

DIKW Pyramid: Moving from raw data to actionable wisdom using the Data-Information-Knowledge-Wisdom framework

Where to buy her books: https://www.amazon.com/stores/Angelica-Lo-Duca/author/B0B5BHD5VF https://www.amazon.com/Become-Great-Data-Storyteller-Change/dp/1394283318 https://www.amazon.com/Data-Storytelling-Altair-Angelica-Duca/dp/1633437922/

Snippet: https://livebook.manning.com/book/data-storytelling-with-altair-and-ai/chapter-10/16

Connect with Angelica on Medium for more articles and insights: https://medium.com/@alod83/about

Generative AI and data are more interconnected than ever. If you want quality in your AI product, you need to be connected to a database with high quality data. But with so many database options and new AI tools emerging, how do you ensure you’re making the right choices for your organization? Whether it’s enhancing customer experiences or improving operational efficiency, understanding the role of your databases in powering AI is crucial. Andi Gutmans is the General Manager and Vice President for Databases at Google. Andi’s focus is on building, managing, and scaling the most innovative database services to deliver the industry’s leading data platform for businesses. Prior to joining Google, Andi was VP Analytics at AWS running services such as Amazon Redshift. Prior to his tenure at AWS, Andi served as CEO and co-founder of Zend Technologies, the commercial backer of open-source PHP. Andi has over 20 years of experience as an open source contributor and leader. He co-authored open source PHP. He is an emeritus member of the Apache Software Foundation and served on the Eclipse Foundation’s board of directors. He holds a bachelor’s degree in computer science from the Technion, Israel Institute of Technology. In the episode, Richie and Andi explore databases and their relationship with AI and GenAI, key features needed in databases for AI, GCP database services, AlloyDB, federated queries in Google Cloud, vector databases, graph databases, practical use cases of AI in databases and much more. Links Mentioned in the Show: GCPConnect with AndiAlloyDB for PostgreSQLCourse: Responsible AI Data ManagementRelated Episode: The Power of Vector Databases and Semantic Search with Elan Dekel, VP of Product at PineconeSign up to RADAR: Forward Edition New to DataCamp? Learn on the go using the DataCamp mobile appEmpower your business with world-class data and AI skills with DataCamp for business

Today, we’re joined by Vineet Jain, Co-Founder & CEO of Egnyte, the #1 cloud content governance platform. We talk about: The generative AI hype cycleStartups focusing on creating value versus increasing their valuationBiggest challenges to implementing an enterprise content management systemHow to boost user adoption of a new content management systemWill on-prem infrastructure eventually disappear?

Summary The rapid growth of generative AI applications has prompted a surge of investment in vector databases. While there are numerous engines available now, Lance is designed to integrate with data lake and lakehouse architectures. In this episode Weston Pace explains the inner workings of the Lance format for table definitions and file storage, and the optimizations that they have made to allow for fast random access and efficient schema evolution. In addition to integrating well with data lakes, Lance is also a first-class participant in the Arrow ecosystem, making it easy to use with your existing ML and AI toolchains. This is a fascinating conversation about a technology that is focused on expanding the range of options for working with vector data. Announcements Hello and welcome to the Data Engineering Podcast, the show about modern data managementImagine catching data issues before they snowball into bigger problems. That’s what Datafold’s new Monitors do. With automatic monitoring for cross-database data diffs, schema changes, key metrics, and custom data tests, you can catch discrepancies and anomalies in real time, right at the source. Whether it’s maintaining data integrity or preventing costly mistakes, Datafold Monitors give you the visibility and control you need to keep your entire data stack running smoothly. Want to stop issues before they hit production? Learn more at dataengineeringpodcast.com/datafold today!Your host is Tobias Macey and today I'm interviewing Weston Pace about the Lance file and table format for column-oriented vector storageInterview IntroductionHow did you get involved in the area of data management?Can you describe what Lance is and the story behind it?What are the core problems that Lance is designed to solve?What is explicitly out of scope?The README mentions that it is straightforward to convert to Lance from Parquet. What is the motivation for this compatibility/conversion support?What formats does Lance replace or obviate?In terms of data modeling Lance obviously adds a vector type, what are the features and constraints that engineers should be aware of when modeling their embeddings or arbitrary vectors?Are there any practical or hard limitations on vector dimensionality?When generating Lance files/datasets, what are some considerations to be aware of for balancing file/chunk sizes for I/O efficiency and random access in cloud storage?I noticed that the file specification has space for feature flags. How has that aided in enabling experimentation in new capabilities and optimizations?What are some of the engineering and design decisions that were most challenging and/or had the biggest impact on the performance and utility of Lance?The most obvious interface for reading and writing Lance files is through LanceDB. Can you describe the use cases that it focuses on and its notable features?What are the other main integrations for Lance?What are the opportunities or roadblocks in adding support for Lance and vector storage/indexes in e.g. Iceberg or Delta to enable its use in data lake environments?What are the most interesting, innovative, or unexpected ways that you have seen Lance used?What are the most interesting, unexpected, or challenging lessons that you have learned while working on the Lance format?When is Lance the wrong choice?What do you have planned for the future of Lance?Contact Info LinkedInGitHubParting Question From your perspective, what is the biggest gap in the tooling or technology for data management today?Links Lance FormatLanceDBSubstraitPyArrowFAISSPineconePodcast EpisodeParquetIcebergPodcast EpisodeDelta LakePodcast EpisodePyLanceHilbert CurvesSIFT VectorsS3 ExpressWekaDataFusionRay DataTorch Data LoaderHNSW == Hierarchical Navigable Small Worlds vector indexIVFPQ vector indexGeoJSONPolarsThe intro and outro music is from The Hug by The Freak Fandango Orchestra / CC BY-SA

coming soon.

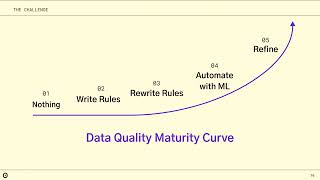

Are you a dbt Cloud customer aiming to fast-track your company’s journey to GenAI and speed up data development? You can't deploy AI applications without trusting the data that feeds them. Rule-based data quality approaches are a dead end that leaves you in a never-ending maintenance cycle. Join us to learn how modern machine learning approaches to data quality overcome the limits of rules and checks, helping you escape the reactive doom loop and unlock high-quality data for your whole company.

Speakers: Amy Reams VP Business Development Anomalo

Jonathan Karon Partner Innovation Lead Anomalo

Read the blog to learn about the latest dbt Cloud features announced at Coalesce, designed to help organizations embrace analytics best practices at scale https://www.getdbt.com/blog/coalesce-2024-product-announcements