Bob Muglia likely needs no introduction. The former CEO of Snowflake led the company during its early, transformational years after a long career at Microsoft and Juniper. Bob recently released the book The Datapreneurs about the arc of innovation in the data industry, starting with the first relational databases all the way to the present craze of LLMs and beyond. In this conversation with Tristan and Julia, Bob shares insights into the future of data engineering and its potential business impact while offering a glimpse into his professional journey. For full show notes and to read 6+ years of back issues of the podcast's companion newsletter, head to https://roundup.getdbt.com. The Analytics Engineering Podcast is sponsored by dbt Labs.

talk-data.com

Topic: Snowflake (550 tagged)

Data and AI are advancing at an unprecedented rate, and while the jury is still out on achieving superintelligent AI systems, the idea of artificial intelligence that can understand and learn anything, an “artificial general intelligence”, is becoming more likely. What does the rise of AI mean for the future of software and work as we know it? How will AI help reinvent most of the ways we interact with the digital and physical world? Bob Muglia is a data technology investor and business executive, former CEO of Snowflake, and past president of Microsoft's Server and Tools Division. As a leader in data & AI, Bob focuses on how innovation and ethical values can merge to shape the data economy's future in the era of AI. He serves as a board director for emerging companies that seek to maximize the power of data to help solve some of the world's most challenging problems. In the episode, Richie and Bob explore the current era of AI and what it means for the future of software. Throughout the episode, they discuss how to approach driving value with large language models, the main challenges organizations face when deploying AI systems, the risks and rewards of fine-tuning LLMs for specific use cases, what the next 12 to 18 months hold for the burgeoning AI ecosystem, the likelihood of superintelligence within our lifetimes, and more. Links from the show: The Datapreneurs by Bob Muglia and Steve Hamm, The Singularity is Near by Ray Kurzweil, Isaac Asimov, Snowflake, Pinecone, Docugami, OpenAI/GPT-4, The Modern Data Stack

ETL data pipelines are the bread and butter of data teams that must design, develop, and author DAGs to accommodate the various business requirements. dbt is becoming one of the most used tools to perform SQL transformations on the Data Warehouse, allowing teams to harness the power of queries at scale. Airflow users are constantly finding new ways to integrate dbt with the Airflow ecosystem and build a single pane of glass where Data Engineers can manage and administer their pipelines. Astronomer Cosmos, an open-source product, has been introduced to integrate Airflow with dbt Core seamlessly. Now you can easily see your dbt pipelines fully integrated on Airflow. You will learn how to integrate dbt Core with Airflow, how to use Cosmos, and how to build data pipelines at scale.

Introduced in Airflow 2.4, Datasets are a foundational feature for authoring modular data pipelines. As DAGs grow to encompass more data sources and more transformation steps, they typically become less efficient and less predictable in the timeliness of their execution. This talk focuses on using Datasets to build more predictable and efficient DAGs by borrowing patterns from microservice architectures. Just as large monolithic applications were decomposed into microservices to deliver more efficient scalability and faster development cycles, micropipelines have the same potential to radically transform data pipeline efficiency and development velocity. Using a simple financial analysis pipeline example, with data aggregation being done in Snowflake and prediction analysis in Spark, this talk outlines how to retain the timeliness of data pipelines while expanding data sets.
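To make the micropipeline idea concrete, here is a minimal, framework-free sketch of data-aware scheduling in plain Python. It is not Airflow's Dataset API: the `Pipeline` and `DatasetScheduler` classes and the dataset names (`raw_trades`, `fx_rates`, and so on) are hypothetical, and the one assumed rule is that a pipeline runs once every dataset it reads has been refreshed since its previous run.

```python
from dataclasses import dataclass, field


@dataclass
class Pipeline:
    """A small pipeline that reads some datasets and refreshes others."""
    name: str
    inputs: frozenset       # datasets that must all be refreshed before a run
    outputs: frozenset      # datasets this pipeline refreshes when it runs
    pending: set = field(default_factory=set)  # inputs refreshed since last run


class DatasetScheduler:
    """Toy scheduler (hypothetical): triggers pipelines on dataset updates."""

    def __init__(self, pipelines):
        self.pipelines = list(pipelines)
        self.run_log = []

    def run(self, pipeline):
        # Record the run, reset its pending inputs, then publish its outputs.
        self.run_log.append(pipeline.name)
        pipeline.pending.clear()
        for dataset in sorted(pipeline.outputs):
            self.publish(dataset)

    def publish(self, dataset):
        # Notify every consumer; run it once all of its inputs are fresh.
        for pipeline in self.pipelines:
            if dataset in pipeline.inputs:
                pipeline.pending.add(dataset)
                if pipeline.pending == pipeline.inputs:
                    self.run(pipeline)


# Hypothetical financial example: aggregation and prediction as micropipelines.
aggregate = Pipeline("aggregate", frozenset({"raw_trades"}), frozenset({"daily_aggregates"}))
load_rates = Pipeline("load_rates", frozenset({"raw_rates"}), frozenset({"fx_rates"}))
predict = Pipeline("predict", frozenset({"daily_aggregates", "fx_rates"}), frozenset({"forecast"}))

scheduler = DatasetScheduler([aggregate, load_rates, predict])
scheduler.publish("raw_trades")  # aggregate runs; predict still waits for fx_rates
scheduler.publish("raw_rates")   # load_rates runs, which then unblocks predict
print(scheduler.run_log)
```

In this toy run the prediction step never polls or waits on a clock: it fires only after both upstream micropipelines have published their outputs.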

Data Engineering with dbt provides a comprehensive guide to building modern, reliable data platforms using dbt and SQL. You'll gain hands-on experience building automated ELT pipelines, using dbt Cloud with Snowflake, and embracing patterns for scalable and maintainable data solutions.

What this book will help me do

Set up and manage a dbt Cloud environment and create reliable ELT pipelines. Integrate Snowflake with dbt to implement robust data engineering workflows. Transform raw data into analytics-ready data using dbt's features and SQL. Apply advanced dbt functionality such as macros and Jinja for efficient coding. Ensure data accuracy and platform reliability with built-in testing and monitoring.

Author(s)

Zagni is a seasoned data engineering professional with a wealth of experience in designing scalable data platforms. Through practical insights and real-world applications, Zagni demystifies complex data engineering practices. Their approachable teaching style makes technical concepts accessible and actionable.

Who is it for?

This book is perfect for data engineers, analysts, and analytics engineers looking to leverage dbt for data platform development. If you're a manager or decision maker interested in fostering efficient data workflows or a professional with basic SQL knowledge aiming to deepen your expertise, this resource will be invaluable.

Summary

Data engineering is all about building workflows, pipelines, systems, and interfaces to provide stable and reliable data. Your data can be stable and wrong, but then it isn't reliable. Confidence in your data is achieved through constant validation and testing. Datafold has invested a lot of time into integrating with the workflow of dbt projects to add early verification that the changes you are making are correct. In this episode Gleb Mezhanskiy shares some valuable advice and insights into how you can build reliable and well-tested data assets with dbt and data-diff.
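As a rough illustration of what diff-based validation checks, the sketch below compares a production copy and a development copy of the same table by primary key and reports added, removed, and changed rows. It is a plain-Python stand-in written for this summary, not the data-diff library's API; the `diff_tables` helper, the `id` key, and the sample rows are all hypothetical.

```python
def diff_tables(prod_rows, dev_rows, key="id"):
    """Compare two lists of row dicts by primary key (hypothetical helper)."""
    prod = {row[key]: row for row in prod_rows}
    dev = {row[key]: row for row in dev_rows}

    # Keys present on only one side are added or removed rows.
    added = sorted(dev.keys() - prod.keys())
    removed = sorted(prod.keys() - dev.keys())

    # For keys on both sides, list the columns whose values differ.
    changed = {}
    for k in sorted(prod.keys() & dev.keys()):
        columns = prod[k].keys() | dev[k].keys()
        differing = sorted(c for c in columns if prod[k].get(c) != dev[k].get(c))
        if differing:
            changed[k] = differing

    return {"added": added, "removed": removed, "changed": changed}


# Made-up sample data: the dev build changes one amount and swaps one row.
prod = [{"id": 1, "amount": 100}, {"id": 2, "amount": 250}, {"id": 3, "amount": 75}]
dev = [{"id": 1, "amount": 100}, {"id": 2, "amount": 260}, {"id": 4, "amount": 40}]

result = diff_tables(prod, dev)
print(result)
```

A report like this, produced before a change is merged, is the kind of early verification the episode describes: it surfaces unintended row-level changes that schema or not-null tests alone would miss.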

Announcements

Hello and welcome to the Data Engineering Podcast, the show about modern data management. RudderStack helps you build a customer data platform on your warehouse or data lake. Instead of trapping data in a black box, they enable you to easily collect customer data from the entire stack and build an identity graph on your warehouse, giving you full visibility and control. Their SDKs make event streaming from any app or website easy, and their extensive library of integrations enables you to automatically send data to hundreds of downstream tools. Sign up free at dataengineeringpodcast.com/rudderstack. Your host is Tobias Macey and today I'm interviewing Gleb Mezhanskiy about how to test your dbt projects with Datafold.

Interview

Introduction

How did you get involved in the area of data management?

Can you describe what Datafold is and what's new since we last spoke? (July 2021 and July 2022 about data-diff)

What are the roadblocks to data testing/validation that you see teams run into most often?

How does the tooling used contribute to/help address those roadblocks?

What are some of the error conditions/failure modes that data-diff can help identify in a dbt project?

What are some examples of tests that need to be implemented by the engineer?

In your experience working with data teams, what typically constitutes the "staging area" for a dbt project? (e.g. separate warehouse, namespaced tables, snowflake data copies, lakefs, etc.)

Given a dbt project that is well tested and has data-diff as part of the validation suite, what are the challenges that teams face in managing the feedback cycle of running those tests?

In application development there is the idea of the "testing pyramid", consisting of unit tests, integration tests, system tests, etc. What are the parallels to that in data projects?

What are the limitations of the data ecosystem that make testing a bigger challenge than it might otherwise be?

Beyond test execution, what are the other aspects of data health that need to be included in the development and deployment workflow of dbt projects? (e.g. freshness, time to delivery, etc.)

What are the most interesting, innovative, or unexpected ways that you have seen Datafold and/or data-diff used for testing dbt projects?

What are the most interesting, unexpected, or challenging lessons that you have learned while working on dbt testing internally or with your customers?

When is Datafold/data-diff the wrong choice for dbt projects?

What do you have planned for the future of Datafold?

Contact Info

Closing Announcements

Thank you for listening! Don't forget to check out our other shows. Podcast.init covers the Python language, its community, and the innovative ways it is being used. The Machine Learning Podcast helps you go from idea to production with machine learning. Visit the site to subscribe to the show, sign up for the mailing list, and read the show notes. If you've learned something or tried out a project from the show then tell us about it! Email [email protected] with your story. To help other people find the show please leave a review on Apple Podcasts and tell your friends and co-workers.

Parting Question

From your perspective, what is the biggest gap in the tooling or technology for data management today?

Links

Datafold

Podcast Episode

data-diff

Podcast Episode

db

Summary

A significant portion of the time spent by data engineering teams is on managing the workflows and operations of their pipelines. DataOps has arisen as a parallel set of practices to that of DevOps teams as a means of reducing wasted effort. Agile Data Engine is a platform designed to handle the infrastructure side of the DataOps equation, as well as providing the insights that you need to manage the human side of the workflow. In this episode Tevje Olin explains how the platform is implemented, the features that it provides to reduce the amount of effort required to keep your pipelines running, and how you can start using it in your own team.

Announcements

Hello and welcome to the Data Engineering Podcast, the show about modern data management. RudderStack helps you build a customer data platform on your warehouse or data lake. Instead of trapping data in a black box, they enable you to easily collect customer data from the entire stack and build an identity graph on your warehouse, giving you full visibility and control. Their SDKs make event streaming from any app or website easy, and their extensive library of integrations enables you to automatically send data to hundreds of downstream tools. Sign up free at dataengineeringpodcast.com/rudderstack. Your host is Tobias Macey and today I'm interviewing Tevje Olin about Agile Data Engine, a platform that combines data modeling, transformations, continuous delivery and workload orchestration to help you manage your data products and the whole lifecycle of your warehouse.

Interview

Introduction

How did you get involved in the area of data management?

Can you describe what Agile Data Engine is and the story behind it?

What are some of the tools and architectures that an organization might be able to replace with Agile Data Engine?

How does the unified experience of Agile Data Engine change the way that teams think about the lifecycle of their data?

What are some of the types of experiments that are enabled by reduced operational overhead?

What does CI/CD look like for a data warehouse?

How is it different from CI/CD for software applications?

Can you describe how Agile Data Engine is architected?

How have the design and goals of the system changed since you first started working on it?

What are the components that you needed to develop in-house to enable your platform goals?

What are the changes in the broader data ecosystem that have had the most influence on your product goals and customer adoption?

Can you describe the workflow for a team that is using Agile Data Engine to power their business analytics?

What are some of the insights that you generate to help your customers understand how to improve their processes or identify new opportunities?

In your "about" page it mentions the unique approaches that you take for warehouse automation. How do your practices differ from the rest of the industry?

How have changes in the adoption/implementation of ML and AI impacted the ways that your customers exercise your platform?

What are the most interesting, innovative, or unexpected ways that you have seen the Agile Data Engine platform used?

What are the most interesting, unexpected, or challenging lessons that you have learned while working on Agile Data Engine?

When is Agile Data Engine the wrong choice?

What do you have planned for the future of Agile Data Engine?

Guest Contact Info

Parting Question

From your perspective, what is the biggest gap in the tooling or technology for data management today?

About Agile Data Engine

Agile Data Engine unlocks the potential of your data to drive business value - in a rapidly changing world. Agile Data Engine is a DataOps Management platform for designing, deploying, operating and managing data products, and managing the whole lifecycle of a data warehouse. It combines data modeling, transformations, continuous delivery and workload orchestration into the same platform.

Links

Agile Data Engine, Bill Inmon, Ralph Kimball, Snowflake, Redshift, BigQuery, Azure Synapse, Airflow

The intro and outro music is from The Hug by The Freak Fandango Orchestra / CC BY-SA

Sponsored By:

Rudderstack:

RudderStack provides all your customer data pipelines in one platform. You can collect, transform, and route data across your entire stack with its event streaming, ETL, and reverse ETL pipelines.

RudderStack’s warehouse-first approach means it does not store sensitive information, and it allows you to leverage your existing data warehouse/data lake infrastructure to build a single source of truth for every team.

RudderStack also supports real-time use cases. You can implement RudderStack SDKs once, then automatically send events to your warehouse and 150+ business tools, and you’ll never have to worry about API changes again.

Visit dataengineeringpodcast.com/rudderstack to sign up for free today, and snag a free T-Shirt just for being a Data Engineering Podcast listener.

Support Data Engineering Podcast

This comprehensive guide, "Data Modeling with Snowflake", is your go-to resource for mastering the art of efficient data modeling tailored to the capabilities of the Snowflake Data Cloud. In this book, you will learn how to design agile and scalable data solutions by effectively leveraging Snowflake's unique architecture and advanced features.

What this book will help me do

Understand the core principles of data modeling and how they apply to Snowflake's cloud-native environment. Learn to use Snowflake's features, such as time travel and zero-copy cloning, to create efficient data solutions. Gain hands-on experience with SQL recipes that outline practical approaches to transforming and managing Snowflake data. Discover techniques for modeling structured and semi-structured data for real-world business needs. Learn to integrate universal modeling frameworks like Star Schema and Data Vault into Snowflake implementations for scalability and maintainability.

Author(s)

The author, Serge Gershkovich, is a seasoned expert in database design and Snowflake architecture. With years of experience in the data management field, Serge has dedicated himself to making complex technical subjects approachable to professionals at all levels. His insights in this book are informed by practical applications and real-world experience.

Who is it for?

This book is targeted at data professionals, ranging from newcomers to database design to seasoned SQL developers seeking to specialize in Snowflake. If you are looking to understand and apply data modeling practices effectively within Snowflake's architecture, this book is for you. Whether you're refining your modeling skills or getting started with Snowflake, it provides the practical knowledge you need to succeed.

This project-oriented book gives you a hands-on approach to designing, developing, and templating your Snowflake platform delivery. Written by seasoned Snowflake practitioners, the book is full of practical guidance and advice to accelerate and mature your Snowflake journey. Working through the examples helps you develop the skill, knowledge, and expertise to expand your organization’s core Snowflake capability and prepare for later incorporation of additional Snowflake features as they become available. Your Snowflake platform will be resilient, fit for purpose, extensible, and guarantee rapid, consistent, and repeatable, pattern-based deployments ready for application delivery. When a Snowflake account is delivered there are no controls, guard rails, external monitoring, nor governance mechanisms baked in. From a large organization perspective, this book explains how to deliver your core Snowflake platform in the form of a Landing Zone, a consistent, templated approach that assumes familiarity with Snowflake core concepts and principles. The book also covers Snowflake from a governance perspective and addresses the “who can see what?” question, satisfying requirements to know for certain that your Snowflake accounts properly adhere to your organization’s data usage policies. The book provides a proven pathway to success by equipping you with skill, knowledge, and expertise to accelerate Snowflake adoption within your organization. The patterns delivered within this book are used for production deployment, and are proven in real-world use. Examples in the book help you succeed in an environment in which governance policies, processes, and procedures oversee and control every aspect of your Snowflake platform development and delivery life cycle. Your environment may not be so exacting, but you’ll still benefit from the rigorous and demanding perspective this book’s authors bring to the table. 
The book shows you how to leverage what you already know and adds what you don’t know, all applied to deliver your Snowflake accounts. You will know how to position your organization to deliver consistent Snowflake accounts that are prepared and ready for immediate application development.

What You Will Learn

Create a common, consistent deployment framework for Snowflake in your organization

Enable rapid up-skill and adoption of Snowflake, leveraging the benefits of cloud platforms

Develop a deep understanding of Snowflake administration and configuration

Implement consistent, approved design patterns that reduce account provisioning times

Manage data consumption by monitoring and controlling access to datasets

Who This Book Is For

Systems administrators charged with delivering a common implementation pattern for all Snowflake accounts within an organization; senior managers looking to simplify the delivery of complex technology into their existing infrastructure; developers seeking to understand guard rails, monitoring, and controls to ensure that Snowflake meets their organization's requirements; sales executives needing to understand how their data usage can be monitored and gain insights into how their data is being consumed; governance colleagues wanting to know who can see each data set, and wanting to identify toxic role combinations, and have confidence that their Snowflake accounts are properly provisioned

Summary

All of the advancements in our technology are based around the principles of abstraction. These are valuable until they break down, which is an inevitable occurrence. In this episode the host Tobias Macey shares his reflections on recent experiences where the abstractions leaked and some observations on how to deal with that situation in a data platform architecture.

Announcements

Hello and welcome to the Data Engineering Podcast, the show about modern data management. RudderStack helps you build a customer data platform on your warehouse or data lake. Instead of trapping data in a black box, they enable you to easily collect customer data from the entire stack and build an identity graph on your warehouse, giving you full visibility and control. Their SDKs make event streaming from any app or website easy, and their extensive library of integrations enables you to automatically send data to hundreds of downstream tools. Sign up free at dataengineeringpodcast.com/rudderstack. Your host is Tobias Macey and today I'm sharing some thoughts and observations about abstractions and impedance mismatches from my experience building a data lakehouse with an ELT workflow.

Interview

Introduction

impact of community tech debt

hive metastore

new work being done but not widely adopted

tensions between automation and correctness

data type mapping

integer types

complex types

naming things (keys/column names from APIs to databases)

disaggregated databases - pros and cons

flexibility and cost control

not as much tooling invested vs. Snowflake/BigQuery/Redshift

data modeling

dimensional modeling vs. answering today's questions

What are the most interesting, unexpected, or challenging lessons that you have learned while working on your data platform?

When is ELT the wrong choice?

What do you have planned for the future of your data platform?

Contact Info

Parting Question

From your perspective, what is the biggest gap in the tooling or technology for data management today?

Links

dbt

Airbyte

Podcast Episode

Dagster

Podcast Episode

Trino

Podcast Episode

ELT, Data Lakehouse, Snowflake, BigQuery, Redshift, Technical Debt, Hive Metastore, AWS Glue

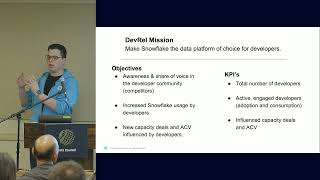

ABOUT THE TALK: In this talk, Felipe Hoffa and Daniel Myers present an honest take on their wildly different approaches to Developer Relations and how both have been critical in building Snowflake's world-class developer community and ecosystem from the ground up. Learn how they define DevRel KPIs and metrics, the daily challenges they face, and the lessons they have learned along the way. You might even get inspired to become a Developer Advocate after understanding the different ways to engage with the Snowflake community and what's next for Snowflake Developer Relations.

ABOUT THE SPEAKERS: Felipe Hoffa is the Data Cloud Advocate at Snowflake. Previously he worked at Google, as a Developer Advocate on Data Analytics for BigQuery, after joining as a Software Engineer. He moved from Chile to San Francisco in 2011. His goal is to inspire developers and data scientists around the world to analyze and understand their data in ways they never could before.

Daniel Myers is in Developer Relations and previously held roles at different companies, including Google, Cisco, and Fujitsu. In addition, he led and founded multiple startups.

ABOUT DATA COUNCIL: Data Council (https://www.datacouncil.ai/) is a community and conference series that provides data professionals with the learning and networking opportunities they need to grow their careers.

Make sure to subscribe to our channel for the most up-to-date talks from technical professionals on data related topics including data infrastructure, data engineering, ML systems, analytics and AI from top startups and tech companies.

FOLLOW DATA COUNCIL: Twitter: https://twitter.com/DataCouncilAI LinkedIn: https://www.linkedin.com/company/datacouncil-ai/

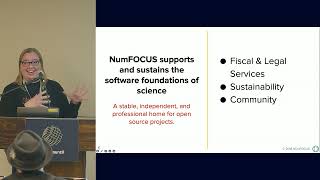

ABOUT THE TALK: This talk walks you through the structure of NumFOCUS, its programs, challenges, and vision for a sustainable, inclusive, and vibrant open source community. It dives deep into sustainability endeavors, including diversity and inclusion, and how you can get involved in the NumFOCUS community.

ABOUT THE SPEAKER: Dr. Katrina Riehl is President of the Board of Directors at NumFOCUS, Head of the Streamlit Data Team at Snowflake, and Adjunct Lecturer at Georgetown University. For almost two decades, Katrina has worked extensively in the fields of scientific computing, machine learning, data mining, and visualization. Most notably, she has helped lead data science efforts at the University of Texas Austin Applied Research Laboratory, Apple, HomeAway (now, Vrbo), and Cloudflare.

ABOUT THE TALK: The power to gather, analyze, and quickly act on real-time bidding data is critical for advertisers and publishers. A data platform that supports real-time bidding empowers these participants to obtain insights from the huge amounts of data generated by programmatic advertising.

Learn how our Beeswax data platform captures real-time information about bids and impressions and provides feedback to advertisers, enabling them to make data-driven decisions for optimal results. It is built on an event-based architecture, leveraging AWS Kinesis and Snowflake's Snowpipe, that is capable of processing bid requests at a massive scale - around half a million QPS in real-time! We also talk about how the platform evolved over time and how we've built the platform and monitoring infrastructure to enable sustained growth.

ABOUT THE SPEAKER: Margi Dubal is a Director of Data Engineering at Freewheel, a Comcast Company. She currently leads various data teams to build scalable, reliable, and high-quality data solutions. Prior to joining Freewheel, Margi held data engineering management positions at Paperless Post, Amplify and Adknowledge Inc.

ABOUT THE TALK: Learn all about cost and performance optimization in Snowflake. This talk dives deep into Snowflake’s architecture and billing model, covering key concepts like virtual warehouses, micro-partitioning, the lifecycle of a query and Snowflake’s two-tiered cache. It then goes in depth on the most important optimization strategies, like virtual warehouse configuration, table clustering and query writing best practices. Throughout the talk, code snippets and other resources are shared to help you get the most out of Snowflake.
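For a sense of how the billing model drives warehouse configuration choices, here is a small arithmetic sketch in Python. It assumes the standard warehouse rates as commonly documented (credits per hour doubling with each size step from X-Small at 1) and per-second billing with a 60-second minimum each time a warehouse starts or resumes. The function names and the price per credit are hypothetical, and the sketch ignores storage, cloud services, and multi-cluster charges.

```python
# Credits per hour for standard warehouses (assumed to double with each size).
CREDITS_PER_HOUR = {"X-Small": 1, "Small": 2, "Medium": 4, "Large": 8, "X-Large": 16}

# Assumed minimum billed time each time a warehouse starts or resumes.
MIN_BILLED_SECONDS = 60


def credits_used(size, running_seconds):
    """Credits for one continuous stretch of warehouse uptime (not query time)."""
    billed_seconds = max(running_seconds, MIN_BILLED_SECONDS)
    return CREDITS_PER_HOUR[size] * billed_seconds / 3600


def estimated_cost(size, running_seconds, price_per_credit):
    """Cost in your currency; price_per_credit depends on your contract."""
    return credits_used(size, running_seconds) * price_per_credit


# A 30-second burst on a Medium warehouse is billed as a full 60 seconds.
short_burst = credits_used("Medium", 30)

# One hour on a Large warehouse.
one_hour = credits_used("Large", 3600)

# Hypothetical price of 3.00 per credit, 30 minutes on a Small warehouse.
half_hour_cost = estimated_cost("Small", 1800, price_per_credit=3.0)

print(round(short_burst, 4), one_hour, half_hour_cost)
```

Because uptime rather than query time is what gets billed under these assumptions, settings such as auto-suspend and warehouse size tend to matter as much as individual query tuning.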

ABOUT THE SPEAKERS: Niall Woodward and Ian Whitestone are the co-founders of SELECT, a tool to help Snowflake users optimize their Snowflake cost & performance.

Niall Woodward is well known in the data community for creating and contributing to open source packages.

Ian Whitestone previously led data teams at Shopify and Capital One. At Shopify, Ian spearheaded the efforts to reduce their data warehouse spend by over 50%.

The name WALD-stack stems from the four technologies it is composed of, i.e. a cloud-computing Warehouse like Snowflake or Google BigQuery, the open-source data integration engine Airbyte, the open-source full-stack BI platform Lightdash, and the open-source data transformation tool dbt.

Using a Formula 1 Grand Prix dataset, I will give an overview of how these four tools complement each other perfectly for analytics tasks in an ELT approach. You will learn the specific uses of each tool as well as their particular features. My talk is based on a full tutorial, which you can find at waldstack.org.

Snowflake as a data platform is the core data repository of many large organizations.

With the introduction of Snowflake's Snowpark for Python, Python developers can now collaborate and build on one platform with a secure Python sandbox, providing developers with dynamic scalability & elasticity as well as security and compliance.

In this talk I'll explain the core concepts of Snowpark for Python and how they can be used for large scale feature engineering and data science.

Master the intricacies of Snowflake and prepare for the SnowPro Advanced Architect Certification exam with this comprehensive study companion. This book provides robust and effective study tools to help you prepare for the exam and is also designed for those who are interested in learning the advanced features of Snowflake. The practical examples and in-depth background on theory in this book help you unleash the power of Snowflake in building a high-performance system. The best practices demonstrated in the book help you use Snowflake more powerfully and effectively as a data warehousing and analytics platform. Reading this book and reviewing the concepts will help you gain the knowledge you need to take the exam. The book guides you through a study of the different domains covered on the exam: Accounts and Security, Snowflake Architecture, Data Engineering, and Performance Optimization. You’ll also be well positioned to apply your newly acquired practical skills to real-world Snowflake solutions. You will have a deep understanding of Snowflake to help you take full advantage of Snowflake’s architecture to deliver value analytics insight to your business. 

What You Will Learn

Gain the knowledge you need to prepare for the exam

Review in-depth theory on Snowflake to help you build high-performance systems

Broaden your skills as a data warehouse designer to cover the Snowflake ecosystem

Optimize performance and costs associated with your use of the Snowflake data platform

Share data securely both inside your organization and with external partners

Apply your practical skills to real-world Snowflake solutions

Who This Book Is For

Anyone who is planning to take the SnowPro Advanced Architect Certification exam, those who want to move beyond traditional database technologies and build their skills to design and architect solutions using Snowflake services, and veteran database professionals seeking an on-the-job reference to understand one of the newest and fastest-growing technologies in data

Summary

The data ecosystem has been building momentum for several years now. As a venture capital investor, Matt Turck has been trying to keep track of the main trends and has compiled his findings into the MAD (ML, AI, and Data) landscape reports each year. In this episode he shares his experiences building those reports and the perspective he has gained from the exercise.

Announcements

Hello and welcome to the Data Engineering Podcast, the show about modern data management.

Businesses that adapt well to change grow 3 times faster than the industry average. As your business adapts, so should your data. RudderStack Transformations lets you customize your event data in real-time with your own JavaScript or Python code. Join The RudderStack Transformation Challenge today for a chance to win a $1,000 cash prize just by submitting a Transformation to the open-source RudderStack Transformation library. Visit dataengineeringpodcast.com/rudderstack today to learn more.

Your host is Tobias Macey and today I'm interviewing Matt Turck about his annual report on the Machine Learning, AI, & Data landscape and the insights around data infrastructure that he has gained in the process.

Interview

Introduction

How did you get involved in the area of data management?

Can you describe what the MAD landscape report is and the story behind it?

At a high level, what is your goal in the compilation and maintenance of your landscape document? What are your guidelines for what to include in the landscape?

As the data landscape matures, how have you seen that influence the types of projects/companies that are founded?

What are the product categories that were only viable when capital was plentiful and easy to obtain? What are the product categories that you think will be swallowed by adjacent concerns, and which are likely to consolidate to remain competitive?

The rapid growth and proliferation of data tools helped establish the "Modern Data Stack" as a de facto architectural paradigm. As we move into this phase of contraction, what are your predictions for how the "Modern Data Stack" will evolve?

Is there a different architectural paradigm that you see as growing to take its place?

How has your presentation and the types of information that you collate in the MAD landscape evolved since you first started it?

What are the most interesting, innovative, or unexpected product and positioning approaches that you have seen while tracking data infrastructure as a VC and maintainer of the MAD landscape?

What are the most interesting, unexpected, or challenging lessons that you have learned while working on the MAD landscape over the years?

What do you have planned for future iterations of the MAD landscape?

Contact Info

Website
@mattturck on Twitter
MAD Landscape Comments Email

Parting Question

From your perspective, what is the biggest gap in the tooling or technology for data management today?

Closing Announcements

Thank you for listening! Don't forget to check out our other shows. Podcast.init covers the Python language, its community, and the innovative ways it is being used. The Machine Learning Podcast helps you go from idea to production with machine learning. Visit the site to subscribe to the show, sign up for the mailing list, and read the show notes. If you've learned something or tried out a project from the show then tell us about it! Email [email protected] with your story. To help other people find the show, please leave a review on Apple Podcasts and tell your friends and co-workers.

Links

MAD Landscape
FirstMark Capital
Bayesian Learning
AI Winter
Databricks
Cloud Native Landscape
LUMA Scape
Hadoop Ecosystem
Modern Data Stack
Reverse ETL
Generative AI
dbt
Transform (Podcast Episode)
Snowflake IPO
Dataiku
Iceberg (Podcast Episode)
Hudi (Podcast Episode)
DuckDB (Podcast Episode)
Trino
Y42 (Podcast Episode)
Mozart Data (Podcast Episode)
Keboola
MPP Database

The intro and outro music is f

Brad Culberson is a Principal Architect in the Field CTO's office at Snowflake. Niall Woodward is a co-founder of SELECT, a startup providing optimization and spend management software for Snowflake customers. In this conversation with Tristan and Julia, Brad and Niall discuss all things cost optimization: cloud vs on-prem, measuring ROI, and tactical ways to get more out of your budget. For full show notes and to read 6+ years of back issues of the podcast's companion newsletter, head to https://roundup.getdbt.com. The Analytics Engineering Podcast is sponsored by dbt Labs.