In an era of big data, where large volumes of information are collected and analyzed for insights into human behavior, data privacy has become a hot topic. Because much of this information is private and can be misused once leaked, not all data can be released for research. This talk discusses Differential Privacy, a cutting-edge privacy technique that aims to protect each individual's privacy: how it is employed to minimize the risks of working with private data, its applications in various domains, and how Python eases the task of employing it in our models with PyDP.
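To make the idea concrete, here is a minimal sketch of the Laplace mechanism, one common way to achieve differential privacy for a counting query. It uses only the Python standard library and does not use the PyDP API. The function names, the `ages` data, and the chosen `epsilon` are illustrative assumptions, and the standard `random` module is not suitable for production privacy guarantees.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    # expovariate takes a rate (1 / mean); the difference of two independent
    # exponentials with mean `scale` follows a Laplace(0, scale) distribution.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the items matching `predicate`.

    A counting query has sensitivity 1 (adding or removing one person changes
    the count by at most 1), so the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical data: ages of survey respondents.
ages = [23, 35, 41, 29, 52, 67, 38, 45]
rng = random.Random(0)  # fixed seed only so the sketch is repeatable
noisy = private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng)
print(f"noisy count of respondents aged 40+: {noisy:.2f}")
```

A smaller `epsilon` adds more noise and gives stronger privacy. A larger `epsilon` keeps the answer closer to the true count of 4.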

talk-data.com

Topic: Big Data (1217 tagged)

Get ready to level up your big data processing skills! Join us for an introductory talk on Apache Spark, the distributed computing system used by tech giants like Netflix and Amazon. We'll cover PySpark DataFrames and how to use them. Whether you're a Python developer new to big data or looking to explore new technologies, this talk is for you. You'll gain foundational knowledge about Apache Spark and its capabilities, and learn how to leverage DataFrames and SQL APIs to efficiently process large amounts of data. Don't miss out on this opportunity to up your big data game!

We talked about:

- Rosona’s background
- How mathematics knowledge helps in industry
- What is industrial data?
- Setting up an industrial process using blue paint
- Internet companies’ data vs industrial data
- Explaining industrial processes using packing peanuts
- Why productive industry needs data
- Measuring product qualities
- How data specialists use industrial data
- Defining and measuring sustainability
- Using data in reactionary measures to changing regulations
- Types of industrial data
- Solving problems and optimizing with industrial data
- Industrial solvers
- Tiny data vs Big data in productive industry
- The advantages of coming from academia into productive industry
- Materials and resources for industrial data
- Women in industry
- Why Rosona decided to shift to industrial data

Links:

Kaggle dataset: https://www.kaggle.com/datasets/paresh2047/uci-semcom

This book illustrates how data can be useful in solving business problems. It explores various analytics techniques for using data to discover hidden patterns and relationships, predict future outcomes, optimize efficiency and improve the performance of organizations. You’ll learn how to analyze data by applying concepts of statistics, probability theory, and linear algebra. In this new edition, both R and Python are used to demonstrate these analyses. Practical Business Analytics Using R and Python also features new chapters covering databases, SQL, Neural networks, Text Analytics, and Natural Language Processing.

Part one begins with an introduction to analytics, the foundations required to perform data analytics, and explains different analytics terms and concepts such as databases and SQL, basic statistics, probability theory, and data exploration. Part two introduces predictive models using statistical machine learning and discusses concepts like regression, classification, and neural networks. Part three covers two of the most popular unsupervised learning techniques, clustering and association mining, as well as text mining and natural language processing (NLP). The book concludes with an overview of big data analytics, R and Python essentials for analytics including libraries such as pandas and NumPy. Upon completing this book, you will understand how to improve business outcomes by leveraging R and Python for data analytics.

What You Will Learn

- Master the mathematical foundations required for business analytics
- Understand various analytics models and data mining techniques such as regression, supervised machine learning algorithms for modeling, unsupervised modeling techniques, and how to choose the correct algorithm for analysis in any given task
- Use R and Python to develop descriptive models, predictive models, and optimize models
- Interpret and recommend actions based on analytical model outcomes

Who This Book Is For

Software professionals and developers, managers, and executives who want to understand and learn the fundamentals of analytics using R and Python.

Summary

This podcast started almost exactly six years ago, and the technology landscape was much different than it is now. In that time there have been a number of generational shifts in how data engineering is done. In this episode I reflect on some of the major themes and take a brief look forward at some of the upcoming changes.

Announcements

Hello and welcome to the Data Engineering Podcast, the show about modern data management. Your host is Tobias Macey, and today I'm reflecting on the major trends in data engineering over the past 6 years.

Interview

- Introduction
- 6 years of running the Data Engineering Podcast
- Around the first time that data engineering was discussed as a role
  - Followed on from hype about "data science"
- Hadoop era
- Streaming
- Lambda and Kappa architectures
  - Not really referenced anymore
- "Big Data" era of capture everything has shifted to focusing on data that presents value
  - Regulatory environment increases risk, better tools introduce more capability to understand what data is useful
- Data catalogs
  - Amundsen and Alation
- Orchestration engine
  - Oozie, etc. -> Airflow and Luigi -> Dagster, Prefect, Lyft, etc.
  - Orchestration is now a part of most vertical tools
- Cloud data warehouses
- Data lakes
- DataOps and MLOps
- Data quality to data observability
- Metadata for everything
  - Data catalog -> data discovery -> active metadata
- Business intelligence
  - Read only reports to metric/semantic layers
  - Embedded analytics and data APIs
- Rise of ELT
  - dbt
  - Corresponding introduction of reverse ETL
- What are the most interesting, unexpected, or challenging lessons that you have learned while working on running the podcast?
- What do you have planned for the future of the podcast?

Parting Question

From your perspective, what is the biggest gap in the tooling or technology for data management today?

Closing Announcements

Thank you for listening! Don't forget to check out our other shows. Podcast.init covers the Python language, its community, and the innovative ways it is being used. The Machine Learning Podcast helps you go from idea to production with machine learning. Visit the site to subscribe to the show, sign up for the mailing list, and read the show notes. If you've learned something or tried out a project from the show then tell us about it! Email [email protected] with your story. To help other people find the show, please leave a review on Apple Podcasts and tell your friends and co-workers.

The intro and outro music is from The Hug by The Freak Fandango Orchestra / CC BY-SA

Sponsored By:

Materialize:

Looking for the simplest way to get the freshest data possible to your teams? Because let's face it: if real-time were easy, everyone would be using it. Look no further than Materialize, the streaming database you already know how to use.

Materialize’s PostgreSQL-compatible interface lets users leverage the tools they already use, with unsurpassed simplicity enabled by full ANSI SQL support. Delivered as a single platform with the separation of storage and compute, strict-serializability, active replication, horizontal scalability and workload isolation — Materialize is now the fastest way to build products with streaming data, drastically reducing the time, expertise, cost and maintenance traditionally associated with implementation of real-time features.

Sign up now for early access to Materialize and get started with the power of streaming data with the same simplicity and low implementation cost as batch cloud data warehouses.

Go to materialize.com

Support Data Engineering Podcast

More organizations than ever understand the importance of data lake architectures for deriving value from their data. Building a robust, scalable, and performant data lake remains a complex proposition, however, with a buffet of tools and options that need to work together to provide a seamless end-to-end pipeline from data to insights. This book provides a concise yet comprehensive overview on the setup, management, and governance of a cloud data lake. Author Rukmani Gopalan, a product management leader and data enthusiast, guides data architects and engineers through the major aspects of working with a cloud data lake, from design considerations and best practices to data format optimizations, performance optimization, cost management, and governance.

- Learn the benefits of a cloud-based big data strategy for your organization
- Get guidance and best practices for designing performant and scalable data lakes
- Examine architecture and design choices, and data governance principles and strategies
- Build a data strategy that scales as your organizational and business needs increase
- Implement a scalable data lake in the cloud
- Use cloud-based advanced analytics to gain more value from your data

FUZZY COMPUTING IN DATA SCIENCE This book comprehensively explains how to use various fuzzy-based models to solve real-time industrial challenges. The book provides information about fundamental aspects of the field and explores the myriad applications of fuzzy logic techniques and methods. It presents basic conceptual considerations and case studies of applications of fuzzy computation. It covers the fundamental concepts and techniques for system modeling, information processing, intelligent system design, decision analysis, statistical analysis, pattern recognition, automated learning, system control, and identification. The book also discusses the combination of fuzzy computation techniques with other computational intelligence approaches such as neural and evolutionary computation. Audience Researchers and students in computer science, artificial intelligence, machine learning, big data analytics, and information and communication technology.

This IBM® Redpaper publication provides an overview of the IBM Elastic Storage® Server (IBM ESS) and IBM Elastic Storage System (also IBM ESS). These scalable, high-performance data and file management solutions are built on IBM Spectrum® Scale technology. Providing reliability, performance, and scalability, IBM ESS can be implemented for a range of diverse requirements. The latest IBM ESS 3500 is the most innovative system that provides investment protection to expand or build a new Global Data Platform and use current storage. The system allows enhanced, non-disruptive upgrades to grow from flash to hybrid or from hard disk drives (HDDs) to hybrid. IBM ESS can scale up or out with two different storage mediums in the environment, and it is ready for technologies like 200 Gb Ethernet or InfiniBand NDR-200 connectivity. This publication helps you to understand the solution and its architecture. It describes ordering the best solution for your environment, planning the installation and integration of the solution into your environment, and correctly maintaining your solution. The solution is created from the following combination of physical and logical components: hardware, operating system, storage, network, and applications. Knowledge of the IBM Elastic Storage Server and IBM Elastic Storage System components is key for planning an environment. This paper is targeted toward technical professionals (consultants, technical support staff, IT Architects, and IT specialists) who are responsible for delivering cost-effective cloud services and big data solutions. The content of this paper can help you to uncover insights in clients' data so that you can take appropriate actions to optimize business results, product development, and scientific discoveries.

While the data lakehouse architecture offers many inherent benefits, it’s still relatively new to the dbt community, which creates hurdles to adoption.

In this talk, you’ll meet Apache Hudi, a platform used by organizations to build planet-scale data platforms according to all of the key design elements required by the lakehouse architecture. You’ll also learn how we’ve personally used Hudi, along with dbt, Spark, Airflow, and many more open-source tools, to build a truly reliable big data streaming lakehouse that cut the latency of our petabyte-scale data pipelines from hours to minutes.

Check the slides here: https://docs.google.com/presentation/d/18dv4TZzRnZQ-IK7xLkYJuind4Bcztkl19zV7b4HTaTU/edit?usp=sharing

Coalesce 2023 is coming! Register for free at https://coalesce.getdbt.com/.

Do you really need a data-driven culture? Maybe not. According to Bill Schmarzo, the CEO’s mandate is to become value-driven, not data-driven. For analytics teams that means one thing: no one cares about your data, they want results! In this episode of Leaders of Analytics, Bill and I explore the economics of data & analytics and how to drive powerful decisions with data. Decisions that turn into business value. Bill is the author of four textbooks and one comic book on generating value with analytics. He is a long-serving business executive, adjunct professor, university educator and global influencer in the sphere of big data, digital transformation and data & analytics leadership. In this episode of Leaders of Analytics, we discuss:

- Why Bill has split his career between corporate leadership and education
- What value engineering is and how it pertains to data and analytics
- How to determine the economic value of data and analytics
- Why data management is the single most important business discipline in the 21st century, and much more.

Bill's website: https://deanofbigdata.com/
Bill on LinkedIn: https://www.linkedin.com/in/schmarzo/
Bill on Twitter: https://twitter.com/schmarzo

Summary

For any business that wants to stay in operation, the most important thing they can do is understand their customers. American Express has invested substantial time and effort in their Customer 360 product to achieve that understanding. In this episode Purvi Shah, the VP of Enterprise Big Data Platforms at American Express, explains how they have invested in the cloud to power this visibility and the complex suite of integrations they have built and maintained across legacy and modern systems to make it possible.

Announcements

- Hello and welcome to the Data Engineering Podcast, the show about modern data management.
- When you’re ready to build your next pipeline, or want to test out the projects you hear about on the show, you’ll need somewhere to deploy it, so check out our friends at Linode. With their new managed database service you can launch a production ready MySQL, Postgres, or MongoDB cluster in minutes, with automated backups, 40 Gbps connections from your application hosts, and high throughput SSDs. Go to dataengineeringpodcast.com/linode today and get a $100 credit to launch a database, create a Kubernetes cluster, or take advantage of all of their other services. And don’t forget to thank them for their continued support of this show!
- You wake up to a Slack message from your CEO, who’s upset because the company’s revenue dashboard is broken. You’re told to fix it before this morning’s board meeting, which is just minutes away. Enter Metaplane, the industry’s only self-serve data observability tool. In just a few clicks, you identify the issue’s root cause, conduct an impact analysis, and save the day. Data leaders at Imperfect Foods, Drift, and Vendr love Metaplane because it helps them catch, investigate, and fix data quality issues before their stakeholders ever notice they exist. Setup takes 30 minutes. You can literally get up and running with Metaplane by the end of this podcast. Sign up for a free-forever plan at dataengineeringpodcast.com/metaplane, or try out their most advanced features with a 14-day free trial. Mention the podcast to get a free "In Data We Trust World Tour" t-shirt.
- RudderStack helps you build a customer data platform on your warehouse or data lake. Instead of trapping data in a black box, they enable you to easily collect customer data from the entire stack and build an identity graph on your warehouse, giving you full visibility and control. Their SDKs make event streaming from any app or website easy, and their state-of-the-art reverse ETL pipelines enable you to send enriched data to any cloud tool. Sign up free… or just get the free t-shirt for being a listener of the Data Engineering Podcast at dataengineeringpodcast.com/rudder.
- Data teams are increasingly under pressure to deliver. According to a recent survey by Ascend.io, 95% in fact reported being at or over capacity. With 72% of data experts reporting demands on their team going up faster than they can hire, it’s no surprise they are increasingly turning to automation. In fact, while only 3.5% report having current investments in automation, 85% of data teams plan on investing in automation in the next 12 months. 85%!!! That’s where our friends at Ascend.io come in. The Ascend Data Automation Cloud provides a unified platform for data ingestion, transformation, orchestration, and observability. Ascend users love its declarative pipelines, powerful SDK, elegant UI, and extensible plug-in architecture, as well as its support for Python, SQL, Scala, and Java. Ascend automates workloads on Snowflake, Databricks, BigQuery, and open source Spark, and can be deployed in AWS, Azure, or GCP. Go to dataengineeringpodcast.com/ascend and sign up for a free trial. If you’re a data engineering podcast listener, you get credits worth $5,000 when you become a customer.
- Your host is Tobias Macey and today I’m interviewing Purvi Shah about building the Customer 360 data product for American Express and mig

DoorDash was using a data warehouse but found that they needed more data transparency, lower costs, and the ability to handle streaming data as well as batch data. With an engineering team rooted in big data backgrounds at Uber and LinkedIn, they moved to a Lakehouse architecture intuitively, without knowing about the term. In this session, learn more about how they arrived at that architecture, the process of making the move, and the results they have seen. While addressing both data analysts and data scientists from their lakehouse, this session will focus on their machine learning operations, and how their efficiencies are enabling them to tackle more advanced use cases such as NLP and image classification.

Connect with us: Website: https://databricks.com Facebook: https://www.facebook.com/databricksinc Twitter: https://twitter.com/databricks LinkedIn: https://www.linkedin.com/company/data... Instagram: https://www.instagram.com/databricksinc/

- There are unique challenges associated with working with big data for finance (volume of data, disparate storage, variable sharing protocols, etc.)

- Leveraging open source technologies, like Databricks' Delta Sharing, in combination with a flexible data management stack, can allow organizations to be more nimble in testing and deploying more strategies

- Live demonstration of Delta Sharing in combination with Nasdaq Data Fabric

At Mirakl, we empower marketplaces with Artificial Intelligence solutions. Catalog data is an extremely rich source of information about the products of e-commerce sellers and marketplaces, including images, descriptions, brands, prices and attributes (for example, size, gender, material or color). Such large volumes of data are suitable for training multimodal deep learning models and present several technical Machine Learning and MLOps challenges to tackle.

We will dive deep into two key use cases: deduplication and categorization of products. For categorization, the creation of quality multimodal embeddings plays a crucial role and is achieved through experimentation with transfer learning techniques on state-of-the-art models. Finding very similar or almost identical products among millions and millions can be a very difficult problem, and that is where our deduplication algorithm provides a fast and computationally efficient solution.
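As a rough illustration of embedding-based deduplication, the sketch below flags product pairs whose embedding vectors have a high cosine similarity. This is not Mirakl's algorithm: it is a brute-force comparison of every pair, and the product ids, three-dimensional vectors, and `threshold` value are hypothetical. At catalog scale, an approximate nearest-neighbor index would be needed instead of the quadratic loop.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as similar to nothing
    return dot / (norm_a * norm_b)


def find_near_duplicates(embeddings, threshold=0.95):
    """Return pairs of product ids whose embeddings are at least `threshold` similar."""
    ids = sorted(embeddings)
    pairs = []
    # Brute force: compare every unordered pair once (O(n^2) comparisons).
    for i, left in enumerate(ids):
        for right in ids[i + 1:]:
            if cosine_similarity(embeddings[left], embeddings[right]) >= threshold:
                pairs.append((left, right))
    return pairs


# Hypothetical embeddings; real multimodal embeddings have hundreds of dimensions.
products = {
    "sku-1": [0.90, 0.10, 0.30],
    "sku-2": [0.89, 0.11, 0.31],
    "sku-3": [0.10, 0.95, 0.20],
}
print(find_near_duplicates(products))  # [('sku-1', 'sku-2')]
```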

Furthermore we will show how we deal with big volumes of products using robust and efficient pipelines, Spark for distributed and parallel computing, TFRecords to stream and ingest data optimally on multiple machines avoiding memory issues, and MLflow for tracking experiments and metrics of our models.

Apache Kafka is the de facto standard for real-time event streaming, but what do you do if you want to perform user-facing, ad-hoc, real-time analytics too? That's where Apache Pinot comes in.

Apache Pinot is a real-time distributed OLAP datastore, which is used to deliver scalable real-time analytics with low latency. It can ingest data from batch data sources (S3, HDFS, Azure Data Lake, Google Cloud Storage) as well as streaming sources such as Kafka. Pinot is used extensively at LinkedIn and Uber to power many analytical applications such as Who Viewed My Profile, Ad Analytics, Talent Analytics, Uber Eats and many more, serving 100k+ queries per second while ingesting 1 million+ events per second.

Apache Kafka's highly performant, distributed, fault-tolerant, real-time publish-subscribe messaging platform powers big data solutions at Airbnb, LinkedIn, MailChimp, Netflix, the New York Times, Oracle, PayPal, Pinterest, Spotify, Twitter, Uber, Wikimedia Foundation, and countless other businesses.

Come hear from Neha Pawar, Founding Engineer at StarTree and PMC member and committer of Apache Pinot, and Karin Wolok, Head of Developer Community at StarTree, for an introduction to both systems and a view of how they work together.

This talk is about the challenge of building a scalable framework for data scientists and ML engineers that can accommodate hundreds of generic or customer-specific ML models, running both in streaming and batch, and capable of processing 100+ million records per day from social media networks.

The goal has been achieved using Spark and Delta. Our framework is built on clever usage of Delta features such as change data feed, selective merge, and Spark Structured Streaming from and into Delta tables. The data is saved in multiple Delta tables, where the structure of each table reflects a particular step in the whole flow. This brings great efficiency, as the downstream processing does very few transformations, and thus even people without extensive experience writing ML pipelines and jobs can use the framework easily. At the heart of the framework is a series of Spark Structured Streaming jobs that continuously evaluate rules to determine which social media content should be processed by which model. These rules can be updated by users at any time, and the framework needs to adjust the processing automatically. In an environment like this, the ability to track records throughout the whole process and the atomicity of operations are of utmost importance, and Delta tables provide all of this out of the box.
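The rule-evaluation step can be pictured with a small plain-Python sketch. It is not the speakers' framework and uses neither Spark nor Delta: the `Rule` class, the model names, and the record fields are hypothetical, and the sketch only shows the routing idea of deciding which models should process a given record.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    """Pairs a model name with a predicate over a record (hypothetical shape)."""
    model: str
    matches: Callable[[dict], bool]


def route(record, rules):
    """Return the names of the models whose rules match this record."""
    return [rule.model for rule in rules if rule.matches(record)]


# Hypothetical rules; in the talk's setting users could change these at any time.
rules = [
    Rule("sentiment-en", lambda r: r["lang"] == "en"),
    Rule("brand-mentions", lambda r: "acme" in r["text"].lower()),
]

record = {"lang": "en", "text": "Loving my new ACME kettle"}
print(route(record, rules))  # ['sentiment-en', 'brand-mentions']
```

Because `route` reads the rule list on every call, replacing or extending `rules` changes the routing of the next record without any other code changes.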

In the talk we are going to focus on the ideas behind the framework and on efficiently combining Structured Streaming and Delta tables. Key takeaways include some of the lesser-known Delta table features and real-life experiences from building an ML framework based on scalable big data technologies, showing how capable and fast such a solution can be, even with minimal hardware resources.

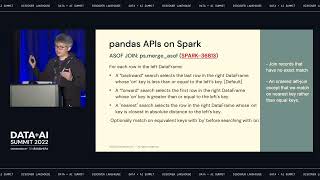

Apache Spark has become the most widely used engine for executing data engineering, data science and machine learning on single-node machines or clusters. The number of monthly Maven downloads of Spark has rapidly increased to 20 million.

We will talk about the higher-level features and improvements in Spark 3.2 and 3.3. The talk also dives deeper into the following features:

- Introducing pandas API on Apache Spark to unify small data API and big data API.
- Completing the ANSI SQL compatibility mode to simplify migration of SQL workloads.
- Productionizing adaptive query execution to speed up Spark SQL at runtime.
- Introducing RocksDB state store to make state processing more scalable.

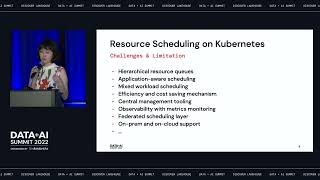

Many ML and big data teams in the open source community are looking to run their workloads in the cloud, and they invariably face a common set of challenges such as multi-tenant cluster management, resource fairness and sharing, gang scheduling and cost-effective infrastructure operations. Kubernetes is the de facto standard platform for running containerized applications in the cloud. However, the default resource scheduler in Kubernetes leaves much to be desired for AI scenarios when running ML/DL training workloads or large-scale data processing jobs for feature engineering.

In this talk, we will share how the community leverages and builds upon Apache YuniKorn to address the unique resource scheduling needs of ML and big data teams.
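Gang scheduling, mentioned above, means that all tasks of a job are placed together or none are. That matters for distributed training, where a partly started job only wastes resources. The sketch below shows the all-or-nothing idea in plain Python. It is not how Apache YuniKorn or Kubernetes implement it: the node names and CPU numbers are hypothetical, and the first-fit heuristic can reject a job that a smarter packing would fit.

```python
def gang_schedule(free_cpus, job_tasks):
    """Place every task of a job, or none of them.

    free_cpus: dict mapping node name -> free CPU count
    job_tasks: list of CPU requests, one per task
    Returns a dict mapping task index -> node name, or None if the whole
    gang cannot be placed by this heuristic.
    """
    remaining = dict(free_cpus)  # work on a copy so a failed attempt reserves nothing
    placement = {}
    # Largest tasks first (first-fit decreasing heuristic).
    for idx in sorted(range(len(job_tasks)), key=lambda i: -job_tasks[i]):
        need = job_tasks[idx]
        node = next((n for n in sorted(remaining) if remaining[n] >= need), None)
        if node is None:
            return None  # one task does not fit, so the whole job is rejected
        remaining[node] -= need
        placement[idx] = node
    return placement


# Hypothetical cluster state and jobs.
cluster = {"node-a": 4, "node-b": 2}
print(gang_schedule(cluster, [2, 2, 2]))  # all three tasks fit
print(gang_schedule(cluster, [3, 3]))     # None: the second task cannot be placed
```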

Data and predictions have permeated sports and our conversations around them since the beginning. Who will win the big game this weekend? How many points will your favorite player score? How much money will be guaranteed in the next free agent contract? One could argue that data-driven decisions in sports started with Moneyball in baseball, in 2003. In the two decades since, data and technology have exploded on the scene. The Texas Rangers are using modern cloud software, such as Databricks, to help make sense of this data and provide actionable information to create a World Series team on the field. From computer vision, pose analytics, and player tracking, to pitch design, base stealing likelihood, and more, come see how the Texas Rangers are using innovative cloud technologies to create action-driven reports from the current sea of Big Data. Finally, this talk will demonstrate how the Texas Rangers use MLflow and the Model Registry inside Databricks to organize their predictive models.

Data-driven personalization is an insurmountable challenge for AT&T’s data science team because of the size of the datasets and the complexity of the data engineering. Often these data preparation tasks not only take several hours or days to complete, but some of them fail to complete at all, affecting productivity.

In this session, the AT&T Data Science team will talk about how RAPIDS Accelerator for Apache Spark and Photon runtime on Databricks can be leveraged to process these extremely large datasets, resulting in improved content recommendation, classification, etc. while reducing infrastructure costs. The team will compare speedups and costs to the regular Databricks runtime Apache Spark environment. The tested datasets range from 2 TB to 50 TB and consist of data collected over 1 to 31 days.

The talk will showcase the results from both RAPIDS accelerator for Apache Spark and Databricks Photon runtime.
