Summary

Building a data platform that is enjoyable and accessible for all of its end users is a substantial challenge. One of the core complexities that needs to be addressed is the fractal set of integrations that need to be managed across the individual components. In this episode Tobias Macey shares his thoughts on the challenges that he is facing as he prepares to build the next set of architectural layers for his data platform to enable a larger audience to start accessing the data being managed by his team.

Announcements

Hello and welcome to the Data Engineering Podcast, the show about modern data management

Introducing RudderStack Profiles. RudderStack Profiles takes the SaaS guesswork and SQL grunt work out of building complete customer profiles so you can quickly ship actionable, enriched data to every downstream team. You specify the customer traits, then Profiles runs the joins and computations for you to create complete customer profiles. Get all of the details and try the new product today at dataengineeringpodcast.com/rudderstack

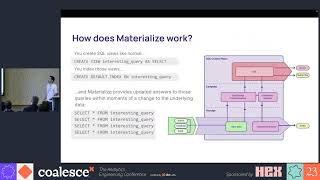

You shouldn't have to throw away the database to build with fast-changing data. You should be able to keep the familiarity of SQL and the proven architecture of cloud warehouses, but swap the decades-old batch computation model for an efficient incremental engine to get complex queries that are always up-to-date. With Materialize, you can! It’s the only true SQL streaming database built from the ground up to meet the needs of modern data products. Whether it’s real-time dashboarding and analytics, personalization and segmentation or automation and alerting, Materialize gives you the ability to work with fresh, correct, and scalable results — all in a familiar SQL interface. Go to dataengineeringpodcast.com/materialize today to get 2 weeks free!

Developing event-driven pipelines is going to be a lot easier - Meet Functions! Memphis functions enable developers and data engineers to build an organizational toolbox of functions to process, transform, and enrich ingested events “on the fly” in a serverless manner using AWS Lambda syntax, without boilerplate, orchestration, error handling, and infrastructure in almost any language, including Go, Python, JS, .NET, Java, SQL, and more. Go to dataengineeringpodcast.com/memphis today to get started!

Data lakes are notoriously complex. For data engineers who battle to build and scale high quality data workflows on the data lake, Starburst powers petabyte-scale SQL analytics fast, at a fraction of the cost of traditional methods, so that you can meet all your data needs ranging from AI to data applications to complete analytics. Trusted by teams of all sizes, including Comcast and Doordash, Starburst is a data lake analytics platform that delivers the adaptability and flexibility a lakehouse ecosystem promises. And Starburst does all of this on an open architecture with first-class support for Apache Iceberg, Delta Lake and Hudi, so you always maintain ownership of your data. Want to see Starburst in action? Go to dataengineeringpodcast.com/starburst and get $500 in credits to try Starburst Galaxy today, the easiest and fastest way to get started using Trino.

Your host is Tobias Macey and today I'll be sharing an update on my own journey of building a data platform, with a particular focus on the challenges of tool integration and maintaining a single source of truth

Interview

Introduction

How did you get involved in the area of data management?

data sharing

weight of history

existing integrations with dbt

switching cost for e.g. SQLMesh

de facto standard of Airflow

Single source of truth

permissions management across application layers

Database engine

Storage layer in a lakehouse

Presentation/access layer (BI)

Data flows

dbt -> table level lineage

orchestration engine -> pipeline flows

task based vs. asset based

Metadata platform as the logical place for horizontal view

Contact Info

LinkedIn

Website

Parting Questio