In this episode of Hub & Spoken, Jason Foster, CEO of Cynozure, chats with Luis Mejia, VP Data, Platforms & AI at PensionBee, about how the company is transforming the pension industry through smart use of data and AI. Luis shares how a digital-first mindset is helping PensionBee enhance customer experience, manage data effectively, and fuel business growth. He dives into how AI is being used in customer service, blending tech with human touch to build trust, and why ethics and transparency matter more than ever. From marketing to customer support, this episode explores the real-world challenges and opportunities of using data and AI. Luis also looks ahead to a future where AI helps democratise data and puts power in the hands of individuals. A must-listen for data and business leaders driving change in a digital world. Research Luis mentioned in the episode: https://www.pensionbee.com/uk/press/ai-and-pensions https://www.pensionbee.com/uk/press/age-vs-ai Follow Luis on LinkedIn Follow Jason on LinkedIn ***** Cynozure is a leading data, analytics and AI company that helps organisations to reach their data potential. It works with clients on data and AI strategy, data management, data architecture and engineering, analytics and AI, data culture and literacy, and data leadership. The company was named one of The Sunday Times' fastest-growing private companies in both 2022 and 2023 and recognised as The Best Place to Work in Data by DataIQ in 2023 and 2024. Cynozure is a certified B Corporation.

talk-data.com

Topic: Data Management (1097 tagged)

Summary

In this episode of the Data Engineering Podcast, Jeremy Edberg, CEO of DBOS, talks about durable execution and its impact on designing and implementing business logic for data systems. Jeremy explains how DBOS's serverless platform and orchestrator provide local resilience and reduce operational overhead, ensuring exactly-once execution in distributed systems through the use of the Transact library. He discusses the importance of version management in long-running workflows and how DBOS simplifies system design by reducing infrastructure needs like queues and CI pipelines, making it beneficial for data pipelines, AI workloads, and agentic AI.
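To make the idea of durable execution concrete, here is a minimal sketch of step checkpointing. It is not DBOS's API or the Transact library: the `steps` table, `open_store`, `run_step`, and the toy `extract`/`load` functions are all hypothetical names chosen for illustration, and SQLite stands in for the Postgres persistence layer discussed in the episode. Each step's result is recorded under a `(workflow_id, step_name)` key, so a retried workflow replays stored results instead of re-running completed steps.

```python
import sqlite3


def open_store(path=":memory:"):
    """Open a checkpoint store (hypothetical schema, SQLite standing in for Postgres)."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS steps ("
        "workflow_id TEXT, step_name TEXT, result TEXT, "
        "PRIMARY KEY (workflow_id, step_name))"
    )
    return conn


def run_step(conn, workflow_id, step_name, fn):
    """Run fn at most once per (workflow_id, step_name) that has been checkpointed."""
    row = conn.execute(
        "SELECT result FROM steps WHERE workflow_id = ? AND step_name = ?",
        (workflow_id, step_name),
    ).fetchone()
    if row is not None:
        return row[0]  # step already completed: replay the stored result
    result = fn()
    with conn:  # commit the checkpoint atomically
        conn.execute(
            "INSERT INTO steps (workflow_id, step_name, result) VALUES (?, ?, ?)",
            (workflow_id, step_name, result),
        )
    return result


calls = []  # records which step bodies actually executed


def extract():
    calls.append("extract")
    return "raw"


def load():
    calls.append("load")
    return "loaded"


def pipeline(conn, workflow_id):
    a = run_step(conn, workflow_id, "extract", extract)
    b = run_step(conn, workflow_id, "load", load)
    return a, b


conn = open_store()
first = pipeline(conn, "wf-1")
second = pipeline(conn, "wf-1")  # simulated retry: both steps are replayed, not re-run
```

In this sketch a crash between `fn()` returning and the checkpoint being committed would cause the step to run again on retry, so steps with external side effects still need to be idempotent, which is presumably where the idempotency keys listed in the show links come in.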

Announcements

- Hello and welcome to the Data Engineering Podcast, the show about modern data management
- Data migrations are brutal. They drag on for months, sometimes years, burning through resources and crushing team morale. Datafold's AI-powered Migration Agent changes all that. Their unique combination of AI code translation and automated data validation has helped companies complete migrations up to 10 times faster than manual approaches. And they're so confident in their solution, they'll actually guarantee your timeline in writing. Ready to turn your year-long migration into weeks? Visit dataengineeringpodcast.com/datafold today for the details.
- Your host is Tobias Macey and today I'm interviewing Jeremy Edberg about durable execution and how it influences the design and implementation of business logic

Interview

- Introduction
- How did you get involved in the area of data management?
- Can you describe what DBOS is and the story behind it?
- What is durable execution?
- What are some of the notable ways that inclusion of durable execution in an application architecture changes the ways that the rest of the application is implemented? (e.g. error handling, logic flow, etc.)
- Many data pipelines involve complex, multi-step workflows. How does DBOS simplify the creation and management of resilient data pipelines?
- How does durable execution impact the operational complexity of data management systems?
- One of the complexities in durable execution is managing code/data changes to workflows while existing executions are still processing. What are some of the useful patterns for addressing that challenge and how does DBOS help?
- Can you describe how DBOS is architected?
- How have the design and goals of the system changed since you first started working on it?
- What are the characteristics of Postgres that make it suitable for the persistence mechanism of DBOS?
- What are the guiding principles that you rely on to determine the boundaries between the open source and commercial elements of DBOS?
- What are the most interesting, innovative, or unexpected ways that you have seen DBOS used?
- What are the most interesting, unexpected, or challenging lessons that you have learned while working on DBOS?
- When is DBOS the wrong choice?
- What do you have planned for the future of DBOS?

Contact Info

- LinkedIn

Parting Question

- From your perspective, what is the biggest gap in the tooling or technology for data management today?

Closing Announcements

- Thank you for listening! Don't forget to check out our other shows. Podcast.init covers the Python language, its community, and the innovative ways it is being used. The AI Engineering Podcast is your guide to the fast-moving world of building AI systems.
- Visit the site to subscribe to the show, sign up for the mailing list, and read the show notes.
- If you've learned something or tried out a project from the show then tell us about it! Email [email protected] with your story.

Links

- DBOS
- Exactly Once Semantics
- Temporal
- Semaphore
- Postgres
- DBOS Transact (Python, TypeScript)
- Idempotency Keys
- Agentic AI
- State Machine
- YugabyteDB (Podcast Episode)
- CockroachDB
- Supabase
- Neon (Podcast Episode)
- Airflow

The intro and outro music is from The Hug by The Freak Fandango Orchestra / CC BY-SA

This session explores the evolution of data management on Kubernetes for AI and machine learning (ML) workloads and modern databases, including Google’s leadership in this space. We’ll discuss key challenges and solutions, including persistent storage with solutions like checkpointing and Cloud Storage FUSE, and accelerating data access with caching. Customers Qdrant and Codeway will share how they’ve successfully leveraged these technologies to improve their AI, ML, and database performance on Google Kubernetes Engine (GKE).

Explore the latest advancements in the open source software (OSS) world of in-memory data management and the launch of Memorystore for Valkey. Discover the exciting new features and enhancements in Valkey 8.0, including a preview of upcoming open source innovations on the roadmap. Join us for a deep dive with a customer who will share their real-world experience using Valkey within their architecture. Learn how they leverage the power of in-memory data to achieve exceptional performance and scalability.

This session dives into building a modern data platform on Google Cloud with AI-powered data management. Explore how to leverage data mesh architectures to break down data silos and enable efficient data sharing. Learn how data contracts improve reliability, and discover how real-time ingestion empowers immediate insights. We'll also examine the role of data agents in automating data discovery, preparation, and delivery for optimized AI workflows.

This Session is hosted by a Google Cloud Next Sponsor.

Visit your registration profile at g.co/cloudnext to opt out of sharing your contact information with the sponsor hosting this session.

Explore the future of data management with BigQuery multimodal tables. Discover how to integrate structured and unstructured data (such as text, images, and video) into a single table with full data manipulation language (DML) support. This session demonstrates how unified tables unlock the potential of unstructured data through easy extraction and merging, simplify Vertex AI integration for downstream workflows, and enable unified data discovery with search across all data.

Is complex data management holding your creative teams back? In this episode of Data Unchained, John Kleber, CTO at BUCK, joins us to discuss the transformative power of adopting a single global namespace. Discover how BUCK overcame the limitations of traditional storage systems and enhanced their global collaboration and productivity by leveraging unified data management. John Kleber shares practical insights and real-world strategies that demonstrate why shifting to a global namespace isn't just an improvement—it's essential for the future of distributed work.

#GlobalNamespace #UnifiedData #TechLeadership #CreativeWorkflow #HybridCloud #DataManagement #RemoteCollaboration #DisasterRecovery #TechExecutives #DataUnchained

Cyberpunk by jiglr | https://soundcloud.com/jiglrmusic Music promoted by https://www.free-stock-music.com Creative Commons Attribution 3.0 Unported License https://creativecommons.org/licenses/by/3.0/deed.en_US Hosted on Acast. See acast.com/privacy for more information.

Welcome to the cozy corner of the tech world where ones and zeros mingle with casual chit-chat. Datatopics Unplugged is your go-to spot for relaxed discussions around tech, news, data, and society. Dive into conversations that should flow as smoothly as your morning coffee (but don't), where industry insights meet laid-back banter. Whether you're a data aficionado or just someone curious about the digital age, pull up a chair, relax, and let's get into the heart of data, unplugged style!

In this episode, host Murilo is joined by returning guest Paolo, Data Management Team Lead at dataroots, for a deep dive into the often-overlooked but rapidly evolving domain of unstructured data quality. Tune in for a field guide to navigating documents, images, and embeddings without losing your sanity.

What we unpack:

- Data management basics: Metadata, ownership, and why Excel isn’t everything.
- Structured vs unstructured data: How the wild west of PDFs, images, and audio is redefining quality.
- Data quality challenges for LLMs: From apples and pears to rogue chatbots with “legally binding” hallucinations.
- Practical checks for document hygiene: Versioning, ownership, embedding similarity, and tagging strategies.
- Retrieval-Augmented Generation (RAG): When ChatGPT meets your HR policies and things get weird.
- Monitoring and governance: Building systems that flag rot before your chatbot gives out 2017 vacation rules.
- Tooling and gaps: Where open source is doing well, and where we’re still duct-taping workflows.
- Real-world inspirations: A look at how QuantumBlack (McKinsey) is tackling similar issues with their AI for DQ framework.
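As a rough illustration of the "embedding similarity" check mentioned among the document hygiene topics, the sketch below flags pairs of documents whose embeddings are nearly identical, which can surface stale versions sitting next to current ones in a RAG corpus. This is an assumption about how such a check might look, not the approach described in the episode: the `near_duplicates` helper, the 0.95 threshold, and the three-dimensional toy vectors are all made up for the example, and real embeddings would come from an embedding model.

```python
import math
from itertools import combinations


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def near_duplicates(embeddings, threshold=0.95):
    """Return pairs of document ids whose embeddings meet the similarity threshold."""
    pairs = []
    for (id_a, vec_a), (id_b, vec_b) in combinations(sorted(embeddings.items()), 2):
        if cosine_similarity(vec_a, vec_b) >= threshold:
            pairs.append((id_a, id_b))
    return pairs


# Toy embeddings (hypothetical values): two versions of one policy and an unrelated document.
docs = {
    "vacation_policy_2017": [0.9, 0.1, 0.0],
    "vacation_policy_2024": [0.88, 0.12, 0.01],
    "expense_policy": [0.0, 0.2, 0.95],
}
flagged = near_duplicates(docs)
```

A flagged pair is only a candidate for review; deciding which version is authoritative still depends on the ownership and versioning metadata the episode also covers.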

The rise of AI demands an easier and more efficient approach to data management. Discover how small IT teams are transforming their data foundations with BigQuery to support AI-powered use cases across all data types – from structured data to unstructured data like images and text (multimodal). Learn from peers across industries and geographies why they migrated to BigQuery and how it helped them accelerate time to insights, reduce data management complexity, and unlock the full potential of AI.

This session explores the business drivers for ESG data in the current business climate and delves into the technical architecture of a robust ESG data management solution. Discover how to integrate AI and data agents with core enterprise systems, like SAP and Cortex, and incorporate crucial third-party ESG data from sources like ESG Book to enhance business decision-making. We will also showcase real-world examples of how to integrate Google Cloud with platforms like Watershed to build a complete ESG data ecosystem.

This Session is hosted by a Google Cloud Next Sponsor.

Visit your registration profile at g.co/cloudnext to opt out of sharing your contact information with the sponsor hosting this session.

Discover how NetApp Volumes, a Google Cloud managed data storage service that provides advanced data management capabilities and highly scalable performance, can accelerate your workloads, enable hybrid deployments, and provide cloud bursting for AI, EDA, media and entertainment, and others by extending your on-premises data into Google Cloud, all while keeping your data available and secure. Join us to explore how NetApp and Google Cloud together drive innovation and operational excellence.

This Session is hosted by a Google Cloud Next Sponsor.

Visit your registration profile at g.co/cloudnext to opt out of sharing your contact information with the sponsor hosting this session.

Build transformative solutions by leveraging Google Cloud's data management and AI. Solutions like BigQuery and AI-first databases enable personalized recommendations and predictive analytics, delivering exceptional customer value. Partners will share best practices for building a successful data management organization with Google Cloud and how this has led to unlocking innovation.

Discover the latest breakthroughs in Cloud Storage. This executive session provides a high-level overview of the latest object, block, file storage, and backup and recovery solutions. Gain insights into our cutting-edge storage technologies and learn how they can optimize your infrastructure, reduce costs, and enhance your data management strategy. Don’t miss this opportunity to learn directly from Google executives about the future of storage. This session is a must for IT decision-makers seeking a competitive edge.

Enhance your data ingestion architecture's resilience with Google Cloud's serverless solutions. Gain end-to-end visibility into your data's lineage—track each data point's transformation journey, including timestamps, user actions, and process outcomes. Implement real-time streaming and daily batch processes for Vertex AI Retail Search to deliver near real-time search capabilities while maintaining a daily backup for contingencies. Adopt best practices for data management, lineage tracking, and forensic capabilities to streamline issue diagnosis. This talk presents a scalable and fault-tolerant design that optimizes data quality and search performance while ensuring forensic-level traceability for every data movement.

BigQuery is unifying data management, analytics, governance, and AI. Join this session to learn about the latest innovations in BigQuery to help you get actionable insights from your multimodal data and accelerate AI innovation with a secure data foundation and new-gen AI-powered experiences. Hear how Mattel utilized BigQuery to create a no-code, shareable template for data processing, analytics, and AI modeling, leveraging their existing data and streamlining the entire workflow from ETL to AI implementation within a single platform.

In this episode of Hub & Spoken, host Jason Foster welcomes Sam White, the multi-award-winning Founder of Freedom Services Group and Global Founder of Stella Insurance Australia. Sam shares her journey of building Stella Insurance, the challenges and opportunities of creating a digital-first insurance company, the importance of customer experience, and how Stella Insurance is reimagining financial services from a female perspective. Sam also discusses the impact of regulatory changes, the role of AI in the insurance industry, and the significance of diversity in business. This is a real gem of an episode, especially for entrepreneurs and business leaders interested in digital transformation, insurance innovations, and diversity in leadership. Follow Sam: linkedin.com/in/samwhiteentrepreneur/ Follow Jason: linkedin.com/in/jasonbfoster/

Building a Scalable Data Foundation in Health Tech | Anna Swigart | Shift Left Data Conference 2025

In healthcare technology, protecting patient privacy while scaling data operations requires reimagining where quality and governance live. This presentation explores Helix's journey of shifting critical processes left in its precision medicine business—from implementing automated data classification and privacy workflows to enlisting cross-functional expertise in refining operational workflows. For clinical data management, we've partnered with healthcare systems to implement OMOP standards and data contracts at the source, creating a robust foundation for research and commercial opportunities. Through practical examples, we'll demonstrate how this upstream approach has transformed our data operations, encouraged internal alignment, and strengthened partner relationships.

Shift Left with Apache Iceberg Data Products to Power AI | Andrew Madson | Shift Left Data Conference 2025

High-quality, governed, and performant data from the outset is vital for agile, trustworthy enterprise AI systems. Traditional approaches delay addressing data quality and governance, causing inefficiencies and rework. Apache Iceberg, a modern table format for data lakes, empowers organizations to "Shift Left" by integrating data management best practices earlier in the pipeline to enable successful AI systems.

This session covers how Iceberg's schema evolution, time travel, ACID transactions, and Git-like data branching allow teams to validate, version, and optimize data at its source. Attendees will learn to create resilient, reusable data assets, streamline engineering workflows, enforce governance efficiently, and reduce late-stage transformations—accelerating analytics, machine learning, and AI initiatives.

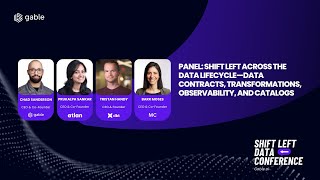

Panel: Shift Left Across the Data Lifecycle—Data Contracts, Transformations, Observability, and Catalogs | Prukalpa Sankar, Tristan Handy, Barr Moses, Chad Sanderson | Shift Left Data Conference 2025

Join industry-leading CEOs Chad (Data Contracts), Tristan (Data Transformations), Barr (Data Observability), and Prukalpa (Data Catalogs) who are pioneering new approaches to operationalizing data by “Shifting Left.” This engaging panel will explore how embedding rigorous data management practices early in the data lifecycle reduces issues downstream, enhances data reliability, and empowers software engineers with clear visibility into data expectations. Attendees will gain insights into how data contracts define accountability, how effective transformations ensure data usability at scale, how proactive data and AI observability drives continuous confidence in data quality, and how catalogs enable data discoverability, accelerating innovation and trust across organizations.

This book is an essential guide designed to equip you with the vital tools and knowledge needed to excel in data science. Master the end-to-end process of data collection, processing, validation, and imputation using R, and understand fundamental theories to achieve transparency with literate programming, renv, and Git--and much more. Each chapter is concise and focused, rendering complex topics accessible and easy to understand. Data Insight Foundations caters to a diverse audience, including web developers, mathematicians, data analysts, and economists, and its flexible structure enables you to explore chapters in sequence or navigate directly to the topics most relevant to you. While examples are primarily in R, a basic understanding of the language is advantageous but not essential. Many chapters, especially those focusing on theory, require no programming knowledge at all. Dive in and discover how to manipulate data, ensure reproducibility, conduct thorough literature reviews, collect data effectively, and present your findings with clarity.

What You Will Learn

- Data Management: Master the end-to-end process of data collection, processing, validation, and imputation using R.
- Reproducible Research: Understand fundamental theories and achieve transparency with literate programming, renv, and Git.
- Academic Writing: Conduct scientific literature reviews and write structured papers and reports with Quarto.
- Survey Design: Design well-structured surveys and manage data collection effectively.
- Data Visualization: Understand data visualization theory and create well-designed and captivating graphics using ggplot2.

Who this Book is For

Career professionals such as research and data analysts transitioning from academia to a professional setting where production quality significantly impacts career progression. Some familiarity with data analytics processes and an interest in learning R or Python are ideal.