Learn how to modernize and create sustainable software where AI-powered tools like GitHub Copilot help to empower software development in this new era of Agentic DevOps.

Explore how GitHub Copilot and intelligent agents streamline Azure application development from design to operations. This session covers how the Coding Agent, App Modernization agent, and cloud architecture agent accelerate planning and coding, while GitHub Copilot for Azure and the Azure MCP Server simplify deployment and diagnostics. Learn how the SRE Agent and testing agent extend automation into production and quality assurance, enabling end-to-end DevOps with AI-powered workflows.

There's so much more to software than creating it! Exercising DevOps principles in Power Platform has never been easier. Learn about the latest enhancements that help you scale development on complex solutions, deploy production-ready changes, and monitor production workloads, all within Power Platform.

Delivered in a silent stage breakout.

AI agents like GitHub Copilot have transformed how developers build software. In this session, learn how to leverage GitHub’s governance and security capabilities to enable agents at scale. We’ll cover best practices for rolling out agents across your organization, aligning with developer workflows, and maintaining oversight while embracing this new era of software development.

Agentic DevOps is shaping the future of software engineering, driving productivity and innovation through automation and intelligent collaboration. Learn how AI-powered tools like GitHub Copilot, Microsoft Foundry, and Azure are empowering developers at Microsoft to innovate faster and more securely. Gain practical insights and strategies from Microsoft’s journey to empower your own DevOps transformation.

Modernize your DevOps strategy with Agentic DevOps by migrating your Azure Repos to GitHub while continuing to leverage the investments you’ve made in Azure Boards and Azure Pipelines. We’ll walk through real-world patterns for hybrid adoption, show how to integrate GitHub, Azure Boards and Azure Pipelines, and share best practices for enabling agent-based workflows with the MCP Servers for Azure DevOps, Playwright and Azure.

Delivered in a silent stage breakout.

Get ready to explore how Azure Copilot is transforming the way IT, DevOps, and Developers manage, secure, and optimize cloud environments—ushering in an era of agentic AI that goes beyond simple automation. This session unveils powerful new capabilities that enable AI to act more independently and collaboratively with users, streamlining tasks like incident response, infrastructure provisioning, security posture management, and beyond.

Teams with strong DevOps practices are the ones best positioned to take advantage of the power of AI. See how you can use GitHub Copilot and AI agents to bring speed, scale, and security to software development across your organization.

At Virgin Media O2, we believe that strong processes and culture matter more than any individual tool. In this talk, we’ll share how we’ve applied DevOps and software engineering principles to transform our data capabilities and enable true data modernization at scale. We’ll take you behind the scenes of how these practices shaped the design and delivery of our enterprise Data Mesh, with dbt at its core, empowering our teams to move faster, build trust in data, and fully embrace a modern, decentralized approach.

Deploying AI models efficiently and consistently is a challenge many organizations face. This session will explore how Vizient built a standardized MLOps stack using Databricks and Azure DevOps to streamline model development, deployment and monitoring. Attendees will gain insights into how Databricks Asset Bundles were leveraged to create reproducible, scalable pipelines and how Infrastructure-as-Code principles accelerated onboarding for new AI projects.

The talk will cover:

- End-to-end MLOps stack setup, ensuring efficiency and governance
- CI/CD pipeline architecture, automating model versioning and deployment
- Standardizing AI model repositories, reducing development and deployment time
- Lessons learned, including challenges and best practices

By the end of this session, participants will have a roadmap for implementing a scalable, reusable MLOps framework that enhances operational efficiency across AI initiatives.

The session will cover how to use Unity Catalog governed system tables to understand what is happening in Databricks. We will touch on key scenarios for FinOps, DevOps and SecOps to ensure you have a well-observed Data Intelligence Platform. Learn about new developments in system tables and other features that will help you observe your Databricks instance.

In this presentation, we'll show how we achieved a unified development experience for teams working on Mercedes-Benz Data Platforms in AWS and Azure. We will demonstrate how we implemented Azure to AWS and AWS to Azure data product sharing (using Delta Sharing and Cloud Tokens), integration with AWS Glue Iceberg tables through UniForm and automation to drive everything using Azure DevOps Pipelines and DABs. We will also show how to monitor and track cloud egress costs and how we present a consolidated view of all the data products and relevant cost information. The end goal is to show how customers can offer the same user experience to their engineers and not have to worry about which cloud or region the Data Product lives in. Instead, they can enroll in the data product through self-service and have it available to them in minutes, regardless of where it originates.

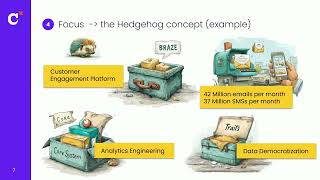

The role of data teams and data engineers is evolving. No longer just pipeline builders or dashboard creators, today’s data teams must evolve to drive business strategy, enable automation, and scale with growing demands. Best practices seen in the software engineering world (Agile development, CI/CD, and Infrastructure-as-code) from the DevOps movement are gradually making their way into data engineering. We believe these changes have led to the rise of DataOps and a new wave of best practices that will transform the discipline of data engineering. But how do you transform a reactive team into a proactive force for innovation? We’ll explore the key principles for building a resilient, high-impact data team—from structuring for collaboration, testing, automation, to leveraging modern orchestration tools. Whether you’re leading a team or looking to future-proof your career, you’ll walk away with actionable insights on how to stay ahead in the rapidly changing data landscape.

This session is repeated.

Managing data and AI workloads in Databricks can be complex. Databricks Asset Bundles (DABs) simplify this by enabling declarative, Git-driven deployment workflows for notebooks, jobs, Lakeflow Declarative Pipelines, dashboards, ML models and more.

Join the DABs Team for a Deep Dive and learn about:

- The Basics: Understanding Databricks asset bundles
- Declare, define and deploy assets, follow best practices, use templates and manage dependencies
- CI/CD & Governance: Automate deployments with GitHub Actions/Azure DevOps, manage Dev vs. Prod differences, and ensure reproducibility
- What’s new and what's coming up! AI/BI Dashboard support, Databricks Apps support, a Pythonic interface and workspace-based deployment

If you're a data engineer, ML practitioner or platform architect, this talk will provide practical insights to improve reliability, efficiency and compliance in your Databricks workflows.

Modern insurers require agile, integrated data systems to harness AI. This framework for a global insurer uses Azure Databricks to unify legacy systems into a governed lakehouse medallion architecture (bronze/silver/gold layers), eliminating silos and enabling real-time analytics.

The solution employs:

- Medallion architecture for incremental data quality improvement.
- Unity Catalog for centralized governance, row/column security, and audit compliance.
- Azure encryption/confidential computing for data mesh security.
- Automated ingestion/semantic/DevOps pipelines for scalability.

By combining Databricks’ distributed infrastructure with Azure’s security, the insurer achieves regulatory compliance while enabling AI-driven innovation (e.g., underwriting, claims). The framework establishes a future-proof foundation for mergers/acquisitions (M&A) and cross-functional data products, balancing governance with agility.
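As a rough illustration of the bronze/silver/gold layering mentioned in this abstract, the sketch below walks a few records through the three stages in plain Python. It is not the insurer's implementation: the record fields (`claim_id`, `amount`, `region`), the sample data, and the function names `to_silver` and `to_gold` are all hypothetical, and a real lakehouse would use Spark tables rather than in-memory lists.

```python
# Hypothetical raw claim records as they might land in the bronze layer.
BRONZE = [
    {"claim_id": "C1", "amount": "1200.50", "region": "emea"},
    {"claim_id": "C2", "amount": "n/a", "region": "EMEA"},      # unparseable amount
    {"claim_id": "C1", "amount": "1200.50", "region": "emea"},  # duplicate of C1
    {"claim_id": "C3", "amount": "300", "region": "apac"},
]


def to_silver(bronze):
    """Validate, normalize, and de-duplicate bronze rows (assumed rules)."""
    seen = set()
    silver = []
    for row in bronze:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # a real pipeline would likely quarantine the row instead
        if row["claim_id"] in seen:
            continue  # keep only the first occurrence of each claim
        seen.add(row["claim_id"])
        silver.append(
            {
                "claim_id": row["claim_id"],
                "amount": amount,
                "region": row["region"].upper(),
            }
        )
    return silver


def to_gold(silver):
    """Aggregate cleaned rows into a business-level view: total per region."""
    totals = {}
    for row in silver:
        totals[row["region"]] = totals.get(row["region"], 0.0) + row["amount"]
    return totals


if __name__ == "__main__":
    print(to_gold(to_silver(BRONZE)))
```

Each stage only reads the output of the previous one, which is what lets data quality improve incrementally from layer to layer.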

Data DevOps applies rigorous software development practices—such as version control, automated testing, and governance—to data workflows, empowering software engineers to proactively manage data changes and address data-related issues directly within application code. By adopting a "shift left" approach with Data DevOps, SWE teams become more aware of data requirements, dependencies, and expectations early in the software development lifecycle, significantly reducing risks, improving data quality, and enhancing collaboration.

This session will provide practical strategies for integrating Data DevOps into application development, enabling teams to build more robust data products and accelerate adoption of production AI systems.
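One way to picture the "shift left" idea is a data contract checked inside the application's own test suite, so a schema change fails a build before it reaches downstream consumers. The sketch below is an assumption for illustration only: the contract format, the `ORDER_CONTRACT` fields, and the `contract_violations` helper are hypothetical and not taken from the session.

```python
# Hypothetical contract: field name -> (expected type, whether null is allowed).
ORDER_CONTRACT = {
    "order_id": (str, False),
    "quantity": (int, False),
    "coupon": (str, True),
}


def contract_violations(record, contract):
    """Return a list of human-readable problems; an empty list means the record conforms."""
    problems = []
    for field, (expected_type, nullable) in contract.items():
        if field not in record:
            problems.append(f"{field}: missing")
            continue
        value = record[field]
        if value is None:
            if not nullable:
                problems.append(f"{field}: null not allowed")
            continue
        if not isinstance(value, expected_type):
            problems.append(
                f"{field}: expected {expected_type.__name__}, got {type(value).__name__}"
            )
    return problems


if __name__ == "__main__":
    good = {"order_id": "A1", "quantity": 2, "coupon": None}
    bad = {"order_id": "A2", "quantity": "2"}  # wrong type, and coupon is absent
    print(contract_violations(good, ORDER_CONTRACT))
    print(contract_violations(bad, ORDER_CONTRACT))
```

Running a check like this in CI alongside unit tests puts the data expectation under the same version control and review process as the code that produces the data.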

🌟 Session Overview 🌟

Session Name: Large-Scale Logging Made Easy

Speaker: Aliaksandr Valialkin

Session Description: Logging at scale is a common source of infrastructure expenses and frustration. While logging is something any organization does, there is still no silver bullet or simple, scalable solution without trade-offs.

In this session, Aliaksandr presents his innovative approach to log management after studying the limitations of existing logging systems. His solution is tailored for SREs, DevOps, and system engineers seeking a comprehensive logging platform for their organization.

Aliaksandr's solution seamlessly integrates with existing logging agents, pipelines, and streams, efficiently storing logs in a highly optimized database. Notably, it offers lightning-fast query speeds and seamless integration with essential tools like jq, awk, and cut.

🚀 About Big Data and RPA 2024 🚀

Unlock the future of innovation and automation at Big Data & RPA Conference Europe 2024! 🌟 This unique event brings together the brightest minds in big data, machine learning, AI, and robotic process automation to explore cutting-edge solutions and trends shaping the tech landscape. Perfect for data engineers, analysts, RPA developers, and business leaders, the conference offers dual insights into the power of data-driven strategies and intelligent automation. 🚀 Gain practical knowledge on topics like hyperautomation, AI integration, advanced analytics, and workflow optimization while networking with global experts. Don’t miss this exclusive opportunity to expand your expertise and revolutionize your processes—all from the comfort of your home! 📊🤖✨

📅 Yearly Conferences: Curious about the evolution of QA? Check out our archive of past Big Data & RPA sessions. Watch the strategies and technologies evolve in our videos! 🚀 🔗 Find Other Years' Videos: 2023 Big Data Conference Europe https://www.youtube.com/playlist?list=PLqYhGsQ9iSEpb_oyAsg67PhpbrkCC59_g 2022 Big Data Conference Europe Online https://www.youtube.com/playlist?list=PLqYhGsQ9iSEryAOjmvdiaXTfjCg5j3HhT 2021 Big Data Conference Europe Online https://www.youtube.com/playlist?list=PLqYhGsQ9iSEqHwbQoWEXEJALFLKVDRXiP

💡 Stay Connected & Updated 💡

Don’t miss out on any updates or upcoming event information from Big Data & RPA Conference Europe. Follow us on our social media channels and visit our website to stay in the loop!

🌐 Website: https://bigdataconference.eu/, https://rpaconference.eu/ 👤 Facebook: https://www.facebook.com/bigdataconf, https://www.facebook.com/rpaeurope/ 🐦 Twitter: @BigDataConfEU, @europe_rpa 🔗 LinkedIn: https://www.linkedin.com/company/73234449/admin/dashboard/, https://www.linkedin.com/company/75464753/admin/dashboard/ 🎥 YouTube: http://www.youtube.com/@DATAMINERLT

🌟 Session Overview 🌟

Session Name: Technology is Necessary, But Not Sufficient

Speaker: Simon Copsey

Session Description: Adopting and evolving technologies in an organization is important and can offer significant advantages. However, translating these advantages into bottom-line benefits often comes from combining technical change with social change: we must not just change the technology, but also rewrite the rules governing how that technology is used.

We are seeing growing thought leadership moving into this sociotechnical space: SRE, Data Mesh, DevOps, and Platform Engineering are as much about rethinking how teams are designed and organized as they are about the technology.

In this talk, we hope to help you apply this sociotechnical thinking to your organization so that you unlock the often untapped bottom-line benefits of technical change.

There are undoubtedly similarities between the disciplines of analytics engineering and DevOps: in fact, dbt was founded with the goal of helping data professionals embrace DevOps principles as part of the data workflow. As the embedded DevOps engineer for a mature analytics engineering function, Katie Claiborne, Founding Analytics Engineer at Duet, observed parallels between analytics-as-code and infrastructure-as-code, particularly tools like Terraform. In this talk, she'll examine how analytics engineering is a means of empowerment for data practitioners and discuss infrastructure engineering as a means of scaling dbt Cloud deployments. Learn about similarities between analytics and infrastructure configuration tools, how to apply the concepts you've learned about analytics engineering towards new disciplines like DevOps, and how to extend engineering principles beyond data transformation and into the world of infrastructure.

Speaker: Katie Claiborne, Founding Analytics Engineer, Duet

Read the blog to learn about the latest dbt Cloud features announced at Coalesce, designed to help organizations embrace analytics best practices at scale: https://www.getdbt.com/blog/coalesce-2024-product-announcements

DevOps and MLOps are both software development strategies that focus on collaboration between developers, operations, and data science teams. In this session, learn how to build modern, secure MLOps using AWS services and tools for infrastructure and network isolation, data protection, authentication and authorization, detective controls, and compliance. Discover how AWS customer PathAI, a leading digital pathology and AI company, uses seamless DevOps and MLOps strategies to run their AISight intelligent image management system and embedded AI products to support anatomic pathology labs and biopharma partners globally.

Learn more about AWS re:Inforce at https://go.aws/reinforce.
