Are you an Amazon Web Services (AWS) developer exploring Google Cloud for the first time, or looking to deepen your multi-cloud skills? Join us for a whirlwind tour exploring the ins and outs of Google Cloud, from resource and access management to networking and SDKs. We’ll cover Google Cloud’s framework for hyperscaler migrations. Then, we will demonstrate migrating an AWS application to Google Kubernetes Engine (GKE) and Cloud SQL, including Database Migration Service (DMS), GKE cluster creation, container image migration, and CI/CD. You'll leave with a core understanding of how Google Cloud works, key similarities and differences with AWS, and resources to get started.

talk-data.com

Topic: CI/CD (Continuous Integration/Continuous Delivery), 262 tagged

Essential for Looker developers and administrators responsible for high-performing deployments, this session dives deep into the latest Looker performance and modeling advancements, equipping you with the knowledge and tools to build robust and scalable data experiences. Explore the latest platform optimizations, continuous integration and delivery (CI/CD) with Spectacles, key metrics for monitoring, and advanced LookML modeling techniques. Plus, a Looker customer will share their journey to optimize performance and achieve greater scalability.

Shifting From Reactive to Proactive at Glassdoor | Zakariah Siyaji | Shift Left Data Conference 2025

As Glassdoor scaled to petabytes of data, ensuring data quality became critical for maintaining trust and supporting strategic decisions. Glassdoor implemented a proactive, “shift left” strategy focused on embedding data quality practices directly into the development process. This talk will detail how Glassdoor leveraged data contracts, static code analysis integrated into the CI/CD pipeline, and automated anomaly detection to empower software engineers and prevent data issues at the source. Attendees will learn how proactive data quality management reduces risk, promotes stronger collaboration across teams, enhances operational efficiency, and fosters a culture of trust in data at scale.
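As a rough illustration of the automated anomaly detection mentioned above, the sketch below flags a day whose row count deviates from recent history by more than a z-score threshold. It is a minimal sketch under stated assumptions: the function name, the choice of daily row counts as the monitored signal, and the threshold of 3.0 are all illustrative and are not taken from Glassdoor's implementation.

```python
from statistics import mean, stdev


def is_volume_anomaly(history: list[int], today: int, threshold: float = 3.0) -> bool:
    """Return True if today's row count is more than `threshold` standard
    deviations away from the mean of the historical counts (hypothetical check)."""
    if len(history) < 2:
        # stdev() needs at least two data points; not enough history to judge.
        return False
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # Perfectly flat history: any change at all is treated as anomalous.
        return today != mu
    z = abs(today - mu) / sigma
    return z > threshold


daily_rows = [10_120, 9_980, 10_050, 10_210, 9_940, 10_080, 10_005]
print(is_volume_anomaly(daily_rows, 10_100))  # False: within the normal range
print(is_volume_anomaly(daily_rows, 2_300))   # True: a sudden drop
```

A z-score is only one simple option. Seasonal data (weekday versus weekend loads, for example) usually needs a baseline that accounts for that pattern.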

Supported by Our Partners
• WorkOS: The modern identity platform for B2B SaaS.
• Vanta: Automate compliance and simplify security with Vanta.

Linux is the most widespread operating system globally, but how is it built? Few people are better placed to answer this than Greg Kroah-Hartman: a Linux kernel maintainer for 25 years, and one of the three Linux Foundation Fellows (the other two are Linus Torvalds and Shuah Khan). Greg manages the Linux kernel’s stable releases and is a maintainer of multiple kernel subsystems.

We cover the inner workings of Linux kernel development, exploring everything from how changes get implemented to why its community-driven approach produces such reliable software. Greg shares insights about the kernel's unique trust model and makes a case for why engineers should contribute to open-source projects.

We go into:
• How widespread is Linux?
• What is the Linux kernel responsible for, and why is it a monolith?
• How does a kernel change get merged? A walkthrough
• The 9-week development cycle for the Linux kernel
• Testing the Linux kernel
• Why is Linux so widespread?
• The career benefits of open-source contribution
• And much more!

Timestamps
(00:00) Intro
(02:23) How widespread is Linux?
(06:00) The difference in complexity in different devices powered by Linux
(09:20) What is the Linux kernel?
(14:00) Why trust is so important with the Linux kernel development
(16:02) A walk-through of a kernel change
(23:20) How Linux kernel development cycles work
(29:55) The testing process at Kernel and Kernel CI
(31:55) A case for the open source development process
(35:44) Linux kernel branches: Stable vs. development
(38:32) Challenges of maintaining older Linux code
(40:30) How Linux handles bug fixes
(44:40) The range of work Linux kernel engineers do
(48:33) Greg’s review process and its parallels with Uber’s RFC process
(51:48) Linux kernel within companies like IBM
(53:52) Why Linux is so widespread
(56:50) How Linux Kernel Institute runs without product managers
(1:02:01) The pros and cons of using Rust in Linux kernel
(1:09:55) How LLMs are utilized in bug fixes and coding in Linux
(1:12:13) The value of contributing to the Linux kernel or any open-source project
(1:16:40) Rapid fire round

The Pragmatic Engineer deepdives relevant for this episode:
• What TPMs do and what software engineers can learn from them
• The past and future of modern backend practices
• Backstage: an open-source developer portal

See the transcript and other references from the episode at https://newsletter.pragmaticengineer.com/podcast

Production and marketing by https://penname.co/. For inquiries about sponsoring the podcast, email [email protected].

Get full access to The Pragmatic Engineer at newsletter.pragmaticengineer.com/subscribe

Get ready to dive into the world of DevOps and cloud tech! This session will help you navigate Cloud and DevOps with confidence. It is ideal for new grads, career changers, and anyone feeling overwhelmed by the buzz around DevOps. We'll break down its core concepts, demystify the jargon, and explore why DevOps is essential for success in a fast-changing technology landscape, particularly in the emerging era of generative AI. A basic understanding of software development concepts is helpful, but enthusiasm to learn matters most.

Vishakha is a Senior Cloud Architect at Google Cloud Platform with over 8 years of DevOps and cloud experience. Prior to Google, she was a DevOps engineer at AWS and a Subject Matter Expert (SME) for AWS CloudFormation, its infrastructure-as-code (IaC) offering, in the North America (NorthAm) region. She has experience in diverse domains including financial services, retail, and online media. She primarily focuses on infrastructure architecture, design, and automation (IaC); public cloud (AWS, GCP); Kubernetes/CNCF tools; infrastructure security and compliance; CI/CD and GitOps; and MLOps.

We dive into the world of AI Agents and discuss why they are considered the next revolution in Data & AI. Our guests explore everything from the basic concepts to real-world applications, including how companies are building agents autonomously and the role in this ecosystem of Langflow, an AI Agents platform founded by a Brazilian that is already standing out on the international scene.

In this episode of Data Hackers, the largest AI and Data Science community in Brazil, meet Mikaeri Ohana, Head of Data and AI at CI&T and Content Creator at Explica Mi, and Gabriel Almeida, Founder & CTO @ Langflow.

Remember that you can find all of the Data Hackers community's podcasts on Spotify, iTunes, Google Podcast, Castbox, and many other platforms.

Our Data Hackers panel:

Paulo Vasconcellos: Co-founder of Data Hackers and Principal Data Scientist at Hotmart.

Monique Femme: Head of Community Management at Data Hackers

Gabriel Lages: Co-founder of Data Hackers and Data & Analytics Sr. Director at Hotmart.

References:

Join the iFood event: https://lu.ma/si2mn42p

Data Hackers Blog - Langflow: Meet an AI Agents platform founded by a Brazilian that is already standing out on the international scene: https://www.datahackers.news/p/langflow-conheca-uma-plataforma-de-ai-agents-fundada-por-um-brasileiro

Langflow: https://www.langflow.org/pt/

DataStax website:

Data Hackers Blog - CrewAI: https://www.datahackers.news/p/crew-ai-a-startup-brasileira-que-esta-dominando-o-mercado-de-ai-agents

A practical introduction to data engineering on the powerful Snowflake cloud data platform. Data engineers create the pipelines that ingest raw data, transform it, and funnel it to the analysts and professionals who need it. The Snowflake cloud data platform provides a suite of productivity-focused tools and features that simplify building and maintaining data pipelines. In Snowflake Data Engineering, Snowflake Data Superhero Maja Ferle shows you how to get started.

In Snowflake Data Engineering you will learn how to:
- Ingest data into Snowflake from both cloud and local file systems
- Transform data using functions, stored procedures, and SQL
- Orchestrate data pipelines with streams and tasks, and monitor their execution
- Use Snowpark to run Python code in your pipelines
- Deploy Snowflake objects and code using continuous integration principles
- Optimize performance and costs when ingesting data into Snowflake

Snowflake Data Engineering reveals how Snowflake makes it easy to work with unstructured data, set up continuous ingestion with Snowpipe, and keep your data safe and secure with best-in-class data governance features. Along the way, you’ll practice the most important data engineering tasks as you work through relevant hands-on examples. Throughout, author Maja Ferle shares design tips drawn from her years of experience to ensure your pipeline follows the best practices of software engineering, security, and data governance.

About the Technology
Pipelines that ingest and transform raw data are the lifeblood of business analytics, and data engineers rely on Snowflake to help them deliver those pipelines efficiently. Snowflake is a full-service cloud-based platform that offers near-infinite storage, fast elastic compute, and built-in AI/ML capabilities such as vector search, text-to-SQL, and code generation. This book gives you what you need to create effective data pipelines on the Snowflake platform.

About the Book
Snowflake Data Engineering guides you skill by skill through on-the-job data engineering tasks using Snowflake. You’ll start by building your first simple pipeline and then expand it with increasingly powerful features, including data governance and security, CI/CD, and even augmenting data with generative AI. You’ll be amazed how far you can go in just a few short chapters!

What's Inside
- Ingest data from the cloud, APIs, or Snowflake Marketplace
- Orchestrate data pipelines with streams and tasks
- Optimize performance and cost

About the Reader
For software developers and data analysts. Readers should know the basics of SQL and the cloud.

About the Author
Maja Ferle is a Snowflake Subject Matter Expert and a Snowflake Data Superhero who holds the SnowPro Advanced Data Engineer and SnowPro Advanced Data Analyst certifications.

Quotes
"An incredible guide for going from zero to production with Snowflake." - Doyle Turner, Microsoft
"A must-have if you’re looking to excel in the field of data engineering." - Isabella Renzetti, Data Analytics Consultant & Trainer
"Masterful! Unlocks the true potential of Snowflake for modern data engineers." - Shankar Narayanan, Microsoft
"Valuable insights will enhance your data engineering skills and lead to cost-effective solutions. A must read!" - Frédéric L’Anglais, Maxa
"Comprehensive, up-to-date and packed with real-life code examples." - Albert Nogués, Danone
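One common way to apply continuous integration principles to database objects is versioned migration scripts, an approach used by tools such as schemachange. The sketch below is a simplified, hypothetical illustration, not schemachange's code and not necessarily the book's method. Given script file names of the form V<version>__<description>.sql and the highest version already deployed, it returns the scripts a CI job would still need to run, in order. The file names and the pending_scripts helper are made up for the example.

```python
import re
from typing import Optional

# Hypothetical naming convention: "V<version>__<description>.sql", e.g. "V1.2.0__add_orders.sql".
SCRIPT_PATTERN = re.compile(r"^V(\d+(?:\.\d+)*)__(\w+)\.sql$")


def parse_version(text: str) -> tuple[int, ...]:
    """Turn "1.10.0" into (1, 10, 0) so versions compare numerically, not as strings."""
    return tuple(int(part) for part in text.split("."))


def pending_scripts(file_names: list[str], deployed_version: Optional[str]) -> list[str]:
    """Return scripts newer than the deployed version, oldest first.

    deployed_version=None means nothing has been deployed yet.
    """
    current = parse_version(deployed_version) if deployed_version else ()
    candidates = []
    for name in file_names:
        match = SCRIPT_PATTERN.match(name)
        if match is None:
            continue  # skip files that do not follow the naming convention
        version = parse_version(match.group(1))
        if version > current:
            candidates.append((version, name))
    # Sorting the (version, name) tuples orders scripts by numeric version.
    return [name for _, name in sorted(candidates)]


scripts = [
    "V1.0.0__create_raw_tables.sql",
    "V1.10.0__add_clustering.sql",
    "V1.2.0__add_orders_stream.sql",
    "README.md",
]
print(pending_scripts(scripts, "1.0.0"))
# ['V1.2.0__add_orders_stream.sql', 'V1.10.0__add_clustering.sql']
```

Parsing versions into integer tuples matters here. A plain string comparison would sort "1.10.0" before "1.2.0" and apply the scripts in the wrong order.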

Summary In this episode of the Data Engineering Podcast, Anna Geller talks about the integration of code and UI-driven interfaces for data orchestration. Anna defines data orchestration as automating the coordination of workflow nodes that interact with data across various business functions, discussing how it goes beyond ETL and analytics to enable real-time data processing across different internal systems. She explores the challenges of using existing scheduling tools for data-specific workflows, highlighting limitations and anti-patterns, and discusses Kestra's solution, a low-code orchestration platform that combines code-driven flexibility with UI-driven simplicity. Anna delves into Kestra's architectural design, API-first approach, and pluggable infrastructure, and shares insights on balancing UI and code-driven workflows, the challenges of open-core business models, and innovative user applications of Kestra's platform.
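To make "automating the coordination of workflow nodes" concrete, here is a minimal sketch of dependency-ordered execution using Python's standard graphlib module. The task names and the run_flow helper are hypothetical. This is not how Kestra defines or runs flows; it only shows the core idea that each node runs after all of its upstream nodes have finished.

```python
from graphlib import TopologicalSorter
from typing import Callable


def run_flow(tasks: dict[str, Callable[[], None]], depends_on: dict[str, set[str]]) -> list[str]:
    """Run each task only after all of its upstream tasks have finished.

    `depends_on` maps a task name to the names it must wait for.
    Returns the order in which tasks were executed.
    """
    sorter = TopologicalSorter(depends_on)
    # Tasks with no dependencies may not appear as keys in depends_on; register them too.
    for name in tasks:
        sorter.add(name)
    executed = []
    # static_order() raises graphlib.CycleError if the dependencies form a cycle.
    for name in sorter.static_order():
        tasks[name]()  # a KeyError here means a dependency names an undefined task
        executed.append(name)
    return executed


log: list[str] = []
tasks = {
    "extract": lambda: log.append("extract"),
    "transform": lambda: log.append("transform"),
    "load": lambda: log.append("load"),
    "notify": lambda: log.append("notify"),
}
depends_on = {"transform": {"extract"}, "load": {"transform"}, "notify": {"load"}}
order = run_flow(tasks, depends_on)
print(order)  # ['extract', 'transform', 'load', 'notify']
```

A real orchestrator adds much more on top of this ordering (scheduling, retries, parallel execution of independent nodes, state tracking, and a UI), which is where the code-versus-UI trade-offs discussed in the episode come in.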

Announcements
Hello and welcome to the Data Engineering Podcast, the show about modern data management.

Data migrations are brutal. They drag on for months, sometimes years, burning through resources and crushing team morale. Datafold's AI-powered Migration Agent changes all that. Their unique combination of AI code translation and automated data validation has helped companies complete migrations up to 10 times faster than manual approaches. And they're so confident in their solution, they'll actually guarantee your timeline in writing. Ready to turn your year-long migration into weeks? Visit dataengineeringpodcast.com/datafold today for the details.

As a listener of the Data Engineering Podcast you clearly care about data and how it affects your organization and the world. For even more perspective on the ways that data impacts everything around us you should listen to Data Citizens® Dialogues, the forward-thinking podcast from the folks at Collibra. You'll get further insights from industry leaders, innovators, and executives in the world's largest companies on the topics that are top of mind for everyone. They address questions around AI governance, data sharing, and working at global scale. In particular I appreciate the ability to hear about the challenges that enterprise scale businesses are tackling in this fast-moving field. While data is shaping our world, Data Citizens Dialogues is shaping the conversation. Subscribe to Data Citizens Dialogues on Apple, Spotify, YouTube, or wherever you get your podcasts.

Your host is Tobias Macey and today I'm interviewing Anna Geller about incorporating both code and UI driven interfaces for data orchestration.

Interview
- Introduction
- How did you get involved in the area of data management?
- Can you start by sharing a definition of what constitutes "data orchestration"?
- There are many orchestration and scheduling systems that exist in other contexts (e.g. CI/CD systems, Kubernetes, etc.). Those are often adapted to data workflows because they already exist in the organizational context. What are the anti-patterns and limitations that approach introduces in data workflows?
- What are the problems that exist in the opposite direction of using data orchestrators for CI/CD, etc.?
- Data orchestrators have been around for decades, with many different generations and opinions about how and by whom they are used. What do you see as the main motivation for UI vs. code-driven workflows?
- What are the benefits of combining code-driven and UI-driven capabilities in a single orchestrator?
- What constraints does it necessitate to allow for interoperability between those modalities?
- Data Orchestrators need to integrate with many external systems. How does Kestra approach building integrations and ensure governance for all their underlying configurations?
- Managing workflows at scale across teams can be challenging in terms of providing structure and visibility of dependencies across workflows and teams. What features does Kestra offer so that all pipelines and teams stay organised?
- What are

We talked about:

00:00 DataTalks.Club intro

02:34 Career journey and transition into MLOps

08:41 Dutch agriculture and its challenges

10:36 The concept of "technical debt" in MLOps

13:37 Trade-offs in MLOps: moving fast vs. doing things right

14:05 Building teams and the role of coordination in MLOps

16:58 Key roles in an MLOps team: evangelists and tech translators

23:01 Role of the MLOps team in an organization

25:19 How MLOps teams assist product teams

27:56 Standardizing practices in MLOps

32:46 Getting feedback and creating buy-in from data scientists

36:55 The importance of addressing pain points in MLOps

39:06 Best practices and tools for standardizing MLOps processes

42:31 Value of data versioning and reproducibility

44:22 When to start thinking about data versioning

45:10 Importance of data science experience for MLOps

46:06 Skill mix needed in MLOps teams

47:33 Building a diverse MLOps team

48:18 Best practices for implementing MLOps in new teams

49:52 Starting with CI/CD in MLOps

51:21 Key components for a complete MLOps setup

53:08 Role of package registries in MLOps

54:12 Using Docker vs. packages in MLOps

57:56 Examples of MLOps success and failure stories

1:00:54 What MLOps is in simple terms

1:01:58 The complexity of achieving easy deployment, monitoring, and maintenance

Join our Slack: https://datatalks.club/slack.html

This book, "Building Modern Data Applications Using Databricks Lakehouse," provides a comprehensive guide for data professionals to master the Databricks platform. You'll learn to build, deploy, and monitor robust data pipelines with Databricks' Delta Live Tables, and to manage and optimize cloud-based data operations.

What this book will help you do
- Understand the foundations and concepts of Delta Live Tables and its role in data pipeline development.
- Learn workflows to process and transform real-time and batch data efficiently using the Databricks lakehouse architecture.
- Master the implementation of Unity Catalog for governance and secure data access in modern data applications.
- Deploy and automate data pipeline changes using CI/CD, leveraging tools like Terraform and Databricks Asset Bundles.
- Gain advanced insights into monitoring data quality and performance, optimizing cloud costs, and managing DataOps tasks effectively.

Author(s)
Will Girten is a seasoned Solutions Architect at Databricks with over a decade of experience in data and AI systems. With deep expertise in modern data architectures, Will is adept at simplifying complex topics and translating them into actionable knowledge. His books emphasize real-time application and offer clear, hands-on examples, making learning engaging and impactful.

Who is it for?
This book is geared toward data engineers, analysts, and DataOps professionals seeking efficient strategies to implement and maintain robust data pipelines. If you have a basic understanding of Python and Apache Spark and wish to delve deeper into the Databricks platform to streamline workflows, this book is tailored for you.
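Delta Live Tables lets you attach data quality expectations to a table and choose what happens to rows that violate them: keep them and record the violation, drop them, or fail the update. The pure-Python sketch below only mimics those three policies on a list of dictionaries so the semantics are easy to see. It does not use the Delta Live Tables API, and the apply_expectation function, the policy names, and the sample rows are hypothetical.

```python
from typing import Callable

Row = dict[str, object]


class ExpectationFailed(Exception):
    """Raised when a row violates an expectation under the "fail" policy."""


def apply_expectation(rows: list[Row], name: str, check: Callable[[Row], bool],
                      policy: str = "warn") -> tuple[list[Row], int]:
    """Apply one data quality rule and return (output_rows, violation_count).

    policy="warn": keep every row but count violations.
    policy="drop": remove violating rows.
    policy="fail": stop processing on the first violation.
    """
    if policy not in {"warn", "drop", "fail"}:
        raise ValueError(f"unknown policy: {policy}")
    kept: list[Row] = []
    violations = 0
    for row in rows:
        if check(row):
            kept.append(row)
            continue
        violations += 1
        if policy == "fail":
            raise ExpectationFailed(f"expectation {name!r} violated by {row}")
        if policy == "warn":
            kept.append(row)
        # policy == "drop": the violating row is simply not kept
    return kept, violations


orders = [{"id": 1, "amount": 20.0}, {"id": 2, "amount": -5.0}, {"id": 3, "amount": 7.5}]
kept, bad = apply_expectation(orders, "positive_amount", lambda r: r["amount"] > 0, policy="drop")
print([r["id"] for r in kept], bad)  # [1, 3] 1
```

Counting violations even when rows are kept is what makes the "warn" policy useful for monitoring: the pipeline keeps running while the violation count can be tracked over time.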

Dive into the technical evolution of Bilt’s data infrastructure as they moved from fragmented, slow, and costly analytics to a streamlined, scalable, and holistic solution with dbt Cloud. In this session, the Bilt team will share how they implemented data modeling practices, established a robust CI/CD pipeline, and leveraged dbt’s Semantic Layer to enable a more efficient and trusted analytics environment. Attendees will gain a deep understanding of Bilt’s approach to data, including cost optimization, enhancing data accessibility and reliability, and, most importantly, supporting scale and growth.
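A common pattern in a dbt CI pipeline is to build only the models changed in a pull request plus everything downstream of them. In dbt, selectors such as state:modified+ do this by comparing the project against a saved production manifest. The sketch below shows only the graph idea behind that selection, in plain Python. The model names and the parents mapping are hypothetical, and this is neither dbt's implementation nor necessarily Bilt's setup.

```python
from collections import deque


def modified_plus(parents: dict[str, set[str]], modified: set[str]) -> set[str]:
    """Return the modified models and every model downstream of them.

    `parents` maps each model to the models it selects from (its upstream refs).
    """
    # Invert the edges so we can walk from a model to its children.
    children: dict[str, set[str]] = {model: set() for model in parents}
    for model, upstream in parents.items():
        for parent in upstream:
            children.setdefault(parent, set()).add(model)

    # Breadth-first walk from every modified model through its descendants.
    selected = set(modified)
    queue = deque(modified)
    while queue:
        model = queue.popleft()
        for child in children.get(model, set()):
            if child not in selected:
                selected.add(child)
                queue.append(child)
    return selected


parents = {
    "stg_payments": set(),
    "stg_users": set(),
    "int_user_payments": {"stg_payments", "stg_users"},
    "fct_rewards": {"int_user_payments"},
    "dim_users": {"stg_users"},
}
print(sorted(modified_plus(parents, {"stg_payments"})))
# ['fct_rewards', 'int_user_payments', 'stg_payments']
```

Selecting downstream models as well as the changed ones matters because a change to a staging model can break any model that depends on it, even if those files were not touched in the pull request.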

Speakers: Ben Kramer, Director, Data & Analytics, Bilt Rewards

James Dorado, VP, Data Analytics, Bilt Rewards

Nick Heron, Senior Manager, Data Analytics, Bilt Rewards

Read the blog to learn about the latest dbt Cloud features announced at Coalesce, designed to help organizations embrace analytics best practices at scale https://www.getdbt.com/blog/coalesce-2024-product-announcements

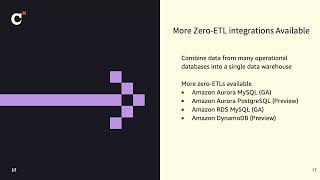

AWS offers the most scalable, highest-performing data services to keep up with the growing volume and velocity of data and help organizations become data-driven in real time. AWS helps customers unify diverse data sources by investing in a zero-ETL future and enable end-to-end data governance so your teams are free to move faster with data. Data teams running dbt Cloud are able to deploy analytics code following software engineering best practices such as modularity, continuous integration and continuous deployment (CI/CD), and embedded documentation. In this session, we will dive deeper into how to get near real-time insight on petabytes of transaction data using Amazon Aurora zero-ETL integration with Amazon Redshift and dbt Cloud for your generative AI workloads.

Speakers: Neela Kulkarni, Solutions Architect, AWS

Neeraja Rentachintala, Director, Product Management, Amazon

If you are not confident that the data is correct, you cannot use it to make decisions. To act as an effective partner with the rest of the business, your data team needs to know that their data is accurate and high-quality and be able to demonstrate that to their stakeholders. Join Reuben to learn how to use new features such as unit testing, Advanced CI, model-level notifications, and data health tiles to ship trusted data products faster.

Speaker: Reuben McCreanor, Product Manager, dbt Labs

As part of a rapid modernization initiative, USAA Property and Casualty migrated from a legacy, GUI-based ETL tool and on-prem servers to dbt Cloud and a cloud database. Adopting dbt Cloud enabled near real-time data delivery, but dbt Python models introduced dependency-management challenges. In this session, USAA shares how to move fast while managing vulnerabilities.
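dbt Python models can pull third-party Python packages into a project, so one lightweight CI gate is to require exact version pins and reject versions that appear on an advisory list. The sketch below is a hypothetical example, not USAA's process. The requirement format is simplified to name==version strings, and KNOWN_VULNERABLE is a made-up placeholder that a real pipeline would populate from a vulnerability scanner or advisory database.

```python
import re

# Simplified: accepts only "name==version" pins (no extras, markers, or ranges).
PINNED = re.compile(r"^([A-Za-z0-9][A-Za-z0-9._-]*)==([A-Za-z0-9.+!-]+)$")

# Hypothetical placeholder; a real pipeline would load this from an advisory source.
KNOWN_VULNERABLE = {("examplelib", "1.0.0")}


def check_requirements(requirements: list[str]) -> list[str]:
    """Return human-readable problems; an empty list means the gate passes."""
    problems = []
    for raw in requirements:
        req = raw.strip()
        if not req or req.startswith("#"):
            continue  # skip blank lines and comments
        match = PINNED.match(req)
        if match is None:
            problems.append(f"not pinned to an exact version: {req}")
            continue
        name, version = match.group(1).lower(), match.group(2)
        if (name, version) in KNOWN_VULNERABLE:
            problems.append(f"known vulnerable version: {name}=={version}")
    return problems


reqs = ["pandas==2.2.2", "numpy>=1.26", "ExampleLib==1.0.0", "# pinned for the scoring model"]
for problem in check_requirements(reqs):
    print(problem)
```

In a CI job, the script would exit with a non-zero status whenever the returned list is non-empty, so the pull request cannot merge until the dependency is pinned or upgraded.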

Speakers: Kit Alderson, Data Engineer and CI/CD Wizard, USAA

Ted Douglass, Senior Data Engineer, USAA

Join Collin as he shares lessons learned and best practices from scaling dbt at ClickUp as the company rapidly grew from 100 to over 1,000 employees (without losing too much hair). This session is ideal for both new and experienced developers, at any stage of their scaling journey. He'll delve into practical steps for setting up a robust development environment, covering everything from project structure and IDE setups to advanced CI/CD processes. He'll also share hands-on examples, best practices for implementing various dbt capabilities, and the tradeoffs his team faced as they balanced cost and business impact at rapid scale.

Speaker: Collin Lenon, Senior Analytics Engineer, ClickUp

Scaling machine learning at large organizations like Renault Group presents unique challenges in terms of scale, legal requirements, and diversity of use cases. Data scientists require streamlined workflows and automated processes to efficiently deploy models into production. We present an MLOps pipeline based on Python, Kubeflow, and the GCP Vertex AI API, designed specifically for this purpose. It enables data scientists to focus on code development for pre-processing, training, evaluation, and prediction. This MLOps pipeline is a cornerstone of the AI@Scale program, which aims to roll out AI across the Group.

We chose a Python-first approach, allowing data scientists to focus purely on writing preprocessing or ML-oriented Python code, while also allowing data retrieval through SQL queries. The pipeline addresses key questions such as prediction type (batch or API), model versioning, resource allocation, drift monitoring, and alert generation. It favors faster time to market with automated deployment and infrastructure management. Although we encountered pitfalls and design difficulties, which we will discuss during the presentation, this pipeline integrates with a CI/CD process, ensuring efficient and automated model deployment and serving.

Finally, this MLOps solution empowers Renault data scientists to seamlessly translate innovative models into production and smooths the development of scalable, impactful AI-driven solutions.
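The pipeline above lists drift monitoring and alert generation among its concerns. The abstract does not say which drift metric Renault uses, so the sketch below swaps in one common choice, the Population Stability Index (PSI), computed over pre-binned proportions. The 0.2 alert threshold is a widely used rule of thumb, treated here as an assumption, and the psi and drift_alert function names are hypothetical.

```python
import math


def psi(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions.

    Both inputs are per-bin proportions over the same bins (each should sum to 1).
    eps guards against log(0) and division by zero for empty bins.
    """
    if len(expected) != len(actual):
        raise ValueError("distributions must have the same number of bins")
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        total += (a - e) * math.log(a / e)
    return total


def drift_alert(expected: list[float], actual: list[float], threshold: float = 0.2) -> bool:
    """Return True when PSI exceeds the (assumed) alert threshold."""
    return psi(expected, actual) > threshold


training = [0.25, 0.25, 0.25, 0.25]   # feature distribution at training time
this_week = [0.10, 0.20, 0.30, 0.40]  # same bins, recent production traffic
print(round(psi(training, this_week), 3))  # 0.228
print(drift_alert(training, this_week))    # True
```

Each bin contributes (actual - expected) * ln(actual / expected), which is never negative, so PSI is 0 only when the two distributions match bin for bin and grows as they diverge.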

Pixi goes further than existing conda-based package managers in many ways:

- Implemented from scratch in Rust and ships as a single binary
- Integrates a new SAT solver called resolvo
- Supports lockfiles like poetry/yarn/cargo do
- Cross-platform task system (simple bash-like syntax)
- Interoperability with PyPI packages by integrating uv
- It's 100% open-source with a permissive licence

We’re looking forward to taking a deep dive together into what conda and PyPI packages are and how we are seamlessly integrating the two worlds in pixi.

We will show you how to easily set up your new project using just one configuration file and always have a reproducible setup in your pocket. This means it will always run the same for your contributors, users, and CI machines (no more "but it worked on my machine!").

Using pixi's powerful cross-platform task system, you can replace your Makefile and a ton of developer documentation with just pixi run task!

We’ll also look at benchmarks and explain more about the difference between the conda and PyPI ecosystems.

This talk is for everyone who ever dealt with dependency hell.

More information about Pixi:

https://pixi.sh https://prefix.dev https://github.com/prefix-dev/pixi

Welcome to the cozy corner of the tech world where ones and zeros mingle with casual chit-chat. DataTopics Unplugged is your go-to spot for laid-back banter about the latest in tech, AI, and coding. In this episode, Jonas joins us with fresh takes on AI smarts, sneaky coding tips, and a spicy CI debate:

- OpenAI's o1 ("Strawberry"): The team explores OpenAI’s newest model, its advanced reasoning capabilities, and potential biases in benchmarks based on training methods. For a deeper dive, check out the Awesome-LLM-Strawberry project.
- AI hits 120 IQ: Yep, with an IQ of 120, AI is now officially smarter than most of us. We discuss the implications for AI's future role in decision-making and society.
- Greppability FTW: Ever struggled to find that one line of code? Greppability is the secret weapon you didn’t know you needed. Bart introduces greppability, a key metric for how easy it is to find code in large projects, and explains why it matters more than you think.
- Pre-commit hooks: Yay or nay? Is pre-commit the best tool for Continuous Integration, or are there better ways to streamline code quality checks? The team dives into the pros and cons and shares their own experiences.
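To illustrate the greppability idea: code that assembles identifiers at runtime hides them from text search, while spelling each name out in full keeps every use findable. The handler names below are hypothetical, and this is a generic example rather than anything taken from the episode.

```python
def handle_signup(user: str) -> str:
    return f"welcome {user}"


def handle_cancel(user: str) -> str:
    return f"goodbye {user}"


# Hard to grep: searching the codebase for "handle_signup" never finds this call,
# because the function name only exists once the string is assembled at runtime.
def dispatch_dynamic(event: str, user: str) -> str:
    return globals()["handle_" + event](user)


# Easy to grep: every handler name appears literally, so a search for
# "handle_signup" finds both its definition and this use.
HANDLERS = {
    "signup": handle_signup,
    "cancel": handle_cancel,
}


def dispatch_explicit(event: str, user: str) -> str:
    return HANDLERS[event](user)


print(dispatch_explicit("signup", "ada"))  # welcome ada
```

Both dispatchers behave the same at runtime. The difference only shows up when someone searches for where a handler is used, which is exactly the property greppability measures.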

Learn how to cut your dev time with DataOps.live. Iterate without a local dev environment, test before sharing, and use CI/CD to operationalize.

Have you ever wondered how to build trusted data products without writing a single line of code? Do you know how to do that for a Snowflake Native App? Learn how to cut your development loop short with DataOps.live. Iterate on your implementation without ever setting up a local development environment, test it before sharing it with your team members, and finally, use CI/CD to operationalize the result as a data pipeline. Automated tests establish trust with your business stakeholders and catch data and schema drift over time in your scheduled data pipeline.
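The last point, automated tests that catch schema drift in a scheduled pipeline, can be sketched as a comparison between the columns a pipeline expects and the columns it actually observes. This is a generic, hypothetical check in plain Python, not DataOps.live's implementation. In practice the observed schema would come from the warehouse's metadata (for example, a query against INFORMATION_SCHEMA.COLUMNS), which is assumed here rather than shown.

```python
def schema_drift(expected: dict[str, str], observed: dict[str, str]) -> dict[str, list[str]]:
    """Compare column->type mappings and report what changed.

    Column names are compared case-insensitively; types are compared as
    upper-case strings, so "number" and "NUMBER" count as the same type.
    """
    exp = {name.upper(): dtype.upper() for name, dtype in expected.items()}
    obs = {name.upper(): dtype.upper() for name, dtype in observed.items()}
    return {
        "missing": sorted(set(exp) - set(obs)),
        "unexpected": sorted(set(obs) - set(exp)),
        "type_changed": sorted(c for c in exp.keys() & obs.keys() if exp[c] != obs[c]),
    }


expected = {"order_id": "NUMBER", "amount": "NUMBER", "created_at": "TIMESTAMP_NTZ"}
observed = {"ORDER_ID": "NUMBER", "AMOUNT": "VARCHAR", "CHANNEL": "VARCHAR"}
drift = schema_drift(expected, observed)
print(drift)
# {'missing': ['CREATED_AT'], 'unexpected': ['CHANNEL'], 'type_changed': ['AMOUNT']}
```

A scheduled test can then fail the run with a check such as assert not any(drift.values()), which surfaces the drift to stakeholders before downstream consumers see broken data.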