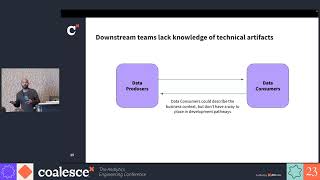

In this session, Chad Sanderson, CEO of Gable.ai and author of the upcoming O’Reilly book "Data Contracts," tackles the necessity of modern data management in an age of hyper-iteration, experimentation, and AI. He explores why traditional data management practices fail and how the cloud has fundamentally changed data development. The talk covers a modern application of data management best practices, including data change detection, data contracts, observability, and CI/CD tests, and outlines the roles of data producers and consumers. Attendees will leave with a clear understanding of the components of modern data management and how to leverage them for better data handling and decision-making.

talk-data.com

Topic

Data Contracts

64 tagged

Discover how to implement a modern data mesh architecture using Microsoft Azure's Cloud Adoption Framework. In this book, you'll learn strategies to decentralize data while maintaining strong governance, turning your current analytics struggles into scalable and streamlined processes. Unlock the potential of data mesh to achieve advanced and democratized analytics platforms.

What this book will help me do

- Learn to decentralize data governance and integrate data domains effectively.
- Master strategies for building and implementing data contracts suited to your organization's needs.
- Explore how to design a landing zone for a data mesh using Azure's Cloud Adoption Framework.
- Understand how to apply key architecture patterns for analytics, including AI and machine learning.
- Gain the knowledge to scale analytics frameworks using modern cloud-based platforms.

Author(s)

Deswandikar is a seasoned data architect with extensive experience in implementing cutting-edge data solutions in the cloud. With a passion for simplifying complex data strategies, the author brings real-world customer experiences into practical guidance. This book reflects the author's dedication to helping organizations achieve their data goals with clarity and effectiveness.

Who is it for?

This book is ideal for chief data officers, data architects, and engineers seeking to transform data analytics frameworks to accommodate advanced workloads. Especially useful for professionals aiming to implement cloud-based data mesh solutions, it assumes familiarity with centralized data systems, data lakes, and data integration techniques. If modernizing your organization's data strategy appeals to you, this book is for you.

Welcome to the cozy corner of the tech world where ones and zeros mingle with casual chit-chat. Datatopics Unplugged is your go-to spot for relaxed discussions around tech, news, data, and society. Dive into conversations that should flow as smoothly as your morning coffee (but don't), where industry insights meet laid-back banter. Whether you're a data aficionado or just someone curious about the digital age, pull up a chair, relax, and let's get into the heart of data, unplugged style!

In episode #41, titled “Regulations and Revelations: Rust Safety, ChatGPT Secrets, and Data Contracts,” we're thrilled to have Paolo Léonard joining us as we unpack a host of intriguing developments across the tech landscape:

- In Rust We Trust? White House Office urges memory safety: A dive into the push for memory safety and what it means for programming languages like Python.
- ChatGPT's Accidental Leak? Did OpenAI just accidentally leak the next big ChatGPT upgrade?: Speculations on the upcoming enhancements and their impact on knowledge accessibility.
- EU AI Act Adoption: EU Parliament officially adopts AI Act: Exploring the landmark AI legislation and its broad effects, with a critical look at potential human rights concerns.
- Meet Devin, the AI Engineer: Exploring the capabilities and potential of the first AI software engineer.
- Rye's New Stewardship: Astral takes stewardship of Rye: The next big thing in Python packaging and the role of community in driving innovation, with discussions unfolding on GitHub.
- Data Contract CLI: A look at data contracts and their importance in managing and understanding data across platforms.
- AI and Academic Papers: The influence of AI on academic research, highlighted by this paper and this paper, and how it's reshaping the landscape of knowledge sharing.

Welcome to another engaging episode of Datatopics Unplugged, the podcast where tech and relaxation intersect. Today, we're excited to host two special guests, Paolo and Tim, who bring their unique perspectives to our cozy corner.

Guests of Today

- Paolo: An enthusiast of fantasy and sci-fi reading, Paolo is on a personal mission to reduce his coffee consumption. He has a unique way of measuring his height, at 0.89 Sams tall. With over two and a half years of experience as a data engineer at dataroots, Paolo contributes a rich professional perspective. His hobbies extend to playing field hockey and a preference for the warmer summer season.
- Tim: Occasionally known as Dr. Dunkenstein, Tim brings a mix of humor and insight. He measures his height at 0.87 Sams tall. As the Head of Bizdev, he prefers to steer clear of grand titles, revealing his views on hierarchical structures and monarchies.

Topics

Biz Corner:

- Kyutai: We delve into France's answer to OpenAI with Paolo Leonard, exploring the implications and future of Kyutai: https://techcrunch.com/2023/11/17/kyutai-is-an-french-ai-research-lab-with-a-330-million-budget-that-will-make-everything-open-source/
- GPT-NL: A discussion led by Bart Smeets on the Netherlands' own open language model and its potential impact: https://www.computerweekly.com/news/366558412/Netherlands-starts-building-its-own-AI-language-model

Tech Corner:

- Data Quality Insights: A blog post by Paolo on data quality vs. data validation. We'll explore when and why data quality is essential, and evaluate tools like dbt, soda, deequ, and great_expectations: https://dataroots.io/blog/state-of-data-quality-october-2023
- Soda Data Contracts: An overview of the newly released OSS Data Contract Engine by Soda: https://docs.soda.io/soda/data-contracts.html

Food for Thought Corner:

- Hare - A 100-Year Programming Language: Bart starts a discussion on the ambition of Hare to remain relevant for a century: https://harelang.org/blog/2023-11-08-100-year-language/

Join us for this mix of expert insights and light-hearted moments. Whether you're deeply embedded in the tech world or just dipping your toes in, this episode promises to be both informative and entertaining!

And yes, there is a voucher: go to dataroots.io, navigate to the shop (top right), and use voucher code murilos_bargain_blast for a 25EUR discount!

Data contracts have been much discussed in the community of late, with a lot of curiosity around how to approach this concept in practice and how it might enable shift-left developer-first governance and data quality. For organizations adopting dbt while also dealing with non-dbt data that is upstream of the warehouse, it can be challenging to understand how to apply data contracts uniformly across a fragmented stack. We are calling this harmonizing layer the Control Plane for Data - powered by the common thread across these systems: metadata.

In this talk, Shirshanka Das, CTO of Acryl Data and founder of the DataHub Project, describes how you can use data contracts and DataHub to make your dbt-centered stack more reliable, as well as other use cases that can help build a simpler, more flexible data stack.

Speaker: Shirshanka Das, CTO, Acryl Data

Register for Coalesce at https://coalesce.getdbt.com

Surfline uses Segment to collect product analytics events to understand how surfers use their forecasts and live surf cameras across 9000+ surf spots worldwide. An open source tool was developed to define and manage product analytics event schemas using JSON Schema, and these schemas are used to build dbt staging models for all events.

With this solution, the data team has more time to build intermediate and mart models in dbt, knowing that the staging layer fully reflects Surfline’s product analytics events. This presentation is a real-life example of how schemas (or data contracts) can be used as a medium to build consensus, enforce standards, improve data quality, and speed up the dbt workflow for product analytics.
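To make the schema-to-staging-model idea concrete, here is a minimal sketch. It is not the tool described in the talk: the event name, the property names, the type mapping, and the `staging_model_sql` helper are all hypothetical, and the only assumption is that each event is described by a JSON Schema-style object with typed properties.

```python
from typing import Any

# Assumed mapping from JSON Schema primitive types to warehouse column types.
TYPE_MAP = {"string": "varchar", "integer": "bigint", "number": "float", "boolean": "boolean"}


def staging_model_sql(event_schema: dict[str, Any], source_table: str) -> str:
    """Render a staging SELECT that casts every property declared in the schema."""
    columns = []
    for name, spec in event_schema["properties"].items():
        sql_type = TYPE_MAP[spec["type"]]
        columns.append(f"    cast({name} as {sql_type}) as {name}")
    select_list = ",\n".join(columns)
    return f"select\n{select_list}\nfrom {source_table}"


# Hypothetical event schema, invented for illustration.
spot_viewed_schema = {
    "title": "spot_viewed",
    "type": "object",
    "properties": {
        "user_id": {"type": "string"},
        "spot_id": {"type": "string"},
        "camera_count": {"type": "integer"},
    },
    "required": ["user_id", "spot_id"],
}

print(staging_model_sql(spot_viewed_schema, "{{ source('segment', 'spot_viewed') }}"))
```

Because the staging SQL is derived from the schema, a change to an event is made once, in the schema, and the staging layer follows from it.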

Speaker: Greg Clunies, Senior Analytics Engineer, Surfline

Register for Coalesce at https://coalesce.getdbt.com/

Uncover the transformative power of decentralising data with host Jason Foster and Nachiket Mehta, Head of Data and Analytics Engineering at Wayfair. They delve into Wayfair's journey from a centralised data approach to a decentralised model, the challenges they encountered, the value created, and how it has led to faster and more informed decision-making. Explore how Wayfair is utilising domain models, data contracts, and a platform-based approach to empower teams and enhance overall performance, and learn how decentralisation can revolutionise your organisation's data practices and drive business growth.

Boring is back. As technology makes the lives of data engineers easier with respect to solving classical data problems, data engineers can now move to tackle "boring" problems like data contracts, semantics, and higher-level and value-add tasks. This also sets us up to tackle the next generation of data problems, namely integrating ML and AI into every business workflow. Boring is good.

This talk gives a high-level overview of the architecture of a data product DAG, its benefits in a data mesh world, and how to implement it easily. Airflow is the de facto orchestrator we use at Astrafy for all our data engineering projects. Over the years we have developed deep expertise in orchestrating data jobs, and recently we adopted the “data mesh” paradigm of having one Airflow DAG per data product. Our standard data product DAGs contain the following stages:

- Data contract: checks the integrity of the data before transforming it
- Data transformation: applies dbt transformations via a Kubernetes pod operator
- Data distribution: mainly informs downstream applications that new data is available to be consumed

For use cases where several data products need to finish before another data product is triggered, we have a mechanism with an engine in between that keeps track of finished DAGs and triggers DAGs based on a mapping table containing data product dependencies.
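The dependency mechanism described above could look roughly like the following sketch. It is plain Python rather than Airflow code, and the product names, the `DEPENDENCIES` mapping, and the `TriggerEngine` class are hypothetical stand-ins for the mapping table and engine mentioned in the talk.

```python
# Hypothetical mapping table: downstream data product -> upstream products it waits for.
DEPENDENCIES = {
    "sales_report": {"orders", "customers"},
    "churn_model": {"customers"},
}


class TriggerEngine:
    """Tracks finished data product DAGs and decides which downstream DAGs to trigger."""

    def __init__(self, dependencies):
        self.dependencies = dependencies
        self.finished = set()
        self.triggered = set()

    def mark_finished(self, product):
        """Record a finished DAG and return the downstream products that are now ready."""
        self.finished.add(product)
        ready = sorted(
            downstream
            for downstream, upstreams in self.dependencies.items()
            if upstreams <= self.finished and downstream not in self.triggered
        )
        self.triggered.update(ready)
        return ready


engine = TriggerEngine(DEPENDENCIES)
print(engine.mark_finished("customers"))  # ['churn_model']
print(engine.mark_finished("orders"))     # ['sales_report']
```

In a real deployment the returned names would be handed to the orchestrator to start the corresponding DAGs; that step is left out here because it depends on how the orchestrator is set up.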

Data contracts have been much discussed in the community of late, with a lot of curiosity around how to approach this concept in practice. We believe data contracts need a harmonizing layer to manage data quality in a uniform manner across a fragmented stack. We are calling this harmonizing layer the Control Plane for Data - powered by the common thread across these systems: metadata. For teams already orchestrating pipelines with Airflow, data contracts can be an effective way to process only data that meets preset quality standards. With a control plane as a connecting layer, producers can build data contracts that consumers can rely on, ensuring DAGs only run when a contract is valid. Producers can govern how workflows should behave, and consumers receive the tooling they need to opt into only high-quality data. Learn how to use data contracts and DataHub to make your Airflow pipelines more reliable - as well as other use cases that can help build a simpler, more flexible data stack.
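The idea of running a pipeline only when a contract is valid can be sketched as a simple gate. This is an illustration under stated assumptions, not DataHub's or Airflow's API: the `Contract` and `BatchStats` fields, the thresholds, and the `run_if_valid` helper are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Contract:
    """Quality thresholds a producer publishes (hypothetical fields)."""
    min_rows: int
    max_null_fraction: float


@dataclass(frozen=True)
class BatchStats:
    """Observed statistics for the latest batch (hypothetical fields)."""
    rows: int
    null_fraction: float


def violations(contract: Contract, stats: BatchStats) -> list[str]:
    """Return a list of contract violations; an empty list means the contract holds."""
    problems = []
    if stats.rows < contract.min_rows:
        problems.append(f"rows {stats.rows} < required {contract.min_rows}")
    if stats.null_fraction > contract.max_null_fraction:
        problems.append(
            f"null fraction {stats.null_fraction} > allowed {contract.max_null_fraction}"
        )
    return problems


def run_if_valid(contract, stats, task):
    """Run the downstream task only when the contract holds; otherwise skip it."""
    problems = violations(contract, stats)
    if problems:
        return ("skipped", problems)
    return ("ran", task())


contract = Contract(min_rows=1000, max_null_fraction=0.01)
print(run_if_valid(contract, BatchStats(rows=5000, null_fraction=0.0), lambda: "loaded"))
print(run_if_valid(contract, BatchStats(rows=10, null_fraction=0.0), lambda: "loaded"))
```

Returning the list of violations alongside the skip decision lets the consumer see why a run was withheld rather than only that it was.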

ABOUT THE TALK: After two years, three rounds of funding, and hundreds of new employees — Whatnot’s modern data stack has come from not existing to processing tens of millions of events across hundreds of different event types each day.

How does their small (but mighty!) team keep up? This talk explores data contracts: it covers the use of an Interface Definition Language (Protobuf) to serve as the source of truth for event definitions, govern event construction in production, and automatically generate dbt models in the data warehouse.
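As a rough illustration of what "governing event construction" from a single definition can mean, the sketch below uses a plain Python dictionary as a stand-in for a Protobuf message definition. It does not use the Protobuf library, and the event name, fields, and `build_event` helper are hypothetical.

```python
# Stand-in for an IDL definition; a Protobuf message would play this role in practice.
EVENT_DEFINITIONS = {
    "livestream_started": {"seller_id": str, "stream_id": str, "viewer_count": int},
}


def build_event(name: str, **fields) -> dict:
    """Construct an event, refusing anything the definition does not declare."""
    definition = EVENT_DEFINITIONS[name]
    unknown = set(fields) - set(definition)
    missing = set(definition) - set(fields)
    if unknown or missing:
        raise ValueError(f"{name}: unknown={sorted(unknown)} missing={sorted(missing)}")
    for field, expected in definition.items():
        if not isinstance(fields[field], expected):
            raise TypeError(f"{name}.{field} must be {expected.__name__}")
    return {"event": name, **fields}


print(build_event("livestream_started", seller_id="s1", stream_id="x9", viewer_count=0))

try:
    build_event("livestream_started", seller_id="s1", stream_id="x9", viewer_count="many")
except TypeError as err:
    print(f"rejected: {err}")
```

The point of the pattern is that producers cannot emit an event shape that the shared definition does not describe, so downstream models generated from the same definition stay in step with production.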

ABOUT THE SPEAKER: Zack Klein is a software engineer at Whatnot, where he thoroughly enjoys building data products and narrowly avoiding breaking production each day. Previously, he worked on big data platforms at Blackstone and HBO.

ABOUT DATA COUNCIL: Data Council (https://www.datacouncil.ai/) is a community and conference series that provides data professionals with the learning and networking opportunities they need to grow their careers.

Make sure to subscribe to our channel for the most up-to-date talks from technical professionals on data related topics including data infrastructure, data engineering, ML systems, analytics and AI from top startups and tech companies.

FOLLOW DATA COUNCIL: Twitter: https://twitter.com/DataCouncilAI LinkedIn: https://www.linkedin.com/company/datacouncil-ai/

Metrics are the most important primitive in the data world and driving the use of powerful and reliable metrics is the best way data teams can add value to their enterprises. In this talk, we'll walk through how data teams can best support the metric lifecycle, end-to-end from:

- Designing useful metrics as part of metric trees

- Developing these metrics off stable and standard data contracts

- Operationalizing metrics to drive value

ABOUT THE SPEAKER: Abhi Sivasailam is a Growth and Analytics leader who most recently led Product-Led Growth, Product Analytics, and Analytics Engineering at Flexport, where he helped to lead these and other functions through 10x growth over the past 3 years. Previously, Abhi led growth and data teams at Keap, Hustle, and Honeybook.

👉 Sign up for our “No BS” Newsletter to get the latest technical data & AI content: https://datacouncil.ai/newsletter

ABOUT THE TALK: Data Contracts are a mechanism for driving accountability and data ownership between producers and consumers. Contracts are used to ensure production-grade data pipelines are treated as part of the product and have clear SLAs and ownership.

Learn about the why, when and how of Data Contracts and the spectrum from culture change to implementation details.

ABOUT THE SPEAKER: Chad Sanderson is the former Head of Data at Convoy. He has implemented Data Contracts at scale on everything from Machine Learning models to Embedded Metrics. He currently operates the Data Quality Camp Slack group.

If you find yourself in a situation where you don't have a college degree, I want you to know it is possible to land a data job.

In this episode, Teshawn Black shares his experience landing data contracts at Google & LinkedIn despite having no certifications.

Teshawn’s Links:

https://www.tiktok.com/@the6figureanalyst

https://app.mediakits.com/the6figureanalyst

Timestamps:

- (6:29) Providing value is KEY!
- (10:49) We don’t have tomorrow, so live today
- (13:43) Understand what company looks for
- (17:05) Learn to communicate effectively
- (19:14) Working contract jobs is a blessing
- (20:17) Always market yourself
- (22:33) Ask the budget first
- (25:07) Is job security really SECURE?
- (27:35) We are all expendable
- (29:32) Focus on data cleaning & data manipulation
- (30:42) Don’t skip Excel!

Mentioned in this episode: Join the last cohort of 2025! The LAST cohort of The Data Analytics Accelerator for 2025 kicks off on Monday, December 8th and enrollment is officially open!

To celebrate the end of the year, we’re running a special End-of-Year Sale, where you’ll get: ✅ A discount on your enrollment 🎁 6 bonus gifts, including job listings, interview prep, AI tools + more

If your goal is to land a data job in 2026, this is your chance to get ahead of the competition and start strong.

👉 Join the December Cohort & Claim Your Bonuses: https://www.datacareerjumpstart.com/daa

Welcome to today's podcast on data contracts for data leaders and executives. Data contracts are a critical component of data management and are essential for any organization that collects, processes, or analyzes data. This podcast will explore data contracts, their importance, and how data leaders and executives can implement them in their organizations. To begin with, let's define what we mean by data contracts. A data contract is a formal agreement between the data provider and the data consumer that specifies the terms and conditions under which the data will be shared, used, and protected. The data contract outlines the obligations and responsibilities of both parties. It clearly explains how the data will be managed, stored, and analyzed.

Summary

There has been a lot of discussion about the practical application of data mesh and how to implement it in an organization. Jean-Georges Perrin was tasked with designing a new data platform implementation at PayPal and wound up building a data mesh. In this episode he shares that journey and the combination of technical and organizational challenges that he encountered in the process.

Announcements

- Hello and welcome to the Data Engineering Podcast, the show about modern data management.
- Are you tired of dealing with the headache that is the 'Modern Data Stack'? We feel your pain. It's supposed to make building smarter, faster, and more flexible data infrastructures a breeze. It ends up being anything but that. Setting it up, integrating it, maintaining it: it’s all kind of a nightmare. And let's not even get started on all the extra tools you have to buy to get it to do its thing. But don't worry, there is a better way. TimeXtender takes a holistic approach to data integration that focuses on agility rather than fragmentation. By bringing all the layers of the data stack together, TimeXtender helps you build data solutions up to 10 times faster and saves you 70-80% on costs. If you're fed up with the 'Modern Data Stack', give TimeXtender a try. Head over to dataengineeringpodcast.com/timextender where you can do two things: watch us build a data estate in 15 minutes and start for free today.
- Your host is Tobias Macey and today I'm interviewing Jean-Georges Perrin about his work at PayPal to implement a data mesh and the role of data contracts in making it work.

Interview

- Introduction
- How did you get involved in the area of data management?
- Can you start by describing the goals and scope of your work at PayPal to implement a data mesh?
  - What are the core problems that you were addressing with this project?
  - Is a data mesh ever "done"?
- What was your experience engaging at the organizational level to identify the granularity and ownership of the data products that were needed in the initial iteration?
- What was the impact of leading multiple teams on the design of how to implement communication/contracts throughout the mesh?
- What are the technical systems that you are relying on to power the different data domains?
  - What is your philosophy on enforcing uniformity in technical systems vs. relying on interface definitions as the unit of consistency?
- What are the biggest challenges (technical and procedural) that you have encountered during your implementation?
- How are you managing visibility/auditability across the different data domains? (e.g. observability, data quality, etc.)
- What are the most interesting, innovative, or unexpected ways that you have seen PayPal's data mesh used?
- What are the most interesting, unexpected, or challenging lessons that you have learned while working on data mesh?
- When is a data mesh the wrong choice?
- What do you have planned for the future of your data mesh at PayPal?

Contact Info

- LinkedIn
- Blog

Parting Question

From your perspective, what is the biggest gap in the tooling or technology for data management today?

Closing Announcements

- Thank you for listening! Don't forget to check out our other shows. Podcast.init covers the Python language, its community, and the innovative ways it is being used. The Machine Learning Podcast helps you go from idea to production with machine learning.
- Visit the site to subscribe to the show, sign up for the mailing list, and read the show notes.
- If you've learned something or tried out a project from the show then tell us about it! Email [email protected] with your story.
- To help other people find the show please leave a review on Apple Podcasts and tell your friends and co-workers.

Links

- Data Mesh
  - O'Reilly Book (affiliate link)
- The next generation of Data Platforms is the Data Mesh
- PayPal
- Conway's Law
- Data Mesh For All Ages - US, Data Mesh For All Ages - UK
- Data Mesh Radio
- Data Mesh Community
- Data Mesh In Action
- Great Expectations
  - Podcast Episode

The intro and outro music is from The Hug by The Freak Fandango Orchestra / CC BY-SA

Sponsored By:

TimeXtender:  TimeXtender is a holistic, metadata-driven solution for data integration, optimized for agility. TimeXtender provides all the features you need to build a future-proof infrastructure for ingesting, transforming, modelling, and delivering clean, reliable data in the fastest, most efficient way possible.

You can't optimize for everything all at once. That's why we take a holistic approach to data integration that optimises for agility instead of fragmentation. By unifying each layer of the data stack, TimeXtender empowers you to build data solutions 10x faster while reducing costs by 70%-80%. We do this for one simple reason: because time matters.

Go to dataengineeringpodcast.com/timextender today to get started for free!

Support Data Engineering Podcast

When it comes to data, there are data consumers (analysts, builders and users of data products, and various other business stakeholders) and data producers (software engineers and various adjacent roles and systems). It's all too common for data producers to "break" the data as they add new features and functionality to systems, because they focus on the operational processes the system supports and not on the data that those processes spawn. How can this be avoided? One approach is to implement "data contracts." What that actually means… is the subject of this episode, which Shane Murray from Monte Carlo joined us to discuss! For complete show notes, including links to items mentioned in this episode and a transcript of the show, visit the show page.
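One common way to catch a producer "breaking" the data before consumers notice is to compare the schema a change would produce against the schema consumers were promised. The sketch below is a generic illustration, not something taken from the episode: the column names, the simple name-to-type schema representation, and the `breaking_changes` helper are hypothetical, and it assumes that removed columns and changed types are breaking while added columns are not.

```python
def breaking_changes(old: dict[str, str], new: dict[str, str]) -> list[str]:
    """List changes that would break consumers relying on the old schema."""
    changes = []
    for column, old_type in old.items():
        if column not in new:
            changes.append(f"removed column: {column}")
        elif new[column] != old_type:
            changes.append(f"type changed: {column} {old_type} -> {new[column]}")
    # Columns that exist only in `new` are additions and are treated as non-breaking.
    return changes


# Hypothetical schemas before and after a producer's feature change.
old_schema = {"order_id": "string", "amount": "float", "created_at": "timestamp"}
new_schema = {"order_id": "string", "amount": "string", "shipped_at": "timestamp"}

for change in breaking_changes(old_schema, new_schema):
    print(change)
```

A check like this can run in the producer's CI pipeline so that a breaking change is flagged on the pull request instead of in a downstream dashboard.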

Summary

One of the reasons that data work is so challenging is that no single person or team owns the entire process. This introduces friction in the process of collecting, processing, and using data. In order to reduce the potential for broken pipelines, some teams have started to adopt the idea of data contracts. In this episode Abe Gong brings his experiences with the Great Expectations project and community to discuss the technical and organizational considerations involved in applying these constraints to your data workflows.

Announcements

- Hello and welcome to the Data Engineering Podcast, the show about modern data management.
- When you're ready to build your next pipeline, or want to test out the projects you hear about on the show, you'll need somewhere to deploy it, so check out our friends at Linode. With their new managed database service you can launch a production ready MySQL, Postgres, or MongoDB cluster in minutes, with automated backups, 40 Gbps connections from your application hosts, and high throughput SSDs. Go to dataengineeringpodcast.com/linode today and get a $100 credit to launch a database, create a Kubernetes cluster, or take advantage of all of their other services. And don't forget to thank them for their continued support of this show!
- Atlan is the metadata hub for your data ecosystem. Instead of locking your metadata into a new silo, unleash its transformative potential with Atlan's active metadata capabilities. Push information about data freshness and quality to your business intelligence, automatically scale up and down your warehouse based on usage patterns, and let the bots answer those questions in Slack so that the humans can focus on delivering real value. Go to dataengineeringpodcast.com/atlan today to learn more about how Atlan’s active metadata platform is helping pioneering data teams like Postman, Plaid, WeWork & Unilever achieve extraordinary things with metadata and escape the chaos.
- Struggling with broken pipelines? Stale dashboards? Missing data? If this resonates with you, you’re not alone. Data engineers struggling with unreliable data need look no further than Monte Carlo, the leading end-to-end Data Observability Platform! Trusted by the data teams at Fox, JetBlue, and PagerDuty, Monte Carlo solves the costly problem of broken data pipelines. Monte Carlo monitors and alerts for data issues across your data warehouses, data lakes, dbt models, Airflow jobs, and business intelligence tools, reducing time to detection and resolution from weeks to just minutes. Monte Carlo also gives you a holistic picture of data health with automatic, end-to-end lineage from ingestion to the BI layer directly out of the box. Start trusting your data with Monte Carlo today! Visit dataengineeringpodcast.com/montecarlo to learn more.
- Your host is Tobias Macey and today I'm interviewing Abe Gong about the technical and organizational implementation of data contracts.

Interview

- Introduction
- How did you get involved in the area of data management?
- Can you describe what your conception of a data contract is?
  - What are some of the ways that you have seen them implemented?
- How has your work on Great Expectations influenced your thinking on the strategic and tactical aspects of adopting/implementing data contracts in a given team/organization?
  - What does the negotiation process look like for identifying what needs to be included in a contract?
- What are the interfaces/integration points where data contracts are most useful/necessary?
- What are the discussions that need to happen when deciding when/whether a contract "violation" is a blocking action vs. issuing a notification?
- At what level of detail/granularity are contracts most helpful?
- At the technical level, what does the implementation/integration/deployment of a contract look like?
- What are the most interesting, innovative, or unexpected ways that you have seen data contracts used?
- What are the most interesting, unexpected, or chall

WARNING: This episode contains detailed discussion of data contracts. The modern data stack introduces challenges in terms of collaboration between data producers and consumers. How might we solve them to ultimately build trust in data quality? Chad Sanderson leads the data platform team at Convoy, a late-stage series-E freight technology startup. He manages everything from instrumentation and data ingestion to ETL, in addition to the metrics layer, experimentation software and ML. Prukalpa Sankar is a co-founder of Atlan, where she develops products that enable improved collaboration between diverse users like businesses, analysts, and engineers, creating higher efficiency and agility in data projects. For full show notes and to read 6+ years of back issues of the podcast's companion newsletter, head to https://roundup.getdbt.com. The Analytics Engineering Podcast is sponsored by dbt Labs.

The "socio" is inseparable from the "technical". In fact, technological change often begets social and organizational change.

And in the data space, the technical changes that some now refer to as the "modern data stack" call for changes in how teams work with data, and in turn how data specialists work within those teams. Enter the Modern Data Team.

In this talk, Abhi Sivasailam will unpack the changing landscape of data roles and teams and what this looks like in action at Flexport. Come learn how Flexport approaches data contracts, management, and governance, and the central role that Analytics Engineers and Product Analysts play in these processes.

Check the slides here: https://docs.google.com/presentation/d/1Sgm3J6EkeKQf5D1MKopsLLAMOhAZ05CxDlei2mbDE90/edit#slide=id.g16424dcc8d3_0_1145

Coalesce 2023 is coming! Register for free at https://coalesce.getdbt.com/.