Summary

Data lineage has grown from a convenient feature into a critical need as data systems have increased in scale, complexity, and centrality to the business. Alvin is a platform that aims to provide a low-effort solution for data lineage, focused on simplifying the work of data engineers. In this episode, co-founder Martin Sahlen explains the impact that easy access to lineage information can have on the work of data engineers and analysts, and how he and his team have designed their platform to offer that information to engineers and stakeholders in the places where they interact with data.

Announcements

Hello and welcome to the Data Engineering Podcast, the show about modern data management.

When you’re ready to build your next pipeline, or want to test out the projects you hear about on the show, you’ll need somewhere to deploy it, so check out our friends at Linode. With their new managed database service you can launch a production-ready MySQL, Postgres, or MongoDB cluster in minutes, with automated backups, 40 Gbps connections from your application hosts, and high-throughput SSDs. Go to dataengineeringpodcast.com/linode today and get a $100 credit to launch a database, create a Kubernetes cluster, or take advantage of all of their other services. And don’t forget to thank them for their continued support of this show!

You wake up to a Slack message from your CEO, who’s upset because the company’s revenue dashboard is broken. You’re told to fix it before this morning’s board meeting, which is just minutes away. Enter Metaplane, the industry’s only self-serve data observability tool. In just a few clicks, you identify the issue’s root cause, conduct an impact analysis—and save the day. Data leaders at Imperfect Foods, Drift, and Vendr love Metaplane because it helps them catch, investigate, and fix data quality issues before their stakeholders ever notice they exist. Setup takes 30 minutes. You can literally get up and running with Metaplane by the end of this podcast. Sign up for a free-forever plan at dataengineeringpodcast.com/metaplane, or try out their most advanced features with a 14-day free trial. Mention the podcast to get a free "In Data We Trust World Tour" t-shirt.

RudderStack helps you build a customer data platform on your warehouse or data lake. Instead of trapping data in a black box, they enable you to easily collect customer data from the entire stack and build an identity graph on your warehouse, giving you full visibility and control. Their SDKs make event streaming from any app or website easy, and their state-of-the-art reverse ETL pipelines enable you to send enriched data to any cloud tool. Sign up free… or just get the free t-shirt for being a listener of the Data Engineering Podcast at dataengineeringpodcast.com/rudder.

Data teams are increasingly under pressure to deliver. According to a recent survey by Ascend.io, 95% of them reported being at or over capacity. With 72% of data experts reporting demands on their team going up faster than they can hire, it’s no surprise they are increasingly turning to automation. In fact, while only 3.5% report having current investments in automation, 85% of data teams plan on investing in automation in the next 12 months. 85%!!! That’s where our friends at Ascend.io come in. The Ascend Data Automation Cloud provides a unified platform for data ingestion, transformation, orchestration, and observability. Ascend users love its declarative pipelines, powerful SDK, elegant UI, and extensible plug-in architecture, as well as its support for Python, SQL, Scala, and Java. Ascend automates workloads on Snowflake, Databricks, BigQuery, and open source Spark, and can be deployed in AWS, Azure, or GCP. Go to dataengineeringpodcast.com/ascend and sign up for a free trial. If you’re a Data Engineering Podcast listener, you get credits worth $5,000 when you become a customer.

Your host is Tobias Macey, and today I’m interviewing Martin Sahlen about his work on data lineage at Alvin and how it factors into the day-to-day work of data engineers.

Interview

Introduction

How did you get involved in the area of data management?

Can you describe what Alvin is and the story behind it?

What is the core problem that you are trying to solve at Alvin?

Data lineage has quickly become an overloaded term. What are the elements of lineage that you are focused on addressing?

What are some of the other sources/pieces of information that you integrate into the lineage graph?

How does data lineage show up in the work of data engineers?

In what ways does your focus on data engineers inform the way that you model the lineage information?

As with every data asset/product, the lineage graph is only as useful as the data that it stores. What are some of the ways that you focus on establishing and ensuring a complete view of lineage?

How do you account for assets (e.g. tables, dashboards, exports, etc.) that are created outside of the "officially supported" methods? (e.g. someone manually runs a SQL create statement, etc.)

Can you describe how you have implemented the Alvin platform?

How have the design and goals shifted from when you first started exploring the problem?

What are the types of data systems/assets that you are focused on supporting? (e.g. data warehouses vs. lakes, structured vs. unstructured, which BI tools, etc.)

How does Alvin fit into the workflow of data engineers and their downstream customers/collaborators?

What are some of the design choices (both visual and functional) that you focused on to avoid friction in the data engineer’s workflow?

What are some of the open questions/areas for investigation/improvement in the space of data lineage?

What are the factors that contribute to the difficulty of a truly holistic and complete view of lineage across an organization?

What are the most interesting, innovative, or unexpected ways that you have seen Alvin used?

What are the most interesting, unexpected, or challenging lessons that you have learned while working on Alvin?

When is Alvin the wrong choice?

What do you have planned for the future of Alvin?

Contact Info

LinkedIn

@martinsahlen on Twitter

Parting Question

From your perspective, what is the biggest gap in the tooling or technology for data management today?

Closing Announcements

Thank you for listening! Don’t forget to check out our other shows. Podcast.init covers the Python language, its community, and the innovative ways it is being used. The Machine Learning Podcast helps you go from idea to production with machine learning.

Visit the site to subscribe to the show, sign up for the mailing list, and read the show notes.

If you’ve learned something or tried out a project from the show, then tell us about it! Email [email protected] with your story.

To help other people find the show, please leave a review on Apple Podcasts and tell your friends and co-workers.

Links

Alvin

Unacast

sqlparse Python library

Cython

Podcast.init Episode

Antlr

Kotlin programming language

PostgreSQL

Podcast Episode

OpenSearch

ElasticSearch

Redis

Kubernetes

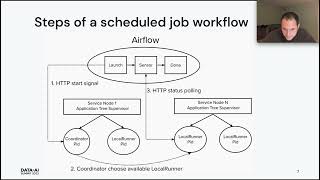

Airflow

BigQuery

Spark

Looker

Mode

The intro and outro music is from The Hug by The Freak Fandango Orchestra / CC BY-SA

Support Data Engineering Podcast