As we look back at 2024, we're highlighting some of our favourite episodes of the year, and with 100 of them to choose from, it wasn't easy! The four guests we'll be recapping with are:

- Lea Pica - A celebrity in the data storytelling and visualisation space. Richie and Lea cover the full picture of data presentation, how to understand your audience, how to leverage Hollywood storytelling and more. Out December 19.
- Alex Banks - Founder of Sunday Signal. Adel and Alex cover Alex’s journey into AI and what led him to create Sunday Signal, the potential of AI, prompt engineering at its most basic level, chain-of-thought prompting, the future of LLMs and more. Out December 23.
- Don Chamberlin - The renowned co-inventor of SQL. Richie and Don explore the early development of SQL, how it became standardized, the future of SQL through NoSQL and SQL++ and more. Out December 26.
- Tom Tunguz - General Partner at Theory Ventures, a $235m VC firm. Richie and Tom explore trends in generative AI, cloud+local hybrid workflows, data security, the future of business intelligence and data analytics, AI in the corporate sector and more. Out December 30.

Rapid change seems to be the new norm in the data and AI space, and with the ecosystem constantly shifting, it can be tricky to keep up. Fortunately, any self-respecting venture capitalist looking into data and AI will stay on top of what’s changing and where the next big breakthroughs are likely to come from. We all want to know which important trends are emerging and how we can take advantage of them, so why not learn from a leading VC?

Tomasz Tunguz is a General Partner at Theory Ventures, a $235m early-stage venture capital firm. He blogs at tomtunguz.com and co-authored Winning with Data. He has worked with, or currently works with, Looker, Kustomer, Monte Carlo, Dremio, Omni, Hex, Spot, Arbitrum, Sui and many others. He was previously the product manager for Google's social media monetization team, including the Google-MySpace partnership, and managed the launches of AdSense into six new markets in Europe and Asia. Before Google, Tunguz developed systems for the Department of Homeland Security at Appian Corporation.

In the episode, Richie and Tom explore trends in generative AI, the impact of AI on professional fields, cloud+local hybrid workflows, data security, changes in data warehousing driven by integrated AI tools, the future of business intelligence and data analytics, and the challenges and opportunities surrounding AI in the corporate sector. You'll also discover Tom's picks for the hottest new data startups.

Links Mentioned in the Show: Tom’s Blog; Theory Ventures; Article: What Air Canada Lost In ‘Remarkable’ Lying AI Chatbot Case; [Course] Implementing AI Solutions in Business; Related Episode: Making Better Decisions using Data & AI with Cassie Kozyrkov, Google's First Chief Decision Scientist; Sign up to RADAR: AI...

talk-data.com

Topic: Omni (Omni Analytics), 35 tagged

The workflow between dbt and BI is slowing us down. A simple change in dbt can require multiple teams to coordinate and hours of manual work to fix broken content. Valuable time is spent managing disconnected analytics tools that should work together seamlessly.

It’s time to tip the scales back toward speed — without compromising control. In this session, we’ll discuss how dbt and BI should work together. We’ll show you a workflow for moving fast in your BI tool, while still maintaining control of your data model in dbt.

Speaker: Chris Merrick, Co-Founder & CTO, Omni Analytics

Read the blog to learn about the latest dbt Cloud features announced at Coalesce, designed to help organizations embrace analytics best practices at scale: https://www.getdbt.com/blog/coalesce-2024-product-announcements

Reduce IT overhead and drive innovation with Database Service for Google Distributed Cloud Hosted. Focus on strategic application development by automating time-consuming tasks. Leverage PostgreSQL, Oracle, and the cutting-edge performance and AI capabilities of AlloyDB Omni for a competitive edge.

Click the blue “Learn more” button above to tap into special offers designed to help you implement what you are learning at Google Cloud Next 25.

Relying on third-party-hosted artificial intelligence (AI) models may not always be an option for your application, and support for those model endpoints is not guaranteed through your next software release. By hosting state-of-the-art AI models like Gemma in the same environment as AlloyDB Omni, you can run scalable generative AI apps on any cloud or on premises, meeting regulatory needs with low latency. Learn how Neuropace runs Omni for enterprise-grade vector search in its local environment, which contains sensitive customer workloads.

What if your customer’s shopping experience could change with their mood or adapt to what they are looking for today? With generative AI, retailers and consumer packaged goods companies can create an ultra-personal, mind-reader-like experience for their shoppers across every channel. Join Accenture and Google to learn how shopping can become hyper-personal and dynamic while improving business outcomes.

By attending this session, your contact information may be shared with the sponsor for relevant follow-up for this event only.

Ditch legacy and embrace freedom with AlloyDB Omni, your hybrid and multicloud enterprise database. Run anywhere, from data centers to the public clouds of your choice, and unlock performance and ease of management. Elevate your apps with HTAP and built-in generative AI to build vector embeddings for lightning-fast search, remotely or locally – no connectivity needed. Simplify operations with the Kubernetes operator: automate lifecycle, HA/DR, and scale effortlessly. Learn more about AlloyDB Omni and supercharge your data strategy, anywhere.

In this workshop, you will learn how to easily create a Retrieval-Augmented Generation (RAG) application and how to use it. We will highlight AlloyDB Omni (our deploy-anywhere version of AlloyDB) together with pgvector's vector search capabilities. You will learn to run an LLM and an embedding model locally so that you can run this application anywhere. Building an app securely is harder than ever when LLMs are working with your data. Come and build with me!
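As a rough illustration of the retrieval step in such a RAG app, here is a minimal sketch in plain Python so it runs anywhere. It assumes the chunk embeddings already exist: the three-dimensional vectors below are made-up stand-ins for output from the locally run embedding model. Chunks are ranked by cosine distance, the quantity pgvector's `<=>` operator returns. In AlloyDB Omni, the same step would be a query along the lines of `SELECT content FROM chunks ORDER BY embedding <=> $1 LIMIT 2`, where the `chunks` table and its columns are hypothetical.

```python
import math

# Hypothetical toy corpus: real vectors would come from the local embedding
# model and be stored in a pgvector column in AlloyDB Omni.
DOCUMENTS = {
    "AlloyDB Omni runs on premises or in any cloud.": [0.9, 0.1, 0.0],
    "pgvector adds a vector column type to PostgreSQL.": [0.2, 0.9, 0.1],
    "Structured Streaming triggers switch between batch and streaming.": [0.0, 0.1, 0.9],
}

def cosine_distance(a: list[float], b: list[float]) -> float:
    """1 - cosine similarity, the same quantity pgvector's <=> operator returns."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm

def retrieve(query_vec: list[float], k: int = 2) -> list[str]:
    """Return the k nearest chunks, like ORDER BY embedding <=> $1 LIMIT k."""
    ranked = sorted(DOCUMENTS, key=lambda text: cosine_distance(DOCUMENTS[text], query_vec))
    return ranked[:k]

def build_prompt(question: str, query_vec: list[float], k: int = 2) -> str:
    """Augment the question with retrieved context before it goes to the local LLM."""
    context = "\n".join(f"- {chunk}" for chunk in retrieve(query_vec, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

if __name__ == "__main__":
    # Hypothetical embedding of the question "Where can AlloyDB Omni run?"
    print(build_prompt("Where can AlloyDB Omni run?", [0.8, 0.3, 0.0]))
```

In a deployed app, the string returned by `build_prompt` would be sent to the locally hosted LLM. The ranking itself would run inside the database rather than in Python.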

In this session we will show how you can query, connect, and report on your data insights across clouds, including AWS and Azure, with BigQuery Omni and Looker. Reduce costly copying and customization and get answers quickly, so you can get back to work.

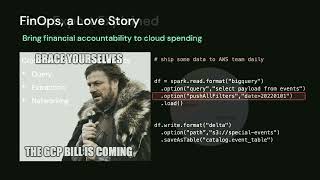

This session begins with data warehouse trivia and lessons learned from production implementations of multicloud data architecture. You will learn to design future-proof, low-latency data systems that focus on openness and interoperability. You will also get a gentle introduction to Cloud FinOps principles that can help your organization reduce compute spend and increase efficiency.

Most enterprises today are multicloud. While many low-code connectors promise to make data available for analytics in real time, they pose long-lasting challenges:

- Inefficient EDW targets

- Inability to evolve schema

- Prohibitively expensive data exports due to cloud and vendor lock-in

The alternative is an open data lake that unifies batch and streaming workloads. Bronze landing zones in an open format eliminate the data extraction costs imposed by proprietary EDWs. Apache Spark™ Structured Streaming provides a unified ingestion interface. Streaming triggers let us switch back and forth between batch and streaming with a one-line code change. Streaming aggregation lets us compute incrementally over data that arrives close together in time.
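The following plain-Python sketch, which is not Spark code, shows why a streaming aggregation can stand in for a batch job. The state below is updated one micro-batch at a time, yet it ends with the same one-minute tumbling-window sums as recomputing over all events at once. The event times, values and 60-second window are hypothetical. In Structured Streaming, the one-line switch is typically the writer's trigger: for example, `.trigger(availableNow=True)` for batch-style runs versus `.trigger(processingTime="1 minute")` for ongoing micro-batches. A real job would also set a watermark so that old window state can be dropped, which this sketch omits.

```python
from collections import defaultdict
from typing import Iterable

# Hypothetical events: (event_time_in_seconds, value)
Event = tuple[int, int]
WINDOW_SECONDS = 60  # assumed one-minute tumbling windows

def window_start(event_time: int) -> int:
    """Start of the tumbling window an event falls into."""
    return event_time - event_time % WINDOW_SECONDS

class IncrementalWindowSum:
    """Running per-window totals, standing in for a streaming engine's state store."""

    def __init__(self) -> None:
        self.state: dict[int, int] = defaultdict(int)

    def process_batch(self, batch: Iterable[Event]) -> dict[int, int]:
        # Only the new micro-batch is scanned; earlier results live in self.state.
        for event_time, value in batch:
            self.state[window_start(event_time)] += value
        return dict(self.state)

def batch_window_sum(events: Iterable[Event]) -> dict[int, int]:
    """Recompute everything in one pass, as a plain batch job would."""
    return IncrementalWindowSum().process_batch(events)

events = [(5, 1), (42, 2), (61, 3), (118, 5), (125, 4)]

# Streaming mode: the same events arrive as three micro-batches.
stream = IncrementalWindowSum()
for micro_batch in (events[:2], events[2:4], events[4:]):
    streamed = stream.process_batch(micro_batch)

print(streamed)                  # {0: 3, 60: 8, 120: 4}
print(batch_window_sum(events))  # {0: 3, 60: 8, 120: 4}
```

Because the aggregation is associative, splitting the input into micro-batches changes only when results are produced, not what they are. This is what makes the trigger a one-line choice.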

The talk gives specific examples of how to use Auto Loader to discover newly arrived data and ensure exactly-once, incremental processing; how to configure DLT (Delta Live Tables) effectively to further simplify streaming jobs and accelerate the development cycle; and how to apply software engineering (SWE) best practices to Workflows and integrate with popular Git providers, using either the Databricks Project or the Databricks Terraform provider.
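Below is a minimal, stdlib-only sketch of the idea behind Auto Loader's incremental file discovery. It assumes a local landing directory of CSV files and a JSON checkpoint file; both are hypothetical. Auto Loader itself is used through `spark.readStream.format("cloudFiles")` with a checkpoint location, and this is not its implementation. Each run picks up only the files the checkpoint has not yet seen. Results and progress are committed together in a single atomic file replace, so a rerun after a failure neither skips nor double-counts a file.

```python
import json
import os
import tempfile
from pathlib import Path

def load_state(checkpoint: Path) -> dict[str, int]:
    """Results already committed, keyed by file name (empty on the first run)."""
    if checkpoint.exists():
        return json.loads(checkpoint.read_text())
    return {}

def commit_state(checkpoint: Path, state: dict[str, int]) -> None:
    """Write results and progress together, atomically, via a temp file + os.replace."""
    tmp = checkpoint.with_suffix(".tmp")
    tmp.write_text(json.dumps(state))
    os.replace(tmp, checkpoint)

def run_once(landing_dir: Path, checkpoint: Path) -> list[str]:
    """Process only files not seen before; return the names handled in this run."""
    state = load_state(checkpoint)
    new_files = sorted(p for p in landing_dir.glob("*.csv") if p.name not in state)
    for path in new_files:
        # Hypothetical "processing": count data rows, excluding the header line.
        state[path.name] = len(path.read_text().splitlines()) - 1
    commit_state(checkpoint, state)
    return [p.name for p in new_files]

with tempfile.TemporaryDirectory() as root:
    landing = Path(root, "landing")
    landing.mkdir()
    ckpt = Path(root, "checkpoint.json")

    Path(landing, "day1.csv").write_text("id\n1\n2\n")
    first = run_once(landing, ckpt)    # picks up day1.csv
    Path(landing, "day2.csv").write_text("id\n3\n")
    second = run_once(landing, ckpt)   # picks up day2.csv only; day1.csv is skipped
    third = run_once(landing, ckpt)    # nothing new
    final_state = load_state(ckpt)
    print(first, second, third, final_state)
```

If a run crashes before `commit_state`, nothing from that run is recorded, so the next run simply redoes it. The effect is that each file is counted exactly once.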

Talk by: Christina Taylor

Here’s more to explore:

- Big Book of Data Engineering: 2nd Edition: https://dbricks.co/3XpPgNV
- The Data Team's Guide to the Databricks Lakehouse Platform: https://dbricks.co/46nuDpI

Summary

Business intelligence has gone through many generational shifts, but each generation has largely kept the same workflow: data analysts create reports that the business uses to understand and steer itself, a process that is very labor- and time-intensive. The team at Omni has taken a new approach, automatically building models based on the queries that are executed. In this episode Chris Merrick shares how they manage integration and automation around the modeling layer and how it improves the organizational experience of business intelligence.

Announcements

Hello and welcome to the Data Engineering Podcast, the show about modern data management.

Truly leveraging and benefiting from streaming data is hard - the data stack is costly, difficult to use and still has limitations. Materialize breaks down those barriers with a true cloud-native streaming database - not simply a database that connects to streaming systems. With a PostgreSQL-compatible interface, you can now work with real-time data using ANSI SQL including the ability to perform multi-way complex joins, which support stream-to-stream, stream-to-table, table-to-table, and more, all in standard SQL. Go to dataengineeringpodcast.com/materialize today and sign up for early access to get started. If you like what you see and want to help make it better, they're hiring across all functions!

Your host is Tobias Macey and today I'm interviewing Chris Merrick about the Omni Analytics platform and how they are adding automatic data modeling to your business intelligence.

Interview

Introduction

How did you get involved in the area of data management?

Can you describe what Omni Analytics is and the story behind it?

What are the core goals that you are trying to achieve with building Omni?

Business intelligence has gone through many evolutions. What are the unique capabilities that Omni Analytics offers over other players in the market?

What are the technical and organizational anti-patterns that typically grow up around BI systems?

What are the elements that contribute to BI being such a difficult product to use effectively in an organization?

Can you describe how you have implemented the Omni platform?

How have the design/scope/goals of the product changed since you first started working on it?

What does the workflow for a team using Omni look like?

What are some of the developments in the broader ecosystem that have made your work possible?

What are some of the positive and negative inspirations that you have drawn from the experience that you and your team-mates have gained in previous businesses?

What are the most interesting, innovative, or unexpected ways that you have seen Omni used?

What are the most interesting, unexpected, or challenging lessons that you have learned while working on Omni?

When is Omni the wrong choice?

What do you have planned for the future of Omni?

Contact Info

LinkedIn

@cmerrick on Twitter

Parting Question

From your perspective, what is the biggest gap in the tooling or technology for data management today?

Closing Announcements

Thank you for listening! Don't forget to check out our other shows. Podcast.init covers the Python language, its community, and the innovative ways it is being used. The Machine Learning Podcast helps you go from idea to production with machine learning. Visit the site to subscribe to the show, sign up for the mailing list, and read the show notes. If you've learned something or tried out a project from the show, then tell us about it! Email [email protected] with your story. To help other people find the show, please leave a review on Apple Podcasts and tell your friends and co-workers.

Links

- Omni Analytics
- Stitch
- RJ Metrics
- Looker
  - Podcast Episode
- Singer
- dbt
  - Podcast Episode
- Teradata
- Fivetran
- Apache Arrow
  - Podcast Episode
- DuckDB
  - Podcast Episode
- BigQuery
- Snowflake
  - Podcast Episode

The intro and outro music is from The Hug by The Freak Fandango Orchestra / CC BY-SA

Sponsored By:

Materialize:

Looking for the simplest way to get the freshest data possible to your teams? Because let's face it: if real-time were easy, everyone would be using it. Look no further than Materialize, the streaming database you already know how to use.

Materialize’s PostgreSQL-compatible interface lets users leverage the tools they already use, with unsurpassed simplicity enabled by full ANSI SQL support. Delivered as a single platform with the separation of storage and compute, strict-serializability, active replication, horizontal scalability and workload isolation — Materialize is now the fastest way to build products with streaming data, drastically reducing the time, expertise, cost and maintenance traditionally associated with implementation of real-time features.

Sign up now for early access to Materialize and get started with the power of streaming data with the same simplicity and low implementation cost as batch cloud data warehouses.

Go to materialize.com

Support Data Engineering Podcast

How does a traditional bricks-and-mortar retailer transform itself into an omni-channel business with strong digital and data science capabilities? In this episode of Leaders of Analytics we learn from Bunnings General Manager, Data and Analytics, Genevieve Elliott, how the company is transforming its operations using data and analytics. As Australia and New Zealand’s largest retailer of home improvement products, Bunnings is a highly complex organisation with a large physical footprint, a wide product range and an elaborate supply chain. Bunnings is almost 130 years old and has undergone tremendous growth over the last three decades. The company’s well-known strategy of “lowest price, widest range and best customer experience” is increasingly being driven by the company’s growing data and analytics capability. In this episode we discuss:

- Genevieve’s career journey and how she ended up in data and analytics
- How Bunnings uses data to create operational efficiencies, improve customer experience and optimise pricing
- How the team prioritises projects and engages with the organisation
- How the Data & Analytics team is driving a data-driven culture through the company
- Genevieve’s advice to other analytics leaders wanting to drive strategically important results for their organisation, and much more.

Genevieve Elliott on LinkedIn: https://www.linkedin.com/in/genevieve-elliott/

Want to be featured as a guest on Making Data Simple? Reach out to us at [email protected] and tell us why you should be next.

Abstract

Hosted by Al Martin, VP, IBM Expert Services Delivery, Making Data Simple provides the latest thinking from a range of experts on big data, AI, and their implications for the enterprise.

This week on Making Data Simple, we have Elo Umeh from Terragon, Africa’s fastest-growing enterprise marketing technology company. Terragon uses its on-demand marketing cloud platform, attribution software, and deep analytics capability to enable thoughtful, targeted omni-channel access to 100m+ mobile-first African consumers. Elo is the Founder and CEO of Terragon Group. His career spans more than 15 years in mobile and digital media across East and West Africa, and he was part of the founding team at Mtech Communications. Elo holds a Global Executive MBA from IESE Business School, where he graduated at the top of his class, and a Bachelor’s degree in Business Administration from Lagos State University.

Show Notes

- 4:02 – What keeps you going?
- 6:15 – Let’s dive into Terragon
- 8:40 – Who are your customers?
- 11:06 – Define pre-paid
- 14:40 – What kind of insights and security are you providing?
- 20:37 – What kind of technology is Terragon using?
- 23:16 – What was it about the smartphone that made you want to go out on your own?
- 26:10 – Who’s your biggest competitor?
- 28:20 – What’s next for Terragon?
- 31:01 – What are the biggest mistakes entrepreneurs make?

Terragon

Elo Umeh - LinkedIn

Connect with the Team

Producer Kate Brown - LinkedIn. Producer Steve Templeton - LinkedIn. Host Al Martin - LinkedIn and Twitter.

The Making Data Simple Podcast is hosted by Al Martin, WW VP Technical Sales, IBM, where we explore trending technologies, business innovation, and leadership ... while keeping it simple & fun.

Omni-channel is no longer new hype; it's the norm. We have access to omni-channel analytics, communication, and automation tools. The next step is to connect them and visualize those connections. Segmentation is needed not only for people but also for marketing automation.

There are cases when you can’t use Google Cloud services but still want all the benefits of AlloyDB's AI integration and to serve a local model directly to the database. In such cases, AlloyDB Omni deployed in a Kubernetes cluster can be a great solution, covering edge cases and keeping all communication between the database and the AI model local.