Are you tired of manual, failure-prone dbt deployments? This talk explores how CI/CD and IaC can revolutionize your data transformation workflows, enhancing collaboration and data quality within your dbt projects. Learn the core concepts of CI/CD, including automated testing and deployment pipelines. I will guide you through building a CI/CD pipeline for dbt, triggering it with code changes and running comprehensive tests. We will also dive into Infrastructure as Code (IaC) and how it automates dbt Cloud deployments using tools like Terraform. You will gain practical knowledge for automating dbt Cloud resources, projects, and environments. As a bonus, we will take a sneak peek at the recently announced dbt Fusion engine.

talk-data.com

Topic: Terraform (79 tagged)

At Trendyol, Turkey’s leading e-commerce company, Apache Airflow powers our task orchestration, handling DAGs with 500+ tasks, complex interdependencies, and diverse environments. Managing on-prem Airflow instances posed challenges in scalability, maintenance, and deployment. To address these, we built TaskHarbor, a fully managed orchestration platform with a hybrid architecture that combines Airflow on GKE with on-prem resources for optimal performance and efficiency. This talk covers how we:

* Enabled seamless DAG synchronization across environments using GCS Fuse.
* Optimized workload distribution via GCP’s HTTPS & TCP Load Balancers.
* Automated infrastructure provisioning (GKE, CloudSQL, Kubernetes) using Terraform.
* Simplified Airflow deployments by replacing Helm YAML files with a custom templating tool, reducing configurations to 10-15 lines.
* Built a fully automated deployment pipeline, ensuring zero developer intervention.

We enhanced efficiency, reliability, and automation in hybrid orchestration by embracing a scalable, maintainable, and cloud-native strategy. Attendees will obtain practical insights into architecting Airflow at scale and optimizing deployments.

Leveraging Databricks as a platform, we facilitate the sharing of anonymized datasets across various Databricks workspaces and accounts, spanning multiple cloud environments such as AWS, Azure, and Google Cloud. This capability, powered by Delta Sharing, extends both within and outside Sleep Number, enabling accelerated insights while ensuring compliance with data security and privacy standards. In this session, we will showcase our architecture and implementation strategy for data sharing, highlighting the use of Databricks’ Unity Catalog and Delta Sharing, along with integration with platforms like Jira, Jenkins, and Terraform to streamline project management and system orchestration.

Learn how a financial services company successfully transitioned its petabyte-scale logging platform from an on-premises environment to a scalable, cost-effective, and secure solution on Google Cloud. This talk will delve into the key phases of their journey, including rigorous benchmarking, scale testing, and the implementation of seamless log ingestion. Explore how the solution leveraged Terraform-driven automation to efficiently manage over 250,000 resources and preserved existing content such as searches, alerts, and dashboards.

This talk offers demonstrations and live discussions on how to rapidly deploy production-ready GKE or Slurm clusters using Cluster Toolkit and Terraform. Leverage the latest GPUs to accelerate machine learning workloads and optimize resource utilization with GKE's Kueue, autoscaling Slurm, and Dynamic Workload Scheduler (DWS). Explore storage solutions like Google Cloud Storage (GCS), GCSFuse, Filestore Zonal, and Parallelstore. Leave this session with the tools and knowledge you need to deploy a high-performance ML cluster in minutes.

This hands-on lab equips you with the essential skills to manage and automate your infrastructure using Terraform. Learn to define and provision infrastructure resources across various providers using HashiCorp Configuration Language (HCL). Explore core concepts like resource dependencies and understand how to safely build, change, and destroy infrastructure using Terraform's declarative approach. This hands-on experience will empower you to streamline deployments, enhance consistency, and improve overall infrastructure management efficiency.
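The idea of resource dependencies mentioned above can be illustrated with a small sketch. This is not how Terraform is implemented and it is not part of the lab; it only shows, under the assumption of four hypothetical resources (`network`, `subnet`, `database`, `app_server`), how a declarative tool could derive a safe creation order from declared dependencies and reverse it for teardown.

```python
from graphlib import TopologicalSorter

# Hypothetical resources, each mapped to the resources it depends on.
# The names are illustrative only and do not come from the lab.
DEPENDENCIES = {
    "network": set(),
    "subnet": {"network"},
    "database": {"subnet"},
    "app_server": {"subnet", "database"},
}


def creation_order(deps):
    """Return an order in which every resource follows its dependencies."""
    return list(TopologicalSorter(deps).static_order())


def destruction_order(deps):
    """Return the reverse order, so dependents are removed first."""
    return list(reversed(creation_order(deps)))


if __name__ == "__main__":
    print("create: ", creation_order(DEPENDENCIES))
    print("destroy:", destruction_order(DEPENDENCIES))
```

In this sketch the user only declares what depends on what; the ordering is derived, which is the essence of a declarative approach.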

If you register for a Learning Center lab, please ensure that you sign up for a Google Cloud Skills Boost account for both your work domain and personal email address. You will need to authenticate your account as well (be sure to check your spam folder!). This will ensure you can arrive and access your labs quickly onsite. You can follow this link to sign up!

It’s time for another episode of the Data Engineering Central Podcast. In this episode, we cover:

* AWS Lambda + DuckDB and Delta Lake (Polars, Daft, etc.)
* IaC: Long Live Terraform
* Databricks Data Quality with DQX
* Unity Catalog releases for DuckDB and Polars
* Bespoke vs. Managed Data Platforms
* Delta Lake vs. Iceberg and UniForm for a single table

Thanks for b…

This is a public episode. If you'd like to discuss this with other subscribers or get access to bonus episodes, visit dataengineeringcentral.substack.com/subscribe

Organizations often find themselves constrained by domain-specific languages when managing cloud infrastructure, leading to complex workarounds and maintenance challenges. This workshop demonstrates how transitioning to a general-purpose programming language can transform your infrastructure management, making common tasks more intuitive and maintainable.

This book, "Building Modern Data Applications Using Databricks Lakehouse," provides a comprehensive guide for data professionals to master the Databricks platform. You'll learn to effectively build, deploy, and monitor robust data pipelines with Databricks' Delta Live Tables, empowering you to manage and optimize cloud-based data operations effortlessly.

What this Book will help me do

* Understand the foundations and concepts of Delta Live Tables and its role in data pipeline development.
* Learn workflows to process and transform real-time and batch data efficiently using the Databricks lakehouse architecture.
* Master the implementation of Unity Catalog for governance and secure data access in modern data applications.
* Deploy and automate data pipeline changes using CI/CD, leveraging tools like Terraform and Databricks Asset Bundles.
* Gain advanced insights in monitoring data quality and performance, optimizing cloud costs, and managing DataOps tasks effectively.

Author(s)

Will Girten, the author, is a seasoned Solutions Architect at Databricks with over a decade of experience in data and AI systems. With a deep expertise in modern data architectures, Will is adept at simplifying complex topics and translating them into actionable knowledge. His books emphasize real-time application and offer clear, hands-on examples, making learning engaging and impactful.

Who is it for?

This book is geared towards data engineers, analysts, and DataOps professionals seeking efficient strategies to implement and maintain robust data pipelines. If you have a basic understanding of Python and Apache Spark and wish to delve deeper into the Databricks platform for streamlining workflows, this book is tailored for you.

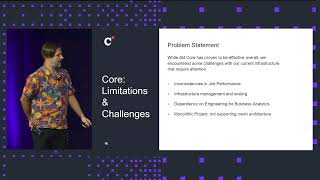

Since the beginning of 2024, the Warner Brothers Discovery team supporting the CNN data platform has been undergoing an extensive migration project from dbt Core to dbt Cloud. Concurrently, the team is also segmenting their project into multi-project frameworks utilizing dbt Mesh. In this talk, Zachary will review how this transition has simplified data pipelines, improved pipeline performance and data quality, and made data collaboration at scale more seamless.

He'll discuss how dbt Cloud features like the Cloud IDE, automated testing, documentation, and code deployment have enabled the team to standardize on a single developer platform while also managing dependencies effectively. He'll share details on how the automation framework they built using Terraform streamlines dbt project deployments with dbt Cloud to a "push-button" process. By leveraging an infrastructure as code experience, they can orchestrate the creation of environment variables, dbt Cloud jobs, Airflow connections, and AWS secrets with a unified approach that ensures consistency and reliability across projects.

Speakers: Mamta Gupta Staff Analytics Engineer Warner Brothers Discovery

Zachary Lancaster Manager, Data Engineering Warner Brothers Discovery

Read the blog to learn about the latest dbt Cloud features announced at Coalesce, designed to help organizations embrace analytics best practices at scale https://www.getdbt.com/blog/coalesce-2024-product-announcements

There are undoubtedly similarities between the disciplines of analytics engineering and DevOps: in fact, dbt was founded with the goal of helping data professionals embrace DevOps principles as part of the data workflow. As the embedded DevOps engineer for a mature analytics engineering function, Katie Claiborne, Founding Analytics Engineer at Duet, observed parallels between analytics-as-code and infrastructure-as-code, particularly tools like Terraform. In this talk, she'll examine how analytics engineering is a means of empowerment for data practitioners and discuss infrastructure engineering as a means of scaling dbt Cloud deployments. Learn about similarities between analytics and infrastructure configuration tools, how to apply the concepts you've learned about analytics engineering towards new disciplines like DevOps, and how to extend engineering principles beyond data transformation and into the world of infrastructure.

Speaker: Katie Claiborne Founding Analytics Engineer Duet

At TIER Mobility, we successfully reduced our cloud expenses by over 60% in less than two years. While this was a significant achievement, the journey wasn’t without its challenges. In this presentation, I’ll share insights into the potential pitfalls of cost reduction strategies that might end up being more expensive in the long run.

Airflow is not just purpose-built for data applications. It is a job scheduler on steroids. This is exactly what a cloud platform team needs: a configurable and scalable automation tool that can handle thousands of administrative tasks. Come learn how one enterprise platform team used Airflow to support cloud infrastructure at unprecedented scale.

In this talk, we’ll discuss how Instacart leverages Apache Airflow to orchestrate a vast network of data pipelines, powering both our core infrastructure and dbt deployments. As a data-driven company, Airflow plays a critical role in enabling us to execute large and intricate pipelines securely, compliantly, and at scale. We’ll delve into the following key areas:

a. High-Throughput Cluster Management: We’ll explore how we manage and maintain our Airflow cluster, ensuring the efficient execution of over 2,000 DAGs across diverse use cases.
b. Centralized Airflow Vision: We’ll outline our plans for establishing a company-wide, centralized Airflow cluster, consolidating all Airflow instances at Instacart.
c. Custom Airflow Tooling: We’ll showcase the custom tooling we’ve developed to manage YML-based DAGs, execute DAGs on external ECS workers, leverage Terraform for cluster deployment, and implement robust cluster monitoring at scale.

By sharing our extensive experience with Airflow, we aim to contribute valuable insights to the Airflow community.

In this workshop, you’ll learn how to build on top of your existing Terraform code with Pulumi or replace Terraform entirely. Learn how to consume TF outputs in Pulumi programs, automatically convert HCL to a general-purpose language, and import resources from Terraform state files into your Pulumi state files.
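To make "consuming TF outputs" more concrete, here is a minimal plain-Python sketch of reading the JSON that `terraform output -json` prints, where each output name maps to an object with `value`, `type`, and `sensitive` fields. It does not use Pulumi and is not the workshop's method; the function name `read_terraform_outputs` and the sample values are hypothetical.

```python
import json


def read_terraform_outputs(raw_json, include_sensitive=False):
    """Map each output name to its value from `terraform output -json` text.

    Outputs flagged as sensitive are skipped unless include_sensitive is True.
    """
    parsed = json.loads(raw_json)
    return {
        name: entry["value"]
        for name, entry in parsed.items()
        if include_sensitive or not entry.get("sensitive", False)
    }


# Hypothetical sample in the shape printed by `terraform output -json`.
SAMPLE = """
{
  "vpc_id": {"sensitive": false, "type": "string", "value": "vpc-123"},
  "db_password": {"sensitive": true, "type": "string", "value": "example"}
}
"""

if __name__ == "__main__":
    print(read_terraform_outputs(SAMPLE))
```

Skipping sensitive outputs by default is a design choice of this sketch, so that secrets are not passed along to downstream code by accident.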

Welcome to the cozy corner of the tech world where ones and zeros mingle with casual chit-chat. Datatopics Unplugged is your go-to spot for relaxed discussions around tech, news, data, and society. Dive into conversations that should flow as smoothly as your morning coffee (but don't), where industry insights meet laid-back banter. Whether you're a data aficionado or just someone curious about the digital age, pull up a chair, relax, and let's get into the heart of data, unplugged style!

In this episode, we're thrilled to have special guest Mehdi Ouazza diving into a plethora of hot tech topics:

* Mehdi Ouazza's insights into his career, his online community, and working with DuckDB and MotherDuck.
* Demystifying DevRel: Definitions and distinctions in the realm of tech influence (dive deeper here).
* Terraform's Licensing Shift: Reactions to HashiCorp's recent changes and its new IBM collaboration, more details here.
* GitHub Copilot Workspace: Exploring the latest in AI-powered coding assistance, comparing with devin.ai and Cody.
* Snowflake's Arctic LLM: Discussing the latest enterprise AI capabilities and their real-world applications. Read more about Arctic: what it excels at and how its performance was measured.
* More legal kerfuffle in the GenAI realm: The ongoing legal debates around AI's use in creative industries, highlighted by a dispute over Drake’s use of late rapper Tupac’s AI-generated voice in a diss track and the licensing deal between the Financial Times and OpenAI.
* Future of Data Engineering: Examining the integration of LLMs into data engineering tools. Insights on prompt-based feature engineering and Databricks' English SDK.
* AI in Music Creation: A little bonus with an AI-generated song about Murilo, created with Suno.

In this episode, we're joined by special guest Vitale Sparacello, an MLflow Ambassador, to delve into a myriad of topics shaping the current and future landscape of AI and software development:

* MLflow Deep Dive: Exploring the MLflow Ambassador program, MLflow's role in promoting MLOps practices, and its expansion to support generative AI technologies.
* Introducing Llama 3: Discussing Meta's newest language model, Llama 3, its capabilities, and the nuanced policy of its distribution, featured on platforms like Groq. Read more here.
* Emerging AI Tools: Evaluating Open-Parse for advanced text parsing and debating the longevity of PDF documents in the age of advanced AI technologies.
* OpenTofu vs. Terraform Drama: Analyzing the ongoing legal dispute between Terraform and OpenTofu, with discussions around code ethics and links to OpenTofu's LinkedIn and their official blog response.
* The Future of AI Devices: Are smartphones the endgame, or is there a future for specialized AI wearables? Speculating on the evolution of AI devices, focusing on the Humane AI Pin review, Rabbit R1, and Dot Computer.

Go check out the YouTube video afterwards so you don't miss out on Murilo in that suit he promised (with a duck tie, of course).

Learn the proven strategies and approaches to creating scalable infrastructure, as code, with HashiCorp Terraform. We'll cover how large organizations succeed in structuring projects across teams, architecting globally available systems, securely sharing sensitive information between environments, setting up developer-friendly workflows, and more. This session will discuss these topics in depth and show them in action with a live demo and codebase that deploys many services across multiple teams.

Click the blue “Learn more” button above to tap into special offers designed to help you implement what you are learning at Google Cloud Next 25.

In this session, Slalom and Bayer AG will discuss how Bayer AG leverages GKE Enterprise to build an internal developer platform, integrating Anthos features like Config Sync and Service Mesh. You’ll hear how the implementation, automated through Cloud Build and Terraform, empowered Bayer AG to establish robust security and DevOps practices to support its data engineering teams. By attending this session, your contact information may be shared with the sponsor for relevant follow up for this event only.
