Are you looking to rapidly lift and transform your VMware estate in the cloud, with minimal disruption? Join this session to:

- Get a primer on the unique capabilities of Google Cloud VMware Engine (GCVE), such as four nines (99.99%) of cluster-level uptime, deeply integrated networking, and more

- Learn directly from customers on how they have successfully adopted GCVE

- Discover new releases, including zero-config networking, Terraform automation, and a flexible ve2 node platform

- Learn how to use Migration Center to assess your landscape before you invest

Click the blue “Learn more” button above to tap into special offers designed to help you implement what you are learning at Google Cloud Next 25.

talk-data.com

Topic: Terraform (79 tagged)

Platform/IT admins: Have you ever experienced delays setting up foundational infrastructure on Google Cloud? Look no further than Google's new solution for setting up foundational infrastructure: a mix of UI and Infrastructure as Code. Now available to all customers globally in the console, the Google Cloud setup product guides you through setting up an organization resource, integrating with a third-party identity provider, and setting up an initial folder/project structure, IAM, Shared VPCs, hybrid networking, and more. You can deploy directly from the console or download the configuration as Terraform. In this session, we will demo the new guided product and share how you can test it and provide feedback.

Learn how to easily deploy your first Gen AI summarization application in 7 minutes with the new Jump Start Solution. We will then show how to customize your deployment (e.g., changing the prompt to generate automatic replies to customer complaints) with Terraform, so that your Gen AI application is managed as Infrastructure as Code for better automation, security, efficiency, reliability, consistency, and speed to market.

Marsh McLennan runs a complex Apigee Hybrid configuration, with 36 organizations operating in six global data centers. Keeping all of this in sync across production and nonproduction environments is a challenge. While the infrastructure itself is deployed with Terraform, Marsh McLennan wanted to apply the same declarative approach to the entire environment. See how it used Apigee's management APIs to build a state machine to keep the whole system running smoothly, allowing APIs to flow seamlessly from source control through to production.
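The declarative idea described above, comparing a desired state held in source control with the actual state of an environment and deriving the actions that close the gap, can be sketched roughly as follows. This is a generic reconciliation sketch and not Marsh McLennan's state machine: the `Action` type, the `plan` and `apply` helpers, and the dictionary-shaped state are all hypothetical, and no Apigee management API is called.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    kind: str  # "create", "update", or "delete"
    name: str  # hypothetical resource name, e.g. an API proxy


def plan(desired: dict[str, dict], actual: dict[str, dict]) -> list[Action]:
    """Compare desired and actual state and return the actions that close the gap."""
    actions = []
    # Present in the desired state only: must be created.
    for name in sorted(desired.keys() - actual.keys()):
        actions.append(Action("create", name))
    # Present in both but different: must be updated.
    for name in sorted(desired.keys() & actual.keys()):
        if desired[name] != actual[name]:
            actions.append(Action("update", name))
    # Present in the actual state only: must be deleted.
    for name in sorted(actual.keys() - desired.keys()):
        actions.append(Action("delete", name))
    return actions


def apply(actions: list[Action], desired: dict[str, dict],
          actual: dict[str, dict]) -> dict[str, dict]:
    """Return the new actual state after applying the actions (in memory only)."""
    result = dict(actual)
    for action in actions:
        if action.kind == "delete":
            result.pop(action.name)
        else:
            result[action.name] = desired[action.name]
    return result
```

In a real system, `apply` would issue management API calls instead of editing a dictionary, and running `plan` again afterwards should yield no actions, which is what makes the approach safe to repeat.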

Welcome to the cozy corner of the tech world where ones and zeros mingle with casual chit-chat. Datatopics Unplugged is your go-to spot for relaxed discussions around tech, news, data, and society. Dive into conversations that should flow as smoothly as your morning coffee (but don't), where industry insights meet laid-back banter. Whether you're a data aficionado or just someone curious about the digital age, pull up a chair, relax, and let's get into the heart of data, unplugged style!

In today's episode:

Biz corner

- AI regulation race: https://www.aisnakeoil.com/p/what-the-executive-order-means-for https://www.ft.com/content/e0574e79-d723-4d94-9681-fae22648e3ad https://www.washingtonpost.com/technology/2023/10/25/artificial-intelligence-executive-order-biden/ https://www.wired.com/story/joe-biden-wants-us-government-algorithms-tested-for-potential-harm-against-citizens

Tech corner

- Adoption of the business source license (BSL): https://www.hashicorp.com/blog/hashicorp-adopts-business-source-license https://www.getdbt.com/blog/licensing-dbt https://mariadb.com/bsl-faq-mariadb/ https://github.com/MaterializeInc/materialize/blob/main/LICENSE

- Companies responding to HashiCorp's move to BSL: https://spacelift.io/blog/spacelift-latest-statement-on-hashicorp-bsl

- OpenTofu & HashiCorp's OSS work: https://opentofu.org/ https://medium.com/@andrewhertog/contributing-to-terraform-vs-opentofu-26779d480c7f?sk=5343d4a76b390833af621905c858777b

- Cyber Resilience Act might kill open-source altogether: https://www.linuxfoundation.org/blog/understanding-the-cyber-resilience-act https://github.blog/2023-07-12-no-cyber-resilience-without-open-source-sustainability/ https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=celex:52022PC0454

- OSS companies - is it a sustainable way of doing open source? https://bun.sh/blog/bun-v1.0 https://astral.sh/ https://pydantic.dev/ https://duckdblabs.com/

- We haven't figured out OSS sustainability yet, but the BSL comes close. https://curl.se/docs/security.html https://xkcd.com/2347/

Intro music courtesy of fesliyanst

How do you manage a fleet of 400 AKS clusters? In this experience report (REX), we will present how we run a fleet of 400 AKS clusters and how we support teams in managing their clusters.

SG APIM - Société Générale's API Management platform on AWS: from the services used to keep the platform running through to a real application on a business case, we will explain how SG APIM is deployed on AWS.

Timur is a DevOps professional from Berlin with 7 years of experience in the field. He is also an active speaker at various meetups, podcasts, and conferences (HashiTalks, DevOpsDays), as well as a co-organiser of the monthly Berlin AWS User Group meetups. He currently works at TIER Mobility SE as a Senior DevOps Engineer in the Core Infrastructure & Developer Experience team.

Do you want to know how Terraform scales with your organisation? You will learn about the usual stages of Terraform adoption in a growing environment and how to avoid some of the pitfalls of those stages.

I have worked a lot as a consultant and have touched a lot of cloud infrastructures. I’ve built Terraform-driven infrastructure from scratch for teeny-tiny startups, I’ve worked with the Terraform codebases of enterprises, I’ve seen it all. I know what Terraform usage looks like when a company has just started its journey with it, and I know what it becomes when it’s used in a big, experienced company.

In this talk I will tell you about the typical evolution of Terraform code, and you might even recognise which stage you are at now. That will hopefully help you avoid some of the pitfalls awaiting you around the corner and build a better solution.

In this talk you’ll learn about how Pleo organized on-call schedules for 150+ engineers, how Pleo uses Terraform modules to make on-call the default option, and strategies for training and onboarding to on-call.

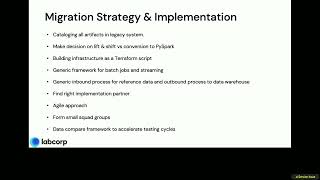

Join this session to learn about the Labcorp data platform transformation from on-premises Hadoop to AWS Databricks Lakehouse. We will share best practices and lessons learned from cloud-native data platform selection, implementation, and migration from Hadoop (within six months) with Unity Catalog.

We will share the steps taken to retire several legacy on-premises technologies and leverage Databricks native features like Spark streaming, workflows, job pools, cluster policies, and Spark JDBC within the Databricks platform. We will also cover lessons learned in implementing Unity Catalog and building a security and governance model that scales across applications. We will show demos that walk you through the batch frameworks, streaming frameworks, and data compare tools used across several applications to improve data quality and speed of delivery.

Discover how we have improved operational efficiency and resiliency and reduced TCO, and how we scaled the building of workspaces and associated cloud infrastructure using the Terraform provider.

Talk by: Mohan Kolli and Sreekanth Ratakonda

Connect with us: Website: https://databricks.com Twitter: https://twitter.com/databricks LinkedIn: https://www.linkedin.com/company/databricks Instagram: https://www.instagram.com/databricksinc Facebook: https://www.facebook.com/databricksinc

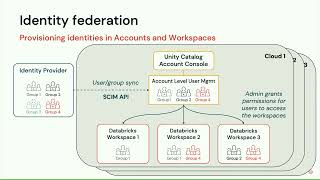

Across industries, a multicloud setup has quickly become the reality for large organizations. Multicloud introduces new governance challenges, as permissions models often do not translate from one cloud to the other and, if they do, are insufficiently granular to accommodate privacy requirements and principles of least privilege. This problem can be especially acute for data and AI workloads that rely on sharing and aggregating large and diverse data sources across business unit boundaries, and where governance models need to incorporate assets such as table rows/columns and ML features and models.

In this session, we will provide guidelines on how best to overcome these challenges for companies that have adopted the Databricks Lakehouse as their collaborative space for data teams across the organization, by exploiting some of the unique product features of the Databricks platform. We will focus on a common scenario: a data platform team providing data assets to two different ML teams, one using the same cloud and the other one using a different cloud.

We will explain the step-by-step setup of a unified governance model by leveraging the following components and conventions:

- Unity Catalog for implementing fine-grained access control across all data assets: files in cloud storage, rows and columns in tables and ML features and models

- The Databricks Terraform provider to automatically enforce guardrails and permissions across clouds

- Account-level SSO integration and identity federation to centrally administer access across workspaces

- Delta Sharing to seamlessly propagate changes in provider data sets to consumers in near real-time

- Centralized audit logging for a unified view on what asset was accessed by whom

Talk by: Ioannis Papadopoulos and Volker Tjaden

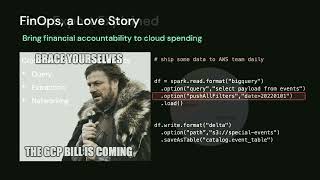

This session begins with data warehouse trivia and lessons learned from production implementations of multicloud data architecture. You will learn to design future-proof low latency data systems that focus on openness and interoperability. You will also gain a gentle introduction to Cloud FinOps principles that can help your organization reduce compute spend and increase efficiency.

Most enterprises today are multicloud. While an assortment of low-code connectors boasts the ability to make data available for analytics in real time, they pose long-lasting challenges:

- Inefficient EDW targets

- Inability to evolve schema

- Forbiddingly expensive data exports due to cloud and vendor lock-in

The alternative is an open data lake that unifies batch and streaming workloads. Bronze landing zones in open format eliminate the data extraction costs required by proprietary EDW. Apache Spark™ Structured Streaming provides a unified ingestion interface. Streaming triggers allow us to switch back and forth between batch and stream with one-line code changes. Streaming aggregation enables us to incrementally compute on data that arrives close together in time.

Specific examples are given on how to use Auto Loader to discover newly arrived data and ensure exactly-once, incremental processing; how DLT can be configured effectively to further simplify streaming jobs and accelerate the development cycle; and how to apply SWE best practices to Workflows and integrate with popular Git providers, using either the Databricks Project or the Databricks Terraform provider.
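The general idea behind discovering newly arrived data and processing it incrementally can be illustrated with a small checkpoint-based sketch. This is plain Python and not Auto Loader's implementation or API: the `load_seen` and `process_new_files` helpers, the JSON checkpoint file, and the directory layout are all assumptions made for illustration.

```python
import json
from pathlib import Path
from typing import Callable


def load_seen(checkpoint: Path) -> set[str]:
    """Read the names of already-processed files from the checkpoint, if any."""
    if checkpoint.exists():
        return set(json.loads(checkpoint.read_text()))
    return set()


def process_new_files(landing_dir: Path, checkpoint: Path,
                      handle: Callable[[Path], None]) -> int:
    """Handle only files not yet recorded in the checkpoint; return how many."""
    seen = load_seen(checkpoint)
    new_files = sorted(p for p in landing_dir.iterdir()
                       if p.is_file() and p.name not in seen)
    for path in new_files:
        handle(path)
        seen.add(path.name)
        # Record progress after each file so a later run skips it.
        checkpoint.write_text(json.dumps(sorted(seen)))
    return len(new_files)
```

Note that this toy version would reprocess a file if it crashed between `handle` and the checkpoint write, so on its own it gives at-least-once behavior; exactly-once results additionally require an idempotent or transactional sink.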

Talk by: Christina Taylor

Here’s more to explore: Big Book of Data Engineering: 2nd Edition: https://dbricks.co/3XpPgNV The Data Team's Guide to the Databricks Lakehouse Platform: https://dbricks.co/46nuDpI

Learn how to apply DataSecOps patterns powered by Terraform to Unity Catalog to scale your governance efforts and support your organizational data usage.

Talk by: Zeashan Pappa and Deepak Sekar

In today's data-driven landscape, the demands placed upon data engineers are diverse and multifaceted. With the integration of Java, Python, or Go microservices, Databricks SDKs provide a powerful bridge between the established ecosystems and Databricks. They allow data engineers to unlock new levels of integration and collaboration, as well as integrate Unity Catalog into processes to create advanced workflows straight from notebooks.

In this session, learn best practices for when and how to use the SDK, the command-line interface, or the Terraform integration to work with the Databricks Lakehouse. The session also covers using shell scripts to automate complex tasks and streamline operations in ways that improve scalability.

Talk by: Serge Smertin

At Rivian, we have automated more than 95% of our Databricks resource provisioning workflows using an in-house Terraform module, affording us a lean admin team to manage over 750 users. In this session, we will cover the following elements of our approach and how others can benefit from improved team efficiency.

- User and service principal management

- Our permission model on Unity Catalog for data governance

- Workspace and secrets resource management

- Managing internal package dependencies using init scripts

- Facilitating dashboards, SQL queries and their associated permissions

- Scaling source-of-truth, petabyte-scale Delta Lake table ingestion jobs and workflows

Talk by: Jason Shiverick and Vadivel Selvaraj

In this session, we will introduce Databricks Asset Bundles, demonstrate how they work for a variety of data products, and show how to fit them into an overall CI/CD strategy for the well-architected Lakehouse.

Data teams produce a variety of assets: datasets, reports and dashboards, ML models, and business applications. These assets depend upon code (notebooks, repos, queries, pipelines), infrastructure (clusters, SQL warehouses, serverless endpoints), and supporting services/resources like Unity Catalog, Databricks Workflows, and DBSQL dashboards. Today, each organization must figure out a deployment strategy for the variety of data products it builds on Databricks, as there is no consistent way to describe the infrastructure and services associated with project code.

Databricks Asset Bundles is a new capability on Databricks that standardizes and unifies the deployment strategy for all data products developed on the platform. It allows developers to describe the infrastructure and resources of their project through a YAML configuration file, regardless of whether they are producing a report, dashboard, online ML model, or Delta Live Tables pipeline. Behind the scenes, these configuration files use Terraform to manage resources in a Databricks workspace, but knowledge of Terraform is not required to use Databricks Asset Bundles.

Talk by: Rafi Kurlansik and Pieter Noordhuis

Today, all major cloud service providers and third-party providers include Apache Airflow as a managed service offering in their portfolios. While these cloud-based solutions help with the undifferentiated heavy lifting of environment management, some data teams also want to operate self-managed Airflow instances to satisfy specific differentiated capabilities. In this session, we will talk about:

- Why you might need to run self-managed Airflow

- The available deployment options (with emphasis on Airflow on Kubernetes)

- How to deploy Airflow on Kubernetes using automation (Helm charts and Terraform)

- Developer experience (syncing DAGs using automation)

- Operator experience (observability)

- Owned responsibilities and tradeoffs

A thorough understanding of these topics will help you see the end-to-end picture of operating a highly available and scalable self-managed Airflow environment that meets your ever-growing workflow needs.

Summary

Data transformation is a key activity for all of the organizational roles that interact with data. Because of its importance and outsized impact on what is possible for downstream data consumers it is critical that everyone is able to collaborate seamlessly. SQLMesh was designed as a unifying tool that is simple to work with but powerful enough for large-scale transformations and complex projects. In this episode Toby Mao explains how it works, the importance of automatic column-level lineage tracking, and how you can start using it today.

Announcements

- Hello and welcome to the Data Engineering Podcast, the show about modern data management

- RudderStack helps you build a customer data platform on your warehouse or data lake. Instead of trapping data in a black box, they enable you to easily collect customer data from the entire stack and build an identity graph on your warehouse, giving you full visibility and control. Their SDKs make event streaming from any app or website easy, and their extensive library of integrations enable you to automatically send data to hundreds of downstream tools. Sign up free at dataengineeringpodcast.com/rudderstack

- Your host is Tobias Macey and today I'm interviewing Toby Mao about SQLMesh, an open source DataOps framework designed to scale data transformations with ease of collaboration and validation built in

Interview

- Introduction

- How did you get involved in the area of data management?

- Can you describe what SQLMesh is and the story behind it?

- DataOps is a term that has been co-opted and overloaded. What are the concepts that you are trying to convey with that term in the context of SQLMesh?

- What are the rough edges in existing toolchains/workflows that you are trying to address with SQLMesh?

- How do those rough edges impact the productivity and effectiveness of teams using those

- Can you describe how SQLMesh is implemented?

- How have the design and goals evolved since you first started working on it?

- What are the lessons that you have learned from dbt which have informed the design and functionality of SQLMesh?

- For teams who have already invested in dbt, what is the migration path from or integration with dbt?

- You have some built-in integration with/awareness of orchestrators (currently Airflow). What are the benefits of making the transformation tool aware of the orchestrator?

- What do you see as the potential benefits of integration with e.g. data-diff?

- What are the second-order benefits of using a tool such as SQLMesh that addresses the more mechanical aspects of managing transformation workflows and the associated dependency chains?

- What are the most interesting, innovative, or unexpected ways that you have seen SQLMesh used?

- What are the most interesting, unexpected, or challenging lessons that you have learned while working on SQLMesh?

- When is SQLMesh the wrong choice?

- What do you have planned for the future of SQLMesh?

Contact Info

- tobymao on GitHub

- @captaintobs on Twitter

- Website

Parting Question

From your perspective, what is the biggest gap in the tooling or technology for data management today?

Closing Announcements

- Thank you for listening! Don't forget to check out our other shows. Podcast.init covers the Python language, its community, and the innovative ways it is being used. The Machine Learning Podcast helps you go from idea to production with machine learning.

- Visit the site to subscribe to the show, sign up for the mailing list, and read the show notes.

- If you've learned something or tried out a project from the show then tell us about it! Email [email protected] with your story.

- To help other people find the show please leave a review on Apple Podcasts and tell your friends and co-workers

Links

- SQLMesh

- Tobiko Data

- SAS

- AirBnB Minerva

- SQLGlot

- Cron

- AST == Abstract Syntax Tree

- Pandas

- Terraform

- dbt (Podcast Episode)

- SQLFluff (Podcast.init Episode)

The intro and outro music is from The Hug by The Freak Fandango Orc

We talked about:

- Bart's background

- What is data governance?

- Data dictionaries and data lineage

- Data access management

- How to learn about data governance

- What skills are needed to do data governance effectively

- When an organization needs to start thinking about data governance

- Good data access management processes

- Data masking and the importance of automating data access

- DPO and CISO roles

- How data access management works with a data mesh approach

- Avoiding the role explosion problem

- The importance of data governance integration in DataOps

- Terraform as a stepping stone to data governance

- How Raito can help an organization with data governance

- Open-source data governance tools

Links:

- LinkedIn: https://www.linkedin.com/in/bartvandekerckhove/

- Twitter: https://twitter.com/Bart_H_VDK

- Github: https://github.com/raito-io

- Website: https://www.raito.io/

- Data Mesh Learning Slack: https://data-mesh-learning.slack.com/join/shared_invite/zt-1qs976pm9-ci7lU8CTmc4QD5y4uKYtAA#/shared-invite/email

- DataQG Website: https://dataqg.com/

- DataQG Slack: https://dataqgcommunitygroup.slack.com/join/shared_invite/zt-12n0333gg-iTZAjbOBeUyAwWr8I~2qfg#/shared-invite/email

- DMBOK (Data Management Book of Knowledge): https://www.dama.org/cpages/body-of-knowledge

- DMBOK Wheel describing the data governance activities: https://www.dama.org/cpages/dmbok-2-wheel-images

Free MLOps course: https://github.com/DataTalksClub/mlops-zoomcamp

Join DataTalks.Club: https://datatalks.club/slack.html

Our events: https://datatalks.club/events.html