Jeroen Janssens and Thijs Nieuwdorp join me to chat about all things Polars. We discuss the evolution of the Polars library, its advantages over pandas, and their journey of writing 'Python Polars: The Definitive Guide.'

talk-data.com

Topic

Polars

Delta Lake has proven to be an excellent storage format. Coupled with the Databricks platform, the storage format has shined as a component of a distributed system on the lakehouse. The pairing of Delta and Spark provides an excellent platform, but users often struggle to perform comparable work outside of the Spark ecosystem. Tools such as delta-rs, Polars, and DuckDB have brought access to users outside of Spark, but they are only building blocks of a larger system. In this 40-minute talk we will demonstrate how users can use data products on the Nextdata OS data mesh to interact with the Databricks platform to drive Delta Lake workflows. Additionally, we will show how users can build autonomous data products that interact with their Delta tables both inside and outside of the lakehouse platform. Attendees will learn how to integrate the Nextdata OS data mesh with the Databricks platform as both an external and integral component.

Suppose you want to write a data science tool to do feature engineering. Your experience may go like this:
- Expectation: you can focus on state-of-the-art techniques for feature engineering.
- Reality: you keep having to make your codebase more complex because a new dataframe library has come out and users are demanding support for it.

Or rather, it might have gone like that in the pre-Narwhals era. Because now, you can focus on solving the problems which your tool set out to do, and let Narwhals handle the subtle differences between different kinds of dataframe inputs!

Football analytics has rapidly evolved over the past five years, becoming a crucial part of professional and fan discourse. While much of the cutting-edge research remains hidden behind the fences of club training grounds, a growing ecosystem of open-source tools now enables anyone to develop advanced football analytics models.

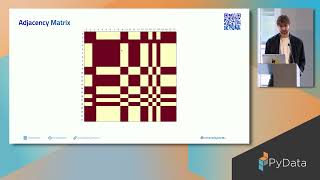

In this talk, I'll showcase key open-source libraries—Polars for high-performance data processing, Keras for deep learning, and Spektral for Graph Neural Networks (GNNs)—to analyze millions of player coordinates from publicly available high-frequency positional tracking data. I'll demonstrate how these tools can be used to build in-game prediction models and extract advanced football metrics that only the most advanced football clubs currently use.

Welcome to the cozy corner of the tech world where ones and zeros mingle with casual chit-chat. DataTopics Unplugged is your go-to spot for relaxed discussions on tech, news, data, and society. This week, we’re unpacking everything from AI-powered vacations (or the lack thereof) to corporate drama, and even a deep dive into the quirks of COBOL. Join Morillo, Bart, and Alex as they navigate the latest happenings in data and tech, including:
- Airbnb AI: The CEO of Airbnb thinks AI trip planning is still a pipe dream. Is he right?
- Anthropic’s next AI model: A new Claude model could be just weeks away, promising a hybrid of deep reasoning and speed.
- OpenAI’s roadmap: Sam Altman lays out vague but ambitious plans, blurring the lines between AI models.
- Elon vs. OpenAI: Musk offers $97B for OpenAI, Altman claps back. Just another day in AI power struggles.
- RIP Viktor Antonov: The legendary art lead behind Half-Life 2 and Dishonored passes away at 52.
- Project Sid AI agents: 1,000 AI agents left to their own devices in Minecraft… What could go wrong?
- DeepSeek R1 breaks speed records: The latest AI model boasts a staggering 198 tokens per second.
- Perplexity’s Deep Research is now free: A game-changer for AI-powered search? We discuss.
- COBOL and the mystery of 1875-05-20: Why do old systems default to weird dates?
- Polars Cloud: A new distributed architecture to run Polars anywhere.
- Pickle AI avatars: Deepfake yourself into meetings. Ethical? Useful? Just plain weird?
- Vim after Bram: How the legendary text editor is surviving after its creator’s passing.
- Working Fast and Slow: A take on productivity, deep focus, and why some days just don’t work.
- We were wrong about GPUs: Fly.io admits they misjudged the demand for GPU-powered workloads.

It’s time for another episode of the Data Engineering Central Podcast. In this episode, we cover …
* AWS Lambda + DuckDB and Delta Lake (Polars, Daft, etc.)
* IaC - Long Live Terraform
* Databricks Data Quality with DQX
* Unity Catalog releases for DuckDB and Polars
* Bespoke vs Managed Data Platforms
* Delta Lake vs. Iceberg and UniForm for a single table

Thanks for b…

This is a public episode. If you'd like to discuss this with other subscribers or get access to bonus episodes, visit dataengineeringcentral.substack.com/subscribe

DuckDB, an open source in-process database created for OLAP workloads, provides key advantages over more mainstream OLAP solutions: It's embeddable and optimized for analytics. It also integrates well with Python and is compatible with SQL, giving you the performance and flexibility of SQL right within your Python environment. This handy guide shows you how to get started with this versatile and powerful tool. Author Wei-Meng Lee takes developers and data professionals through DuckDB's primary features and functions, best practices, and practical examples of how you can use DuckDB for a variety of data analytics tasks. You'll also dive into specific topics, including how to import data into DuckDB, work with tables, perform exploratory data analysis, visualize data, perform spatial analysis, and use DuckDB with JSON files, Polars, and JupySQL.
- Understand the purpose of DuckDB and its main functions
- Conduct data analytics tasks using DuckDB
- Integrate DuckDB with pandas, Polars, and JupySQL
- Use DuckDB to query your data
- Perform spatial analytics using DuckDB's spatial extension
- Work with a diverse range of data including Parquet, CSV, and JSON

Welcome to DataFramed Industry Roundups! In this series of episodes, Adel & Richie sit down to discuss the latest and greatest in data & AI. In this episode, we touch upon AI agents for data work, whether the full-stack data scientist will make a return, old languages making a comeback, Python's increase in performance, what they're both thankful for, and much more.

Links Mentioned in the Show
- Fractal’s Data Science Agent: Arya
- Article: What Makes a True AI Agent? Rethinking the Pursuit of Autonomy
- Cassie Kozyrkov on DataFramed
- TIOBE Index for November 2024
- Community discussion on Fortran
- Tutorial: High Performance Data Manipulation in Python: pandas 2.0 vs. polars

New to DataCamp?
- Learn on the go using the DataCamp mobile app
- Empower your business with world-class data and AI skills with DataCamp for business

It’s time for another episode of the Data Engineering Central Podcast. In this episode we cover …
* Apache Airflow vs Databricks Workflows
* End-of-Year Engineering Planning for 2025
* 10 Billion Row Challenge with DuckDB vs Daft vs Polars
* Raw Data Ingestion

As usual, the full episode is available to paid subscribers, and a shortened version to you freeloaders out there. Don’t worry, I still love you though.

It’s time for another episode of Data Engineering Central Podcast, our third one! Topics in this episode …
* Should you use DuckDB or Polars?
* Small Engineering Changes (PR Reviews)
* Daft vs Spark on Databricks with Unity Catalog (Delta Lake)
* Primary and Foreign keys in the Lake House

Enjoy!

This book covers modern data engineering functions and important Python libraries, to help you develop state-of-the-art ML pipelines and integration code. The book begins by explaining data analytics and transformation, delving into the Pandas library, its capabilities, and nuances. It then explores emerging libraries such as Polars and CuDF, providing insights into GPU-based computing and cutting-edge data manipulation techniques. The text discusses the importance of data validation in engineering processes, introducing tools such as Great Expectations and Pandera to ensure data quality and reliability. The book delves into API design and development, with a specific focus on leveraging the power of FastAPI. It covers authentication, authorization, and real-world applications, enabling you to construct efficient and secure APIs using FastAPI. Also explored is concurrency in data engineering, examining Dask's capabilities from basic setup to crafting advanced machine learning pipelines. The book includes development and delivery of data engineering pipelines using leading cloud platforms such as AWS, Google Cloud, and Microsoft Azure. The concluding chapters concentrate on real-time and streaming data engineering pipelines, emphasizing Apache Kafka and workflow orchestration in data engineering. Workflow tools such as Airflow and Prefect are introduced to seamlessly manage and automate complex data workflows. What sets this book apart is its blend of theoretical knowledge and practical application, a structured path from basic to advanced concepts, and insights into using state-of-the-art tools. With this book, you gain access to cutting-edge techniques and insights that are reshaping the industry. This book is not just an educational tool. It is a career catalyst, and an investment in your future as a data engineering expert, poised to meet the challenges of today's data-driven world. 
What You Will Learn
- Elevate your data wrangling jobs by utilizing the power of both CPU and GPU computing, and learn to process data using Pandas 2.0, Polars, and CuDF at unprecedented speeds
- Design data validation pipelines, construct efficient data service APIs, develop real-time streaming pipelines, and master the art of workflow orchestration to streamline your engineering projects
- Leverage concurrent programming to develop machine learning pipelines and get hands-on experience in development and deployment of machine learning pipelines across AWS, GCP, and Azure

Who This Book Is For
Data analysts, data engineers, data scientists, machine learning engineers, and MLOps specialists

Welcome to the cozy corner of the tech world where ones and zeros mingle with casual chit-chat. Datatopics Unplugged is your go-to spot for relaxed discussions around tech, news, data, and society. We dive into conversations smoother than your morning coffee (but let’s be honest, just as caffeinated) where industry insights meet light-hearted banter. Whether you’re a data wizard or just curious about the digital chaos around us, kick back and get ready to talk shop, unplugged style! In this episode:
- Farewell Pandas, Hello Future: Pandas is out, and Ibis is in. We're talking faster, smarter data processing, featuring the rise of DuckDB and the powerhouse that is Polars. Is this the end of an era for Pandas?
- UV vs. Rye: Forget pip: are these new Python package managers built in Rust the future? We break down UV, Rye, and what it all means for your next Python project.
- AI-Generated Podcasts: Is AI about to take over your favorite podcasts? We explore the potential of Google’s NotebookLM to transform content into audio gold.
- When AI Steals Your Voice: Jeff Geerling’s voice gets cloned by AI, without his consent. We dive into the wild world of voice cloning, the ethics, and the future of AI-generated media.
- Hacking AI with Prompt Injection: Could you outsmart AI? We share some wild strategies from the game Gandalf that challenge your prompt injection skills and teach you how to jailbreak even the toughest guardrails.
- Jony Ive’s New Gadget Rumor: Is Jony Ive plotting an Apple killer? Rumors are swirling about a new AI-powered handheld device that could shake up the smartphone market.
- Zero-Downtime Deployments with Kamal Proxy: No more downtime! We geek out over Kamal Proxy, the sleek HTTP tool designed for effortless Docker deployments.
- Function Calling and LLMs: Get ready for the next evolution in AI: function calling. We discuss its rise in LLMs and dive into the Gorilla project, the leaderboard testing the future of smart APIs.

Polars is a dataframe library taking the world by storm. It is very efficient in both runtime and memory, and it comes with a clean and expressive API. Sometimes, however, the built-in API isn't enough. And that's where its killer feature comes in: plugins. You can extend Polars and solve practically any problem.

No prior Rust experience is required, but intermediate Python and programming experience is. By the end of the talk, you will know how to write your own Polars plugin! This talk is aimed at data practitioners.

Dive into the world of data analysis with the Polars Cookbook. This book, ideal for data professionals, covers practical recipes to manipulate, transform, and analyze data using the Python Polars library. You'll learn both the fundamentals and advanced techniques to build efficient and scalable data workflows.

What this Book will help me do
- Master the basics of Python Polars including installation and setup.
- Perform complex data manipulation like pivoting, grouping, and joining.
- Handle large-scale time series data for accurate analysis.
- Understand data integration with libraries like pandas and numpy.
- Optimize workflows for both on-premise and cloud environments.

Author(s)
Yuki Kakegawa is an experienced data analytics consultant who has collaborated with companies such as Microsoft and Stanford Health Care. His passion for data led him to create this detailed guide on Polars. His expertise ensures you gain real-world, actionable insights from every chapter.

Who is it for?
This book is perfect for data analysts, engineers, and scientists eager to enhance their efficiency with Python Polars. If you are familiar with Python and tools like pandas but are new to Polars, this book will upskill you. Whether handling big data or optimizing code for performance, the Polars Cookbook has the guidance you need to succeed.

Rust is a unique language whose traits make it very appealing for data engineering. In this session, we'll walk through the different aspects of the language that make it such a good fit for big data processing, including how it improves performance, how it provides greater safety guarantees, and how its compatibility with a wide range of existing tools positions it to become a major building block for the future of analytics.

We will also take a hands-on look through real code examples at a few emerging technologies built on top of Rust that utilize these capabilities, and learn how to apply them to our modern lakehouse architecture.

Talk by: Oz Katz

Here’s more to explore: Why the Data Lakehouse Is Your next Data Warehouse: https://dbricks.co/3Pt5unq Lakehouse Fundamentals Training: https://dbricks.co/44ancQs

Connect with us: Website: https://databricks.com Twitter: https://twitter.com/databricks LinkedIn: https://www.linkedin.com/company/databricks Instagram: https://www.instagram.com/databricksinc Facebook: https://www.facebook.com/databricksinc

In this talk, we will report on our experiences switching from pandas to Polars in a real-world ML project. Polars is a new high-performance dataframe library for Python based on Apache Arrow and written in Rust. We will compare the performance of Polars with the popular pandas library, and show how Polars can provide significant speed improvements for data manipulation and analysis tasks. We will also discuss the unique features of Polars, such as its ability to handle large datasets that do not fit into memory, and how it feels in practice to make the switch from pandas. This talk is aimed at data scientists, analysts, and anyone interested in fast and efficient data processing in Python.

Pandas is the de facto standard for data manipulation in Python, and I personally love it for its flexible syntax and interoperability. But pandas has well-known drawbacks such as memory inefficiency, inconsistent handling of missing data, and a lack of multicore support. Multiple open-source projects aim to solve those issues; the most interesting is Polars.

Polars uses Rust and Apache Arrow to win in all kinds of performance benchmarks, and it evolves fast. But is it already stable enough to migrate an existing pandas codebase? And does it meet the high expectations that long-time pandas lovers have for query language flexibility?

In this talk, I will explain how Polars can be that fast, and present my insights on where Polars shines and in which scenarios I stay with pandas (at least for now!).

This talk will introduce Polars, a blazingly fast DataFrame library written in Rust on top of Apache Arrow. It's a DataFrame library that brings exploratory data analysis closer to the lessons learned in database research.

Today's CPUs come with many cores, and their superscalar designs and SIMD registers allow for even more parallelism. Polars is written from the ground up to fully utilize the CPUs of this generation.

Besides blazingly fast algorithms, a cache-efficient memory layout, and multi-threading, it includes a lazy query engine, allowing Polars to do several optimizations that may improve query time and memory usage.

Read more:

https://github.com/pola-rs/polars
https://www.ritchievink.com/blog/2021/02/28/i-wrote-one-of-the-fastest-dataframe-libraries/

Join the talk to learn more.
