Summary

Gleb Mezhanskiy, CEO and co-founder of Datafold, joins Tobias Macey to discuss the challenges and innovations in data migrations. Gleb shares his experiences building and scaling data platforms at companies like Autodesk and Lyft, and how these experiences inspired the creation of Datafold to address data quality issues across teams. He outlines the complexities of data migrations, including common pitfalls such as technical debt and the importance of achieving parity between old and new systems. Gleb also discusses Datafold's use of AI and large language models (LLMs) to automate translation and reconciliation in data migrations, reducing the time and effort they require.

Announcements

- Hello and welcome to the Data Engineering Podcast, the show about modern data management
- Imagine catching data issues before they snowball into bigger problems. That’s what Datafold’s new Monitors do. With automatic monitoring for cross-database data diffs, schema changes, key metrics, and custom data tests, you can catch discrepancies and anomalies in real time, right at the source. Whether it’s maintaining data integrity or preventing costly mistakes, Datafold Monitors give you the visibility and control you need to keep your entire data stack running smoothly. Want to stop issues before they hit production? Learn more at dataengineeringpodcast.com/datafold today!
- Your host is Tobias Macey and today I'm welcoming back Gleb Mezhanskiy to talk about Datafold's experience bringing AI to bear on the problem of migrating your data stack

Interview

- Introduction
- How did you get involved in the area of data management?
- Can you describe what the Data Migration Agent is and the story behind it?
- What is the core problem that you are targeting with the agent?
- What are the biggest time sinks in the process of database and tooling migration that teams run into?
- Can you describe the architecture of your agent?
- What was your selection and evaluation process for the LLM that you are using?
- What were some of the main unknowns that you had to discover going into the project?
- What are some of the evolutions in the ecosystem that occurred either during the development process or since your initial launch that have caused you to second-guess elements of the design?
- In terms of SQL translation there are libraries such as SQLGlot and the work being done with SDF that aim to address that through AST parsing and subsequent dialect generation. What are the ways that approach is insufficient in the context of a platform migration?
- How does the approach you are taking with the combination of data-diffing and automated translation help build confidence in the migration target?
- What are the most interesting, innovative, or unexpected ways that you have seen the Data Migration Agent used?
- What are the most interesting, unexpected, or challenging lessons that you have learned while working on building an AI powered migration assistant?
- When is the data migration agent the wrong choice?
- What do you have planned for the future of applications of AI at Datafold?

Contact Info

- LinkedIn

Parting Question

- From your perspective, what is the biggest gap in the tooling or technology for data management today?

Closing Announcements

- Thank you for listening! Don't forget to check out our other shows. Podcast.init covers the Python language, its community, and the innovative ways it is being used. The AI Engineering Podcast is your guide to the fast-moving world of building AI systems.
- Visit the site to subscribe to the show, sign up for the mailing list, and read the show notes.
- If you've learned something or tried out a project from the show then tell us about it! Email [email protected] with your story.

Links

- Datafold
- Datafold Migration Agent
- Datafold data-diff
- Datafold Reconciliation Podcast Episode
- SQLGlot
- Lark parser
- Claude 3.5 Sonnet
- Looker (Podcast Episode)

The intro and outro music is from The Hug by The Freak Fandango Orchestra / CC BY-SA

talk-data.com

Topic

SQL

Structured Query Language (SQL)

1751 tagged

Today's analytics and data science job market seems to be as competitive as it's ever been. So it's more important than ever to know what employers are looking for and have a solid plan of attack in your job search. In this episode, Luke Barousse and Kelly Adams will walk us through their insights from the job market, talk about exactly what employers are looking for, and lay out an actionable plan for you to start building skills that will help you in your career. You'll leave this show with a deeper understanding of the job market, and a concrete roadmap you can use to take your data skills and career to the next level.

What You'll Learn:
- Insights from a deep analysis of the data science and analytics job market
- The skills employers are looking for, and why they matter
- A roadmap for building key data science and data analytics skills

Register for free to be part of the next live session: https://bit.ly/3XB3A8b

About our guests:

Luke Barousse is a data analyst, YouTuber, and engineer who helps data nerds be more productive.
- Follow Luke on LinkedIn
- Subscribe to Luke's YouTube Channel
- Luke's Python, SQL, and ChatGPT Courses

Kelly Adams is a data analyst, course creator, and writer.
- Kelly's Website
- Follow Kelly on LinkedIn
- Datanerd.Tech

Follow us on Socials: LinkedIn, YouTube, Instagram (Mavens of Data), Instagram (Maven Analytics), TikTok, Facebook, Medium, X/Twitter

SQL is one of the most widely used data analysis tools around, often discussed as a cornerstone for Data Analysis, Data Science, and Data Engineering careers. In this episode, Thais Cooke talks about how she leverages SQL in her role as a Data Analyst and shares practical tips you can use to take your SQL game to the next level. You'll leave the show with an insider's perspective on where SQL adds the most value, and where you should focus if you want to build SQL skills that will advance your career.

What You'll Learn:
- What makes SQL such a valuable skill set for so many roles
- Some of the most valuable ways you can use SQL on the job
- Where you can focus if you want to build job-ready SQL skills

Register for free to be part of the next live session: https://bit.ly/3XB3A8b

About our guest:

Thais Cooke is a Data Analyst proficient in Excel, SQL, and Python with a background in Clinical Healthcare.
- SQL for Healthcare Professionals Course
- Follow Thais on LinkedIn

Follow us on Socials: LinkedIn YouTube Instagram (Mavens of Data) Instagram (Maven Analytics) TikTok Facebook Medium X/Twitter

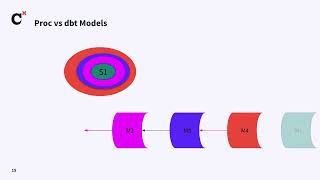

As organizations evolve, many still rely on legacy SQL queries and stored procedures that can become bottlenecks in scaling data infrastructure. In this talk, we will explore how to modernize these workflows by migrating legacy SQL and stored procedures into dbt models, enabling more efficient, scalable, and version-controlled data transformations. We’ll discuss practical strategies for refactoring complex logic, gaining the benefits of data lineage, data quality checks, and unit testing, and improving collaboration among teams. This session is ideal for data and analytics engineers, analysts, and anyone looking to optimize their ETL workflows using dbt.

Speaker: Bishal Gupta

Read the blog to learn about the latest dbt Cloud features announced at Coalesce, designed to help organizations embrace analytics best practices at scale https://www.getdbt.com/blog/coalesce-2024-product-announcements

In this session, Connor will dive into optimizing compute resources, accelerating query performance, and simplifying data transformations with dbt, covering in detail:
- SQL-based data transformation, and why it is gaining traction as the preferred language among data engineers
- Life cycle management for native objects like fact tables, dimension tables, primary indexes, aggregating indexes, join indexes, and others
- Declarative, version-controlled data modeling
- Auto-generated data lineage and documentation

Learn about incremental models, custom materializations, and column-level lineage. Discover practical examples and real-world use cases showing how Firebolt enables data engineers to manage complex tasks and optimize data operations while achieving high efficiency and low latency on their data warehouse workloads.

Speaker: Connor Carreras, Solutions Architect, Firebolt

Unlock the potential of serverless data transformations by integrating Amazon Athena with dbt (Data Build Tool). In this presentation, we'll explore how combining Athena's scalable, serverless query service with dbt's powerful SQL-based transformation capabilities simplifies data workflows and eliminates the need for infrastructure management. Discover how this integration addresses common challenges, such as managing large-scale data transformations and the need for agile analytics, enabling your organization to accelerate insights, reduce costs, and enhance decision-making.

Speakers: BP Yau, Partner Solutions Architect, AWS

Darshit Thakkar, Technical Product Manager, AWS

Read the blog to learn about the latest dbt Cloud features announced at Coalesce, designed to help organizations embrace analytics best practices at scale https://www.getdbt.com/blog/coalesce-2024-product-announcements

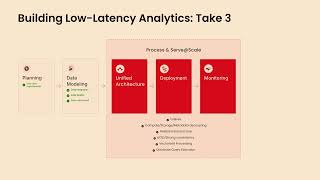

In the fast-paced world of mortgage lending, speed and accuracy are crucial. To support their underwriters, Vontive transformed written rules for loan eligibility from a Google Doc into SQL queries for evaluation in a Postgres database. However, while functional, this setup struggled to scale with business growth, resulting in slow, cumbersome processing times. Executing just a handful of loan eligibility rules could take up to 27 seconds, far too long for user-friendly interactions.

In this session, we’ll explore how Vontive reimagined its underwriting operations using Materialize. By offloading complex SQL queries from Postgres to Materialize, Vontive reduced eligibility check times from 27 seconds to under a second. This not only sped up decision-making but also removed limitations on the number of SQL-based underwriting rules, allowing underwriters to process more loans with greater accuracy and confidence. Additionally, this shift enabled the team to implement more automated checks throughout the underwriting process, catching errors earlier and further streamlining operations. The engineering effort was minimal, since dbt supports both cloud-based Postgres and Materialize.

Whether you're in financial services or any data-driven industry, this session offers valuable insights into leveraging fast-changing data for high-stakes decision-making with confidence.

Speakers: Steffen Hausmann, Field Engineer, Materialize

Wolf Rendall, Director of Data Products, Vontive

Read the blog to learn about the latest dbt Cloud features announced at Coalesce, designed to help organizations embrace analytics best practices at scale https://www.getdbt.com/blog/coalesce-2024-product-announcements

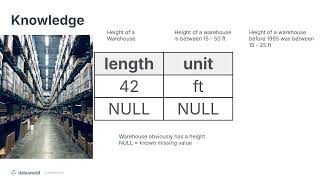

In this talk, we will make the case that the success of enterprise AI depends on an investment in semantics and knowledge, not just data. Our LLM Accuracy benchmark research provided evidence that layering semantic layers/knowledge graphs on enterprise SQL databases increases the accuracy of LLMs at least 4X for question answering. This work has been reproduced and validated by many others, including dbt Labs. It's fantastic that semantics and knowledge are getting the attention they deserve. We need more.

This talk is targeted at 1) those who believe AI accuracy can be improved by simply adding more data to fine-tune/train models, and 2) the believers in semantics and knowledge who need help getting executive buy-in.

We will dive into:
- the knowledge engineering work that needs to be done
- who should be leading this work (hint: analytics engineers)
- what companies lose by not doing this knowledge engineering work

Speaker: Juan Sequeda, Principal Scientist and Head of AI Lab, data.world

There is a lot of talk when it comes to how LLMs can best be deployed in the analytics space. What are implementation best practices? Are LLMs best suited with text-to-SQL or with a Semantic Layer underneath providing proper constraints?

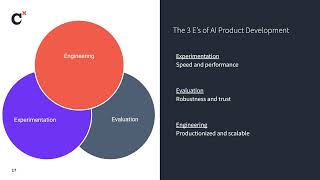

These are fun discussions to have, but LLMs also present a massive opportunity for companies beyond normal analytics work. The team at Newfront has been building external-facing, LLM-powered products for clients, using a cross-functional group that includes many data team members.

In this talk, they'll go over where data professionals fit best in building these sorts of products and how to start contributing directly to value generation for your business.

Speaker: Patrick Miller, Head of Data & AI, Newfront

Read the blog to learn about the latest dbt Cloud features announced at Coalesce, designed to help organizations embrace analytics best practices at scale https://www.getdbt.com/blog/coalesce-2024-product-announcements

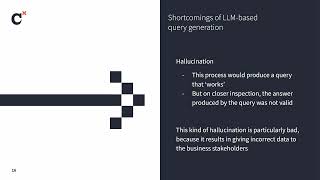

Have you ever wanted to build an AI chatbot to query your data platform? Transforming a business user's natural language question into a valid SQL query that answers their question has huge potential to unlock improved self-service analytics. However, even state-of-the-art LLMs are prone to hallucination, which makes it hard to generate SQL queries that are both conceptually and syntactically correct.

In this talk, you'll learn how the team at M1 Finance tackled this problem using MetricFlow’s rich semantic modeling along with LLM-based tool invocation. This approach enables them to reliably produce valid SQL queries from natural language questions, avoiding issues of hallucination or incorrect metrics.

Speakers: Kelly Wolinetz, Senior Data Engineer, M1 Finance

Brady Dauzat, Machine Learning Engineer, M1

This session will explore a few key metrics that can be used to steer your business in the right direction. Retention rate, lifetime value, and payback time are crucial for estimating growth, understanding user behavior, and determining marketing spend. Companies in turn rely on them to make informed decisions and drive the business forward, so it is important to get them right. While some rules may be specific to each company, this talk will present the work undertaken at Rebtel to calculate these metrics using SQL, and how you could implement similar models quickly.
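As a rough illustration of the three metrics named above, here is a minimal sketch in Python using the standard-library `sqlite3` module. It is not Rebtel's model: the `payments` table, its columns, the sample rows, and the `ACQUISITION_COST_PER_USER` value are all hypothetical, and lifetime value is simplified to the revenue observed per acquired user over the months in the sample.

```python
import sqlite3

# Hypothetical schema and sample data, invented for illustration only.
# "month" counts months since each user signed up (0 = signup month).
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE payments (user_id INTEGER, month INTEGER, amount REAL);
INSERT INTO payments VALUES
  (1, 0, 10.0), (1, 1, 10.0), (1, 2, 10.0),
  (2, 0, 10.0), (2, 1, 10.0),
  (3, 0, 10.0),
  (4, 0, 10.0), (4, 1, 10.0), (4, 2, 10.0);
""")

ACQUISITION_COST_PER_USER = 15.0  # assumed marketing spend per acquired user

# Size of the cohort: every user who paid in their signup month.
COHORT_SIZE = "(SELECT COUNT(DISTINCT user_id) FROM payments WHERE month = 0)"

# Retention rate: share of the cohort still paying in each month.
retention = conn.execute(f"""
    SELECT month, COUNT(DISTINCT user_id) * 1.0 / {COHORT_SIZE}
    FROM payments
    GROUP BY month
    ORDER BY month
""").fetchall()

# Revenue per acquired user in each month (churned users count as zero).
revenue_per_user = conn.execute(f"""
    SELECT month, SUM(amount) / {COHORT_SIZE}
    FROM payments
    GROUP BY month
    ORDER BY month
""").fetchall()

# Lifetime value (observed) is the running total of revenue per user;
# payback time is the first month where that total covers the acquisition cost.
ltv = 0.0
payback_month = None
for month, revenue in revenue_per_user:
    ltv += revenue
    if payback_month is None and ltv >= ACQUISITION_COST_PER_USER:
        payback_month = month

print("retention by month:", retention)
print("observed lifetime value per user:", ltv)
print("payback month:", payback_month)
```

In a warehouse setting the same two queries could be expressed as SQL models; the running total is done in Python here only to keep the sketch short.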

Speaker: Quentin Coviaux, Data Engineer, Rebtel

If you're a developer looking to build a distributed, resilient, scalable, high-performance application, you may be evaluating distributed SQL and NoSQL solutions. Perhaps you're considering the Aerospike database. This practical book shows developers, architects, and engineers how to get the highly scalable and extremely low-latency Aerospike database up and running. You will learn how to power your globally distributed applications and take advantage of Aerospike's hybrid memory architecture with the real-time performance of in-memory plus dependable persistence. After reading this book, you'll be able to build applications that can process up to tens of millions of transactions per second for millions of concurrent users on any scale of data.

This practical guide provides:
- Step-by-step instructions on installing and connecting to Aerospike
- A clear explanation of the programming models available
- All the advice you need to develop your Aerospike application
- Coverage of issues such as administration, connectors, consistency, and security
- Code examples and tutorials to get you up and running quickly
- And more

Businesses are collecting more data than ever before. But is bigger always better? Many companies are starting to question whether massive datasets and complex infrastructure are truly delivering results or just adding unnecessary costs and complications. How can you make sure your data strategy is aligned with your actual needs? What if focusing on smaller, more manageable datasets could improve your efficiency and save resources, all while delivering the same insights?

Ryan Boyd is the Co-Founder & VP, Marketing + DevRel at MotherDuck. Ryan started his career as a software engineer, but since has led DevRel teams for 15+ years at Google, Databricks and Neo4j, where he developed and executed numerous marketing and DevRel programs. Prior to MotherDuck, Ryan worked at Databricks and focussed the team on building an online community during the pandemic, helping to organize the content and experience for an online Data + AI Summit, establishing a regular cadence of video and blog content, launching the Databricks Beacons ambassador program, improving the time to an “aha” moment in the online trial and launching a University Alliance program to help professors teach the latest in data science, machine learning and data engineering.

In the episode, Richie and Ryan explore data growth and computation, the data 1%, the small data movement, data storage and usage, the shift to local and hybrid computing, modern data tools, the challenges of big data, transactional vs analytical databases, SQL language enhancements, simple and ergonomic data solutions and much more.

Links Mentioned in the Show:
- MotherDuck
- The Small Data Manifesto
- Connect with Ryan
- Small DataSF conference
- Related Episode: Effective Data Engineering with Liya Aizenberg, Director of Data Engineering at Away
- Rewatch sessions from RADAR: AI Edition

New to DataCamp? Learn on the go using the DataCamp mobile app

Empower your business with world-class data and AI skills with DataCamp for business

This book is your comprehensive guide to building robust Generative AI solutions using the Databricks Data Intelligence Platform. Databricks is the fastest-growing data platform offering unified analytics and AI capabilities within a single governance framework, enabling organizations to streamline their data processing workflows, from ingestion to visualization. Additionally, Databricks provides features to train a high-quality large language model (LLM), whether you are looking for Retrieval-Augmented Generation (RAG) or fine-tuning. Databricks offers a scalable and efficient solution for processing large volumes of both structured and unstructured data, facilitating advanced analytics, machine learning, and real-time processing. In today's GenAI world, Databricks plays a crucial role in empowering organizations to extract value from their data effectively, driving innovation and gaining a competitive edge in the digital age. This book will not only help you master the Data Intelligence Platform but also help power your enterprise to the next level with a bespoke LLM unique to your organization. Beginning with foundational principles, the book starts with a platform overview and explores features and best practices for ingestion, transformation, and storage with Delta Lake. Advanced topics include leveraging Databricks SQL for querying and visualizing large datasets, ensuring data governance and security with Unity Catalog, and deploying machine learning and LLMs using Databricks MLflow for GenAI. Through practical examples, insights, and best practices, this book equips solution architects and data engineers with the knowledge to design and implement scalable data solutions, making it an indispensable resource for modern enterprises. 
Whether you are new to Databricks and trying to learn a new platform, a seasoned practitioner building data pipelines, data science models, or GenAI applications, or even an executive who wants to communicate the value of Databricks to customers, this book is for you. With its extensive feature and best practice deep dives, it also serves as an excellent reference guide if you are preparing for Databricks certification exams.

What You Will Learn
- Foundational principles of Lakehouse architecture
- Key features including Unity Catalog, Databricks SQL (DBSQL), and Delta Live Tables
- Databricks Intelligence Platform and key functionalities
- Building and deploying GenAI Applications from data ingestion to model serving
- Databricks pricing, platform security, DBRX, and many more topics

Who This Book Is For

Solution architects, data engineers, data scientists, Databricks practitioners, and anyone who wants to deploy their Gen AI solutions with the Data Intelligence Platform. This is also a handbook for senior execs who need to communicate the value of Databricks to customers. People who are new to the Databricks Platform and want comprehensive insights will find the book accessible.

Access detailed content and examples on Azure SQL, a set of cloud services that allows for SQL Server to be deployed in the cloud. This book teaches the fundamentals of deployment, configuration, security, performance, and availability of Azure SQL from the perspective of these same tasks and capabilities in SQL Server. This distinct approach makes this book an ideal learning platform for readers familiar with SQL Server on-premises who want to migrate their skills toward providing cloud solutions to an enterprise market that is increasingly cloud-focused. If you know SQL Server, you will love this book. You will be able to take your existing knowledge of SQL Server and translate that knowledge into the world of cloud services from the Microsoft Azure platform, and in particular into Azure SQL. This book provides information never seen before about the history and architecture of Azure SQL. Author Bob Ward is a leading expert with access to and support from the Microsoft engineering team that built Azure SQL and related database cloud services. He presents powerful, behind-the-scenes insights into the workings of one of the most popular database cloud services in the industry. This book also brings you the latest innovations for Azure SQL including Azure Arc, Hyperscale, generative AI applications, Microsoft Copilots, and integration with the Microsoft Fabric. 
What You Will Learn
- Know the history of Azure SQL
- Deploy, configure, and connect to Azure SQL
- Choose the correct way to deploy SQL Server in Azure
- Migrate existing SQL Server instances to Azure SQL
- Monitor and tune Azure SQL’s performance to meet your needs
- Ensure your data and application are highly available
- Secure your data from attack and theft
- Learn the latest innovations for Azure SQL including Hyperscale
- Learn how to harness the power of AI for generative data-driven applications and Microsoft Copilots for assistance
- Learn how to integrate Azure SQL with the unified data platform, the Microsoft Fabric

Who This Book Is For

This book is designed to teach SQL Server in the Azure cloud to the SQL Server professional. Anyone who operates, manages, or develops applications for SQL Server will benefit from this book. Readers will be able to translate their current knowledge of SQL Server, especially of SQL Server 2019 and 2022, directly to Azure. This book is ideal for database professionals looking to remain relevant as their customer base moves into the cloud.

Summary

In this episode of the Data Engineering Podcast, Lukas Schulte, co-founder and CEO of SDF, explores the development and capabilities of this fast and expressive SQL transformation tool. From its origins as a solution for addressing data privacy, governance, and quality concerns in modern data management, to its unique features like static analysis and type correctness, Lukas dives into what sets SDF apart from other tools like dbt and SQLMesh. Tune in for insights on building a business around a developer tool, the importance of community and user experience in the data engineering ecosystem, and plans for future development, including supporting Python models and enhancing execution capabilities.

Announcements

- Hello and welcome to the Data Engineering Podcast, the show about modern data management
- Imagine catching data issues before they snowball into bigger problems. That’s what Datafold’s new Monitors do. With automatic monitoring for cross-database data diffs, schema changes, key metrics, and custom data tests, you can catch discrepancies and anomalies in real time, right at the source. Whether it’s maintaining data integrity or preventing costly mistakes, Datafold Monitors give you the visibility and control you need to keep your entire data stack running smoothly. Want to stop issues before they hit production? Learn more at dataengineeringpodcast.com/datafold today!
- Your host is Tobias Macey and today I'm interviewing Lukas Schulte about SDF, a fast and expressive SQL transformation tool that understands your schema

Interview

- Introduction
- How did you get involved in the area of data management?
- Can you describe what SDF is and the story behind it?
- What's the story behind the name?
- What problem are you solving with SDF?
- dbt has been the dominant player for SQL-based transformations for several years, with other notable competition in the form of SQLMesh. Can you give an overview of the Venn diagram for features and functionality across SDF, dbt and SQLMesh?
- Can you describe the design and implementation of SDF?
- How have the scope and goals of the project changed since you first started working on it?
- What does the development experience look like for a team working with SDF?
- How does that differ between the open and paid versions of the product?
- What are the features and functionality that SDF offers to address intra- and inter-team collaboration?
- One of the challenges for any second-mover technology with an established competitor is the adoption/migration path for teams who have already invested in the incumbent (dbt in this case). How are you addressing that barrier for SDF?
- Beyond the core migration path of the direct functionality of the incumbent product is the amount of tooling and communal knowledge that grows up around that product. How are you thinking about that aspect of the current landscape?
- What is your governing principle for what capabilities are in the open core and which go in the paid product?
- What are the most interesting, innovative, or unexpected ways that you have seen SDF used?
- What are the most interesting, unexpected, or challenging lessons that you have learned while working on SDF?
- When is SDF the wrong choice?
- What do you have planned for the future of SDF?

Contact Info

- LinkedIn

Parting Question

- From your perspective, what is the biggest gap in the tooling or technology for data management today?

Links

- SDF
- Semantic Data Warehouse
- asdf-vm
- dbt
- Software Linting
- SQLMesh (Podcast Episode)
- Coalesce (Podcast Episode)
- Apache Iceberg (Podcast Episode)
- DuckDB (Podcast Episode)
- SDF Classifiers
- dbt Semantic Layer
- dbt expectations
- Apache Datafusion
- Ibis

The intro and outro music is from The Hug by The Freak Fandango Orchestra / CC BY-SA

Dive into efficient data handling with 'In-Memory Analytics with Apache Arrow.' This book explores Apache Arrow, a powerful open-source project that revolutionizes how tabular and hierarchical data are processed. You'll learn to streamline data pipelines, accelerate analysis, and utilize high-performance tools for data exchange.

What this Book will help me do
- Understand and utilize the Apache Arrow in-memory data format for your data analysis needs.
- Implement efficient and high-speed data pipelines using Arrow subprojects like Flight SQL and Acero.
- Enhance integration and performance in analysis workflows by using tools like Parquet and Snowflake with Arrow.
- Master chaining and reusing computations across languages and environments with Arrow's cross-language support.
- Apply in real-world scenarios by integrating Apache Arrow with analytics systems like Dremio and DuckDB.

Author(s)

Matthew Topol, the author of this book, brings 15 years of technical expertise in the realm of data processing and analysis. Having worked across various environments and languages, Matthew offers insights into optimizing workflows using Apache Arrow. His approachable writing style ensures that complex topics are comprehensible.

Who is it for?

This book is tailored for developers, data engineers, and data scientists eager to enhance their analytic toolset. Whether you're a beginner or have experience in data analysis, you'll find the concepts actionable and transformative. If you are curious about improving the performance and capabilities of your analytic pipelines or tools, this book is for you.

Scaling machine learning at large organizations like Renault Group presents unique challenges in terms of scale, legal requirements, and diversity of use cases. Data scientists require streamlined workflows and automated processes to efficiently deploy models into production. We present an MLOps pipeline based on Python, Kubeflow, and the GCP Vertex AI API, designed specifically for this purpose. It enables data scientists to focus on code development for pre-processing, training, evaluation, and prediction. This MLOps pipeline is a cornerstone of the AI@Scale program, which aims to roll out AI across the Group.

We chose a Python-first approach, allowing data scientists to focus purely on writing preprocessing or ML-oriented Python code, while also allowing data retrieval through SQL queries. The pipeline addresses key questions such as prediction type (batch or API), model versioning, resource allocation, drift monitoring, and alert generation. It favors faster time to market with automated deployment and infrastructure management. Although we encountered pitfalls and design difficulties that we will discuss during the presentation, this pipeline integrates with a CI/CD process, ensuring efficient and automated model deployment and serving.

Finally, this MLOps solution empowers Renault data scientists to seamlessly translate innovative models into production, and smooths the development of scalable, impactful AI-driven solutions.

The analytics field has grown increasingly complex, leading to an 'analytics debt' that hinders strategic focus and burdens businesses. This talk will dissect analytics debt and its impact on scaling operations. We'll showcase how MicroStrategy AI can streamline analytics by reducing the need for extensive reporting through AI chat and simplified applications. Features like Auto Answers, Auto Dashboards, Auto SQL and Auto Expert not only speed up development but also democratize data expertise, making vast resources accessible to all. Discover how these innovations can redirect efforts towards business growth, improve margins, and empower stakeholders with smarter, user-friendly analytics tools. Join us to unlock your data's true potential and eliminate analytics debt.

Hands-on session exploring how to analyze time-series data and contextualize it with enterprise data.