talk-data.com

talk-data.com

Topic

AWS Lambda

45

tagged

Activity Trend

Top Events

We will share the case study of Airflow at StyleSeat, where within a year our data grew from 2 million data points per day to 200 million. Our original solution for orchestrating this data was not enough, so we migrated to an Airflow based solution. Previous implementation Our tasks were orchestrated with hourly triggers on AWS Cloudwatch rules in their own log groups. Each task was a lambda individually defined as a task and executed python code from a docker image. As complexity increased, there were frequent downtimes and manual executions for failed tasks and their downstream dependencies.With every downtime, our business stakeholders started losing trust in Data and recovery times were longer with each downtime. We needed a modern orchestration platform which would enable our team to define and instrument complex pipelines as code, provide visibility into executions and define retry criteria on failures. Airflow was identified as a crucial & critical piece in modernizing our orchestration which would help us further onboard DBT. We wanted a managed solution and a partner who could help guide us to a successful migration.

In this episode, Conor and Bryce record live from C++ On Sea 2023 and interview all the other C++ podcasts: TLB HIT, Two’s Complement and CppCast! Link to Episode 136 on WebsiteDiscuss this episode, leave a comment, or ask a question (on GitHub)Twitter ADSP: The PodcastConor HoekstraBryce Adelstein LelbachGuests Interviewed JF BastienMatt GodboltJonathan Müller (Briefly)Tristan Brindle (Briefly)Phil NashTimur DoumlerShow Notes Date Recorded: 2023-06-29 Date Released: 2023-06-30 C++ On Sea ConferenceC++ On Sea 2023 Keynote: Endnote: AI-Assisted Software Engineering - Bryce Adelstein LelbachC++ Club PodcastTLB HIT PodcastJust-in-Time Compilation - JF Bastien - CppCon 2020C++ On Sea 2023: (char)0 = 0; - JF BastienTranslation lookaside buffer (TLB)Photo of JF, Bryce and Conor on TwitterTwo’s Complement PodcastCroc: Legend of the GobbosCompiler ExplorerC++ On Sea 2023: Throwing Tools at Ranges - Tina UlbrichCircle CompilerC++ On Sea 2023: What’s New in Compiler Explorer? - Matt GodboltThink-Cell Is HiringC++ On Sea 2023: Iteration Revisited - Tristan BrindleCppCast PodcastC++ on Sea 2023: C++ and Safety - Timur DoumlerC++ on Sea 2023 Keynote: All the Safeties - Sean ParentC++ Lambda Idioms - Timur Doumler - CppNorth 2022Intro Song Info Miss You by Sarah Jansen https://soundcloud.com/sarahjansenmusic Creative Commons — Attribution 3.0 Unported — CC BY 3.0 Free Download / Stream: http://bit.ly/l-miss-you Music promoted by Audio Library https://youtu.be/iYYxnasvfx8

In this episode, Conor and Bryce record live from Austria while driving and chat about algorithms including scans, unique and more! Link to Episode 135 on WebsiteDiscuss this episode, leave a comment, or ask a question (on GitHub)Twitter ADSP: The PodcastConor HoekstraBryce Adelstein LelbachShow Notes Date Recorded: 2023-06-18 Date Released: 2023-06-23 Lambda Days 2023 WebsiteItalian C++KX Con 2023: Algorithms in q - Conor HoekstraSkyline Problem in Top 10scan in BQNdistinct in qdedup in Ruststd::unique in C++C++Now 2019: Conor Hoekstra “Algorithm Intuition”Rainwater Problem in Top 10C++20 std::views::filterC++20 std::views::takeC++20 std::views::dropIntro Song Info Miss You by Sarah Jansen https://soundcloud.com/sarahjansenmusic Creative Commons — Attribution 3.0 Unported — CC BY 3.0 Free Download / Stream: http://bit.ly/l-miss-you Music promoted by Audio Library https://youtu.be/iYYxnasvfx8

In this episode, Conor interviews speakers & organizers live from Lambda Days 2023! Link to Episode 134 on WebsiteDiscuss this episode, leave a comment, or ask a question (on GitHub)Twitter ADSP: The PodcastConor HoekstraBryce Adelstein LelbachGuests Interviewed Barbara TrojeckaBecca WilliamsJordan MillerSimon Peyton JonesShow Notes Date Recorded: 2023-06-06 Date Released: 2023-06-16 Lambda Days 2023 WebsiteLambda Days on TwitterLambda Days on LinkedInLambda Days on FacebookErlang LanguageElixir LanguageScala LanguageFunctional Females on LinkedInBecca Williams on LinkedInBecca Williams on TwitterOlivia Smith on LinkedInOlivia Smith on TwitterLost in Lambdauhhs PodcastJordan Miller - Cognicast Episode 165defn PodcastClojure CampClojure for the Brave and TrueClojure Languagewww.plrank.comThreading Macros in ClojureLambda Days 2023: Opening Keynote - Beyond functional programming - Simon Peyton Jones & Tim SweeneyVerse Language“Shaping our children’s education in computing” by Simon Peyton JonesBeyond Functional Programming: The Verse Programming Language (Simon Peyton Jones)Lambda Days 2023: Bringing LAMBDA to Excel - Jack WilliamsLAMBDA in ExcelKeynote: Excel meets Lambda - Andy Gordon, Simon Peyton Jones | Lambda Days 2021Download Excel LabsSimon Peyton Jones Home PageRemora LanguageHaskell orthotope Library

In this episode, Conor interviews speakers & attendees live from Lambda Days 2023! Link to Episode 133 on WebsiteDiscuss this episode, leave a comment, or ask a question (on GitHub)Twitter ADSP: The PodcastConor HoekstraBryce Adelstein LelbachGuests Interviewed Toby PfiefferKim HuizingAlexis KingJosé ValimAndor PénzesPeer StritzingerShow Notes Date Recorded: 2023-06-06 Date Released: 2023-06-09 Lambda Days 2023Elixir LanguageElixir Benchee Benchmarking LibraryRuby Berlin User Group MeetingLambda Days 2023: Stories In Open Soure - Toby PfeifferLambda Days 2018 - Tobias Pfeiffer - Stop Guessing and Start Measuring - Benchmarking in PracticeDevon Estes - Digging through the garbage - Code BEAM Lite Berlin 18Scala LanguageAWK LanguageAlexis King’s BlogLambda Days 2023: Delimited Continuations Demystified - Alexis KingZuriHac 2023: Delimited Continuations Demystified - Alexis KingRacket LanguageSlideshow LanguageLambda Days 2023: Meta-programmable functional notebooks with Livebook - José ValimThinking Elixir PodcastLambda Days 2021: Introducing Nx - José ValimElixir NxElixir LiveBookElixir PhoenixElixir MembraneKeynote: Celebrating the 10 Years of Elixir | José Valim | ElixirConf EU 2022Elixir ForumSponsor: Evolution EngineeringLambda Days 2023: Examples of easy dependently typed programming (in Idris) - Andor PénzesType Driven Development by Edwin BradyFunctional FemalesGRiSPErlang LanguageWhatsApp acquired for 19BGRiSP on TwitterIntro Song Info Miss You by Sarah Jansen https://soundcloud.com/sarahjansenmusic Creative Commons — Attribution 3.0 Unported — CC BY 3.0 Free Download / Stream: http://bit.ly/l-miss-you

ABOUT THE TALK: Hybridization approaches such as Lambda and Kappa architecture are powerful tools for combining the most useful characteristics of batch and real time systems. Implementation and management of these architectures is not for the faint of heart, but the last several years have seen a wave of SaaS platforms and managed services that deliver hybrid capabilities with a greatly reduced operational burden. This talk details the anticipated impact of these hybrid technologies on future data stacks, and touches on the mythical “one database to rule them all.”

ABOUT THE SPEAKER: Matt Housley holds a Ph.D. in mathematics and is co-author of the bestselling O’Reilly book Fundamentals of Data Engineering.

ABOUT DATA COUNCIL: Data Council (https://www.datacouncil.ai/) is a community and conference series that provides data professionals with the learning and networking opportunities they need to grow their careers.

Make sure to subscribe to our channel for the most up-to-date talks from technical professionals on data related topics including data infrastructure, data engineering, ML systems, analytics and AI from top startups and tech companies.

FOLLOW DATA COUNCIL: Twitter: https://twitter.com/DataCouncilAI LinkedIn: https://www.linkedin.com/company/datacouncil-ai/

Summary

This podcast started almost exactly six years ago, and the technology landscape was much different than it is now. In that time there have been a number of generational shifts in how data engineering is done. In this episode I reflect on some of the major themes and take a brief look forward at some of the upcoming changes.

Announcements

Hello and welcome to the Data Engineering Podcast, the show about modern data management Your host is Tobias Macey and today I'm reflecting on the major trends in data engineering over the past 6 years

Interview

Introduction 6 years of running the Data Engineering Podcast Around the first time that data engineering was discussed as a role

Followed on from hype about "data science"

Hadoop era Streaming Lambda and Kappa architectures

Not really referenced anymore

"Big Data" era of capture everything has shifted to focusing on data that presents value

Regulatory environment increases risk, better tools introduce more capability to understand what data is useful

Data catalogs

Amundsen and Alation

Orchestration engine

Oozie, etc. -> Airflow and Luigi -> Dagster, Prefect, Lyft, etc. Orchestration is now a part of most vertical tools

Cloud data warehouses Data lakes DataOps and MLOps Data quality to data observability Metadata for everything

Data catalog -> data discovery -> active metadata

Business intelligence

Read only reports to metric/semantic layers Embedded analytics and data APIs

Rise of ELT

dbt Corresponding introduction of reverse ETL

What are the most interesting, unexpected, or challenging lessons that you have learned while working on running the podcast? What do you have planned for the future of the podcast?

Parting Question

From your perspective, what is the biggest gap in the tooling or technology for data management today?

Closing Announcements

Thank you for listening! Don't forget to check out our other shows. Podcast.init covers the Python language, its community, and the innovative ways it is being used. The Machine Learning Podcast helps you go from idea to production with machine learning. Visit the site to subscribe to the show, sign up for the mailing list, and read the show notes. If you've learned something or tried out a project from the show then tell us about it! Email [email protected]) with your story. To help other people find the show please leave a review on Apple Podcasts and tell your friends and co-workers

The intro and outro music is from The Hug by The Freak Fandango Orchestra / CC BY-SA

Sponsored By:

Materialize:

Looking for the simplest way to get the freshest data possible to your teams? Because let's face it: if real-time were easy, everyone would be using it. Look no further than Materialize, the streaming database you already know how to use.

Materialize’s PostgreSQL-compatible interface lets users leverage the tools they already use, with unsurpassed simplicity enabled by full ANSI SQL support. Delivered as a single platform with the separation of storage and compute, strict-serializability, active replication, horizontal scalability and workload isolation — Materialize is now the fastest way to build products with streaming data, drastically reducing the time, expertise, cost and maintenance traditionally associated with implementation of real-time features.

Sign up now for early access to Materialize and get started with the power of streaming data with the same simplicity and low implementation cost as batch cloud data warehouses.

Go to materialize.comSupport Data Engineering Podcast

In this episode, Conor and Bryce talk to Barry Revzin about the pipeline operator |>, C++ Ranges and more! Link to Episode 113 on Website

Twitter ADSP: The PodcastConor HoekstraBryce Adelstein LelbachAbout the Guest Barry Revzin is a senior C++ developer at Jump Trading in Chicago, a research and technology driven trading firm. After programming for many years, he got really into the nuances and intricacies of C++ by being unreasonably active on StackOverflow (where he is the top contributor in C++14, C++17, and C++20). A lot of his C++ knowledge comes from just answering questions that he doesn’t know the answers to, especially when he answers them incorrectly at first.

His C++ involvement escalated when he started attending standards committee meetings in 2016, having written dozens of papers for C++20 and now C++23. You might know him from such features as , pack expansion in lambda init-capture, explicit(bool), conditionally trivial special member functions and, recently approved for C++23, deducing this.

Outside of the C++ world, Barry is an obsessive swimming fan. He writes fun data articles for SwimSwam and also does analysis for the DC Trident, a professional swim team featuring Olympic Gold Medalists Zach Apple and Anna Hopkin, managed by two-time Olympian Kaitlin Sandeno.

Show Notes

Date Recorded: 2023-01-15 Date Released: 2023-01-20 Iterators and Ranges: Comparing C++ to D to Rust - Barry Revzin - [CppNow 2021]Keynote: Iterators and Ranges: Comparing C++ to D, Rust, and Others - Barry Revzin - CPPP 2021Kona Photo of Barry and Michael SwimmingCppCast Episode 237: Packs and PipelinesP2011 A pipeline-rewrite operatorP2672 Exploring the Design Space for a Pipeline OperatorC++20/23 Ranges LibaryRanges-v3 LibraryBoost.Lambda LibraryBoost.Lambda2 LibraryTC39 Pipe Operator (|>) for JavaScriptIntro Song Info Miss You by Sarah Jansen https://soundcloud.com/sarahjansenmusic Creative Commons — Attribution 3.0 Unported — CC BY 3.0 Free Download / Stream: http://bit.ly/l-miss-you Music promoted by Audio Library https://youtu.be/iYYxnasvfx8

Streaming data pipelines can fail due to various reasons. Since the source data, such as Kafka topics, often have limited retention, prolonged job failures can lead to data loss. Thus, streaming jobs need to be backfillable at all times to prevent data loss in case of failures. One solution is to increase the source's retention so that backfilling is simply replaying source streams, but extending Kafka retention is very costly for Netflix's data sizes. Another solution is to utilize source data stored in DWH, commonly known as the Lambda architecture. However, this method introduces significant code duplication, as it requires engineers to maintain a separate equivalent batch job. At Netflix, we have created the Iceberg Source Connector to provide backfilling capabilities to Flink streaming applications. It allows Flink to stream data stored in Apache Iceberg while mirroring Kafka's ordering semantics, enabling us to backfill large-scale stateful Flink pipelines at low retention cost.

Connect with us: Website: https://databricks.com Facebook: https://www.facebook.com/databricksinc Twitter: https://twitter.com/databricks LinkedIn: https://www.linkedin.com/company/data... Instagram: https://www.instagram.com/databricksinc/

Huge volumes of data flow through a robust Kafka architecture, into several ETLs, receiving, transforming and storing the data. We clearly understood our ETLs’ workflow and our data architecture, from source to destination.

But how much did we know about the way our data makes though our systems? And what about the life long question, is it the end of the day yet?

In this talk I’m going to present to you the design process behind our Data Auditing system, Life Line. From tracking and producing, to analyzing and storing auditing information, using technologies such as Kafka, Avro, Spark, Lambda functions and complex SQL queries. We’re going to cover: * AVRO Audit header * Auditing heart beat - designing your metadata * Designing and optimizing your auditing table - what does this data look like anyway? * Creating an alert based monitoring system * Answering the most important question of all - is it the end of the day yet?

Connect with us: Website: https://databricks.com Facebook: https://www.facebook.com/databricksinc Twitter: https://twitter.com/databricks LinkedIn: https://www.linkedin.com/company/data... Instagram: https://www.instagram.com/databricksinc/

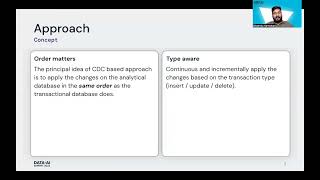

Many companies are trying to solve the challenges of ingesting transactional data in Data lake and dealing with late-arriving updates and deletes.

To address this at Swiggy, we have built CDC(Change Data Capture) system, an incremental processing framework to power all business-critical data pipelines at low latency and high efficiency.

It offers: Freshness: It operates in near real-time with configurable latency requirements. Performance: Optimized read and write performance with tuned compaction parameters and partitions and delta table optimization. Consistency: It supports reconciliation based on transaction types. Basically applying insert, update, and delete on existing data.

To implement this system, AWS DMS helped us with initial bootstrapping and CDC replication for Mysql sources. AWS Lambda and DynamoDB streams helped us to solve the bootstrapping and CDC replication for DynamoDB source.

After setting up the bootstrap and cdc replication process we have used Databricks delta merge to reconcile the data based on the transaction types.

To support the merge we have implemented supporting features - * Deduplicating multiple mutations of the same record using log offset and time stamp. * Adding optimal partition of the data set. * Infer schema and apply proper schema evolutions(Backward compatible schema) * We have extended the delta table snapshot generation technique to create a consistent partition for partitioned delta tables.

FInally to read the data we are using Spark sql with Hive metastore and Snowflake. Delta tables read with Spark sql have implicit support for hive metastore. We have built our own implementation of the snowflake sync process to create external, internal tables and materialized views on Snowflake.

Stats: 500m CDC logs/day 600+ tables

Connect with us: Website: https://databricks.com Facebook: https://www.facebook.com/databricksinc Twitter: https://twitter.com/databricks LinkedIn: https://www.linkedin.com/company/data... Instagram: https://www.instagram.com/databricksinc/

Summary Metadata is the lifeblood of your data platform, providing information about what is happening in your systems. A variety of platforms have been developed to capture and analyze that information to great effect, but they are inherently limited in their utility due to their nature as storage systems. In order to level up their value a new trend of active metadata is being implemented, allowing use cases like keeping BI reports up to date, auto-scaling your warehouses, and automated data governance. In this episode Prukalpa Sankar joins the show to talk about the work she and her team at Atlan are doing to push this capability into the mainstream.

Announcements

Hello and welcome to the Data Engineering Podcast, the show about modern data management When you’re ready to build your next pipeline, or want to test out the projects you hear about on the show, you’ll need somewhere to deploy it, so check out our friends at Linode. With their new managed database service you can launch a production ready MySQL, Postgres, or MongoDB cluster in minutes, with automated backups, 40 Gbps connections from your application hosts, and high throughput SSDs. Go to dataengineeringpodcast.com/linode today and get a $100 credit to launch a database, create a Kubernetes cluster, or take advantage of all of their other services. And don’t forget to thank them for their continued support of this show! RudderStack helps you build a customer data platform on your warehouse or data lake. Instead of trapping data in a black box, they enable you to easily collect customer data from the entire stack and build an identity graph on your warehouse, giving you full visibility and control. Their SDKs make event streaming from any app or website easy, and their state-of-the-art reverse ETL pipelines enable you to send enriched data to any cloud tool. Sign up free… or just get the free t-shirt for being a listener of the Data Engineering Podcast at dataengineeringpodcast.com/rudder. Data teams are increasingly under pressure to deliver. According to a recent survey by Ascend.io, 95% in fact reported being at or over capacity. With 72% of data experts reporting demands on their team going up faster than they can hire, it’s no surprise they are increasingly turning to automation. In fact, while only 3.5% report having current investments in automation, 85% of data teams plan on investing in automation in the next 12 months. 85%!!! That’s where our friends at Ascend.io come in. The Ascend Data Automation Cloud provides a unified platform for data ingestion, transformation, orchestration, and observability. Ascend users love its declarative pipelines, powerful SDK, elegant UI, and extensible plug-in architecture, as well as its support for Python, SQL, Scala, and Java. Ascend automates workloads on Snowflake, Databricks, BigQuery, and open source Spark, and can be deployed in AWS, Azure, or GCP. Go to dataengineeringpodcast.com/ascend and sign up for a free trial. If you’re a data engineering podcast listener, you get credits worth $5,000 when you become a customer. Today’s episode is Sponsored by Prophecy.io – the low-code data engineering platform for the cloud. Prophecy provides an easy-to-use visual interface to design & deploy data pipelines on Apache Spark & Apache Airflow. Now all the data users can use software engineering best practices – git, tests and continuous deployment with a simple to use visual designer. How does it work? – You visually design the pipelines, and Prophecy generates clean Spark code with tests on git; then you visually schedule these pipelines on Airflow. You can observe your pipelines with built in metadata search and column level lineage. Finally, if you have existing workflows in AbInitio, Informatica or other ETL formats that you want to move to the cloud, you can import them automatically into Prophecy making them run productively on Spark. Create your free account today at dataengineeringpodcast.com/prophecy. Your host is Tobias Macey and today I’m interviewing Prukalpa Sankar about how data platforms can benefit from the idea of "active metadata" and the work that she and her team at Atlan are doing to make it a reality

Interview

Introduction How did you get involved in the area of data management? Can you describe what "active metadata" is and how it differs from the current approaches to metadata systems? What are some of the use cases that "active metadata" can enable for data producers and consumers?

What are the points of friction that those users encounter in the current formulation of metadata systems?

Central metadata systems/data catalogs came about as a solution to the challenge of integrating every data tool with every other data tool, giving a single place to integrate. What are the lessons that are being learned from the "modern data stack" that can be applied to centralized metadata? Can you describe the approach that you are taking at Atlan to enable the adoption of "active metadata"?

What are the architectural capabilities that you had to build to power the outbound traffic flows?

How are you addressing the N x M integration problem for pushing metadata into the necessary contexts at Atlan?

What are the interfaces that are necessary for receiving systems to be able to make use of the metadata that is being delivered? How does the type/category of metadata impact the type of integration that is necessary?

What are some of the automation possibilities that metadata activation offers for data teams?

What are the cases where you still need a human in the loop?

What are the most interesting, innovative, or unexpected ways that you have seen active metadata capabilities used? What are the most interesting, unexpected, or challenging lessons that you have learned while working on activating metadata for your users? When is an active approach to metadata the wrong choice? What do you have planned for the future of Atlan and active metadata?

Contact Info

LinkedIn @prukalpa on Twitter

Parting Question

From your perspective, what is the biggest gap in the tooling or technology for data management today?

Closing Announcements

Thank you for listening! Don’t forget to check out our other shows. Podcast.init covers the Python language, its community, and the innovative ways it is being used. The Machine Learning Podcast helps you go from idea to production with machine learning. Visit the site to subscribe to the show, sign up for the mailing list, and read the show notes. If you’ve learned something or tried out a project from the show then tell us about it! Email [email protected]) with your story. To help other people find the show please leave a review on Apple Podcasts and tell your friends and co-workers

Links

Atlan What is Active Metadata? Segment

Podcast Episode

Zapier ArgoCD Kubernetes Wix AWS Lambda Modern Data Culture Blog Post

The intro and outro music is from The Hug by The Freak Fandango Orchestra / CC BY-SA

Support Data Engineering Podcast

Apply different enterprise integration and processing strategies available with Pulsar, Apache's multi-tenant, high-performance, cloud-native messaging and streaming platform. This book is a comprehensive guide that examines using Pulsar Java libraries to build distributed applications with message-driven architecture. You'll begin with an introduction to Apache Pulsar architecture. The first few chapters build a foundation of message-driven architecture. Next, you'll perform a setup of all the required Pulsar components. The book also covers work with Apache Pulsar client library to build producers and consumers for the discussed patterns. You'll then explore the transformation, filter, resiliency, and tracing capabilities available with Pulsar. Moving forward, the book will discuss best practices when building message schemas and demonstrate integration patterns using microservices. Security is an important aspect of any application;the book will cover authentication and authorization in Apache Pulsar such as Transport Layer Security (TLS), OAuth 2.0, and JSON Web Token (JWT). The final chapters will cover Apache Pulsar deployment in Kubernetes. You'll build microservices and serverless components such as AWS Lambda integrated with Apache Pulsar on Kubernetes. After completing the book, you'll be able to comfortably work with the large set of out-of-the-box integration options offered by Apache Pulsar. What You'll Learn Examine the important Apache Pulsar components Build applications using Apache Pulsar client libraries Use Apache Pulsar effectively with microservices Deploy Apache Pulsar to the cloud Who This Book Is For Cloud architects and software developers who build systems in the cloud-native technologies.

Summary One of the perennial challenges posed by data lakes is how to keep them up to date as new data is collected. With the improvements in streaming engines it is now possible to perform all of your data integration in near real time, but it can be challenging to understand the proper processing patterns to make that performant. In this episode Ori Rafael shares his experiences from Upsolver and building scalable stream processing for integrating and analyzing data, and what the tradeoffs are when coming from a batch oriented mindset.

Announcements

Hello and welcome to the Data Engineering Podcast, the show about modern data management When you’re ready to build your next pipeline, or want to test out the projects you hear about on the show, you’ll need somewhere to deploy it, so check out our friends at Linode. With their managed Kubernetes platform it’s now even easier to deploy and scale your workflows, or try out the latest Helm charts from tools like Pulsar and Pachyderm. With simple pricing, fast networking, object storage, and worldwide data centers, you’ve got everything you need to run a bulletproof data platform. Go to dataengineeringpodcast.com/linode today and get a $100 credit to try out a Kubernetes cluster of your own. And don’t forget to thank them for their continued support of this show! Atlan is a collaborative workspace for data-driven teams, like Github for engineering or Figma for design teams. By acting as a virtual hub for data assets ranging from tables and dashboards to SQL snippets & code, Atlan enables teams to create a single source of truth for all their data assets, and collaborate across the modern data stack through deep integrations with tools like Snowflake, Slack, Looker and more. Go to dataengineeringpodcast.com/atlan today and sign up for a free trial. If you’re a data engineering podcast listener, you get credits worth $3000 on an annual subscription Modern Data teams are dealing with a lot of complexity in their data pipelines and analytical code. Monitoring data quality, tracing incidents, and testing changes can be daunting and often takes hours to days. Datafold helps Data teams gain visibility and confidence in the quality of their analytical data through data profiling, column-level lineage and intelligent anomaly detection. Datafold also helps automate regression testing of ETL code with its Data Diff feature that instantly shows how a change in ETL or BI code affects the produced data, both on a statistical level and down to individual rows and values. Datafold integrates with all major data warehouses as well as frameworks such as Airflow & dbt and seamlessly plugs into CI workflows. Go to dataengineeringpodcast.com/datafold today to start a 30-day trial of Datafold. Your host is Tobias Macey and today I’m interviewing Ori Rafael about strategies for building stream and batch processing patterns for data lake analytics

Interview

Introduction How did you get involved in the area of data management? Can you start by giving an overview of the state of the market for data lakes today?

What are the prevailing architectural and technological patterns that are being used to manage these systems?

Batch and streaming systems have been used in various combinations since the early days of Hadoop. The Lambda architecture has largely been abandoned, so what is the answer for today’s data lakes? What are the challenges presented by streaming approaches to data transformations?

The batch model for processing is intuitive despite its latency problems. What are the benefits that it provides?

The core concept for data orchestration is the DAG. How does that manifest in a streaming context? In batch processing idempotent/immutable datasets are created by re-running the entire pipeline when logic changes need to be made. Given that there is no definitive start or end of a stream, what are the options for amending logical errors in transformations? What are some of the da

Summary Data lakes offer a great deal of flexibility and the potential for reduced cost for your analytics, but they also introduce a great deal of complexity. What used to be entirely managed by the database engine is now a composition of multiple systems that need to be properly configured to work in concert. In order to bring the DBA into the new era of data management the team at Upsolver added a SQL interface to their data lake platform. In this episode Upsolver CEO Ori Rafael and CTO Yoni Iny describe how they have grown their platform deliberately to allow for layering SQL on top of a robust foundation for creating and operating a data lake, how to bring more people on board to work with the data being collected, and the unique benefits that a data lake provides. This was an interesting look at the impact that the interface to your data can have on who is empowered to work with it.

Announcements

Hello and welcome to the Data Engineering Podcast, the show about modern data management What are the pieces of advice that you wish you had received early in your career of data engineering? If you hand a book to a new data engineer, what wisdom would you add to it? I’m working with O’Reilly on a project to collect the 97 things that every data engineer should know, and I need your help. Go to dataengineeringpodcast.com/97things to add your voice and share your hard-earned expertise. When you’re ready to build your next pipeline, or want to test out the projects you hear about on the show, you’ll need somewhere to deploy it, so check out our friends at Linode. With their managed Kubernetes platform it’s now even easier to deploy and scale your workflows, or try out the latest Helm charts from tools like Pulsar and Pachyderm. With simple pricing, fast networking, object storage, and worldwide data centers, you’ve got everything you need to run a bulletproof data platform. Go to dataengineeringpodcast.com/linode today and get a $60 credit to try out a Kubernetes cluster of your own. And don’t forget to thank them for their continued support of this show! You listen to this show because you love working with data and want to keep your skills up to date. Machine learning is finding its way into every aspect of the data landscape. Springboard has partnered with us to help you take the next step in your career by offering a scholarship to their Machine Learning Engineering career track program. In this online, project-based course every student is paired with a Machine Learning expert who provides unlimited 1:1 mentorship support throughout the program via video conferences. You’ll build up your portfolio of machine learning projects and gain hands-on experience in writing machine learning algorithms, deploying models into production, and managing the lifecycle of a deep learning prototype. Springboard offers a job guarantee, meaning that you don’t have to pay for the program until you get a job in the space. The Data Engineering Podcast is exclusively offering listeners 20 scholarships of $500 to eligible applicants. It only takes 10 minutes and there’s no obligation. Go to dataengineeringpodcast.com/springboard and apply today! Make sure to use the code AISPRINGBOARD when you enroll. Your host is Tobias Macey and today I’m interviewing Ori Rafael and Yoni Iny about building a data lake for the DBA at Upsolver

Interview

Introduction How did you get involved in the area of data management? Can you start by sharing your definition of what a data lake is and what it is comprised of? We talked last in November of 2018. How has the landscape of data lake technologies and adoption changed in that time?

How has Upsolver changed or evolved since we last spoke?

How has the evolution of the underlying technologies impacted your implementation and overall product strategy?

What are some of the common challenges that accompany a data lake implementation? How do those challenges influence the adoption or viability of a data lake? How does the introduction of a universal SQL layer change the staffing requirements for building and maintaining a data lake?

What are the advantages of a data lake over a data warehouse if everything is being managed via SQL anyway?

What are some of the underlying realities of the data systems that power the lake which will eventually need to be understood by the operators of the platform? How is the SQL layer in Upsolver implemented?

What are the most challenging or complex aspects of managing the underlying technologies to provide automated partitioning, indexing, etc.?

What are the main concepts that you need to educate your customers on? What are some of the pitfalls that users should be aware of? What features of your platform are often overlooked or underutilized which you think should be more widely adopted? What have you found to be the most interesting, unexpected, or challenging lessons learned while building the technical and business elements of Upsolver? What do you have planned for the future?

Contact Info

Ori

Yoni

yoniiny on GitHub LinkedIn

Parting Question

From your perspective, what is the biggest gap in the tooling or technology for data management today?

Links

Upsolver

Podcast Episode

DBA == Database Administrator IDF == Israel Defense Forces Data Lake Eventual Consistency Apache Spark Redshift Spectrum Azure Synapse Analytics SnowflakeDB

Podcast Episode

BigQuery Presto

Podcast Episode

Apache Kafka Cartesian Product kSQLDB

Podcast Episode

Eventador

Podcast Episode

Materialize

Podcast Episode

Common Table Expressions Lambda Architecture Kappa Architecture Apache Flink

Podcast Episode

Reinforcement Learning Cloudformation GDPR

The intro and outro music is from The Hug by The Freak Fandango Orchestra / CC BY-SA

Support Data Engineering Podcast

Applied Supervised Learning with R equips you with the essential knowledge and practical skills to leverage machine learning techniques for solving business problems using R. With this book, you'll gain hands-on experience in implementing various supervised learning models, assessing their performance, and selecting the best-suited method for your objectives. What this Book will help me do Gain expertise in identifying and framing business problems suitable for supervised learning. Acquire skills in data wrangling and visualization using R packages like dplyr and ggplot2. Master techniques for tuning hyperparameters to optimize machine learning models. Understand methods for feature selection and dimensionality reduction to enhance model performance. Learn how to deploy machine learning models to production environments, such as AWS Lambda. Author(s) Karthik Ramasubramanian and Jojo Moolayil are both seasoned data science practitioners and educators who bring a wealth of experience in machine learning and analytics. With a deep understanding of R and its applications in real-world scenarios, they offer practical insights and actionable examples to their readers. Their teaching style focuses on clarity and practical application. Who is it for? This book is ideal for data analysts, data scientists, and data engineers at a beginner to intermediate level who aim to master supervised machine learning with R. Readers should have basic knowledge of statistics, probabilities, and R programming. It is designed for those eager to apply machine learning techniques to real-world problems and improve their decision-making capabilities.

Summary

Building a data pipeline that is reliable and flexible is a difficult task, especially when you have a small team. Astronomer is a platform that lets you skip straight to processing your valuable business data. Ry Walker, the CEO of Astronomer, explains how the company got started, how the platform works, and their commitment to open source.

Preamble

Hello and welcome to the Data Engineering Podcast, the show about modern data infrastructure When you’re ready to launch your next project you’ll need somewhere to deploy it. Check out Linode at www.dataengineeringpodcast.com/linode?utm_source=rss&utm_medium=rss and get a $20 credit to try out their fast and reliable Linux virtual servers for running your data pipelines or trying out the tools you hear about on the show. Go to dataengineeringpodcast.com to subscribe to the show, sign up for the newsletter, read the show notes, and get in touch. You can help support the show by checking out the Patreon page which is linked from the site. To help other people find the show you can leave a review on iTunes, or Google Play Music, and tell your friends and co-workers This is your host Tobias Macey and today I’m interviewing Ry Walker, CEO of Astronomer, the platform for data engineering.

Interview

Introduction How did you first get involved in the area of data management? What is Astronomer and how did it get started? Regulatory challenges of processing other people’s data What does your data pipelining architecture look like? What are the most challenging aspects of building a general purpose data management environment? What are some of the most significant sources of technical debt in your platform? Can you share some of the failures that you have encountered while architecting or building your platform and company and how you overcame them? There are certain areas of the overall data engineering workflow that are well defined and have numerous tools to choose from. What are some of the unsolved problems in data management? What are some of the most interesting or unexpected uses of your platform that you are aware of?

Contact Information

Email @rywalker on Twitter

Links

Astronomer Kiss Metrics Segment Marketing tools chart Clickstream HIPAA FERPA PCI Mesos Mesos DC/OS Airflow SSIS Marathon Prometheus Grafana Terraform Kafka Spark ELK Stack React GraphQL PostGreSQL MongoDB Ceph Druid Aries Vault Adapter Pattern Docker Kinesis API Gateway Kong AWS Lambda Flink Redshift NOAA Informatica SnapLogic Meteor

The intro and outro music is from The Hug by The Freak Fandango Orchestra / CC BY-SA Support Data Engineering Podcast

"Data Lake for Enterprises" is a comprehensive guide to building data lakes using the Lambda Architecture. It introduces big data technologies like Hadoop, Spark, and Flume, showing how to use them effectively to manage and leverage enterprise-scale data. You'll gain the skills to design and implement data systems that handle complex data challenges. What this Book will help me do Master the use of Lambda Architecture to create scalable and effective data management systems. Understand and implement technologies like Hadoop, Spark, Kafka, and Flume in an enterprise data lake. Integrate batch and stream processing techniques using big data tools for comprehensive data analysis. Optimize data lakes for performance and reliability with practical insights and techniques. Implement real-world use cases of data lakes and machine learning for predictive data insights. Author(s) None Mishra, None John, and Pankaj Misra are recognized experts in big data systems with a strong background in designing and deploying data solutions. With a clear and methodical teaching style, they bring years of experience to this book, providing readers with the tools and knowledge required to excel in enterprise big data initiatives. Who is it for? This book is ideal for software developers, data architects, and IT professionals looking to integrate a data lake strategy into their enterprises. It caters to readers with a foundational understanding of Java and big data concepts, aiming to advance their practical knowledge of building scalable data systems. If you're eager to delve into cutting-edge technologies and transform enterprise data management, this book is for you.