Unifying storage for your data analytics workloads doesn’t have to be hard. See how Google Cloud Storage brings your data closer to compute and meets your applications where they are, all while achieving exabyte scale, strong consistency, and lower costs. You'll get new product announcements and see enterprise customers present real-world solutions using Cloud Storage with BigQuery, Hadoop, Spark, Kafka, and more.

Click the blue “Learn more” button above to tap into special offers designed to help you implement what you are learning at Google Cloud Next 25.

talk-data.com

Topic: Spark (Apache Spark), 581 tagged

Get a behind-the-scenes look at Walmart's data and AI platform. We'll dissect their use of BigQuery, Spark, and large language models to run complex multi-modal data pipelines. We'll dig into the choice of engines (SQL, PySpark) and technologies, along with the corresponding trade-offs. Gain insights you can apply to your own projects.

This session explores new features of Serverless Spark and its integration with BigQuery. Discover how to harness the strengths of BigQuery with generative AI and open-source tools for flexible, powerful data processing and AI model development. With Spark in BigQuery, you'll benefit from:

* Rapid Spark development in BigQuery's secure, scalable environment
* Flexible data processing: Choose local execution, Spark, or BigQuery
* Streamlined Spark workflows with BigQuery's workflow orchestration

Join this session to learn the latest innovations for BigQuery to support all data, be it structured or unstructured, across multiple and open data formats, and cross-clouds; all workloads, be they Cloud SQL, Spark, or Python; and built-in AI, to supercharge the work of data teams and unlock generative AI across new use cases. Learn how you can take advantage of BigQuery, a single, unified data platform that combines capabilities including data processing, streaming, and governance.

Big Data Europe, onsite and online, 22-25 November 2022. Learn more about the conference: https://bit.ly/3BlUk9q

Join our next Big Data Europe conference on 22-25 November 2022, where you can learn from global experts giving technical talks and hands-on workshops in the fields of Big Data, High Load, Data Science, Machine Learning and AI. This time, the conference will be held in a hybrid setting, allowing you to attend workshops and listen to expert talks on-site or online.

By Josef Habdank

Two Sigma will provide an overview of its research and AI/ML platform. The Google Kubernetes Engine-based platform integrates with popular frameworks like Ray, Spark, and Dask, allowing researchers to test investment strategies. This session will focus on the platform's architecture and capabilities and highlight a recent integration with Google Cloud's Dynamic Workload Scheduler and Kueue, which gives researchers on-demand access to A100 and H100 graphics processing units.

Learn how Dataproc can support your hybrid multicloud strategy and help you meet your business goals for your big data open source analytics workloads. Discover how LiveRamp achieved performance boosts and cost reductions by migrating to Dataproc. Learn their migration secrets, overcome common hurdles, and leverage Dataproc's hidden gems for a seamless transition.

Dive into the wonders of Microsoft Fabric, the ultimate solution for mastering data analytics in the AI era. Through engaging real-world examples and hands-on scenarios, this book will equip you with all the tools to design, build, and maintain analytics systems for various use cases like lakehouses, data warehouses, real-time analytics, and data science.

What this book will help me do:

* Understand and utilize the key components of Microsoft Fabric for modern analytics.
* Build scalable and efficient data analytics solutions with medallion architecture.
* Implement real-time analytics and machine learning models to derive actionable insights.
* Monitor and administer your analytics platform for high performance and security.
* Leverage the AI-powered assistant Copilot to boost analytics productivity.

Author(s): Arshad Ali and Schacht bring years of expertise in data analytics and system architecture to this book. Arshad is a seasoned professional specialized in AI-integrated analytics platforms, while Schacht has a proven track record in deploying enterprise data solutions. Together, they provide deep insights and practical knowledge with a structured and approachable teaching method.

Who is it for? Ideal for data professionals such as data analysts, engineers, scientists, and AI/ML experts aiming to enhance their data analytics skills and master Microsoft Fabric. It's also suited for students and new entrants to the field looking to establish a firm foundation in analytics systems. Requires a basic understanding of SQL and Spark.

Databricks started out as a platform for using Spark, a big data analytics engine, but it's grown a lot since then. Databricks now allows users to leverage their data and AI projects in the same place, ensuring ease of use and consistency across operations. The Databricks platform is converging on the idea of data intelligence, but what does this mean, how will it help data teams and organizations, and where does AI fit in the picture?

Ari is Databricks’ Head of Evangelism and "The Real Moneyball Guy" - the popular movie was partly based on his analytical innovations in Major League Baseball. He is a leading influencer in analytics, artificial intelligence, data science, and high-growth business innovation. Ari was previously the Global AI Evangelist at DataRobot, Nielsen’s regional VP of Analytics, Caltech Alumni of the Decade, President Emeritus of the worldwide Independent Oracle Users Group, on Intel’s AI Board of Advisors, Sports Illustrated Top Ten GM Candidate, an IBM Watson Celebrity Data Scientist, and on the Crain’s Chicago 40 Under 40. He's also written 5 books on analytics, databases, and baseball.

Robin is the Field CTO at Databricks. She has consulted with hundreds of organizations on data strategy, data culture, and building diverse data teams. Robin has had an eclectic career path in technical and business functions with more than two decades in tech companies, including Microsoft and Databricks. She also has achieved multiple academic accomplishments from her juris doctorate to a masters in law to engineering leadership. From her first technical role as an entry-level consumer support engineer to her current role in the C-Suite, Robin supports creating an inclusive workplace and is the current co-chair of Women in Data Safety Committee. She was also recognized in 2023 as a Top 20 Women in Data and Tech, as well as DataIQ 100 Most Influential People in Data.

In the episode, Richie, Ari, and Robin explore Databricks, the application of generative AI in improving services operations and providing data insights, data intelligence, and lakehouse technology, the wide-ranging applications of generative AI, how AI tools are changing data democratization, the challenges of data governance and management and how tools like Databricks can help, how jobs in data and AI are changing and much more.

About the AI and the Modern Data Stack DataFramed Series

This week we’re releasing 4 episodes focused on how AI is changing the modern data stack and the analytics profession at large. The modern data stack is often an ambiguous and all-encompassing term, so we intentionally wanted to cover the impact of AI on the modern data stack from different angles. Here’s what you can expect:

* Why the Future of AI in Data will be Weird with Benn Stancil, CTO at Mode & Field CTO at ThoughtSpot: covering how AI will change analytics workflows and tools
* How Databricks is Transforming Data Warehousing and AI with Ari Kaplan, Head Evangelist & Robin Sutara, Field CTO at Databricks: covering Databricks, data intelligence and how AI tools are changing data democratization
* Adding AI to the Data Warehouse with Sridhar Ramaswamy, CEO at Snowflake: covering Snowflake and its uses, how generative AI is changing the attitudes of leaders towards data, and how to improve your data management
* Accelerating AI Workflows with Nuri Cankaya, VP of AI Marketing & La Tiffaney Santucci, AI Marketing Director at Intel: covering AI’s impact on marketing analytics, how AI is being integrated into existing products, and the democratization of AI

Links Mentioned in the Show:

* Databricks
* Delta Lake
* https://mlflow.org/ ...

Data Engineering with Scala and Spark guides you through building robust data pipelines that process massive datasets efficiently. You will learn practical techniques leveraging Scala and Spark with a hands-on approach to mastering data engineering tasks including ingestion, transformation, and orchestration.

What this book will help me do:

* Set up a data pipeline development environment using Scala
* Utilize Spark APIs like DataFrame and Dataset for effective data processing
* Implement CI/CD and testing strategies for pipeline maintainability
* Optimize pipeline performance through tuning techniques
* Apply data profiling and quality enforcement using tools like Deequ

Author(s): Eric Tome, Rupam Bhattacharjee, and David Radford bring decades of combined experience in data engineering and distributed systems. Their work spans cutting-edge data processing solutions using Scala and Spark. They aim to help professionals excel in building reliable, scalable pipelines.

Who is it for? This book is tailored for working data engineers familiar with data workflow processes who desire to enhance their expertise in Scala and Spark. If you aspire to build scalable, high-performance data solutions or transition raw data into strategic assets, this book is ideal.

🎙️ Episode Special: Insights from RootsConf – The Data Dialogue Series, Part 2

Welcome to part two of our special RootsConf series, presented by Dataroots. This episode delves into more insightful conversations from the fifth annual RootsConf. Join us as we explore two engaging interviews, each shedding light on key aspects of AI, tech, and data evolution.

* 🤖 Rage Against the Machine with Chiel Mues: Delve into a socio-historical analysis of the AI industry. Chiel Mues takes us on a journey, linking the dots between the industrial revolution and today's tech era. Inspired by the book “Blood in the Machine”, this session will focus on intellectual property and workers’ rights within the AI realm.
* 📈 Modern BI Explored with Mathieu Sencie & Julien Dosogne: Join us as we unravel the intricacies of Modern BI. Mathieu and Julien dissect how these solutions outpace traditional BI by empowering users through self-service capabilities and data democratization. A futuristic look at the expectations and lessons learned from the past in Business Intelligence.

This episode promises to be a treasure trove of insights for anyone passionate about understanding the depths of technology and its societal impact. Whether you're a seasoned data professional or just dipping your toes into the digital waters, these discussions are sure to spark your curiosity and broaden your horizons.

Don't miss out on this compelling continuation of our RootsConf special!

Intro music courtesy of fesliyanstudios.com 🎵

Join Igor Khrol as he delves into the world of Big Data with Open Source Solutions at Automattic, a company rooted in the power of open source. 📊🌐 Discover their unique approach to maintaining a data ecosystem based on Hadoop, Spark, Trino, Airflow, Superset, and JupyterHub, all hosted on bare metal infrastructure, and gain insights on how it compares to cloud-based alternatives in 2023. 💡🚀 #BigData #opensource

✨ H I G H L I G H T S ✨

🙌 A huge shoutout to all the incredible participants who made Big Data Conference Europe 2023 in Vilnius, Lithuania, from November 21-24, an absolute triumph! 🎉 Your attendance and active participation were instrumental in making this event so special. 🌍

Don't forget to check out the session recordings from the conference to relive the valuable insights and knowledge shared! 📽️

Once again, THANK YOU for playing a pivotal role in the success of Big Data Conference Europe 2023. 🚀 See you next year for another unforgettable conference! 📅 #BigDataConference #SeeYouNextYear

Embark on a journey of Apache Spark application development with Pasha Finkelshteyn! 🚀 Explore the stages from concept to execution, delving into data exploration, transformation, and analysis powered by Spark's high-level APIs. 📊 Learn testing and validation approaches for accuracy and reliability, and empower yourself to create robust Spark applications for unlocking insights from massive datasets. 💡🔥 #ApacheSpark #BigData #DevelopmentJourney

This session focuses on the use of open-source connectors to enable real-time analytics in Microsoft Fabric. It covers connectors such as Apache Kafka, Apache Flink, Apache Spark, OpenTelemetry, and Logstash for ingesting and processing data in real time. Attendees will learn how to analyze data ingested via open-source connectors to generate insights.

Speakers: Akshay Dixit

Session Information: This video is one of many sessions delivered for the Microsoft Ignite 2023 event. View sessions on-demand and learn more about Microsoft Ignite at https://ignite.microsoft.com

OD46 | English (US) | Data

#MSIgnite

There are more data tools available than ever before, and it is easier to build a pipeline than it has ever been. These tools and advancements have created an explosion of innovation. As a result, data within today's organizations has become increasingly distributed and can't be contained within a single brain, a single team, or a single platform. Data lineage can help by tracing the relationships between datasets and providing a map of your entire data universe.

OpenLineage provides a standard for lineage collection that spans multiple platforms, including Apache Airflow, Apache Spark™, Flink®, and dbt. This empowers teams to diagnose and address widespread data quality and efficiency issues in real time. In this session, we will show how to trace data lineage across Apache Spark and Apache Airflow. There will be a walk-through of the OpenLineage architecture and a live demo of a running pipeline with real-time data lineage.
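To make the idea of lineage collection concrete, here is a minimal Python sketch, not taken from the session itself. It builds simplified run events whose field names (`eventType`, `eventTime`, `run`, `job`, `inputs`, `outputs`) follow the OpenLineage run event model, then derives dataset-to-dataset edges from them. The job names, dataset names, and the `demo` namespace are hypothetical, and the event is deliberately reduced: a real event carries further fields (such as `producer` and `schemaURL`) described in the specification.

```python
from datetime import datetime, timezone
import uuid


def run_event(event_type, job_name, inputs, outputs, namespace="demo"):
    """Build a simplified OpenLineage-style run event as a plain dict."""
    return {
        "eventType": event_type,  # e.g. "START" or "COMPLETE"
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "run": {"runId": str(uuid.uuid4())},
        "job": {"namespace": namespace, "name": job_name},
        "inputs": [{"namespace": namespace, "name": n} for n in inputs],
        "outputs": [{"namespace": namespace, "name": n} for n in outputs],
    }


def lineage_edges(events):
    """Derive (input dataset, job, output dataset) edges from completed runs."""
    edges = set()
    for ev in events:
        if ev["eventType"] != "COMPLETE":
            continue
        for src in ev["inputs"]:
            for dst in ev["outputs"]:
                edges.add((src["name"], ev["job"]["name"], dst["name"]))
    return sorted(edges)


def upstream(dataset, edges):
    """Return every dataset the given dataset depends on, transitively."""
    found, frontier = set(), [dataset]
    while frontier:
        current = frontier.pop()
        for src, _job, dst in edges:
            if dst == current and src not in found:
                found.add(src)
                frontier.append(src)
    return sorted(found)


# Hypothetical pipeline: an Airflow task feeds a Spark job.
events = [
    run_event("COMPLETE", "airflow.ingest_orders", ["raw.orders"], ["staging.orders"]),
    run_event("COMPLETE", "spark.aggregate_orders", ["staging.orders"], ["marts.daily_revenue"]),
]
edges = lineage_edges(events)
print(upstream("marts.daily_revenue", edges))
```

Because both jobs report against the same dataset names, the edges join up across platforms, which is the property a shared lineage standard is meant to provide.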

Talk by: Julien Le Dem, Willy Lulciuc

Here’s more to explore: Data, Analytics, and AI Governance: https://dbricks.co/44gu3YU

Connect with us: Website: https://databricks.com Twitter: https://twitter.com/databricks LinkedIn: https://www.linkedin.com/company/databricks Instagram: https://www.instagram.com/databricksinc Facebook: https://www.facebook.com/databricksinc

Databricks Workflows has come a long way since the early days of orchestrating simple notebooks and JAR/wheel files. Now we can orchestrate multi-task jobs, chain tasks into a DAG with lineage using fan-in, fan-out, and other patterns, and even run one Databricks job directly inside another.

Databricks Workflows takes its tagline, “orchestrate anything anywhere”, seriously: it is a fully managed, cloud-native orchestrator for diverse workloads such as Delta Live Tables, SQL, notebooks, JARs, Python wheels, dbt, Apache Spark™, and ML pipelines, with excellent monitoring, alerting and observability capabilities. In short, it is a one-stop product for all the orchestration needs of an efficient lakehouse. Better still, it lets you run your jobs in a cloud-agnostic way and is available across AWS, Azure and GCP.

In this session, we will discuss and deep dive on some of the very interesting features and will showcase end-to-end demos of the features which will allow you to take full advantage of Databricks workflows for orchestrating the lakehouse.
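The fan-out and fan-in patterns mentioned above can be sketched as a plain Python dictionary. This is an illustrative sketch rather than material from the talk: the job name, task keys, notebook paths, and `job_id` are hypothetical, and the field names (`task_key`, `depends_on`, `notebook_task`, `run_job_task`) follow the task format of the Databricks Jobs API as we understand it, so check the current API reference before relying on them. Nothing is sent to Databricks; the standard-library `graphlib` module is used only to show which tasks could run in parallel.

```python
from graphlib import TopologicalSorter

# Hypothetical multi-task job: one ingest task fans out to two cleaning
# tasks, which fan back in to a final task that triggers another job.
job_spec = {
    "name": "lakehouse_nightly",
    "tasks": [
        {"task_key": "ingest",
         "notebook_task": {"notebook_path": "/Jobs/ingest"}},
        {"task_key": "clean_orders",
         "depends_on": [{"task_key": "ingest"}],
         "notebook_task": {"notebook_path": "/Jobs/clean_orders"}},
        {"task_key": "clean_users",
         "depends_on": [{"task_key": "ingest"}],
         "notebook_task": {"notebook_path": "/Jobs/clean_users"}},
        {"task_key": "publish",
         "depends_on": [{"task_key": "clean_orders"},
                        {"task_key": "clean_users"}],
         "run_job_task": {"job_id": 123}},  # placeholder job ID
    ],
}


def task_graph(spec):
    """Map each task key to the set of task keys it depends on."""
    return {
        task["task_key"]: {dep["task_key"] for dep in task.get("depends_on", [])}
        for task in spec["tasks"]
    }


def execution_waves(spec):
    """Group tasks into waves; tasks in the same wave have no mutual dependency."""
    sorter = TopologicalSorter(task_graph(spec))
    sorter.prepare()  # raises graphlib.CycleError if the spec is not a DAG
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


print(execution_waves(job_spec))
```

Validating the dependency graph locally like this is one way to catch cycles or misspelled task keys before a job definition is submitted.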

Talk by: Prashanth Babu

Most have experienced the frustration and disappointment of a flight delay or cancelation due to aircraft issues. The Collins Aerospace business unit at Raytheon Technologies is committed to redefining aerospace by using data to deliver a more reliable, sustainable, efficient, and enjoyable aviation industry.

Ascentia is one example: the product helps airlines make smarter and more sustainable decisions by anticipating aircraft maintenance issues in advance, leading to more reliable flight schedules and fewer delays. Over the past five years, a variety of products from the Databricks technology suite have been employed to achieve this. Leveraging cloud infrastructure and harnessing the Databricks Lakehouse, Apache Spark™ development, and Databricks’ dynamic platform, Collins has been able to accelerate development and deployment of predictive health monitoring (PHM) analytics to generate Ascentia’s aircraft maintenance recommendations.

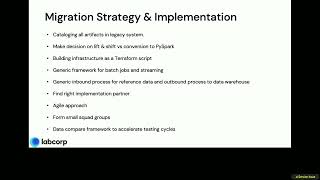

Join this session to learn about the Labcorp data platform transformation from on-premises Hadoop to AWS Databricks Lakehouse. We will share best practices and lessons learned from cloud-native data platform selection, implementation, and migration from Hadoop (within six months) with Unity Catalog.

We will share the steps taken to retire several legacy on-premises technologies and leverage Databricks native features like Spark streaming, workflows, job pools, cluster policies and Spark JDBC within the Databricks platform. We will also cover lessons learned in implementing Unity Catalog and building a security and governance model that scales across applications. Demos will walk you through the batch frameworks, streaming frameworks, and data comparison tools used across several applications to improve data quality and speed of delivery.

Discover how we have improved operational efficiency and resiliency, reduced TCO, and scaled the building of workspaces and associated cloud infrastructure using the Terraform provider.

Talk by: Mohan Kolli and Sreekanth Ratakonda

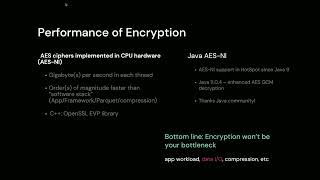

Sensitive data sets can be encrypted directly by new Apache Spark™ versions (3.2 and higher). Setting several configuration parameters and DataFrame options will trigger the Apache Parquet modular encryption mechanism that protects select columns with column-specific keys. The upcoming Spark 3.4 version will also support uniform encryption, where all DataFrame columns are encrypted with the same key.
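As a rough illustration of the configuration parameters and DataFrame options described above, the following Python sketch assembles them as plain dictionaries. The property names are the ones documented for Parquet modular encryption in the Spark and Parquet documentation; the key IDs, column names, and the KMS client class `com.example.MyKmsClient` are hypothetical placeholders, and the helper functions are ours, not part of any library.

```python
CRYPTO_FACTORY = "org.apache.parquet.crypto.keytools.PropertiesDrivenCryptoFactory"


def hadoop_encryption_conf(kms_client_class):
    """Session-level Hadoop properties that switch on Parquet modular encryption."""
    return {
        "parquet.crypto.factory.class": CRYPTO_FACTORY,
        "parquet.encryption.kms.client.class": kms_client_class,
    }


def column_encryption_options(column_keys, footer_key):
    """Per-write options mapping master key IDs to the columns they protect.

    The value format is "<key id>:<col>,<col>;<key id>:<col>".
    """
    parts = [f"{key_id}:{','.join(cols)}" for key_id, cols in column_keys.items()]
    return {
        "parquet.encryption.column.keys": ";".join(parts),
        "parquet.encryption.footer.key": footer_key,
    }


def uniform_encryption_options(key_id):
    """Per-write option for uniform encryption: one key for all columns."""
    return {"parquet.encryption.uniform.key": key_id}


conf = hadoop_encryption_conf("com.example.MyKmsClient")  # hypothetical class
opts = column_encryption_options(
    {"key_pii": ["ssn", "email"], "key_fin": ["salary"]},  # hypothetical keys/columns
    footer_key="key_footer",
)
print(opts["parquet.encryption.column.keys"])
```

In a PySpark job, the first dictionary would typically be supplied as Hadoop configuration (for example through `spark.hadoop.`-prefixed settings), and the second passed to the writer with something like `df.write.options(**opts).parquet(path)`. Treat that wiring as an assumption to verify against your Spark version.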

Spark data encryption is already leveraged by a number of companies to protect personal or business confidential data in their production environments. The main integration effort is focused on key access control and on building Spark/Parquet plug-in code that can interact with a company’s key management service (KMS).

In this session, we will briefly cover the basics of Spark/Parquet encryption usage, and dive into the details of encryption key management that will help in integrating this Spark data protection mechanism in your deployment. You will learn how to run a HelloWorld encryption sample, and how to extend it into real-world production code integrated with your organization’s KMS and access control policies. We will talk about the standard envelope encryption approach to big data protection, the performance-vs-security trade-offs between single and double envelope wrapping, and internal versus external key metadata storage. We will see a demo, and discuss new features such as uniform encryption and two-tier management of encryption keys.
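The difference between single and double envelope wrapping can be shown with a small, self-contained Python sketch. It is a structural illustration only and not the Parquet implementation: `toy_cipher` is a deliberately insecure XOR stand-in for a real cipher such as AES-GCM, and `ToyKms` and all key IDs are hypothetical. The point is the key hierarchy and the number of KMS round trips, which is where the performance side of the trade-off comes from.

```python
import hashlib
import secrets


def toy_cipher(key: bytes, data: bytes) -> bytes:
    """Toy XOR stream cipher (NOT secure); applying it twice restores the input."""
    stream = b""
    counter = 0
    while len(stream) < len(data):
        stream += hashlib.sha256(key + counter.to_bytes(4, "big")).digest()
        counter += 1
    return bytes(a ^ b for a, b in zip(data, stream))


class ToyKms:
    """Hypothetical in-memory KMS; master keys never leave this object."""

    def __init__(self):
        self._master_keys = {}
        self.calls = 0  # counts wrap/unwrap round trips

    def create_key(self, key_id):
        self._master_keys[key_id] = secrets.token_bytes(16)

    def wrap(self, key_id, key_bytes):
        self.calls += 1
        return toy_cipher(self._master_keys[key_id], key_bytes)

    def unwrap(self, key_id, wrapped):
        self.calls += 1
        return toy_cipher(self._master_keys[key_id], wrapped)


def encrypt_single(kms, master_key_id, plaintext):
    """Single wrapping: each data key (DEK) is wrapped directly by the KMS."""
    dek = secrets.token_bytes(16)
    return {"master_key_id": master_key_id,
            "wrapped_dek": kms.wrap(master_key_id, dek),
            "ciphertext": toy_cipher(dek, plaintext)}


def decrypt_single(kms, record):
    dek = kms.unwrap(record["master_key_id"], record["wrapped_dek"])
    return toy_cipher(dek, record["ciphertext"])


def encrypt_double(kms, master_key_id, plaintexts):
    """Double wrapping: the KMS wraps one key-encryption key (KEK);
    the DEKs are wrapped locally with that KEK."""
    kek = secrets.token_bytes(16)
    wrapped_kek = kms.wrap(master_key_id, kek)  # the only KMS call
    records = []
    for plaintext in plaintexts:
        dek = secrets.token_bytes(16)
        records.append({"wrapped_dek": toy_cipher(kek, dek),
                        "ciphertext": toy_cipher(dek, plaintext)})
    return {"master_key_id": master_key_id,
            "wrapped_kek": wrapped_kek,
            "records": records}


def decrypt_double(kms, bundle):
    kek = kms.unwrap(bundle["master_key_id"], bundle["wrapped_kek"])
    return [toy_cipher(toy_cipher(kek, r["wrapped_dek"]), r["ciphertext"])
            for r in bundle["records"]]


kms = ToyKms()
kms.create_key("master-1")
columns = [b"ssn values", b"email values", b"salary values"]

singles = [encrypt_single(kms, "master-1", c) for c in columns]
single_calls = kms.calls

kms.calls = 0
bundle = encrypt_double(kms, "master-1", columns)
double_calls = kms.calls

print(single_calls, double_calls)
```

In this sketch, double wrapping trades KMS round trips for an intermediate key that the client process holds in memory, which is one way to read the performance-vs-security trade-off named in the abstract.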

Talk by: Gidon Gershinsky
